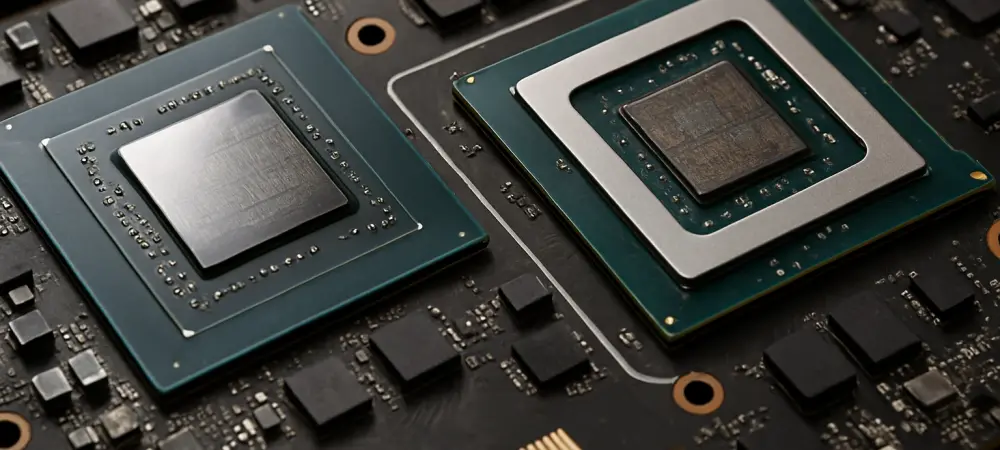

The battle for AI supremacy is often seen as a contest of silicon, yet the most formidable walls are built from code. This software fortress has secured NVIDIA’s dominance, but ZLUDA now promises a gateway. By enabling CUDA code to run on AMD hardware, this tool targets the foundation of the AI ecosystem, potentially reshaping the competitive landscape.

The Unseen Fortress: Is Software the Real Barrier in the AI Hardware Race?

NVIDIA’s leadership extends far beyond powerful GPUs. The company has cultivated a software ecosystem that has become the bedrock of AI development. This strategic focus has created an environment where developers default to NVIDIA hardware for its mature and extensive toolkit.

This deep integration has given rise to the “CUDA moat,” a powerful advantage that is difficult for rivals to breach. For competitors like AMD, producing powerful GPUs is only half the battle against years of accumulated code tied to a single vendor.

Understanding the CUDA Moat: Why One Company’s Code Rules the AI World

CUDA, or Compute Unified Device Architecture, is a complete parallel computing platform, not just a software library. It grants developers direct access to a GPU’s computational elements, allowing for optimization of complex algorithms essential for AI model training.

Its widespread adoption has cemented its status as the de facto industry standard. This entrenchment creates significant vendor lock-in, as switching hardware often requires a costly process of rewriting code, a barrier that protects NVIDIA’s market share.

Enter ZLUDA: A Key to Unlocking AMD Hardware for CUDA Applications

ZLUDA emerges as a pragmatic solution to this problem. It is not an emulator but a drop-in translation layer that intercepts CUDA API calls and transparently redirects them to AMD’s ROCm software stack.
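The idea of a drop-in translation layer can be illustrated with a toy sketch. The Python below is not ZLUDA's implementation and makes no claim about its internals. It only shows the general pattern of accepting CUDA-named calls and forwarding them to a differently named backend. The CUDA and HIP function names in the table are real runtime API entry points, but `FakeRocmBackend` and `TranslationLayer` are hypothetical classes invented for this example, and the backend merely records calls instead of touching a GPU.

```python
from typing import Dict, List, Tuple

# Illustrative mapping from CUDA runtime entry points to HIP counterparts.
# The names are real API functions, but this table is only a teaching aid
# and is an assumption about how one *could* structure such a layer.
CUDA_TO_HIP: Dict[str, str] = {
    "cudaMalloc": "hipMalloc",
    "cudaMemcpy": "hipMemcpy",
    "cudaFree": "hipFree",
    "cudaDeviceSynchronize": "hipDeviceSynchronize",
}


class FakeRocmBackend:
    """Hypothetical stand-in for the vendor stack; it only records calls."""

    def __init__(self) -> None:
        self.log: List[Tuple[str, tuple]] = []

    def call(self, name: str, *args) -> int:
        self.log.append((name, args))
        return 0  # 0 is the success status in both CUDA and HIP


class TranslationLayer:
    """Hypothetical shim: exposes CUDA-named calls, forwards them to a backend."""

    def __init__(self, backend: FakeRocmBackend) -> None:
        self._backend = backend

    def __getattr__(self, cuda_name: str):
        # Only reached for names not found normally, i.e. the CUDA-style calls.
        if cuda_name not in CUDA_TO_HIP:
            raise NotImplementedError(f"{cuda_name} is not translated in this sketch")
        hip_name = CUDA_TO_HIP[cuda_name]

        def forward(*args) -> int:
            return self._backend.call(hip_name, *args)

        return forward


# The application keeps calling CUDA names; the backend sees HIP names.
backend = FakeRocmBackend()
cuda = TranslationLayer(backend)
cuda.cudaMalloc(1024)
cuda.cudaDeviceSynchronize()
print(backend.log)
```

The application code never changes, which is the sense in which such a layer is "drop-in". A real layer also has to handle compiled GPU kernels, not just host-side API calls, which this sketch leaves out entirely.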

The project recently achieved support for ROCm 7, aligning it with AMD’s latest framework for modern AI workloads. ZLUDA began as a project targeting Intel GPUs, was later developed under contract for AMD, and was then revived as an independent, open-source initiative, highlighting persistent community demand for such a tool.

A Broader Rebellion: The Industry’s Push for a GPU-Agnostic Future

ZLUDA’s development is part of a larger industry movement away from single-vendor ecosystems. As AI becomes more deeply embedded in products and infrastructure, the risks of relying on a single provider are fueling a desire for greater flexibility.

This trend is evidenced by parallel efforts at large technology companies such as Microsoft, which is also developing translation layers. These initiatives share a common goal: to make software GPU-agnostic and foster a more competitive marketplace where hardware wins on merit.

Potential and Pitfalls: What ZLUDA’s Future Holds

Despite its promise, ZLUDA’s road ahead is challenging, with performance being the most critical hurdle. Any translation layer introduces overhead, and its impact on demanding AI tasks remains a key unknown. For mainstream adoption, it must demonstrate near-native performance.
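One way to make "near-native" concrete is to express the cost of translation as a relative overhead against a native baseline on the same hardware. The snippet below is a minimal sketch of that arithmetic. The function names, the 5% threshold, and the timings are all assumptions chosen for illustration and are not measurements of ZLUDA.

```python
def translation_overhead(native_seconds: float, translated_seconds: float) -> float:
    """Relative slowdown of the translated run versus the native baseline."""
    if native_seconds <= 0:
        raise ValueError("native_seconds must be positive")
    return (translated_seconds - native_seconds) / native_seconds


def is_near_native(native_seconds: float, translated_seconds: float,
                   threshold: float = 0.05) -> bool:
    """True when the overhead is within the chosen threshold (5% is an assumed cutoff)."""
    return translation_overhead(native_seconds, translated_seconds) <= threshold


# Made-up timings for illustration only: 100 s native, 104 s through a translation layer.
overhead = translation_overhead(100.0, 104.0)
print(f"overhead: {overhead:.1%}, near-native: {is_near_native(100.0, 104.0)}")
```

In practice the comparison is only meaningful when both runs use the same GPU, the same workload, and the same inputs, so that the difference isolates the translation layer itself.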

The project’s re-emergence marks a significant moment in the push for an open AI ecosystem. Its journey from a shelved experiment to a public tool highlights the demand for hardware interoperability. While its ultimate impact remains to be seen, its development underscores a fundamental industry shift toward dismantling proprietary software walls.