Nvidia CEO Jensen Huang has recently been grappling with growing concerns about the delayed arrival of the company’s next-generation Blackwell GPU architecture and about the return on investment (ROI) from AI spending. As Nvidia addresses these issues, it is worth examining how they affect its market position and future prospects.

Addressing Production Delays

Manufacturing Challenges and Reassurances

Nvidia promised that its next-generation Blackwell accelerators would ship in the second half of 2024. However, reports then emerged of delays caused by a manufacturing defect that required a mask change. The news caused significant anxiety among Nvidia’s customers and investors and weighed on the company’s share price. At the Goldman Sachs Tech Conference, CEO Jensen Huang sought to reassure stakeholders that Blackwell chips are in full production and slated to ship in the fourth quarter of 2024. Despite these assurances, doubts linger among customers and market observers, who worry about the potential long-term effects of the delays on Nvidia’s competitiveness.

Huang’s reassurances aim to ease the minds of customers and investors, yet the anxiety caused by slipping schedules could have lasting ramifications. Manufacturing defects are not trivial, and they can erode confidence in Nvidia’s ability to meet market demand on time. If such setbacks recur or become a pattern, the company could face not only economic consequences but also damage to its reputation for reliability. Nvidia must handle this situation carefully, resolving all technical issues promptly while communicating transparently with its stakeholders.

Impact on Customer Relationships

The delays have tested Nvidia’s customer relationships, creating frustration among those relying on the timely release of Blackwell GPUs for their product development timelines. This has prompted Nvidia to double down on communication efforts, ensuring customers are kept in the loop regarding production progress. Long-standing partners and clients have expressed concerns, and Nvidia’s sales teams are working hard to mitigate these anxieties. Transparency in addressing production setbacks and maintaining robust support mechanisms are critical to preserving customer trust and loyalty during this challenging period.

Despite these challenges, Nvidia recognizes the importance of maintaining and nurturing customer relationships. By engaging actively with clients and providing regular updates on production status, the company hopes to ease frustration and retain customer loyalty. Its approach involves not just assurances but concrete steps that demonstrate a commitment to meeting customer needs. Contingency measures, such as accelerated delivery schedules once production ramps up or extended support services, are being considered to preserve goodwill among its clients and ensure the delays do not cause lasting damage. Through these efforts, Nvidia aims to sustain not only its technological edge but also the trust customers place in its products.

Evaluating ROI from AI Investments

Performance Gains vs. Infrastructure Costs

Jensen Huang emphasized the substantial performance gains that GPU acceleration can offer, particularly in data processing frameworks such as Apache Spark. Spark, for example, can see up to a 20:1 speed-up when accelerated by GPUs. Even if infrastructure costs double, each job then costs roughly one tenth as much to run (twice the hourly cost for one twentieth of the runtime), which is how Huang justifies the high initial cost of Nvidia’s technology. Nvidia also claims that for every dollar service providers spend on its GPUs, they see a $5 return in generative AI applications. This claimed ROI underscores the value proposition of Nvidia’s AI infrastructure for its customers.
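The speed-up versus cost argument can be reduced to simple arithmetic. The sketch below is illustrative only: it assumes that cost per job equals infrastructure cost per hour multiplied by runtime, ignoring differences in power, software licensing and staffing, and the function names `relative_cost_per_job` and `simple_roi` are hypothetical. The 20:1 speed-up, the doubling of cost and the $5-per-$1 figure are taken from the text as inputs, not verified here.

```python
def relative_cost_per_job(speedup: float, cost_multiplier: float) -> float:
    """Cost of one job on accelerated hardware relative to the baseline.

    Assumption (hypothetical model): cost per job = hourly cost x runtime.
    A job that runs `speedup` times faster on hardware costing
    `cost_multiplier` times as much per hour therefore costs
    cost_multiplier / speedup times the baseline.
    """
    if speedup <= 0 or cost_multiplier <= 0:
        raise ValueError("speedup and cost_multiplier must be positive")
    return cost_multiplier / speedup


def simple_roi(revenue: float, spend: float) -> float:
    """Simple ROI: net gain divided by spend (1.0 means 100%)."""
    if spend <= 0:
        raise ValueError("spend must be positive")
    return (revenue - spend) / spend


if __name__ == "__main__":
    # Inputs quoted in the text: 20:1 Spark speed-up, infrastructure cost doubled.
    ratio = relative_cost_per_job(speedup=20.0, cost_multiplier=2.0)
    print(f"cost per job: {ratio:.2f}x baseline ({1 / ratio:.0f}x cheaper)")
    # prints "cost per job: 0.10x baseline (10x cheaper)"

    # Nvidia's claimed $5 back for every $1 spent.
    print(f"simple ROI: {simple_roi(revenue=5.0, spend=1.0):.0%}")
    # prints "simple ROI: 400%"
```

With these inputs the accelerated job costs one tenth of the baseline, and the claimed $5 return per $1 corresponds to a simple ROI of 400%. A smaller speed-up, for instance on a workload that does not parallelize well, would shrink or erase the saving.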

These ROI figures form an economic argument in favor of hefty upfront spending on Nvidia’s GPUs. While the infrastructure costs are high, the performance gains provide a substantial cushion of profitability and efficiency. Service providers can allocate resources more effectively, achieve faster processing times, and deliver better service overall, which ultimately justifies the investment. The long-term savings from lower operating costs and more efficient workflows also make a compelling case to Nvidia’s potential customers. Beyond raw performance, GPU acceleration offers strategic advantages in competitive industries where speed and accuracy are paramount.

Debates on Long-Term ROI

While the immediate ROI for service providers appears favorable, there remain questions about the long-term returns on applications and services built on AI infrastructure. Industry observers are particularly interested in the sustainability and practicality of dedicated AI accelerators, like GPUs, over time. As AI technology evolves, the current phase of brute-force computing might shift, leading to changes in demand dynamics. This uncertainty about the future landscape of AI investments fuels ongoing debate about the ultimate value and relevance of Nvidia’s specialized hardware.

The debate over the long-term viability of AI investments is not without merit. Market dynamics and technological advances often make today’s cutting-edge technology obsolete tomorrow. Heavy spending on AI accelerators, while promising significant short-term returns, must be analyzed critically for sustainability. Future AI models may not need the same level of brute-force computation, which could reduce the market’s reliance on GPU-heavy infrastructure. To stay ahead, Nvidia will need to keep innovating and adapting its products to the future needs of AI applications. For now, continued investment in and research into alternative AI computing paradigms will shape how this debate plays out.

The Technological Edge: Nvidia’s Innovations

Blackwell Architecture Advantages

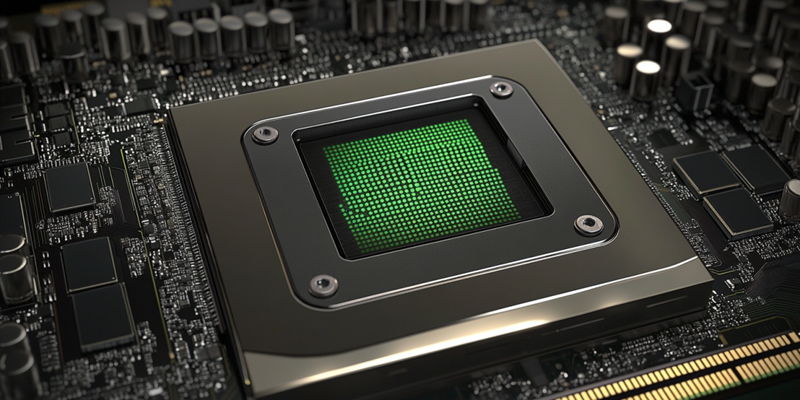

The new Blackwell architecture promises up to a 5x performance boost and more than twice the memory capacity and bandwidth of previous models such as the H100. These enhancements position Blackwell GPUs as a significant leap forward for AI and other compute-intensive workloads. Nvidia’s technological advances also extend to proprietary software such as DLSS (Deep Learning Super Sampling), which improves gaming performance by using AI to infer pixels rather than rendering every one, enabling higher frame rates. These innovations bolster Nvidia’s competitive edge and extend its influence across sectors including gaming and professional graphics.

This jump in performance and memory capacity positions Blackwell GPUs as a game-changer in computationally demanding fields. Higher data throughput and processing speed directly benefit applications ranging from scientific research and financial modeling to entertainment and digital design. Nvidia’s DLSS technology shows how these innovations translate into practice, delivering substantial rather than merely incremental improvements in user experience. Together, these advances aim to give Nvidia a broad edge over competitors and keep its products the preferred choice for industries that need high-performance computing.

Expanding Impact Across Sectors

Jensen Huang envisions AI’s transformative potential across diverse industries such as autonomous vehicles, robotics, and digital biology. Nvidia’s custom AI code assistants and generative AI applications stand to streamline complex processes, reducing the need for extensive manual coding. For example, in autonomous vehicles, Nvidia’s AI technology enables more sophisticated recognition and decision-making capabilities. Similarly, in digital biology, AI accelerators support complex simulations and analyses, advancing research in fields like genomics and drug discovery. This broad applicability reinforces Nvidia’s positioning as a pivotal player in the AI revolution.

The breadth of Nvidia’s AI innovations highlights the many ways its technology is applied. Autonomous vehicles benefit from improved perception systems, making advanced driver-assistance systems (ADAS) safer and more reliable. In robotics, Nvidia’s AI drives advances in learning capabilities and task performance, improving both productivity and precision. In healthcare and life sciences, the potential to accelerate genomic sequencing and drug discovery represents a significant step toward personalized medicine and new treatments. By continually extending the utility of its hardware and software, Nvidia strengthens its leadership across a broad range of industries and remains at the forefront of AI-driven technological advancement.

Optimizing Datacenter Design

SuperPODs and Efficiency

Nvidia’s commitment to efficient datacenter design lies at the heart of its strategy to drive down costs and optimize performance. The company’s modular cluster designs, known as SuperPODs, consolidate vast compute power into dense, compact systems. These SuperPODs can draw up to 120 kilowatts per rack, effectively replacing thousands of traditional server nodes. By adopting these high-density configurations, Nvidia addresses the inefficiencies of conventional datacenters, which rely heavily on air cooling even though air is a poor conductor of heat. Moving to smaller, denser datacenters with liquid cooling offers a more effective and efficient way to meet modern AI compute demands.
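To put the 120 kW figure in perspective, the sketch below counts how many racks a fixed IT power budget requires at that density compared with a conventional air-cooled rack. Only the 120 kW value comes from the text; the 15 kW air-cooled rack and the 1.2 MW budget are hypothetical placeholders, and `racks_needed` is an illustrative helper rather than any Nvidia sizing tool.

```python
import math

SUPERPOD_RACK_KW = 120.0    # per-rack figure quoted in the text
AIR_COOLED_RACK_KW = 15.0   # hypothetical conventional rack, for comparison only


def racks_needed(it_load_kw: float, rack_kw: float) -> int:
    """Minimum number of racks needed to host `it_load_kw` at `rack_kw` per rack."""
    if it_load_kw < 0 or rack_kw <= 0:
        raise ValueError("load must be non-negative and rack power positive")
    return math.ceil(it_load_kw / rack_kw)


if __name__ == "__main__":
    budget_kw = 1_200.0  # hypothetical 1.2 MW IT load
    dense = racks_needed(budget_kw, SUPERPOD_RACK_KW)
    sparse = racks_needed(budget_kw, AIR_COOLED_RACK_KW)
    print(f"{dense} dense racks vs {sparse} air-cooled racks")
    # prints "10 dense racks vs 80 air-cooled racks"
```

The comparison counts racks by power alone. It says nothing about how much compute each rack delivers, which is what the claim about replacing thousands of server nodes actually rests on.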

The shift towards modular, dense datacenter configurations exemplifies Nvidia’s forward-looking approach to computing infrastructure. Traditional server nodes, which take up significant space and depend on inefficient air cooling, are being replaced by SuperPODs to improve both performance and space utilization. With liquid-based cooling, Nvidia can keep component temperatures under tighter control, extending the longevity and reliability of its hardware. These advances reflect a clear awareness of current technological limits and a drive to overcome them, setting a new benchmark for datacenter design and efficiency.

The Future of Datacenter Architecture

Nvidia CEO Jensen Huang thus faces heightened concerns over both the delayed launch of the company’s next-generation Blackwell GPU architecture and the uncertain return on investment (ROI) from its artificial intelligence (AI) initiatives. The postponement is causing anxiety among stakeholders who are eager for the technological advances and market advantages the new GPUs promise. The delay could also give competitors room to catch up or even pull ahead, potentially weakening Nvidia’s leading position in the GPU market.

Meanwhile, Nvidia’s significant investments in AI, spanning both hardware and software development, are under scrutiny. Although AI promises high returns, the timeline for those returns is often unpredictable, and investors and analysts want tangible results to justify the capital outlay. This uncertainty adds to the pressure on Huang and his leadership team to demonstrate the real-world impact and financial benefits of their AI projects. As Nvidia works through these challenges, stakeholders will need to understand the implications for the company’s market position and future prospects.