I’m thrilled to sit down with Dominic Jainy, a seasoned IT professional whose deep expertise in artificial intelligence, machine learning, and blockchain has positioned him as a thought leader in cutting-edge technologies. Today, we’re diving into the exciting world of data center innovation, specifically focusing on the groundbreaking liquid cooling solutions recently unveiled by Schneider Electric and Motivair for AI-driven environments. In this conversation, Dominic shares insights on how these advancements address the intense demands of high-density computing, the evolution of cooling tech in the AI era, and the impact of strategic collaborations on performance and scalability.

How do the new liquid cooling solutions from Schneider Electric and Motivair cater to the unique challenges of AI data centers?

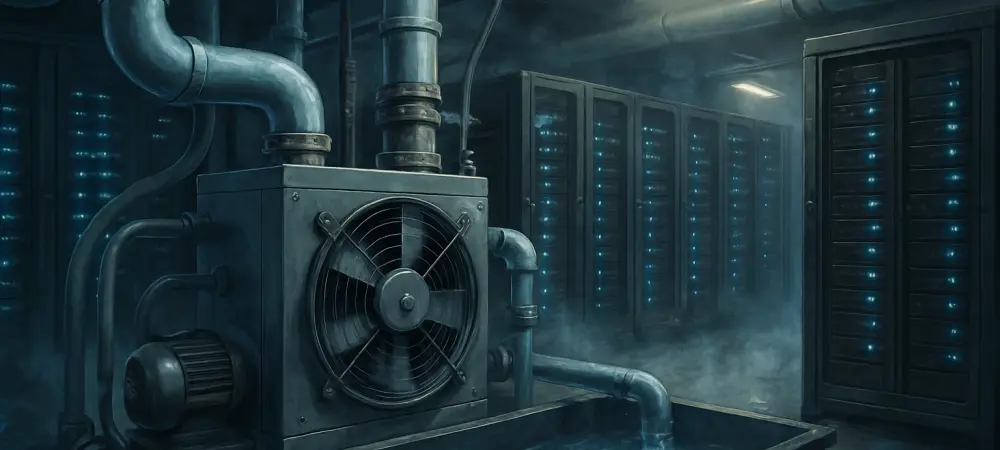

These solutions are a game-changer for AI data centers, where high-density workloads generate massive amounts of heat. The portfolio combines liquid- and air-cooled technologies to handle extreme processing demands while staying reliable under sustained load. What’s impressive is how the systems have been tailored to GPU-intensive tasks, which are at the heart of AI operations. The goal is to keep performance steady by preventing thermal throttling: when GPUs run too hot, they lower their clock speeds to protect themselves, and that can cripple efficiency in these environments.

What stands out about the coolant distribution unit (CDU) rated for up to 2.5MW of cooling capacity?

The 2.5MW capacity is a meaningful step up. For data center operators, it means a single unit can support larger clusters of high-performance computing hardware. Compared with earlier solutions, and even some competitors, it pushes the boundary of what’s possible, adding roughly 200kW of headroom over the previous 2.3MW cap. That directly affects scalability: operators can plan for large AI projects without outgrowing their cooling infrastructure too quickly.
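To put the step from the previous 2.3MW cap in numbers, here is the simple arithmetic on the two capacity figures mentioned in this answer. It is an illustration only, not a vendor-published comparison, and it says nothing about how the extra capacity translates into rack counts, which depends on per-rack density and overall system design:

$$
\Delta P = 2.5\,\text{MW} - 2.3\,\text{MW} = 0.2\,\text{MW} = 200\,\text{kW},
\qquad
\frac{\Delta P}{2.3\,\text{MW}} = \frac{0.2}{2.3} \approx 0.087 \;(\approx 8.7\%)
$$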

How has cooling technology had to evolve to keep pace with the demands of AI-driven workloads?

AI workloads have fundamentally changed the game. We’re talking about racks packed with GPUs that churn out unprecedented levels of heat, far beyond what traditional air cooling can handle. That has driven a shift toward liquid cooling, which is far more efficient at removing heat from dense setups because water can carry thousands of times more heat per unit volume than air. The challenge has been designing systems that not only cool effectively but also integrate cleanly with existing infrastructure while anticipating future needs. That’s where constant innovation in materials, design, and software integration comes in.

Can you elaborate on the collaboration with leading GPU manufacturers and how it shapes these cooling solutions?

Working directly with top GPU makers at the silicon level is a huge advantage. It means the cooling solutions are designed with intimate knowledge of the hardware they’re supporting. This isn’t just about slapping a cooling system onto a rack; it’s about optimizing heat transfer right where it’s generated. For customers, this translates to better performance, lower energy costs, and longer hardware lifespan because the cooling is so precisely tuned to the GPUs’ behavior.

Motivair’s technology powers six of the world’s top ten supercomputers. What does this tell us about the reliability of these solutions?

Being part of those top supercomputers speaks volumes about trust and performance under pressure. Supercomputers operate at the bleeding edge of technology, with zero tolerance for downtime or inefficiency. The fact that this technology is relied on in those environments shows it’s battle-tested under extreme conditions. For mainstream data center operators, that track record means they’re getting solutions proven in the most demanding scenarios, which should bring the same level of dependability to their own, typically less extreme, deployments.

Let’s dive into a specific product—the ChilledDoor rear door heat exchanger that cools up to 75kW per rack. What kind of environments benefit most from this?

The ChilledDoor is ideal for high-density setups where space is tight and heat output is through the roof: think AI training clusters or advanced research facilities. It’s built for the rack densities we’re seeing with modern AI hardware, where every inch of space is packed with power-hungry components. Because it cools at the rack level, removing heat from the exhaust air as it leaves the rack, it can be deployed without redesigning the whole facility’s cooling, which makes it a flexible fit for a variety of data center designs.

How does the integration with Schneider Electric’s broader ecosystem enhance the value of this liquid cooling portfolio?

Schneider Electric brings a massive ecosystem of software, hardware, and manufacturing capabilities to the table. That integration means the cooling solutions aren’t standalone products; they’re part of a cohesive system that can be monitored, optimized, and scaled using advanced management tools. For operators, this reduces complexity and speeds up deployment. It also improves ROI, because power and cooling are managed together rather than in separate silos, which cuts down on inefficiencies.

What’s your forecast for the future of liquid cooling in the context of AI and data center evolution?

I see liquid cooling moving from a niche solution to the standard for any serious data center handling AI workloads. As AI models grow more complex and hardware densities increase, air cooling alone will simply fall short. We’re likely to see more innovation in modular designs and hybrid systems that balance liquid and air for maximum efficiency. Sustainability will also play a bigger role: think cooling systems that reuse waste heat or integrate with renewable energy. The next five years will be about making liquid cooling more accessible, cost-effective, and environmentally friendly for data centers of all sizes.