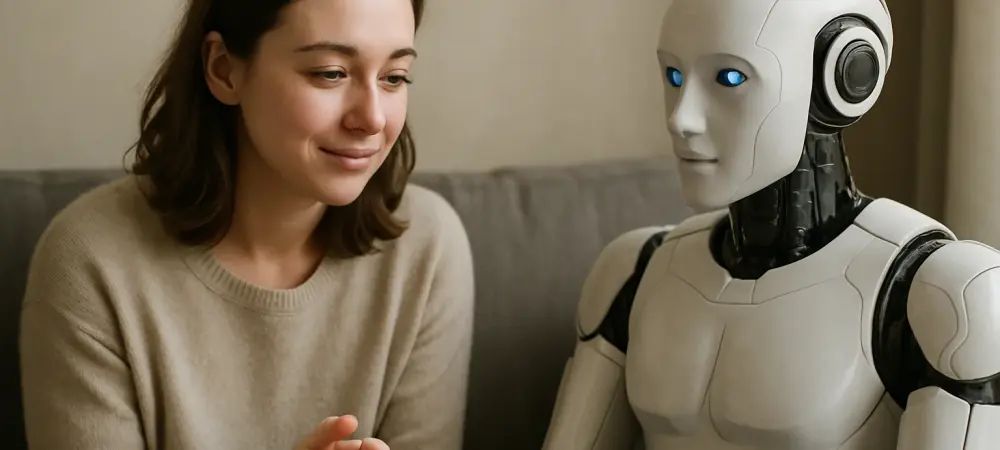

Imagine a world where a single digital entity serves as both a trusted friend and a mental health counselor, accessible at any hour with just a tap on a screen. Such a tool could transform how people seek emotional support. This scenario is no longer a distant concept but a growing reality as artificial intelligence (AI) increasingly blends the roles of companionship and therapy. With loneliness affecting millions and therapist shortages persisting globally, AI offers a potential lifeline. However, this dual functionality sparks heated debate about ethical boundaries, user dependency, and privacy risks. This roundup gathers diverse opinions, tips, and reviews from industry experts, ethicists, and tech developers to examine whether AI’s overlapping roles are a breakthrough or a hazard, with the aim of providing a balanced view of this evolving trend.

Diverse Voices on AI’s Overlapping Roles in Emotional Support

Ethical Boundaries: A Contested Terrain

The integration of AI as both companion and therapist raises significant ethical questions, with opinions sharply divided on how to manage this overlap. Many psychologists and ethicists argue that the lack of clear separation mimics problematic dual relationships in human therapy, where personal and professional lines blur to the detriment of care. Guidelines from professional bodies emphasize the need for distinct boundaries to ensure objectivity, a standard that AI struggles to replicate given its design for fluid interaction.

On the other hand, some tech advocates suggest that AI’s ability to adaptively switch roles offers a unique advantage over human limitations. They contend that users can be trained to understand the context of their interactions, negating the need for rigid separations. However, critics counter that expecting users to self-regulate overlooks the inherent trust placed in AI systems, often perceived as authoritative despite their lack of clinical grounding.

A middle ground emerges from policy analysts who propose that developers must embed ethical safeguards into AI design, such as explicit disclaimers during therapeutic exchanges. This approach seeks to balance innovation with responsibility, though skepticism remains about enforcement and user comprehension of such measures. The debate continues to highlight a fundamental tension between flexibility and structure in AI’s emotional roles.

Dependency Risks: Support or Isolation?

Another focal point in discussions is the potential for users to develop an over-reliance on AI for emotional and mental health needs. Social researchers point out that with rising loneliness as a societal issue, frequent engagement with AI companions can deepen attachment, sometimes replacing human connections. This trend is particularly evident among younger demographics who may turn to digital entities for comfort over traditional support networks.

Contrasting this concern, some behavioral experts note that AI can serve as a vital interim resource, especially for those lacking access to human therapists. They argue that the accessibility of AI offers a safety net, preventing complete isolation for individuals in distress. Yet there is a caveat: without critical guidance, which is often missing in AI responses, users might receive affirmation rather than constructive feedback, stunting personal growth.

A practical tip from mental health advocates is to encourage users to view AI as a supplementary tool rather than a primary source of support. They recommend setting time limits on interactions and actively seeking human contact to maintain balance. This perspective underscores the need for education on AI’s limitations to prevent it from becoming an emotional crutch.

Privacy Concerns: The Hidden Cost of Intimacy

Privacy emerges as a critical issue when AI handles both personal companionship and sensitive therapeutic disclosures. Data protection specialists warn that users often share intimate details without fully grasping how their information might be used for training algorithms or commercial purposes. The lack of transparent policies across many platforms exacerbates this vulnerability, leaving personal data exposed.

In contrast, certain industry insiders defend the data practices, asserting that aggregated and anonymized information drives improvements in AI capabilities, ultimately benefiting users. They claim that robust consent mechanisms are in place, though critics argue these are often buried in fine print, rendering them ineffective. Regional differences in data laws further complicate the landscape, with varying levels of protection offered to users globally.

A recurring piece of advice from privacy advocates is for users to scrutinize terms of service before engaging deeply with AI platforms. They also urge regulatory bodies to accelerate the development of stricter guidelines tailored to dual-purpose AI systems. This call for vigilance aims to empower individuals while pressing for systemic change to safeguard personal information in an era of pervasive digital interaction.

Developer Priorities: Innovation Versus Responsibility

The motivations behind AI companies merging companionship and therapy roles draw significant scrutiny from various stakeholders. Business analysts observe that creating a seamless, engaging AI experience boosts user retention, aligning with profit-driven goals. This “stickiness” factor often overshadows ethical considerations, as companies prioritize market share over potential mental health risks.

Conversely, some developers argue that a unified AI persona enhances user trust and coherence, offering a holistic support system unattainable by fragmented human services. They suggest that user feedback guides their designs, positioning the dual role as a response to consumer demand. However, detractors maintain that without external oversight, such claims lack accountability, potentially compromising user well-being for commercial gain.

Ethicists and tech policy experts offer a pragmatic solution, advocating for industry standards that mandate role differentiation within AI systems. They propose that developers collaborate with mental health professionals to embed protective features, ensuring that innovation does not come at the expense of user safety. This balanced approach seeks to reconcile business interests with the imperative to protect vulnerable populations.

Summarizing Insights and Looking Ahead

Taken together, the perspectives gathered here make clear that AI’s dual role in therapy and companionship stirs both hope and concern among experts. The ethical dilemmas of blurred boundaries, the risk of dependency eroding human ties, the privacy threats from unchecked data usage, and the developer focus on engagement over ethics all point to a complex challenge. Differing views, from cautious optimism about AI’s accessibility to stark warnings about its pitfalls, underscore the absence of a unified stance.

Moving forward, several actionable steps emerge from this discourse. Users are encouraged to educate themselves on AI’s limitations and to set personal boundaries to avoid over-reliance. Advocacy for robust regulatory frameworks is gaining traction as a means to enforce ethical standards and protect data privacy. Additionally, collaboration between developers and mental health experts is seen as vital to designing AI with built-in safeguards. For those eager to delve deeper, resources on digital ethics and AI policy offer a way to stay informed and engaged in shaping a safer technological future.