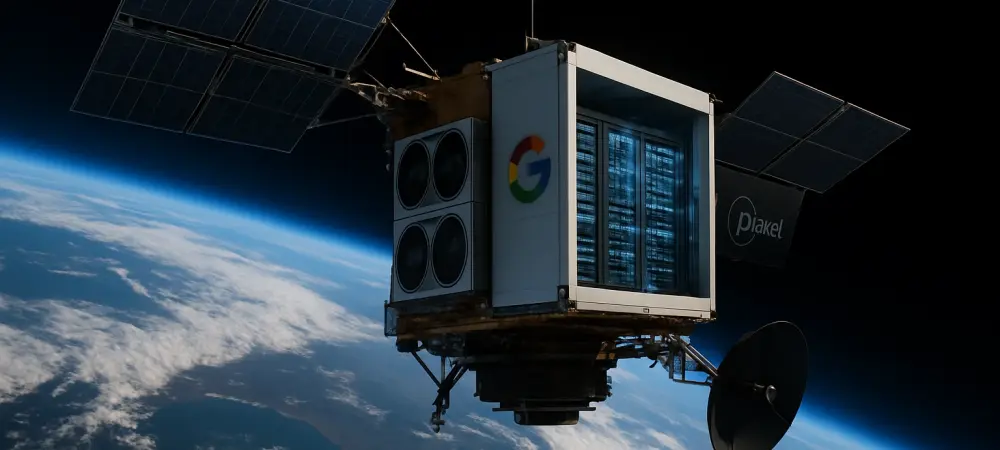

The relentless hum of servers processing artificial intelligence queries now echoes with a planetary-scale problem: an insatiable appetite for energy that is pushing terrestrial data infrastructure to its absolute limits. As the digital demands of a globally connected society escalate, the very ground beneath our feet is proving insufficient to support the future of computation. This realization has sparked a bold new space race, not for celestial bodies, but for a patch of sky to house the next generation of data centers, leading to a landmark collaboration between technology titan Google and geospatial intelligence leader Planet. Their joint initiative, Project Suncatcher, aims to move high-density AI computing off-world, transforming the vacuum of space into a viable, and perhaps necessary, new frontier for data.

The Next Frontier for AI: Is Earth Running Out of Room?

The explosive growth of artificial intelligence is fundamentally a story of energy consumption. Training and running sophisticated AI models require vast server farms that consume electricity on the scale of small cities, generating immense heat that demands equally powerful cooling systems. This escalating need for power and physical space is creating unprecedented strain on national energy grids and local environments, raising critical questions about the long-term sustainability of the AI revolution. As land becomes scarcer and energy costs rise, the technology industry is confronting a physical bottleneck that software optimization alone cannot solve.

Consequently, industry leaders are looking upward for a solution. Many of the constraints that define terrestrial infrastructure, including gravity, weather, finite land, and power-hungry air and liquid cooling, are largely absent in low Earth orbit. Space offers an environment with abundant, nearly uninterrupted solar energy and a natural, if challenging, heat sink. This shift in perspective reframes the cosmos not just as a final frontier for exploration, but as a practical and strategic expansion of our planet’s digital infrastructure, moving the most energy-intensive computational tasks to a realm where solar power and physical space are far less constrained.

Why the Cloud Is Looking to the Stars

A primary driver for this stellar migration is the quest for continuous, clean power. By placing data centers in a dawn-dusk sun-synchronous orbit, satellites can remain in near-constant sunlight, harvesting solar energy without the interruptions of night or cloud cover that hinder terrestrial solar farms. This arrangement promises a stable and predictable power source, perfectly suited to the unwavering 24/7 demands of AI workloads, thereby sidestepping the complex energy storage and grid-balancing challenges faced on Earth.
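To make the orbital geometry concrete, here is a rough sketch of how the inclination of a sun-synchronous orbit can be estimated. It uses the standard first-order J2 nodal-precession formula for a circular orbit. The 650 km altitude is an illustrative assumption, not a figure from the project, and the function name is hypothetical.

```python
import math

# Standard Earth constants (WGS-84 style values).
MU_EARTH = 3.986004418e14            # gravitational parameter, m^3/s^2
R_EARTH = 6378137.0                  # equatorial radius, m
J2 = 1.08263e-3                      # oblateness coefficient, dimensionless
TROPICAL_YEAR_S = 365.2422 * 86400.0 # seconds in one tropical year


def sun_sync_inclination_deg(altitude_km: float) -> float:
    """Estimate the inclination (degrees) of a circular sun-synchronous orbit.

    The orbital plane must precess eastward by one full turn per year so
    that it keeps the same orientation relative to the Sun. First-order J2
    theory gives the nodal precession rate as
        dOmega/dt = -1.5 * J2 * (R/a)^2 * n * cos(i)
    which is solved here for the inclination i.
    """
    a = R_EARTH + altitude_km * 1000.0         # semi-major axis, m
    n = math.sqrt(MU_EARTH / a**3)             # mean motion, rad/s
    required_rate = 2.0 * math.pi / TROPICAL_YEAR_S
    cos_i = -required_rate / (1.5 * J2 * (R_EARTH / a) ** 2 * n)
    if abs(cos_i) > 1.0:
        raise ValueError("no sun-synchronous orbit exists at this altitude")
    return math.degrees(math.acos(cos_i))


if __name__ == "__main__":
    # Assumed altitude, chosen only for illustration.
    print(f"{sun_sync_inclination_deg(650.0):.2f} degrees")
```

For the assumed altitude the result is about 98 degrees, a slightly retrograde, near-polar orbit. A dawn-dusk orbit is the special case whose plane sits along the day-night boundary, which is why the satellite sees the Sun almost continuously.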

Moreover, the vacuum of space presents a distinctive approach to the critical problem of heat dissipation. On Earth, data centers rely on complex and costly air and liquid cooling systems to prevent processors from overheating. In orbit, there is no surrounding air or water to carry heat away, but heat can be radiated directly into the cold expanse of space. Although this requires sophisticated thermal engineering, it could offer a path to cooling high-performance computer chips, like Google’s TPUs, more efficiently than terrestrial methods allow, unlocking new potential for computational density and power.

Inside Project Suncatcher: A Blueprint for AI in Orbit

Project Suncatcher represents the first concrete step toward this ambitious vision. The partnership between Google and Planet leverages the distinct strengths of each company: Google’s expertise in AI hardware and cloud infrastructure, and Planet’s proven experience in designing, deploying, and managing large-scale satellite constellations. The initial phase of the project is a focused research and development effort, set to culminate in the launch of two demonstration satellites by early 2027. These prototypes will serve as a crucial testbed for the core technologies needed for orbital computing.

At the heart of these demonstration spacecraft will be Google’s specialized Tensor Processing Units (TPUs), chips explicitly designed to accelerate AI computations. The satellites, built upon Planet’s new and robust Owl satellite bus, will test the performance and resilience of these TPUs in the harsh radiation environment of space. Key mission objectives include validating novel methods for radiating heat away from the powerful processors and demonstrating the high-bandwidth laser communication links necessary for the satellites to operate as a cohesive, interconnected cluster. This “cluster system approach” is foundational, as the long-term plan envisions not single satellites, but vast, formation-flying networks of computational nodes. The ultimate vision scales this concept to a network of thousands of satellites, establishing a permanent data infrastructure in the sky.

Voices from the Vanguard: Industry Titans on the Future of Data

This pioneering effort is championed by leaders who see it as an inevitable and logical progression. Planet CEO Will Marshall describes the collaboration as a “competitive win,” emphasizing that only a handful of companies possess the operational expertise to manage complex constellations at this scale. He argues that as launch costs continue to plummet, the economic case for moving energy-intensive computing into orbit becomes increasingly compelling. This project strategically aligns with Planet’s existing development of its Owl imaging satellites, creating valuable synergies in technology and infrastructure.

The sentiment is echoed across the technology sector. Google CEO Sundar Pichai has predicted that within a decade, space-based data centers will be viewed as a “more normal” component of global IT infrastructure. This forward-looking perspective is shared by other influential figures, including SpaceX’s Elon Musk and Amazon’s Jeff Bezos, who have both articulated plans for orbital data solutions. Startups such as Starcloud and Aetherflux are also entering the nascent market, signaling a broader industry consensus that the future of high-performance computing lies beyond Earth’s atmosphere.

The Orbital Gauntlet: Overcoming the Challenges of Space-Based Computing

Despite the immense promise, the path to a fully functional orbital cloud is fraught with formidable technical and logistical hurdles. Chief among these is thermal management. While space is cold, the vacuum is a poor conductor of heat, meaning specialized, large-scale radiator systems must be engineered to prevent the powerful TPUs from frying themselves. Furthermore, electronics in low Earth orbit are exposed to significantly higher levels of radiation than on the ground, which can corrupt data and damage sensitive components. Developing robust shielding and fault-tolerant systems is paramount to ensuring the reliability and longevity of these orbital data centers.
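The thermal challenge described above can be put in rough numbers with the Stefan-Boltzmann law, which governs how much heat a surface sheds by radiation alone. The sketch below is a back-of-the-envelope estimate, not a design method. The emissivity, radiator temperature, and near-zero sink temperature are all assumptions chosen for illustration, and a real radiator would also absorb sunlight and infrared from Earth.

```python
SIGMA = 5.670374419e-8  # Stefan-Boltzmann constant, W / (m^2 K^4)


def radiator_area_m2(
    heat_w: float,
    radiator_temp_k: float = 300.0,  # assumed radiator surface temperature
    emissivity: float = 0.9,         # assumed surface emissivity
    sink_temp_k: float = 0.0,        # idealized deep-space background
    sides: int = 1,                  # number of faces radiating to space
) -> float:
    """Estimate the radiator area needed to reject heat_w watts by radiation.

    Net radiated flux per unit area is
        q = emissivity * SIGMA * (T_radiator^4 - T_sink^4)
    and the required area is heat_w / (q * sides).
    """
    flux = emissivity * SIGMA * (radiator_temp_k**4 - sink_temp_k**4)
    if flux <= 0.0:
        raise ValueError("radiator must be hotter than its surroundings")
    return heat_w / (flux * sides)


if __name__ == "__main__":
    # Hypothetical 1 kW heat load, for scale only.
    print(f"{radiator_area_m2(1000.0):.2f} m^2 per kW at 300 K")
    print(f"{radiator_area_m2(1000.0, radiator_temp_k=350.0):.2f} m^2 per kW at 350 K")
```

Under these idealized assumptions, each kilowatt of waste heat needs roughly 2.4 square meters of single-sided radiator at 300 K. Because radiated power scales with the fourth power of temperature, running the radiator hotter shrinks the required area quickly, which is one reason the thermal design is tightly coupled to how warm the chips can safely run.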

Beyond the immediate engineering problems, the sheer scale of the vision presents its own challenges. While launch costs have decreased, deploying and maintaining a constellation of thousands of high-powered satellites remains a monumental and expensive undertaking. Issues such as orbital debris, spectrum allocation for communication, and the development of in-space servicing capabilities must be addressed to ensure the long-term viability of such a system. Overcoming this orbital gauntlet will require sustained innovation, significant investment, and unprecedented international cooperation.

The joint initiative between Google and Planet is a decisive move that shifts the concept of orbital data centers from theoretical discussion to tangible development. The venture reflects a critical acknowledgment that the exponential growth of artificial intelligence requires a similarly exponential leap in infrastructure, one that transcends terrestrial boundaries. If the demonstration satellites succeed in testing processing units and thermal regulation in orbit, they will provide the foundational proof that the cold, empty void of space can, in fact, host the vibrant, energy-intensive heart of the next digital age. It is a step that could reshape the long-term roadmap for both the space and technology industries.