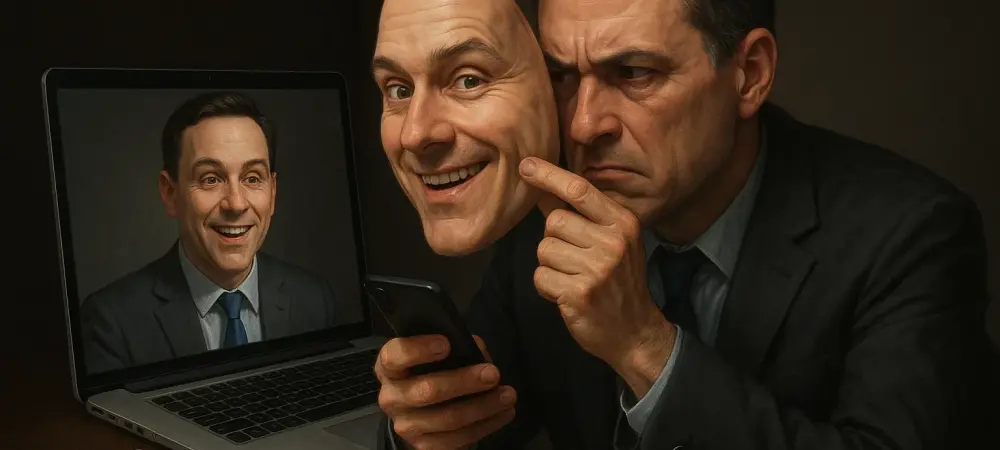

Deepfake technology has significantly altered the landscape of digital deception, ushering in a new era of fraud that employs artificial intelligence to create highly realistic audio and video content. This technology has infiltrated corporate spaces, allowing cybercriminals to impersonate trusted executives and initiate fraudulent transactions, potentially causing substantial financial damage to companies. The threat of deepfake fraud is not a distant future concern but an immediate threat, with notable worldwide financial losses already recorded. Businesses are increasingly faced with the challenge of distinguishing between reality and meticulously crafted fabrications that can deceive even the most vigilant employees. As these scams become more prevalent, organizations must strengthen their defenses against these AI-powered attacks to safeguard their resources and reputation.

The Mechanics of Deepfake Fraud

Deepfake fraud usually starts with the collection of publicly available audio or video of a target, which is then used to train AI models. These models can generate synthetic voices or videos that closely resemble the real person, enabling cybercriminals to impersonate senior executives on calls or in video conferences. The impersonator often creates a sense of urgency that pressures employees into hasty decisions without thorough verification. This type of scam combines social engineering with cutting-edge technology, creating a formidable threat to unsuspecting individuals and organizations. In one widely noted incident, criminals used deepfake audio to impersonate a CEO’s voice and persuaded a finance officer to authorize a substantial fund transfer. The technology is sophisticated enough that even a few seconds of recorded audio can be turned into a convincing imitation. These deceptions highlight the need for strong verification systems, as even seasoned professionals can become victims.

Real-World Impacts and High-Profile Cases

The impact of deepfake fraud is already visible in the financial losses suffered by companies worldwide. In a high-profile case in Hong Kong, an Arup employee was deceived into transferring HK$200 million (roughly US$25 million) after a seemingly authentic video call that turned out to be a deepfake. Such elaborate scams show how refined criminals’ techniques have become, layering fake invoices and bogus legal documentation on top of the impersonation to lend credibility to their operations.

Western companies have also fallen prey to these schemes. In one incident, deepfake technology was used to mimic the voice and video likeness of the CEO of a leading advertising firm across reputable platforms, nearly resulting in severe monetary losses. Similar deceptions have been carried out in Asia via platforms such as Zoom and WhatsApp, using both video and audio to push through unauthorized financial transactions. These examples are stark reminders of the operational and financial vulnerabilities that companies face in today’s digital age.

Expert Warnings and Future Trends

Surveys consistently show mounting concern among finance professionals about the frequency and sophistication of deepfake fraud attempts. In the U.S. and U.K., more than half of respondents report encounters with such scams, with nearly half experiencing monetary losses, underscoring the need for increased corporate vigilance. Experts, including those from Deloitte, predict that AI-enabled scams could cause collective losses reaching billions within a few years.

Cybersecurity professionals broadly agree that rapid AI advances are worsening the threat environment. Reports indicate a rise in ‘vishing’ (voice phishing) scams, in which AI-generated voices are used to deceive individuals into compromising organizational security. Tools that promise real-time detection of manipulated media are emerging, yet experts caution that these must be complemented by comprehensive policies and vigilant human oversight. Companies are urged to rigorously assess their threat detection strategies and to integrate AI technology with traditional safeguards.

Mitigation Strategies for Deepfake Threats

To counteract deepfake fraud effectively, cybersecurity experts advocate several strategies. One is multi-factor verification: any sensitive request must be independently confirmed through a trusted channel separate from the one on which it arrived. This practice reduces impulsive decisions prompted by fraudulent communications that exploit perceived urgency.

Moreover, implementing dual-platform confirmations can prevent single points of failure in decision-making, ensuring that no major financial transaction is approved by a single individual without checks. Cybersecurity training focused on identifying signs of deepfake activity, such as audio glitches or visual anomalies, helps employees scrutinize dubious requests. Embedding awareness campaigns in corporate culture makes protocol adherence routine, promoting diligence over complacency in transactional matters.
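The verification rules described in this section can be written down as a simple release check. The following is a minimal sketch, not a reference implementation: the `PaymentRequest` type, the `release_blockers` function, the channel names, and the 10,000 threshold are all hypothetical, chosen only to illustrate two ideas, approval by more than one person and confirmation on a channel other than the one the request arrived on.

```python
from dataclasses import dataclass, field

# Hypothetical threshold; a real policy would set this per organization.
HIGH_VALUE_THRESHOLD = 10_000


@dataclass
class PaymentRequest:
    requester: str
    amount: float
    request_channel: str  # where the request arrived, e.g. "video_call"
    approvers: set[str] = field(default_factory=set)
    verified_channels: set[str] = field(default_factory=set)


def release_blockers(req: PaymentRequest) -> list[str]:
    """Return the reasons a payment may not be released (empty list = OK)."""
    if req.amount < HIGH_VALUE_THRESHOLD:
        return []  # low-value payments skip the extra checks in this sketch

    blockers = []

    # Rule 1: no single individual can approve alone, and the requester
    # does not count as an approver of their own request.
    independent_approvers = req.approvers - {req.requester}
    if len(independent_approvers) < 2:
        blockers.append("needs two approvers other than the requester")

    # Rule 2: confirmation must come from a channel other than the one
    # the request arrived on, since that channel may be the deepfake.
    out_of_band = req.verified_channels - {req.request_channel}
    if not out_of_band:
        blockers.append("needs confirmation on a separate channel")

    return blockers


if __name__ == "__main__":
    req = PaymentRequest("assistant", 250_000, "video_call")
    print(release_blockers(req))  # both rules block the payment

    req.approvers |= {"controller", "treasurer"}
    req.verified_channels.add("callback_to_known_number")
    print(release_blockers(req))  # []
```

Note that confirming the request on the same video call does not satisfy the second rule. That is the point of the design: a convincing call cannot vouch for itself.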

Embracing Technological and Human Solutions

While technological solutions like AI-based monitoring tools offer promising pathways for deepfake detection, human involvement remains a critical component of the defense strategy. Cultivating a workplace culture that values vigilance and encourages skepticism about unsolicited demands contributes to a more secure organizational framework. Additionally, policies that limit executives’ media exposure help reduce the availability of source material for AI misuse. By enforcing stringent controls over proprietary data and monitoring for unusual account activity, companies can establish multiple defense layers against data breaches that facilitate deepfake scams. Ultimately, integrating technological defenses with employee education and stringent procedural compliance forms the backbone of an effective strategy against the continuously evolving threat of corporate deepfake fraud.

Navigating the Path Forward

Deepfake scams frequently begin with the gathering of publicly accessible media of targets, such as audio clips or video footage. These materials are vital for training sophisticated AI models capable of producing synthetic voices or videos that closely resemble authentic versions. With AI-generated likenesses, cybercriminals can mimic high-ranking company officials during calls or video meetings, urging employees to act hastily and often to forgo proper scrutiny. The emergence of such scams demonstrates the merging of social engineering with advanced technology, presenting a significant threat to unsuspecting individuals and organizations.

Deepfake technology’s utility in corporate fraud stems from its capacity to manipulate the psychological trust derived from familiar voices and appearances. For example, a well-publicized case saw criminals using deepfake audio to replicate a CEO’s voice, directing a finance officer to authorize a significant fund transfer. The technology’s sophistication means that even brief audio clips can produce nearly indistinguishable copies, facilitating criminal activity. As a result, even experienced professionals have been deceived, highlighting the pressing need for robust verification protocols.