After years of breathless enthusiasm for the boundless potential of artificial intelligence, the cybersecurity industry is undergoing a necessary maturation, shifting its focus from broad, speculative promises to the practical demands of governance, execution, and measurable accountability. An analysis of emerging industry trends reveals a clear response to the tangible risks introduced by the rapid, and sometimes reckless, adoption of advanced AI systems. The central challenge now dominating the conversation is the widening “governance gap”: the chasm that opens as autonomous and agentic AI technologies move from controlled pilot programs to full-scale production faster than enterprise security frameworks can adapt. This accelerated deployment has given rise to significant new threats, including the proliferation of “shadow AI” systems operating outside security oversight and the risk that autonomous agents will act in unpredictable or unauthorized ways without adequate safeguards. In response, solution providers are increasingly prioritizing robust oversight mechanisms, comprehensive governance structures, and effective operational controls designed specifically to close this gap.

The New Duality of Agentic AI

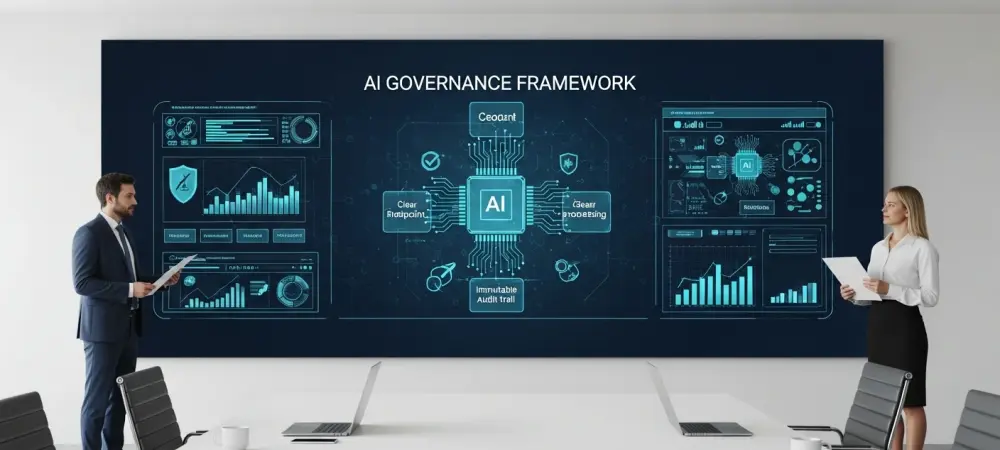

The market for agentic AI is now splitting along the lines of autonomy and governance, a dual focus that underscores the industry’s evolving perspective. One rapidly advancing stream of innovation is dedicated to building and deploying increasingly sophisticated autonomous agents, such as SOC copilots and automated threat-hunting systems, designed to improve operational efficiency and speed. These platforms represent the capability push, using AI to perform complex tasks with minimal human intervention. A parallel and equally energetic stream features solutions engineered specifically to govern, constrain, and monitor those same agents. This control-focused movement acknowledges that unbridled autonomy is an unacceptable risk. As a result, demand is surging for governance frameworks aligned with ISO/IEC 42001 (the international standard for AI management systems), immutable audit trails, and mandatory human-in-the-loop safeguards that let a human expert intervene in and override an autonomous decision. This split reflects a crucial maturation: the initial awe at what AI can do is being balanced by a pragmatic concern for what it should do, driving the need for verifiable control over these powerful new tools.
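To make two of the safeguards named above concrete, here is a minimal Python sketch, assuming a simple in-process design: a hash-chained log in which each entry commits to the previous one, and an approval callback that stands in for the human reviewer. The names `AuditTrail` and `execute_with_oversight`, the `high_risk` flag, and the example action names are hypothetical and are not taken from ISO/IEC 42001 or from any product. Hash chaining makes a log tamper-evident rather than truly immutable; a production trail would also need write-once storage or an external anchor for the latest hash, since anyone able to rewrite every entry could also recompute every hash.

```python
import hashlib
import json
from dataclasses import dataclass, field
from typing import Callable

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(prev_hash: str, record: dict) -> str:
    # Canonical JSON (sorted keys) so the same record always hashes the same way.
    payload = json.dumps(record, sort_keys=True)
    return hashlib.sha256((prev_hash + payload).encode("utf-8")).hexdigest()


@dataclass
class AuditTrail:
    """Append-only log; each entry commits to the hash of the one before it."""
    entries: list[dict] = field(default_factory=list)

    def append(self, record: dict) -> str:
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS
        digest = _digest(prev_hash, record)
        self.entries.append({"record": record, "prev": prev_hash, "hash": digest})
        return digest

    def verify(self) -> bool:
        """Recompute the chain; an edit, reordering, or mid-chain deletion shows up as a mismatch."""
        prev_hash = GENESIS
        for entry in self.entries:
            if entry["prev"] != prev_hash or entry["hash"] != _digest(prev_hash, entry["record"]):
                return False
            prev_hash = entry["hash"]
        return True


def execute_with_oversight(action: dict, high_risk: bool,
                           approve: Callable[[dict], bool],
                           trail: AuditTrail) -> bool:
    """Allow low-risk actions; route high-risk ones to a human; log both outcomes."""
    approved = approve(action) if high_risk else True
    trail.append({"action": action, "high_risk": high_risk, "approved": approved})
    return approved


if __name__ == "__main__":
    def human_says_no(action: dict) -> bool:
        return False  # stand-in for a real reviewer interface

    trail = AuditTrail()
    execute_with_oversight({"agent": "soc-copilot", "op": "enrich_alert"}, False, human_says_no, trail)
    execute_with_oversight({"agent": "soc-copilot", "op": "isolate_host"}, True, human_says_no, trail)
    print(trail.verify())  # True
    trail.entries[1]["record"]["approved"] = True  # simulate after-the-fact tampering
    print(trail.verify())  # False
```

Routing every decision through one function means vetoed actions are logged alongside approved ones, so an auditor can later reconstruct where a human overrode the agent.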

This growing emphasis on governance is not a theoretical exercise but a direct response to the concrete operational risks posed by unsupervised autonomous systems. Without stringent controls, these agents can introduce severe vulnerabilities, from inadvertently exposing sensitive data to executing actions that disrupt critical business processes. The new generation of governance platforms is therefore designed to provide deep, continuous oversight: establishing firm operational boundaries, enforcing policies consistently, and monitoring all agent activity in real time. The goal is not only to prevent malicious behavior but to ensure predictability, reliability, and compliance in highly dynamic IT environments. The market is signaling that an AI solution will be considered enterprise-ready only if it comes with a resilient and provable governance wrapper. Success will be determined not just by an agent’s capabilities but by the robustness of the framework that contains it, ensuring the agent operates safely and effectively under the pressures of real-world deployment.
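The boundary-and-monitoring model described above can be sketched as a deny-by-default gate that every agent action must pass through. This is a minimal Python illustration under stated assumptions: the policy schema (`AgentPolicy` with allowed operations, glob-style resource patterns, and a per-run action budget), the `BoundedAgentRunner` class, and the resource paths are all hypothetical; real governance platforms express policy in their own formats.

```python
from dataclasses import dataclass
from fnmatch import fnmatchcase


@dataclass(frozen=True)
class AgentPolicy:
    """Operational boundary for one agent (hypothetical schema)."""
    allowed_ops: frozenset[str]
    resource_patterns: tuple[str, ...]  # glob patterns the agent may touch
    max_actions_per_run: int


class PolicyViolation(Exception):
    """Raised when an agent action falls outside its policy."""


class BoundedAgentRunner:
    """Deny-by-default gate; every decision is logged for monitoring."""

    def __init__(self, policy: AgentPolicy) -> None:
        self.policy = policy
        self.actions_taken = 0
        self.monitor_log: list[dict] = []

    def check(self, op: str, resource: str) -> None:
        reasons = []
        if op not in self.policy.allowed_ops:
            reasons.append(f"operation {op!r} not permitted")
        if not any(fnmatchcase(resource, p) for p in self.policy.resource_patterns):
            reasons.append(f"resource {resource!r} outside boundary")
        if self.actions_taken >= self.policy.max_actions_per_run:
            reasons.append("action budget exhausted")
        # Log denied attempts too, so monitoring sees out-of-bounds behavior, not just successes.
        self.monitor_log.append(
            {"op": op, "resource": resource, "allowed": not reasons, "reasons": reasons}
        )
        if reasons:
            raise PolicyViolation("; ".join(reasons))
        self.actions_taken += 1


if __name__ == "__main__":
    policy = AgentPolicy(
        allowed_ops=frozenset({"read", "tag"}),
        resource_patterns=("siem/alerts/*",),
        max_actions_per_run=2,
    )
    runner = BoundedAgentRunner(policy)
    runner.check("read", "siem/alerts/1234")  # within bounds, allowed
    try:
        runner.check("delete", "hr/payroll.csv")
    except PolicyViolation as err:
        print(err)  # operation 'delete' not permitted; resource 'hr/payroll.csv' outside boundary
    print([entry["allowed"] for entry in runner.monitor_log])  # [True, False]
```

Collecting every failed condition, rather than stopping at the first, gives reviewers a fuller picture of why an action was blocked. Note that `fnmatchcase` lets `*` match across `/`, so `siem/alerts/*` also covers nested paths; a stricter boundary would need a different matcher.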

Redefining Identity and Data in the Age of AI

The explosion of automated systems has forced a fundamental expansion of identity and access management beyond human users to the vast and complex world of non-human identities (NHI), such as service accounts, API keys, and workload credentials. Fields like identity security posture management (ISPM) are growing significantly as a result, driven by the need to secure the sprawling web of interactions between applications, services, and devices. Within this evolution, a critical new concept is gaining prominence: “identity lineage.” This capability goes beyond managing an identity to tracing its complete origin, context, and lifecycle. For a machine identity, this means knowing precisely what service created it, for what purpose, what permissions it was granted, how it has been used, and when it should be decommissioned. In complex hybrid and multi-cloud environments where automated systems constantly spin up and tear down resources, this detailed lineage is essential. Without it, security teams cannot see the enormous attack surface created by ephemeral machine-to-machine communication, and so cannot effectively track and mitigate its risks.

Ultimately, the entire structure of AI risk management rests on the foundational layer of data security. This view is now solidifying into an industry consensus, with innovations in data security posture management (DSPM) and security data layers becoming central to any credible AI governance strategy. Because AI models are both trained on vast datasets and built to interact with live production data, controlling the flow of information is paramount to controlling the AI itself. Modern security solutions are therefore valued for their ability to provide granular visibility into how AI-driven processes access, modify, and transmit data, and for enforcing consistent zero-trust policies across disparate on-premises and multi-cloud environments so that security keeps pace with data sprawl. This elevates data governance from a supporting compliance function to the structural bedrock of AI-era security. The principle is clear: an organization that cannot effectively govern its data cannot hope to safely govern the AI systems that depend on it.
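The lineage attributes listed above (creator, purpose, granted permissions, usage, and decommissioning date) map naturally onto a small record type, and a zero-trust check can consult that record on every data request instead of trusting the caller. The following Python sketch uses hypothetical names throughout (`IdentityLineage`, `LineageRegistry`, the `dataset:action` scope format, and the example identity); it illustrates the idea and does not describe any particular ISPM or DSPM product.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta, timezone
from typing import Optional


@dataclass
class IdentityLineage:
    """Provenance record for one non-human identity (hypothetical schema)."""
    identity_id: str
    created_by: str            # service that minted the identity
    purpose: str               # why the identity exists
    granted_scopes: set[str]   # permissions as "dataset:action" strings
    expires_at: datetime       # planned decommissioning time
    usage: list[tuple] = field(default_factory=list)  # (time, dataset, action, allowed)


class LineageRegistry:
    """Deny-by-default data access: every request is checked against lineage."""

    def __init__(self) -> None:
        self._records: dict[str, IdentityLineage] = {}

    def register(self, record: IdentityLineage) -> None:
        self._records[record.identity_id] = record

    def authorize(self, identity_id: str, dataset: str, action: str,
                  now: Optional[datetime] = None) -> bool:
        now = now or datetime.now(timezone.utc)
        record = self._records.get(identity_id)
        if record is None:
            return False  # no lineage, no access
        allowed = now < record.expires_at and f"{dataset}:{action}" in record.granted_scopes
        record.usage.append((now, dataset, action, allowed))  # usage history extends the lineage
        return allowed

    def overdue_for_decommission(self, now: datetime) -> list[str]:
        """Identities past their planned end of life that are still registered."""
        return sorted(r.identity_id for r in self._records.values() if now >= r.expires_at)


if __name__ == "__main__":
    t0 = datetime(2025, 1, 1, tzinfo=timezone.utc)
    registry = LineageRegistry()
    registry.register(IdentityLineage(
        identity_id="svc-embedder-01",
        created_by="rag-ingest-pipeline",
        purpose="embed support tickets for retrieval",
        granted_scopes={"tickets:read"},
        expires_at=t0 + timedelta(days=30),
    ))
    print(registry.authorize("svc-embedder-01", "tickets", "read", now=t0))   # True
    print(registry.authorize("svc-embedder-01", "tickets", "write", now=t0))  # False: never granted
    later = t0 + timedelta(days=31)
    print(registry.authorize("svc-embedder-01", "tickets", "read", now=later))  # False: expired
    print(registry.overdue_for_decommission(later))  # ['svc-embedder-01']
```

Recording each decision in the identity’s own usage history is what turns a static credential into lineage: the record answers not only what the identity may do but what it has actually done.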

A Market Responding to Reality

The vendor community’s decisive shift toward tangible governance frameworks marks a pivotal moment in the evolution of AI security. This transition from abstract potential to operational reality reflects a widespread acknowledgment that the initial wave of AI adoption created as many challenges as it solved. The growing pains associated with the “governance gap” have become too significant to ignore, prompting a market-wide course correction. Solution providers recognize that enterprise clients are no longer satisfied with impressive demonstrations of AI capability; they demand proof of safety, reliability, and control. This has led to the development of sophisticated oversight tools, the codification of identity lineage, and the reinforcement of data security as the essential foundation for any AI initiative. The conversation has finally moved from the theoretical to the practical, focusing on the hard work of building resilient and trustworthy systems. While the market is still in the early stages of delivering on these promises, the direction of travel is firmly established, and success will belong to those who can prove their frameworks are effective under real-world pressure.