The latest evolution in cyber-espionage is no longer just about sophisticated code; it is about psychologically weaponized content, generated by artificial intelligence to exploit human emotion with chilling precision. The use of artificial intelligence in malware development represents a significant advancement in offensive cyber capabilities. This review traces how AI-assisted malware has evolved, examines its key features as demonstrated in recent campaigns, assesses its operational effectiveness, and considers its impact on the cybersecurity landscape. Its purpose is to provide a thorough understanding of the technology’s current capabilities, its strategic implications, and its potential for future development.

The Dawn of AI in Cyber Offense

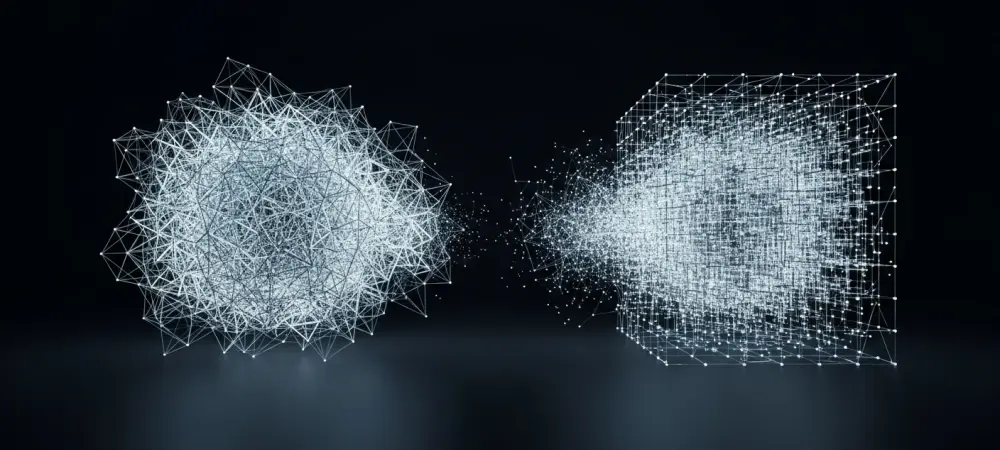

The convergence of artificial intelligence, particularly Large Language Models (LLMs), and malware creation marks a pivotal moment in offensive cyber operations. This trend is rooted in the principle of using AI to either generate malicious code from scratch or significantly augment existing malware frameworks. By automating complex coding tasks and content creation, AI provides threat actors with powerful new tools. This capability has emerged in a context of rapidly advancing, publicly accessible AI, democratizing a level of sophistication previously reserved for the most well-resourced organizations.

This development constitutes a paradigm shift, fundamentally altering the threat landscape. For novice attackers, AI lowers the barrier to entry, enabling them to craft and deploy malware that would otherwise be beyond their technical reach. Simultaneously, it enhances the capabilities of established state-aligned groups, allowing them to accelerate development cycles, create more evasive tools, and scale their operations with unprecedented efficiency. Because AI benefits novices and veterans alike, AI-assisted threats are likely to become both more common and more dangerous.

An Anatomy of AI-Assisted Malware

AI-Crafted Lures and Advanced Social Engineering

The initial stage of an AI-assisted attack often hinges on psychologically manipulative social engineering, a domain where LLMs excel. Threat actors are now crafting highly detailed and convincing lures that go far beyond typical phishing emails. A prime example is the RedKitten campaign, which utilized fabricated forensic files to prey on the emotions of its targets. These lures are not merely text; they are entire narratives, complete with fabricated data, designed to create a powerful sense of urgency and grief.

AI’s ability to generate large volumes of structured yet fabricated data is key to this tactic’s success. In the RedKitten case, the malware was delivered in spreadsheets containing graphic, emotionally charged details about deceased protesters. Although these spreadsheets contain internal inconsistencies detectable under close scrutiny, their initial shock value is often enough to compel a target to lower their defenses and enable the macros that initiate the infection. This demonstrates a strategic move toward exploiting human psychology as the weakest link in the security chain, with AI serving as an ideal tool for crafting the bait.
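Because the infection depends on the target enabling macros, one low-cost defensive check is to flag spreadsheets that carry an embedded VBA project before they reach a user. The minimal Python sketch below assumes the lures are Office Open XML packages (such as `.xlsm`), which store macros in a `vbaProject.bin` part; the article does not specify the file format, and the helper name `has_vba_project` is hypothetical. Legacy binary formats such as `.xls` are OLE compound files and would need a different parser.

```python
import zipfile


def has_vba_project(path: str) -> bool:
    """Return True if the OOXML package at `path` contains a vbaProject.bin part.

    Hypothetical triage helper. It inspects the file's contents rather than
    trusting its extension, so a macro-bearing workbook renamed to .xlsx is
    still flagged. Non-ZIP files (including legacy .xls/.doc) return False.
    """
    if not zipfile.is_zipfile(path):
        return False
    with zipfile.ZipFile(path) as package:
        # Excel stores macros at xl/vbaProject.bin, Word at word/vbaProject.bin.
        return any(name.lower().endswith("vbaproject.bin") for name in package.namelist())
```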

A Technical Deep Dive into the SloppyMIO Implant

At the core of many such campaigns is a sophisticated payload, and the “SloppyMIO” implant serves as an exemplary case study. Developed in C#, this malware is engineered with a polymorphic design, meaning it alters its code with each new infection. This “sloppy” or variable structure is a deliberate feature intended to thwart signature-based antivirus solutions, which rely on recognizing known malware patterns. The initial deployment vehicle is a VBA dropper embedded within a macro-enabled document, a classic but effective method for gaining an initial foothold.
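To see why a variable code structure undermines signature matching, consider the crudest form of signature, an exact file hash. The Python sketch below uses two hypothetical C# fragments that differ only in a variable name. Real antivirus engines use richer signatures (byte patterns, rule-based matching, heuristics), so this illustrates the principle rather than any product's behavior.

```python
import hashlib

# Two hypothetical C# fragments with identical behavior that differ only in a
# renamed variable, the kind of surface change a polymorphic builder makes.
variant_a = b"int counter = 0; counter++;"
variant_b = b"int tally = 0; tally++;"


def sha256_hex(data: bytes) -> str:
    """Exact-match 'signature' of the kind a naive hash blocklist would store."""
    return hashlib.sha256(data).hexdigest()


# Blocklist built from the first observed sample only.
known_bad_hashes = {sha256_hex(variant_a)}


def is_known_bad(sample: bytes) -> bool:
    return sha256_hex(sample) in known_bad_hashes


print(is_known_bad(variant_a))  # True: the exact sample is caught
print(is_known_bad(variant_b))  # False: one rename defeats the exact-hash signature
```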

Further analysis of SloppyMIO reveals compelling evidence of AI’s involvement in its creation. Researchers have identified unusual artifacts within the code, such as oddly named variables and comments that appear to be machine-generated annotations rather than human notes. These digital fingerprints suggest that an LLM was used to either write entire code blocks or assist a human developer, accelerating the production process and potentially introducing novel coding patterns that are harder for analysts to attribute to a specific group’s known tradecraft.

Modern Evasion Techniques for Stealth and Persistence

Once deployed, AI-assisted malware employs advanced techniques to remain hidden and ensure long-term access to a compromised system. SloppyMIO uses a process hijacking method known as AppDomain Manager Injection, in which a legitimate, signed .NET application is induced to load the attacker’s assembly at startup, so the malicious code executes inside a trusted process. By running under the guise of a trusted application, the malware bypasses many application allowlisting and behavioral detection systems. Persistence is then established through a scheduled task, ensuring the implant relaunches automatically after a system reboot.
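In the .NET Framework, a custom AppDomain manager can be declared in an application's `.exe.config` file, so one hunting approach is to look for config files that do so. The Python sketch below walks a directory tree and reports any `<runtime>` section containing `appDomainManagerAssembly` or `appDomainManagerType`. It assumes the configuration-file vector was used (the article does not say which loading mechanism SloppyMIO relies on), and the function name is hypothetical.

```python
import os
import xml.etree.ElementTree as ET

# Runtime elements that tell the CLR to load a custom AppDomainManager.
SUSPICIOUS_ELEMENTS = ("appDomainManagerAssembly", "appDomainManagerType")


def find_appdomain_manager_configs(root_dir: str) -> list[tuple[str, dict[str, str]]]:
    """Return (path, {element: value}) for each .exe.config declaring a custom manager.

    Hypothetical hunting helper. Only the config-file mechanism is checked;
    other ways of setting an AppDomain manager are out of scope for this sketch.
    """
    hits = []
    for dirpath, _dirnames, filenames in os.walk(root_dir):
        for name in filenames:
            if not name.lower().endswith(".exe.config"):
                continue
            path = os.path.join(dirpath, name)
            try:
                tree = ET.parse(path)
            except (ET.ParseError, OSError):
                continue  # malformed or unreadable config; skipped in this sketch
            runtime = tree.getroot().find("runtime")
            if runtime is None:
                continue
            found = {}
            for tag in SUSPICIOUS_ELEMENTS:
                element = runtime.find(tag)
                if element is not None:
                    found[tag] = element.get("value", "")
            if found:
                hits.append((path, found))
    return hits
```

Hits are leads rather than verdicts: legitimate hosting scenarios may also use custom AppDomain managers, so each result should be checked against the binary's signer and the expected contents of its directory.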

To further complicate detection, the malware’s command-and-control (C2) infrastructure is cleverly routed through legitimate, high-reputation web services. By using platforms like GitHub and Google Drive to retrieve configuration files and Telegram for C2 communications, the malware’s network traffic is blended with legitimate user activity. This tactic makes it exceptionally difficult for network security monitoring tools to distinguish malicious commands from benign data transfers, providing the attackers with a resilient and stealthy communication channel.
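When malicious traffic goes to the same hosts as legitimate traffic, the destination alone is not a useful indicator, but the pairing of process and destination can be. The Python sketch below applies a hypothetical allowlist mapping a few watched services to the processes expected to contact them. The hostnames are real service endpoints, but the process names and the policy itself are illustrative assumptions, and a real deployment would need endpoint telemetry that ties each connection to its originating process.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Connection:
    process: str      # image name of the process that opened the connection
    destination: str  # resolved hostname, e.g. from DNS or TLS SNI logs


# Hypothetical policy: which processes are expected to talk to which services.
WATCHED_SERVICES = {
    "api.telegram.org": {"telegram.exe"},
    "raw.githubusercontent.com": {"git.exe", "code.exe"},
    "drive.google.com": {"chrome.exe", "msedge.exe", "googledrivefs.exe"},
}


def unexpected_service_use(connections):
    """Yield connections to watched services from processes outside the allowlist."""
    for conn in connections:
        allowed = WATCHED_SERVICES.get(conn.destination.lower())
        if allowed is not None and conn.process.lower() not in allowed:
            yield conn
```

This pairs naturally with the injection technique described earlier: a signed utility that suddenly contacts `api.telegram.org` stands out precisely because that process is trusted for something else.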

Emerging Trends in AI-Driven Threat Tactics

The innovations in AI-assisted cyber-espionage are evolving rapidly, with new tactics continually emerging. One notable development is the increased use of steganography, where attackers conceal configuration data or entire malicious payloads within the pixel data of seemingly innocuous image files. This method adds another layer of obfuscation, making the delivery of critical operational components nearly invisible to conventional security scans.
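One classic way to screen images for this kind of hiding is the pairs-of-values chi-square test, which targets least-significant-bit (LSB) replacement. The Python sketch below computes the statistic over a flat list of 8-bit sample values; extracting those values from an image (for example with an imaging library) is assumed and not shown. The article does not say which embedding method the attackers used, so this catches only one family of techniques, and a full test would also convert the statistic into a p-value and scan image regions separately.

```python
from collections import Counter


def pov_chi_square(pixels):
    """Pairs-of-values chi-square statistic over 8-bit sample values.

    Replacing LSBs with random payload bits tends to equalize the counts of
    each value pair (2k, 2k+1). A statistic near zero is consistent with that
    equalization; unmodified images typically score higher.
    """
    histogram = Counter(pixels)
    statistic = 0.0
    for k in range(128):
        even, odd = histogram[2 * k], histogram[2 * k + 1]
        expected = (even + odd) / 2
        if expected > 0:
            statistic += (even - expected) ** 2 / expected
    return statistic
```

For instance, `pov_chi_square([0] * 100)` returns `50.0`, while the perfectly balanced `[0] * 50 + [1] * 50` returns `0.0`.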

More broadly, the adoption of LLMs is becoming a pervasive trend among a diverse range of threat actors. This widespread use is beginning to blur the lines between previously distinct groups, as AI-generated toolsets lack the unique developer quirks and signatures that security researchers rely on for attribution. The result is a more homogenized threat landscape where it becomes increasingly difficult to determine if an attack originates from one state-sponsored group or another, or if it is the work of an entirely new entity leveraging shared AI development platforms.

The RedKitten Campaign: A Case Study in AI-Assisted Espionage

The RedKitten campaign provides a stark, real-world example of AI-assisted malware in action. This operation specifically targeted Iranian human rights organizations, political dissidents, and activists involved in documenting civil unrest. By leveraging highly emotional, fabricated content about protest casualties, the attackers demonstrated a keen understanding of their targets’ motivations and vulnerabilities, a level of psychological targeting enhanced by AI-generated content.

The campaign’s strategic profile shows significant overlaps in tactics, techniques, and procedures (TTPs) with known state-sponsored actors, particularly the IRGC-aligned group tracked as Yellow Liderc. The use of malicious Excel documents, the specific AppDomain Manager Injection technique, and the reliance on services like Telegram for C2 are all hallmarks of this established actor. These overlaps place the RedKitten campaign within the broader geopolitical landscape of state-sponsored cyber-espionage, while its AI-assisted components signal a significant evolution in the capabilities of the actor behind it.

Challenges in Detection and Attribution

The rise of AI-assisted malware presents formidable obstacles for cybersecurity professionals. For defenders, the primary challenge lies in detecting threats that are designed to constantly change. Polymorphic malware like SloppyMIO renders traditional signature-based detection largely ineffective, forcing a shift toward more complex and resource-intensive behavioral analysis. Furthermore, identifying C2 traffic that is cleverly disguised within the noise of legitimate services like Google Drive or Telegram requires sophisticated network traffic analysis and anomaly detection.
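One widely used form of anomaly detection for disguised C2 looks at timing rather than content: an implant that polls a service on a fixed schedule produces unusually regular gaps between connections. The Python sketch below flags a series of connection timestamps whose coefficient of variation (standard deviation of the intervals divided by their mean) falls below a threshold. The thresholds are hypothetical defaults, and the article does not state that SloppyMIO beacons on a fixed schedule; attackers can add jitter and some legitimate clients also poll regularly, so this is one signal among several.

```python
from statistics import mean, pstdev


def looks_like_beaconing(timestamps, max_cv=0.1, min_events=5):
    """Flag a connection series whose inter-arrival times are suspiciously regular.

    `max_cv` and `min_events` are hypothetical defaults, not tuned values.
    """
    if len(timestamps) < min_events:
        return False  # too few events to judge regularity
    ordered = sorted(timestamps)
    intervals = [b - a for a, b in zip(ordered, ordered[1:])]
    avg = mean(intervals)
    if avg <= 0:
        return False  # all events at the same instant; not a periodic pattern
    return pstdev(intervals) / avg <= max_cv
```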

This technology also severely complicates the critical process of attribution. When multiple threat actors use the same publicly available or custom-trained LLMs to generate their tools, the unique coding styles and artifacts that once served as reliable indicators of a specific group begin to disappear. This creates a scenario where attacks become increasingly anonymous, making it difficult for governments and organizations to assign responsibility and enact appropriate countermeasures. AI-generated content does, however, have a limitation for attackers: it can still contain logical flaws, such as the internal inconsistencies in the RedKitten lures, which offer defenders a potential new avenue for detection.
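As a hypothetical illustration of how such logical flaws might be checked automatically, the Python sketch below validates records of the kind the lures describe. The article does not say what the RedKitten inconsistencies actually were, so the `CasualtyRecord` schema and both checks (an impossible date order, and a stated age that disagrees with the dates) are assumptions.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class CasualtyRecord:
    # Hypothetical schema; the real spreadsheet columns are not described in the article.
    name: str
    birth_date: date
    death_date: date
    stated_age: int


def age_on(birth: date, on: date) -> int:
    """Whole years elapsed from `birth` to `on`."""
    return on.year - birth.year - ((on.month, on.day) < (birth.month, birth.day))


def inconsistencies(record: CasualtyRecord) -> list[str]:
    """Return a list of human-readable problems found in one record."""
    problems = []
    if record.death_date < record.birth_date:
        problems.append("death date precedes birth date")
    elif age_on(record.birth_date, record.death_date) != record.stated_age:
        problems.append("stated age does not match the dates")
    return problems
```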

Future Outlook and Strategic Implications

Looking ahead, the trajectory of AI-assisted malware development points toward greater autonomy and proliferation. Future iterations may involve fully autonomous malware capable of making decisions and adapting its tactics in real time without human intervention. Such threats could identify vulnerabilities, craft exploits, and exfiltrate data dynamically, presenting a defensive challenge of an entirely new magnitude. Moreover, as AI development tools become more user-friendly, these advanced capabilities will likely proliferate to a wider range of less-skilled actors, from cybercriminals to hacktivists.

The long-term impact on the cybersecurity industry is profound, signaling the start of a new technological arms race. Defensive strategies will need to evolve beyond traditional methods, increasingly relying on AI-powered systems to detect and respond to AI-generated threats. This will create a dynamic battlefield where defensive AI models are pitted directly against offensive ones, each learning and adapting from the other. The strategic implication is clear: organizations must invest in AI-driven security solutions to keep pace with the rapidly evolving threat landscape.

Conclusion

AI-assisted malware is already a proven and potent threat, as demonstrated by sophisticated operations like the RedKitten campaign. This technology empowers threat actors to create more evasive, psychologically potent, and technically advanced tools with greater efficiency. It has already begun to reshape the cyber threat landscape by lowering entry barriers for attackers and complicating attribution for defenders. This evolution demands a fundamental rethinking of defensive strategies and attribution methodologies, moving from reactive, signature-based approaches to proactive, AI-driven analysis.