The newly published system requirements for IO Interactive’s highly anticipated spy thriller, 007 First Light, have unsettled the PC gaming community, not because of their graphical demands but because of their appetite for system memory. For a title targeting a smooth 60 frames per second at 1080p, the modest GPU suggestion stands in stark contrast to a 32GB RAM recommendation, double the 16GB that has long served as the gaming standard, and the mismatch has sparked a debate about the future of PC hardware requirements.

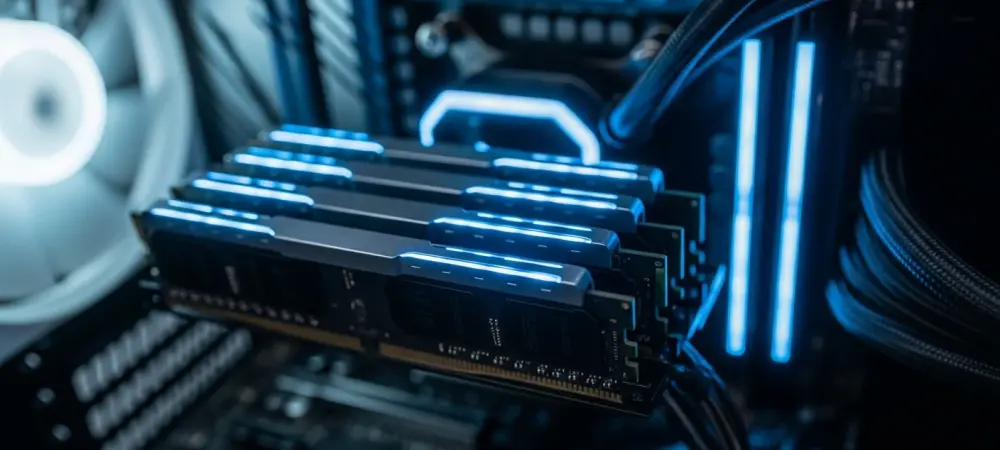

Your Graphics Card Is Ready for 007, but Is Your Memory?

The central conflict in the hardware discussion stems from a significant technical imbalance. The game’s specifications call for a mid-range graphics card like an Nvidia RTX 3060 Ti or an AMD RX 6700 XT, hardware that has become a common baseline for many PC gamers. This accessibility, however, is immediately contrasted by the recommendation for 32GB of system RAM, a figure typically reserved for high-end 4K gaming rigs or content creation workstations. This unusual pairing has left many players questioning the developer’s optimization strategy and the underlying technology driving the game.

A New Standard or a Strange Outlier?

For several years, 16GB of RAM has been the comfortable standard for PC gaming, easily handling the vast majority of titles at 1080p and even 1440p resolutions. The arrival of 007 First Light, a cinematic stealth-action title from the acclaimed developer of the Hitman series, challenges this paradigm. Its 32GB recommendation prompts a crucial question: is this an isolated case of a uniquely demanding game, or is it an early indicator of a new baseline for the next generation of cross-platform titles?

Declassifying the Technical Mission Files

An investigation into the game’s engine provides the first clues. 007 First Light is built on the proprietary Glacier 2 engine, famous for powering the dense, systemic sandboxes of the Hitman trilogy. These games are known for their large, seamless levels populated by hundreds of complex AI characters, all of which require significant memory resources to track and simulate. It is plausible that the Bond title expands on this foundation with even larger environments and more intricate systems, pushing memory usage higher.

Furthermore, the game incorporates new technologies that may contribute to the high requirement. The developer has announced a partnership with Nvidia to implement its latest DLSS 4 upscaling technology, and a new volumetric smoke simulation promises more realistic and dynamic environmental effects. Such features, while visually impressive, add to the overall system load, although upscaling work runs mainly on the GPU, so how much of the 32GB figure they account for is unclear.

What the Experts Suspect

Industry analysis suggests this trend is partially influenced by the latest generation of gaming consoles. Both the PlayStation 5 and Xbox Series consoles feature large pools of fast, unified memory that developers can use for both system and graphics processing. This architectural design encourages the creation of games that rely on holding vast amounts of data in memory for rapid streaming, a development practice that often carries over to the PC version of a title.

This perspective is echoed in community forums and by technical analysts, who speculate that the 32GB figure is likely a “recommended” target for the smoothest possible experience, rather than a hard minimum. Modern games increasingly use RAM to preload assets and reduce in-game stuttering, especially in open-world or large-level designs. The prevailing theory is that while the game may run on 16GB, players with 32GB will experience fewer loading hitches and a more consistent performance profile.

Gearing Up Your Rig for the Mission

For gamers preparing for the mission, the first step is a simple system diagnostic to check their current RAM amount, speed, and whether it is running in a dual-channel configuration for optimal performance. This provides a clear picture of their existing capabilities. From there, the decision to upgrade hinges on budget and patience; DDR5 RAM costs have been falling, but waiting could also see the game receive optimization patches that relax the final requirements.

Ultimately, a balanced system remains the most critical factor. The game’s specified 80GB storage footprint underscores the importance of a fast NVMe solid-state drive. This component is just as crucial as the RAM, as it is responsible for feeding the game’s vast environmental and asset data into memory. A slow drive would create a bottleneck, negating the benefits of having a large amount of RAM and leading to prolonged loading times and potential in-game texture pop-in.
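For readers who would rather script the RAM check than dig through system menus, the following is a minimal Python sketch, not an official tool. It reads the installed memory using only the standard library and compares it with the 16GB and 32GB figures discussed in this article. The function names, the tier labels, and the 1GB of slack (to allow for memory reserved by firmware or integrated graphics) are assumptions made for illustration. Memory speed and dual-channel status are not covered here and still need a hardware-information utility.

```python
import ctypes
import os
import sys

GIB = 1024 ** 3  # bytes per gibibyte


def total_ram_bytes():
    """Return installed physical memory in bytes, or None if it cannot be read."""
    if sys.platform == "win32":
        # Layout of the Win32 MEMORYSTATUSEX structure used by GlobalMemoryStatusEx.
        class MEMORYSTATUSEX(ctypes.Structure):
            _fields_ = [
                ("dwLength", ctypes.c_ulong),
                ("dwMemoryLoad", ctypes.c_ulong),
                ("ullTotalPhys", ctypes.c_ulonglong),
                ("ullAvailPhys", ctypes.c_ulonglong),
                ("ullTotalPageFile", ctypes.c_ulonglong),
                ("ullAvailPageFile", ctypes.c_ulonglong),
                ("ullTotalVirtual", ctypes.c_ulonglong),
                ("ullAvailVirtual", ctypes.c_ulonglong),
                ("ullAvailExtendedVirtual", ctypes.c_ulonglong),
            ]

        status = MEMORYSTATUSEX()
        status.dwLength = ctypes.sizeof(MEMORYSTATUSEX)
        if ctypes.windll.kernel32.GlobalMemoryStatusEx(ctypes.byref(status)):
            return status.ullTotalPhys
        return None
    try:
        # Unix-like systems: page size multiplied by the number of physical pages.
        return os.sysconf("SC_PAGE_SIZE") * os.sysconf("SC_PHYS_PAGES")
    except (AttributeError, ValueError, OSError):
        return None


def classify_ram(total_bytes, baseline_gb=16, recommended_gb=32, slack_gb=1.0):
    """Label a memory size against the article's 16GB and 32GB figures.

    slack_gb is an illustrative assumption: the operating system usually
    reports slightly less than the installed capacity.
    """
    installed_gb = total_bytes / GIB
    if installed_gb >= recommended_gb - slack_gb:
        return "recommended"
    if installed_gb >= baseline_gb - slack_gb:
        return "baseline"
    return "below-baseline"


if __name__ == "__main__":
    ram = total_ram_bytes()
    if ram is None:
        print("Could not determine installed RAM on this platform.")
    else:
        print(f"Installed RAM: {ram / GIB:.1f} GiB ({classify_ram(ram)})")
```

A result of "baseline" means the machine sits at the long-standing 16GB standard, which, per the prevailing theory above, may still run the game with more loading hitches than a 32GB system.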

The extensive discussion surrounding 007 First Light’s memory needs ultimately highlights a significant shift in game development priorities. The focus is moving beyond raw graphical power and toward more complex, simulated worlds. This evolution, driven by new console architectures and advanced engine features, points to a new reality for PC gamers, in which ample system memory and fast storage are just as essential as a powerful GPU for experiencing the next generation of interactive entertainment as its creators intended.