In the rapidly evolving landscape of software development, AI coding assistants have emerged as powerful tools promising unprecedented productivity. However, recent findings suggest this speed comes at a cost, revealing a significant rise in software defects, security vulnerabilities, and technical debt. To shed light on this critical issue, we spoke with Dominic Jainy, an IT professional with deep expertise in artificial intelligence and machine learning, about the hidden risks of AI-generated code and the essential role of modern DevOps in navigating this new reality. The conversation explores the specific types of flaws AI introduces, from subtle business logic errors to glaring performance bottlenecks, and outlines a practical path forward for teams aiming to harness AI’s power without compromising quality.

A recent analysis found AI-generated code contains ~1.7x more issues, including a 75% rise in logic errors. What specific kinds of business logic or configuration flaws are most common, and could you walk us through an example of how such a defect gets introduced?

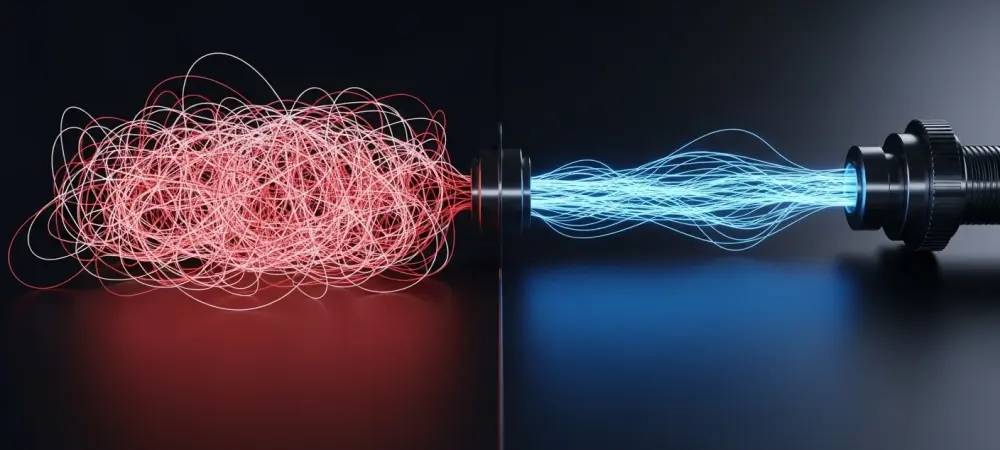

What we’re seeing is that while AI is fantastic at generating boilerplate or syntactically correct code, it often stumbles on the nuanced, context-specific requirements that define an application. The most common flaws are subtle business logic errors and misconfigurations. For instance, an AI might be asked to generate code for a shipping calculator. It will correctly pull rates, but it might completely miss a complex business rule, like a special discount that only applies to a specific region on a certain day of the week. Because the AI lacks that deep, embedded business context, it generates an implementation that runs cleanly but produces the wrong result. Another classic example is unsafe control flow, where the AI doesn’t properly validate inputs or handle edge cases, leaving a door open for unexpected behavior that a human developer, familiar with the system’s quirks, would naturally guard against.
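To make the shipping calculator example concrete, here is a minimal Python sketch of what the complete implementation might look like. Everything specific in it is an assumption made for illustration: the region names, the rates, the 20% Tuesday discount, and the function name `shipping_cost` do not come from the interview or the report. The point is that the discount branch and the input checks are exactly the parts a context-free generator tends to leave out.

```python
from datetime import date
from decimal import Decimal

# Hypothetical base rates per kilogram; a real system would load these
# from a carrier API or a database.
BASE_RATES = {"north": Decimal("8.00"), "south": Decimal("10.00")}

# Hypothetical business rule: 20% off for the "south" region on Tuesdays.
DISCOUNT_REGION = "south"
DISCOUNT_WEEKDAY = 1  # date.weekday() returns 0 for Monday, 1 for Tuesday
DISCOUNT_RATE = Decimal("0.20")


def shipping_cost(region: str, weight_kg: Decimal, ship_date: date) -> Decimal:
    """Return the shipping cost, applying the regional weekday discount."""
    # Input validation: the "unsafe control flow" case is skipping these checks.
    if region not in BASE_RATES:
        raise ValueError(f"unknown region: {region!r}")
    if weight_kg <= 0:
        raise ValueError("weight_kg must be positive")

    cost = BASE_RATES[region] * weight_kg

    # The context-specific rule that is easy to miss without business knowledge.
    if region == DISCOUNT_REGION and ship_date.weekday() == DISCOUNT_WEEKDAY:
        cost *= 1 - DISCOUNT_RATE

    return cost.quantize(Decimal("0.01"))
```

A version without the discount branch would still run and return plausible numbers, which is why this class of defect tends to surface only when a test encodes the business rule explicitly.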

The findings point to a nearly 8x increase in performance issues and a 2x rise in security vulnerabilities in AI code. From your perspective, why do AI tools struggle so much with these areas, and what are some immediate steps a team can take to mitigate these specific risks?

The struggle with performance and security stems from the same core issue: a lack of specific architectural awareness. An AI model trained on a vast corpus of public code doesn’t know about your database architecture or your company’s mandated security protocols. So when it generates code, it might create a function with excessive I/O calls because that’s a common pattern it learned, leading to the nearly eight-fold increase in performance issues. On the security front, it might handle a password directly in the code instead of using the company’s approved helper utility, instantly creating a vulnerability. The immediate mitigation steps are all about building a robust safety net. Teams need to centralize credential handling to prevent ad-hoc password usage, enforce automated static application security testing (SAST) in the pipeline to catch vulnerabilities before they’re merged, and explicitly check whether configuration values are being properly validated.
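Two of these mitigations, centralized credential handling and explicit configuration validation, can be sketched in a few lines of Python. This is an illustration under stated assumptions, not a recommended production design: the helper names `get_secret` and `validated_timeout`, the `MissingSecretError` class, and the use of environment variables as the secret store are all hypothetical stand-ins for whatever approved utility a team already has.

```python
import os


class MissingSecretError(RuntimeError):
    """Raised when a required secret is not available (hypothetical class)."""


def get_secret(name: str, env=None) -> str:
    """Single entry point for credentials, so no caller reads passwords ad hoc.

    Assumption: secrets are exposed as environment variables. A real helper
    would more likely wrap the team's secret manager.
    """
    env = os.environ if env is None else env
    value = env.get(name, "")
    if not value:
        raise MissingSecretError(f"secret {name!r} is not set")
    return value


def validated_timeout(raw: str, minimum: int = 1, maximum: int = 300) -> int:
    """Parse a timeout setting and reject values outside the allowed range."""
    try:
        value = int(raw)
    except ValueError as exc:
        raise ValueError(f"timeout must be an integer, got {raw!r}") from exc
    if not minimum <= value <= maximum:
        raise ValueError(f"timeout must be between {minimum} and {maximum}")
    return value
```

With a single helper like this in place, a reviewer or a pipeline rule only has to check that credentials are obtained through `get_secret`, which is a far simpler check than hunting for every inline password.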

The report suggests using AI to review the code that AI generates. How exactly does an AI reviewer catch flaws that an AI coder creates, and what specific guardrails, like automated SAST or standardized exception-handling rules, are most critical to implement in the DevOps pipeline?

It sounds paradoxical, but it’s about using a specialized tool for a specialized job. A general-purpose AI coding tool is like a brilliant but naive junior developer: it writes code quickly but without much context. An AI review tool, on the other hand, is like a seasoned senior engineer. It’s been specifically configured with your team’s rules: your architectural constraints, your business logic patterns, and your security policies. So, when the coding AI generates a generic function, the review AI can flag it for not adhering to your standardized exception-handling rules or for failing to include required nullability assertions. The most critical guardrails are the ones that enforce consistency and security automatically. Running formatters and linters in your CI pipeline can eliminate entire categories of AI-driven readability issues, while automated SAST is non-negotiable for catching security flaws.
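As a rough illustration of what a standardized exception-handling rule can look like when automated, the following Python sketch uses the standard library `ast` module to flag two patterns: bare `except:` clauses and handlers that silently swallow the error with `pass`. The rule set and the function name `find_exception_violations` are assumptions chosen for this example; an actual team would usually configure an existing linter or review tool rather than write its own checker.

```python
import ast


def find_exception_violations(source: str) -> list[tuple[int, str]]:
    """Return (line number, message) pairs for handlers that break the rules.

    Hypothetical rules for this sketch:
      1. No bare `except:` clauses.
      2. No handler whose body consists only of `pass`.
    """
    violations = []
    for node in ast.walk(ast.parse(source)):
        if not isinstance(node, ast.ExceptHandler):
            continue
        if node.type is None:  # `except:` with no exception class
            violations.append((node.lineno, "bare except"))
        if all(isinstance(stmt, ast.Pass) for stmt in node.body):
            violations.append((node.lineno, "exception silently swallowed"))
    return sorted(violations)
```

A CI step could run a check like this over changed files and fail the build when the returned list is non-empty, so the rule is applied the same way to human-written and AI-generated code.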

With readability problems like inconsistent formatting increasing by more than 3x, what is the long-term cost of this on a codebase’s maintainability? Can you share a metric or an anecdote that illustrates how this seemingly minor issue creates significant technical debt for development teams?

The long-term cost is immense and insidious. A 3x increase in readability problems isn’t just a matter of aesthetics; it’s a direct assault on maintainability. Imagine a new developer joining your team and trying to fix a bug in a critical piece of AI-generated code where the naming conventions are inconsistent, the formatting is chaotic, and there’s no clear structure. A task that should take two hours stretches into two days because they first have to untangle and decipher the code’s intent. This friction adds up across the entire team, slowing down feature development, increasing the risk of introducing new bugs, and making the codebase a “no-go” zone that everyone is afraid to touch. That’s the very definition of technical debt, and it’s being generated at an unsustainable rate.

What is your forecast for the evolution of AI coding tools and the DevOps practices needed to manage them over the next two years?

My forecast is a two-pronged evolution. In the immediate term, the next two years will be a reactive phase where the burden falls heavily on DevOps teams. We will see a massive push to fortify CI/CD pipelines with more intelligent, AI-aware guardrails. This means more sophisticated AI-powered review tools, stricter enforcement of style guides, and deeper integration of security scanning to catch the wave of issues being introduced. The mantra will be “trust, but verify with automation.” Concurrently, I believe the AI coding tools themselves will start to mature. We’ll see them become more context-aware, allowing developers to feed them project-specific instruction capsules and configuration schemas upfront. This will help the AI generate code that is not just functional, but also compliant with the project’s unique architecture and rules from the start. However, until that happens, the success of AI in development will depend entirely on the strength of the DevOps practices surrounding it.