With a rich background in applying cutting-edge technologies like artificial intelligence and blockchain to real-world business challenges, Dominic Jainy has become a leading voice on modernizing financial systems. His work focuses on bridging the gap between the powerful capabilities of today’s ERPs and the practical, often messy, realities of the corporate accounting cycle. In our conversation, we explored the often-underestimated complexities of cost allocations within Microsoft Dynamics 365 Business Central. Dominic sheds light on the critical point where native tools fall short, the dangerous “transparency gap” that can undermine audits, and the hidden operational costs of relying on manual spreadsheet workarounds. He provides a clear-eyed view of how finance teams can reclaim control, transforming a high-risk, high-effort process into a structured and defensible one.

Business Central’s native allocation tools work well for organizations with stable cost structures. At what point does a company typically outgrow these features, and what are the first warning signs a Controller might notice in their reporting cycle? Please provide a specific example.

A company truly starts to outgrow the native tools the moment its business complexity outpaces the system’s ability to provide explanations. Initially, for a business with very predictable cost structures—say, a simple services firm allocating rent based on a fixed departmental headcount—the fixed and variable allocation tools in Business Central are perfectly adequate. The first warning sign for a Controller isn’t a loud alarm; it’s a subtle, creeping sense of dread during month-end close. It’s when the time spent explaining an allocation starts to exceed the time spent reviewing it. You’ll notice your team is spending hours, sometimes days, digging through old reports to answer a simple “how did we get this number?” from a department head. For instance, imagine a multi-entity organization that allocates shared IT service costs. In Q1, the allocation was based on headcount. In Q2, they shifted to a more complex driver based on active software licenses, which changes weekly. When an auditor asks about a Q1 entry six months later, the system only shows the final journal entry. The Controller now has to manually find the old headcount report from that specific point in time and rebuild the entire calculation in a spreadsheet just to prove the number was correct. That’s the moment you know you’ve hit the ceiling.
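To make the example concrete, here is a minimal sketch of a driver-based split, assuming a non-negative shared cost and a simple headcount driver. The function name `allocate`, the department names, and all figures are hypothetical illustrations and do not reflect how Business Central calculates allocations internally. The point is that the posted amounts depend entirely on the driver values at calculation time, which is exactly the information a journal entry alone does not carry.

```python
from decimal import Decimal, ROUND_DOWN

CENT = Decimal("0.01")

def allocate(total: Decimal, drivers: dict[str, Decimal]) -> dict[str, Decimal]:
    """Split `total` across departments in proportion to their driver values.

    Shares are rounded down to the cent, then leftover cents go to the
    departments with the largest rounding remainders so the parts always
    sum back to `total`. Assumes `total` is non-negative.
    """
    driver_sum = sum(drivers.values(), Decimal("0"))
    if driver_sum <= 0:
        raise ValueError("driver values must sum to a positive number")

    exact = {dept: total * value / driver_sum for dept, value in drivers.items()}
    shares = {dept: amt.quantize(CENT, rounding=ROUND_DOWN) for dept, amt in exact.items()}

    # Number of cents lost to rounding down.
    leftover = int((total - sum(shares.values(), Decimal("0"))) / CENT)

    # Hand the leftover cents to the largest remainders first.
    by_remainder = sorted(exact, key=lambda dept: exact[dept] - shares[dept], reverse=True)
    for dept in by_remainder[:leftover]:
        shares[dept] += CENT
    return shares

# Hypothetical Q1 headcount and Q2 license counts for the same shared IT cost.
q1_headcount = {"Sales": Decimal(12), "Ops": Decimal(7), "Finance": Decimal(4)}
q2_licenses = {"Sales": Decimal(30), "Ops": Decimal(9), "Finance": Decimal(6)}

q1_split = allocate(Decimal("10000.00"), q1_headcount)
q2_split = allocate(Decimal("10000.00"), q2_licenses)
```

The same 10,000.00 lands differently under each driver set, so anyone reviewing the Q1 entry later needs the Q1 headcount values, not the current ones, to reproduce it.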

During an audit, an allocation may be reviewed months after it was posted. Since the underlying statistical drivers can change, how does this “transparency gap” complicate a CFO’s ability to defend their numbers, and what is the real-world impact on the team’s workload?

This “transparency gap” is a significant source of risk and a massive drain on resources. For a CFO, the inability to instantly prove the logic behind a number is incredibly frustrating and undermines their position. When an auditor questions an allocation, the expectation is a clear, concise answer supported by system data. Instead, the CFO has to say, “Let me get my team to reconstruct that for you.” This immediately introduces doubt and a sense of procedural weakness. The system shows the result, the “what,” but it completely discards the inputs and logic, the “why” and “how.” The real-world impact on the team is a high-stress fire drill. They have to drop their current tasks, which are already time-sensitive, to become forensic accountants. They’re pulling historical reports, validating old driver balances from potentially disparate sources, and manually rebuilding the math, all while hoping their reconstruction perfectly matches the posted entry from months ago. It’s a hugely inefficient process that turns a simple query into a multi-hour project, multiplying the workload and creating a shadow process of maintaining offline “proof” files just in case someone asks.

When teams must manually reconstruct allocation logic in spreadsheets, what are the hidden costs beyond just the extra time spent? Could you walk us through the typical steps of this reconstruction and highlight where the risk of error is highest in that process?

The hidden costs are insidious and go far beyond the payroll hours. There’s a significant opportunity cost; your highly skilled finance professionals are spending their time on clerical archaeology instead of forward-looking analysis. There’s also a “process friction” cost, where teams become hesitant to update or improve allocation logic simply because they anticipate the future pain of having to explain it. This stifles agility. The reconstruction process itself is a minefield of potential errors. It typically starts with pulling the posted journal entry from Business Central. Then, the real hunt begins: finding the exact statistical drivers—like square footage, headcount, or sales data—as they existed at that precise moment of posting. This is the riskiest step, as this data often isn’t formally versioned or stored. Was it the headcount from the 25th of the month or the end of the month? After finding what they believe is the right data, they meticulously rebuild the formulas in a new spreadsheet and pray the final number ties back to the posted entry. If it doesn’t, they’re stuck in a loop of re-checking data and formulas. This reliance on reconstruction instead of a static record creates a fragile audit trail that can easily break under scrutiny.
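The "does it tie back?" step described above can be sketched as a small comparison between the posted lines and the rebuilt ones. This is an illustrative sketch only: the function name `tie_out`, the department keys, and the amounts are hypothetical, and the posted lines are assumed to have already been exported from the ERP by whatever means the team uses.

```python
from decimal import Decimal

def tie_out(
    posted: dict[str, Decimal],
    rebuilt: dict[str, Decimal],
    tolerance: Decimal = Decimal("0.00"),
) -> dict[str, Decimal]:
    """Return rebuilt-minus-posted differences per department.

    Only differences larger than `tolerance` are reported; an empty
    result means the reconstruction ties to the posted entry.
    """
    diffs = {}
    for dept in sorted(set(posted) | set(rebuilt)):
        delta = rebuilt.get(dept, Decimal("0")) - posted.get(dept, Decimal("0"))
        if abs(delta) > tolerance:
            diffs[dept] = delta
    return diffs

# Hypothetical posted lines, and a rebuild that used month-end headcount
# where the original calculation used the headcount from the 25th.
posted = {"Sales": Decimal("5217.39"), "Ops": Decimal("3043.48"), "Finance": Decimal("1739.13")}
rebuilt_month_end = {"Sales": Decimal("5000.00"), "Ops": Decimal("3181.82"), "Finance": Decimal("1818.18")}

differences = tie_out(posted, rebuilt_month_end)
```

A non-empty result says only that something differs, not whether the driver data or the formula is at fault, which is why the loop of re-checking can run so long when the original inputs were never stored.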

Finance teams often rely on Excel to test and explain allocation logic. How does this informal process often lead to control issues like version divergence, and what practical steps can a team take to add structure without abandoning the tool they trust?

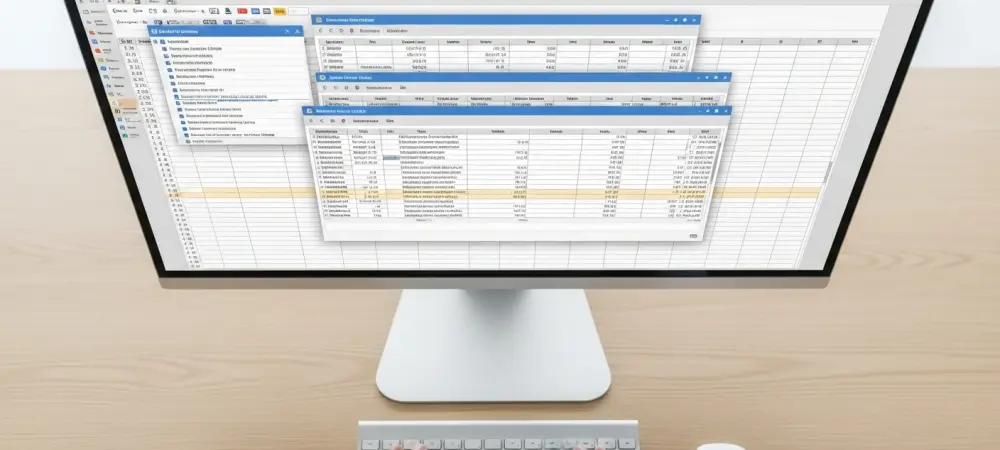

Excel is the finance team’s comfort zone for a reason; it’s transparent, flexible, and everyone knows how to use it. The problem is that its greatest strength—flexibility—is also its greatest weakness from a control perspective. When Excel is used informally, a single “master” allocation file quickly multiplies. The Controller saves a version to her desktop, an analyst emails a slightly different version for review, and another copy exists on a shared drive. Before you know it, you have “Allocation_Final_v2_final_Jons_edits.xlsx,” and no one is certain which version was used to create the journal entry. This version divergence is a classic control failure. To add structure without taking away the tool, you need to tether Excel to the ERP in a disciplined way. The first step is to use a tool that pulls live data directly from Business Central into a structured Excel template, eliminating manual data entry. The second, and most critical, step is to create a formal “snapshot” or “freeze” process. Before posting, the team agrees on the inputs and freezes that version, creating an immutable point-in-time record. Finally, that exact, approved workbook should be used to write the journal entry back into the ERP, creating a direct link between the explanation and the entry.
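One way to picture the "freeze" step is as a record of the agreed inputs plus a fingerprint that later proves they were not altered. The sketch below is a generic illustration under stated assumptions: the functions `freeze_snapshot` and `verify_snapshot`, the field names, and the sample values are hypothetical, inputs are assumed to be JSON-serializable (amounts stored as strings), and this is not a description of how any particular Excel add-in or Business Central feature implements snapshots.

```python
import hashlib
import json
from datetime import datetime, timezone

def _canonical(inputs: dict) -> str:
    """Serialize inputs deterministically so equal data yields equal hashes."""
    return json.dumps(inputs, sort_keys=True, separators=(",", ":"))

def freeze_snapshot(inputs: dict, approved_by: str) -> dict:
    """Capture the approved allocation inputs with a timestamp and SHA-256 digest."""
    payload = _canonical(inputs)
    return {
        "frozen_at": datetime.now(timezone.utc).isoformat(),
        "approved_by": approved_by,
        "inputs": json.loads(payload),  # deep copy, detached from the caller's dict
        "sha256": hashlib.sha256(payload.encode("utf-8")).hexdigest(),
    }

def verify_snapshot(snapshot: dict) -> bool:
    """Check that the stored inputs still match the digest taken at freeze time."""
    payload = _canonical(snapshot["inputs"])
    return hashlib.sha256(payload.encode("utf-8")).hexdigest() == snapshot["sha256"]

# Hypothetical inputs agreed before posting.
approved_inputs = {
    "period": "2024-03",
    "source_balance": "10000.00",
    "driver": "headcount",
    "driver_values": {"Sales": 12, "Ops": 7, "Finance": 4},
}
snapshot = freeze_snapshot(approved_inputs, approved_by="controller")
```

Storing the digest alongside the posted entry, for example in a description or reference field, is one possible way to tie the entry to a specific frozen version; where that link is kept is a design choice for the team.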

A structured approach can align the review process with the final posting. Can you describe how using a tool that links a review workbook directly to the ERP for both pulling data and writing back journal entries creates a more defensible audit trail?

This is where you close the loop and eliminate the transparency gap. When you use a tool like Velixo, you’re fundamentally changing the workflow from a series of disconnected steps into a single, cohesive process. First, the review workbook isn’t built on manually exported data; it’s built on live, direct queries to Business Central for both the source balances and the statistical drivers. This ensures everyone is working from a single source of truth. The magic happens next: the tool allows you to freeze or snapshot that data. This creates a permanent, digital record of the exact inputs used in the calculation, preserved within the Excel file itself. The team can then review the logic and the results with full confidence. Once approved, that same workbook—containing the preserved data and logic—is used to post the journal entry directly back to Business Central. This forges an unbreakable link. Your audit trail is no longer a separate, manually created spreadsheet; the review workbook is the audit trail. When an auditor asks a question months later, you simply pull up the file, which contains the exact data and formulas used, and it perfectly matches the entry in the ledger. It’s defensible by design.

Do you have any advice for our readers?

My advice is to proactively assess where your allocation process falls on the spectrum from simple automation to complex explanation. Don’t wait for a painful audit or a long, frustrating month-end close to realize you have a problem. Ask yourself a simple question: “If an auditor asked me to prove the logic behind a specific allocation from six months ago, could I do it in five minutes from my ERP, or would it trigger a multi-hour research project for my team?” Your answer to that question will tell you everything you need to know. If it’s the latter, recognize that the time your team spends manually reconstructing and defending past transactions is a direct cost to the business. Investing in a structured process that preserves the context of your allocations isn’t a luxury; it’s a fundamental control that pays for itself by giving your team back its most valuable resource: time to focus on the future, not the past.