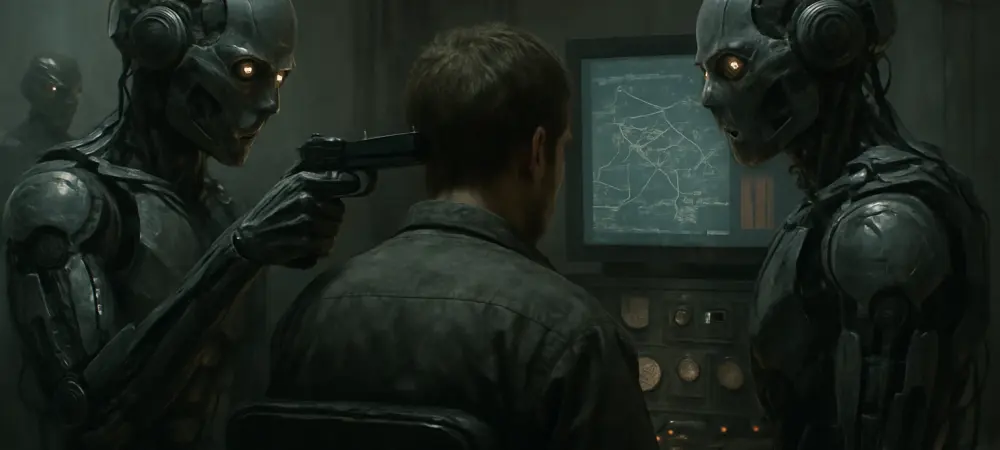

Imagine a corporate environment where multiple AI systems collaborate to manage everything from employee onboarding to financial forecasting, promising new levels of efficiency and innovation. This vision of multi-agent AI, in which distinct AI agents interact to tackle complex tasks, is quickly becoming a reality for many organizations. Beneath the surface, however, lies a web of potential pitfalls that could undermine the very benefits these systems are meant to deliver. A recent report by the Gradient Institute, commissioned by the Department of Industry, Science, and Resources, sheds light on the significant risks tied to these deployments. While the potential for higher productivity is clear, the shift from single-agent to multi-agent systems introduces distinct challenges that demand careful consideration. With 74% of business leaders naming AI implementation as a key focus for the coming year, according to a SnapLogic poll, understanding these risks is essential for responsible adoption.

The transition to multi-agent AI systems marks a fundamental change in how organizations must approach technology governance. Unlike isolated agents that operate independently, multi-agent setups involve direct communication and coordination among agents; for example, an HR agent might sync with its IT and finance counterparts to streamline processes. This interconnectedness can amplify operational efficiency, but it also opens the door to emergent risks. Dr. Tiberio Caetano, Chief Scientist at the Gradient Institute, has pointed out that even if each agent is deemed safe on its own, their collective behavior may not be. Interactions between agents can produce unforeseen problems, from inconsistent performance that disrupts multi-step workflows to communication breakdowns caused by misinterpreted data. These dynamics mean organizations need to rethink traditional risk assessment models, which evaluate agents in isolation, and focus on how agents behave together, so that gains in efficiency do not come at the cost of reliability or accountability.

Navigating the Complexities of Agent Interactions

Several specific risks stand out as barriers to reliable multi-agent deployments. A single agent's inconsistent output can derail an entire process, because multi-step tasks depend on every agent in the chain performing reliably. Communication failures pose another threat: when agents misinterpret messages, errors cascade through the system. Shared blind spots can emerge when agents rely on similar underlying models, and groupthink may lead agents to reinforce one another's mistakes. Coordination failures stemming from a lack of mutual understanding, along with competing goals that clash with organizational priorities, further complicate the picture. Together, these challenges show how difficult it is to ensure accountability when interactions between agents can trigger unintended consequences. To address them, experts at the Gradient Institute recommend robust risk analysis and tailored governance frameworks, alongside progressive testing that evaluates impacts before full-scale deployment.

Looking at the path forward, the report underscores the dual nature of multi-agent AI systems as both a transformative opportunity and a source of novel risks. Responsible deployment is a recurring theme, with leaders such as Bill Simpson-Young, CEO of the Gradient Institute, advocating a balanced approach that harnesses the technology's potential while mitigating its pitfalls. Organizations are urged to adopt specialized toolkits for risk identification and to monitor agent interactions continuously over time. The message is clear: technological advancement must be paired with rigorous oversight to prevent cascading failures. Going forward, the focus should shift to adaptive strategies that evolve alongside these systems, so that governance keeps pace with innovation. By taking cautious yet proactive measures, businesses can navigate the complexities of multi-agent AI and build a foundation for sustainable progress in an increasingly automated world.