Graphics Processing Units (GPUs) have become a cornerstone of modern computing. They began as dedicated graphics-rendering hardware and now power advances in artificial intelligence (AI) and scientific research. Continuous innovation and rising computational demands across many fields have driven this transformation. The history, architecture, and applications of GPUs show how important and versatile they have become.

The Evolution of GPUs

The term "GPU" was popularized in 1999, when Nvidia marketed its GeForce 256 as "the world’s first GPU." Before then, comparable hardware was generally called a graphics card or video card. The GeForce 256 performed hardware transform and lighting (T&L), geometry calculations that had previously been done in software on the CPU. Moving this work onto dedicated hardware marked the beginning of the GPU’s role as a specialized accelerator that takes demanding computation off the CPU.

Early GPUs were essentially Application-Specific Integrated Circuits (ASICs) built around fixed-function pipelines. Their vertex and pixel processing could be configured but not programmed, which made them efficient at rendering but inflexible. The shift toward programmability began with the programmable vertex and pixel shaders introduced in DirectX 8 (2000). Unified shader architectures followed in 2006, using a single pool of processing units for all shader types. Nvidia’s CUDA platform arrived in 2007 and was a turning point. It let developers write general-purpose programs that tap the GPU’s parallel processing power for applications far beyond graphics.

Modern GPUs are the product of decades of refinement. They now handle a broad range of workloads beyond graphics rendering, including AI and many scientific disciplines. This breadth has opened new avenues for performance and innovation in computation-heavy fields and has made GPUs critical components of contemporary computing.

Discrete vs. Integrated GPUs

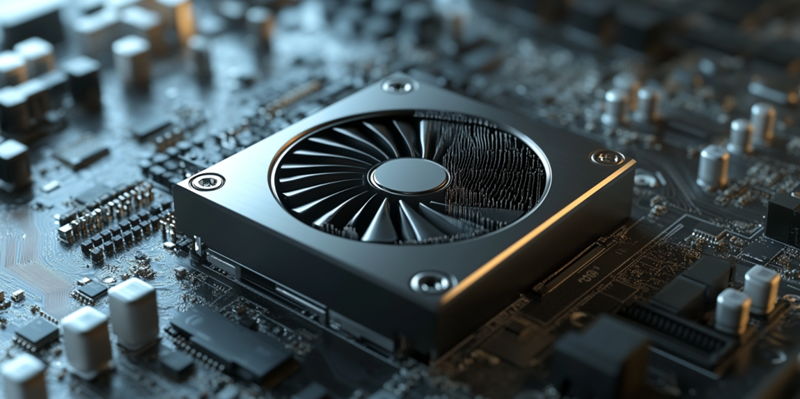

Different computing needs have led to two primary types of GPUs: discrete and integrated. Discrete GPUs are standalone units with their own dedicated memory. This makes them well suited to demanding work in gaming PCs, workstations, and scientific research systems. They typically connect to the rest of the system through a PCI Express (PCIe) slot, which provides a high-bandwidth link to the CPU and main memory.

Integrated GPUs, by contrast, are built into the same chip or package as the CPU and share system memory with it. This makes them cheaper to produce and more power-efficient, so they are standard in laptops, smartphones, and mainstream desktop computers. They generally cannot match the performance of discrete GPUs, but they are sufficient for most everyday computing tasks. Their lower power draw matters most in battery-powered devices and budget systems.

The choice between discrete and integrated GPUs largely depends on the specific use case. High-performance gaming, professional graphic design, and scientific research typically necessitate the power and capabilities of discrete GPUs. In contrast, integrated GPUs are excellent for regular desktop applications, casual gaming, and mobile computing. Despite their differences, both types of GPUs play significant roles in modern computing, ensuring that a wide range of performance requirements and budget constraints can be met.

Architectural Differences Between CPUs and GPUs

The architectural differences between CPUs and GPUs have profound implications for their respective performance profiles and ideal use cases. Central Processing Units (CPUs) are traditionally optimized for single-thread performance. They use techniques like out-of-order execution, speculative execution, and branch prediction to run a single stream of instructions, including branch-heavy code, as fast as possible. Their small number of powerful cores can also switch rapidly between tasks. This makes CPUs well suited to running operating systems, managing I/O operations, and executing logic that must proceed step by step.

GPUs, in contrast, are designed for parallel workloads. They contain many simpler cores that apply the same operations to different pieces of data at the same time, which lets them process large volumes of data quickly. This design pays off for tasks that break down into many small, independent operations. Examples include rendering images, processing video, and running large simulations in scientific research and AI. Code dominated by branching and sequential decision-making remains better suited to the CPU.
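The independence that makes a workload GPU-friendly can be illustrated without a GPU. The plain-Python sketch below emulates a CUDA-style kernel launch for SAXPY (out = a*x + y). `saxpy_kernel` does the work of one thread for one element, and `launch` runs one emulated thread per index. Both names are hypothetical, and no GPU is used. Running the threads in shuffled order gives the same result, and that order-independence is what allows a GPU to run them all at once.

```python
import random


def saxpy_kernel(i, a, x, y, out):
    """Work done by one emulated GPU thread: compute a single output element.

    The thread reads only x[i] and y[i] and writes only out[i], so it never
    depends on what any other thread computes.
    """
    out[i] = a * x[i] + y[i]


def launch(kernel, n, *args, shuffle=False):
    """Emulate launching n independent threads of `kernel`, one per index.

    A GPU would run these threads concurrently across many cores. Here they
    run one at a time, optionally in random order, to show that the order
    does not matter when every thread is independent.
    """
    indices = list(range(n))
    if shuffle:
        random.shuffle(indices)
    for i in indices:
        kernel(i, *args)


def saxpy(a, x, y, shuffle=False):
    """Compute a * x + y element-wise using the emulated launch."""
    if len(x) != len(y):
        raise ValueError("x and y must have the same length")
    out = [0.0] * len(x)
    launch(saxpy_kernel, len(x), a, x, y, out, shuffle=shuffle)
    return out


print(saxpy(2.0, [1.0, 2.0, 3.0], [10.0, 20.0, 30.0], shuffle=True))  # [12.0, 24.0, 36.0]
```

A real CUDA kernel would compute `i` from its block and thread indices instead of receiving it from a Python loop. The one-thread-per-element structure is the same.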

Another key distinction is how the two handle multitasking. CPUs switch quickly between tasks, so they can manage many concurrent processes efficiently. GPUs are optimized for large, uniform workloads, and switching between unrelated tasks is comparatively costly for them. As a result, GPUs are poorly suited to general-purpose multitasking. They excel at parallel workloads, where hundreds or thousands of cores work on the same problem at once.

The Role of GPUs in Gaming

Gaming has historically been a major driver of GPU development, continually raising the bar for graphical fidelity and performance. Games keep demanding more sophisticated visuals and smoother frame rates, and GPUs have evolved to meet those demands. Nvidia’s Turing architecture (2018) brought hardware-accelerated real-time ray tracing to consumer GPUs. Ray tracing simulates the paths of light rays through a scene. It produces more realistic reflections, shadows, and lighting than rasterization techniques alone, and it has set a new standard for real-time graphics and immersive games.
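At its core, ray tracing repeatedly asks whether a ray of light hits an object. The minimal sketch below tests one ray against a sphere by solving a quadratic equation. The function name and the use of a sphere are illustrative assumptions. Real-time renderers test rays against triangle meshes using acceleration structures. A renderer traces at least one such ray per pixel, and each pixel’s ray is independent of the others, so the work maps naturally onto thousands of GPU threads.

```python
import math


def ray_sphere_hit(origin, direction, center, radius):
    """Return the ray parameter t of the nearest hit in front of the origin, or None.

    The ray is origin + t * direction. A hit satisfies
    |origin + t * direction - center|^2 = radius^2, a quadratic in t.
    """
    oc = [o - c for o, c in zip(origin, center)]           # vector from sphere center to ray origin
    a = sum(d * d for d in direction)                       # quadratic coefficient of t^2
    b = 2.0 * sum(d * v for d, v in zip(direction, oc))     # coefficient of t
    c = sum(v * v for v in oc) - radius * radius            # constant term
    disc = b * b - 4.0 * a * c
    if disc < 0.0:
        return None  # no real roots: the ray misses the sphere
    sqrt_disc = math.sqrt(disc)
    # Check the nearer root first; a negative t lies behind the ray origin.
    for t in ((-b - sqrt_disc) / (2.0 * a), (-b + sqrt_disc) / (2.0 * a)):
        if t > 0.0:
            return t
    return None


# A ray along +z from the origin hits a unit sphere centered at z = 5 at t = 4.
print(ray_sphere_hit((0.0, 0.0, 0.0), (0.0, 0.0, 1.0), (0.0, 0.0, 5.0), 1.0))  # 4.0
```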

The gaming industry’s reliance on GPUs has also driven innovations in cooling solutions, power efficiency, and overall hardware performance. As games become more demanding, the need for powerful GPUs continues to grow, resulting in new architectures and technologies designed to deliver the highest levels of performance. Enhancements in GPU design have a ripple effect, benefiting other sectors that utilize the same technology. Improvements in energy efficiency, for example, are crucial not only for gaming but also for data centers and scientific computing environments where power consumption is a critical concern.

Fierce competition among GPU manufacturers has kept the pace of innovation high, giving gamers rapid access to new technology. Adaptive and variable rate shading reduce shading work in parts of the frame where less detail is needed. AI-enhanced graphics, such as AI-based upscaling, are also becoming increasingly common. Both improve visual quality and performance in modern games. As a result, GPUs have become integral to the gaming ecosystem, enabling developers to build visually stunning, highly immersive game worlds.

GPUs in Cryptocurrency Mining

The rise of cryptocurrency mining created a new and unexpected application for GPUs. Proof-of-work cryptocurrencies, such as Bitcoin and Ethereum before its 2022 switch to proof of stake, secure their networks through mining. Miners repeatedly hash a candidate block with different nonce values until one produces a hash below a target value. The winning block validates a batch of transactions and earns the miner a reward. Each attempt is independent of the others, so the search parallelizes well. GPUs, which can run enormous numbers of such calculations at once, quickly became the miners’ hardware of choice, and demand and sales surged.
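The search that miners perform can be sketched in a few lines. In this simplified version, a nonce is "valid" if the SHA-256 hash of the data plus the nonce starts with a given number of zero hex digits. The helper names `meets_target`, `search_worker`, and `mine` are hypothetical. Bitcoin’s actual scheme applies SHA-256 twice to an 80-byte block header and compares the result against a numeric target. What the sketch shows is the structure: every nonce can be checked independently, so the nonce range can be split across workers that a GPU would run concurrently.

```python
import hashlib


def meets_target(data: bytes, nonce: int, difficulty: int) -> bool:
    """Simplified proof-of-work check: hash must start with `difficulty` zero hex digits."""
    digest = hashlib.sha256(data + nonce.to_bytes(8, "big")).hexdigest()
    return digest.startswith("0" * difficulty)


def search_worker(data: bytes, difficulty: int, worker_id: int, num_workers: int, max_nonce: int):
    """Scan an interleaved slice of the nonce space: worker_id, worker_id + num_workers, ..."""
    for nonce in range(worker_id, max_nonce, num_workers):
        if meets_target(data, nonce, difficulty):
            return nonce  # first (smallest) valid nonce in this worker's slice
    return None


def mine(data: bytes, difficulty: int, num_workers: int = 4, max_nonce: int = 1_000_000):
    """Split the search across workers and return the smallest valid nonce found.

    The workers run one after another here; on a GPU they would run in parallel.
    """
    found = [search_worker(data, difficulty, w, num_workers, max_nonce) for w in range(num_workers)]
    hits = [n for n in found if n is not None]
    return min(hits) if hits else None


nonce = mine(b"block data", difficulty=3)
print(nonce, hashlib.sha256(b"block data" + nonce.to_bytes(8, "big")).hexdigest())
```

Each additional zero digit multiplies the expected work by 16. This exponential scaling of difficulty is why mining rewards raw parallel hashing throughput.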

The cryptocurrency mining boom caused periods of GPU shortages as miners and enthusiasts scrambled to acquire hardware for their mining operations. These episodes highlighted the versatility and computational power of GPUs on tasks far beyond their original design. Mining has since moved away from GPUs. Bitcoin mining is now dominated by ASICs built specifically for its hashing algorithm, which offer far better performance and energy efficiency than general-purpose GPUs. Ethereum’s move to proof of stake in 2022 ended mining on that network altogether.

Although GPU mining has largely faded, the mining era underscored both the adaptability of GPUs and the strong demand for them. It showed how GPUs could be applied to computationally intensive work far beyond gaming and traditional graphics rendering. That versatility continues to drive interest and innovation in GPU technology.

GPUs in Scientific Computing

GPUs have found a significant and transformative role in scientific computing, where their parallel processing capabilities are leveraged for complex simulations and data-intensive research. Fields such as molecular dynamics, protein folding, and climate modeling benefit immensely from the computational power of GPUs, allowing researchers to process large datasets and perform intricate calculations at unprecedented speeds. The use of GPUs in scientific research has enabled breakthroughs that were previously computationally prohibitive, driving advancements across various disciplines.

In molecular dynamics, for example, GPUs accelerate simulations of molecular interactions, shedding light on fundamental biological processes and aiding in the development of new drugs. Protein folding research, which aims to understand how proteins acquire their functional shapes, has also seen significant improvements due to GPU acceleration. These advances have profound implications for medical research, enabling scientists to explore complex biological systems with greater detail and accuracy.
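Molecular dynamics codes spend much of their time evaluating interactions between pairs of particles. The toy sketch below uses the Lennard-Jones potential, a common simple model for such interactions: V(r) = 4ε[(σ/r)^12 - (σ/r)^6]. Values are in reduced units with ε = σ = 1 by default. The sketch computes the total energy of a set of particles by giving each particle its own independent sum over all other particles, the same decomposition a GPU would use with one thread per particle. The function names are hypothetical. Production codes add distance cutoffs, neighbor lists, forces, and time integration, none of which is shown here.

```python
import math


def lj_pair(r, epsilon=1.0, sigma=1.0):
    """Lennard-Jones potential energy of one particle pair at separation r."""
    sr6 = (sigma / r) ** 6
    return 4.0 * epsilon * (sr6 * sr6 - sr6)


def particle_energy(i, positions, epsilon=1.0, sigma=1.0):
    """Work for one emulated GPU thread: particle i's interactions with all others."""
    total = 0.0
    for j, pos_j in enumerate(positions):
        if j != i:
            total += lj_pair(math.dist(positions[i], pos_j), epsilon, sigma)
    return total


def total_energy(positions, epsilon=1.0, sigma=1.0):
    """Total potential energy of the system.

    Each per-particle sum is independent, so a GPU could compute them all at once.
    Every pair is counted twice (once from each side), so the sum is halved.
    """
    per_particle = [particle_energy(i, positions, epsilon, sigma) for i in range(len(positions))]
    return 0.5 * sum(per_particle)


# Two particles at the potential minimum, r = 2**(1/6) * sigma, have energy -epsilon.
print(total_energy([(0.0, 0.0, 0.0), (2 ** (1 / 6), 0.0, 0.0)]))
```

The all-pairs loop costs O(N^2) work for N particles. This cost is why large simulations benefit so much from GPU parallelism and from the cutoff and neighbor-list techniques mentioned above.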

Climate modeling is another area where GPUs have made a substantial impact. The ability to simulate and analyze climate patterns with high precision is crucial for understanding and mitigating the effects of climate change. GPUs facilitate the processing of vast amounts of climate data, enabling researchers to create more accurate models and predictions. As GPU technology continues to advance, its applications in scientific computing are expected to expand further, driving new discoveries and innovations that will benefit society as a whole.
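Climate and weather models divide the atmosphere and oceans into grids and repeatedly update every cell from the values of its neighbors. The greatly simplified sketch below shows that pattern with a single explicit finite-difference step of 2D diffusion. It is an illustrative stand-in, not part of any real climate model, and the names `diffuse_step` and `alpha` are assumptions. Each cell’s new value depends only on the previous state, so every cell could be updated by its own GPU thread in the same step.

```python
def diffuse_step(grid, alpha=0.1):
    """One explicit finite-difference step of 2D diffusion on a rectangular grid.

    `alpha` is the dimensionless diffusion number; this explicit scheme is only
    stable for alpha <= 0.25. Boundary cells are held fixed.
    """
    if not 0.0 <= alpha <= 0.25:
        raise ValueError("alpha must be in [0, 0.25] for a stable explicit step")
    rows, cols = len(grid), len(grid[0])
    new = [row[:] for row in grid]  # write into a copy so every read sees the old state
    for i in range(1, rows - 1):
        for j in range(1, cols - 1):
            # Discrete Laplacian: sum of the four neighbors minus four times the cell.
            laplacian = (grid[i - 1][j] + grid[i + 1][j]
                         + grid[i][j - 1] + grid[i][j + 1]
                         - 4.0 * grid[i][j])
            new[i][j] = grid[i][j] + alpha * laplacian
    return new


# A single "hot" cell in the middle of a 5x5 grid spreads to its neighbors.
grid = [[0.0] * 5 for _ in range(5)]
grid[2][2] = 1.0
for row in diffuse_step(grid):
    print(row)
```

Writing into a separate copy matters. On a GPU, it prevents one thread from reading a neighbor value that another thread has already overwritten in the same step.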

The Impact of GPUs on AI

GPUs were originally used to enhance visual experiences in gaming and graphic design, handling complex image processing far more efficiently than central processing units (CPUs). The same ability to process large volumes of data in parallel has made them central to artificial intelligence. Training a neural network consists largely of multiplying large matrices and applying simple element-wise operations across many parameters. This is exactly the kind of uniform, data-parallel work that GPU architectures are designed for.

In AI, GPUs are crucial for training machine learning models. They sharply reduce training time, which makes it practical to train larger models on larger datasets and, in turn, to achieve more accurate predictions. They also accelerate inference, the process of running a trained model on new data. The thousands of small, efficient cores in a GPU, designed to work simultaneously, are what make it such a versatile tool in the technology sector.
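The core operation behind that training can be sketched briefly. Below, a fully connected neural-network layer is written in plain Python as a matrix multiplication followed by a bias and a ReLU activation. The function names are hypothetical. Real frameworks hand this work to optimized GPU libraries rather than Python loops. Each output element is an independent dot product, which is why a GPU can compute thousands of them at once.

```python
def matmul(a, b):
    """Multiply an (m x k) matrix by a (k x n) matrix, both given as lists of rows.

    Each out[i][j] is an independent dot product of row i of `a` and column j
    of `b`, so a GPU can assign one thread (or a tile of threads) per element.
    """
    k = len(b)
    if any(len(row) != k for row in a):
        raise ValueError("inner dimensions must match")
    n = len(b[0])
    return [[sum(row[p] * b[p][j] for p in range(k)) for j in range(n)] for row in a]


def dense_forward(x, w, bias):
    """Forward pass of a fully connected layer: relu(x @ w + bias).

    x: batch of inputs (batch x in_features), w: weights (in_features x out_features),
    bias: one value per output feature.
    """
    z = matmul(x, w)
    return [[max(0.0, z_ij + b_j) for z_ij, b_j in zip(z_row, bias)] for z_row in z]


print(matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]]))                            # [[19, 22], [43, 50]]
print(dense_forward([[1.0, 2.0]], [[1.0, -1.0], [0.5, -2.0]], [0.0, 1.0]))  # [[2.0, 0.0]]
```

Training repeats computations like this, together with the corresponding gradient calculations, over many batches and layers. That repetition is where the GPU’s parallelism pays off.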

Today, GPUs are used across many industries. In healthcare, they assist in medical imaging and diagnosis, and in finance, they accelerate trading algorithms. As computational demands continue to rise, their adaptability positions them to remain at the forefront of technological advancement. Their journey from fixed-function graphics hardware to general-purpose parallel processors shows how important and versatile GPUs have become in modern computing.