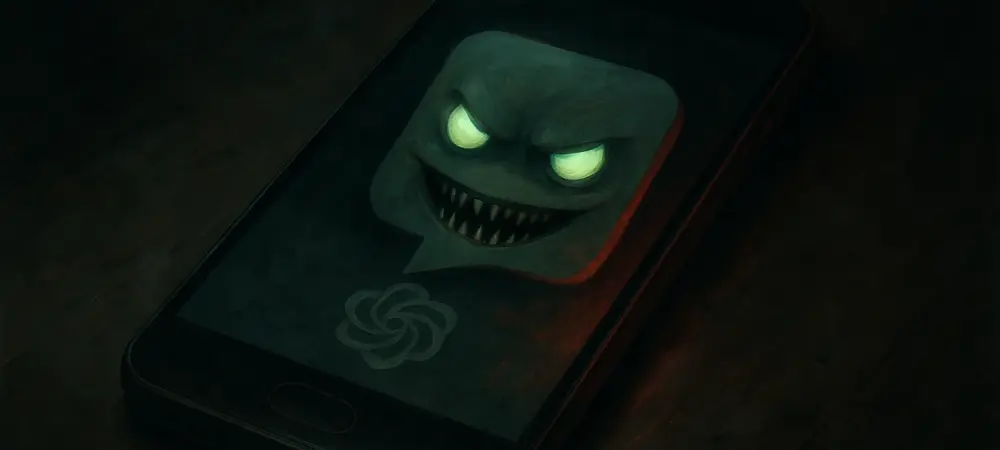

In an era when artificial intelligence tools like ChatGPT have become indispensable for millions of people, cybercriminals are exploiting that adoption with precision, causing significant data breaches and financial losses. Reports indicate that thousands of unsuspecting users have fallen victim to malicious apps masquerading as legitimate ChatGPT platforms. The trend exposes the dark side of AI’s rapid rise and raises urgent questions about digital safety in a tech-driven world. Growing reliance on AI chatbots for personal and professional tasks has created fertile ground for such deceptive schemes, which makes awareness critical. This analysis examines the emergence of fraudulent ChatGPT apps, their attack methods, the risks they pose, expert perspectives, future implications, and practical steps to mitigate these threats.

The Rise of Malicious ChatGPT Apps

Scale and Spread of the Threat

The proliferation of unofficial ChatGPT apps in third-party stores is a global cybersecurity challenge. Recent data from cybersecurity firms such as Appknox show that downloads of these unverified apps have reached the millions, with users across continents unknowingly exposing their devices to harm. This adoption reflects misplaced trust in platforms that look legitimate and promise quick access to AI capabilities.

Beyond download counts, security analysts quantify the impact through detection rates. Studies estimate that more than 15% of users who download these apps from unverified sources have encountered malicious software embedded in them. Such figures underscore the need for stricter controls and greater vigilance across digital communities worldwide.

The geographic spread of the problem complicates the landscape further, as malicious apps target diverse markets with localized branding and language adaptations. This tailored approach extends their reach and makes global cooperation against unauthorized app distribution imperative. Left unchecked, the growth of such platforms remains a formidable barrier to safe digital engagement.

Real-World Examples of Deceptive Apps

Concrete instances of counterfeit ChatGPT apps illustrate the cunning with which cybercriminals operate in unverified app ecosystems. Numerous reports have identified apps in third-party stores that replicate the branding and interface of the authentic ChatGPT tool, luring users with promises of seamless AI interaction. These deceptive clones often feature polished logos and convincing descriptions that mimic official offerings.

In documented cases, installation of these apps has led to severe consequences, such as unauthorized data harvesting and invasive surveillance. For instance, certain apps have been found to extract personal information, including contact lists and private messages, directly from users’ devices, resulting in identity theft and financial fraud. The fallout for individual users often includes compromised privacy and eroded trust in digital tools.

Moreover, the potential for corporate breaches adds another layer of concern, as employees downloading such apps on work devices inadvertently expose sensitive business data. Several incidents have highlighted how attackers exploit stolen credentials to infiltrate organizational systems, leading to significant operational disruptions. These real-world examples emphasize the pervasive danger posed by seemingly harmless downloads.

Technical Sophistication of Malicious ChatGPT Threats

Attack Mechanisms and Malware Frameworks

The technical prowess behind malicious ChatGPT apps reveals a disturbing level of ingenuity among cybercriminals. These apps often deploy advanced trojan loaders that disguise malicious code, enabling covert operations once installed on a device. Techniques such as code obfuscation, facilitated by tools like the Ijiami packer, ensure that harmful payloads remain hidden from standard detection methods.
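Packers generally encrypt or compress the code they protect, and encrypted data has a near-uniform byte distribution. One common heuristic, shown here as a minimal Python sketch rather than any particular vendor's detector, is to measure the Shannon entropy of a file's bytes and treat values close to the 8-bits-per-byte maximum as a hint of packing. The 7.2 threshold and the function names are illustrative assumptions.

```python
import math
from collections import Counter


def shannon_entropy(data: bytes) -> float:
    """Shannon entropy in bits per byte: 0.0 for empty input or one repeated byte, max 8.0."""
    if not data:
        return 0.0
    total = len(data)
    counts = Counter(data)  # iterating over bytes yields ints 0..255
    # H = sum over byte values of p * log2(1 / p), with p = count / total
    return sum((n / total) * math.log2(total / n) for n in counts.values())


def looks_packed(data: bytes, threshold: float = 7.2) -> bool:
    """Heuristic only: encrypted or compressed blobs sit close to 8 bits per byte."""
    return shannon_entropy(data) >= threshold


print(shannon_entropy(b"hello world" * 100), looks_packed(b"hello world" * 100))
print(shannon_entropy(bytes(range(256)) * 4), looks_packed(bytes(range(256)) * 4))
```

Legitimately compressed content such as images or nested archives also scores high, so a hit is a reason for closer inspection, not proof of malware. In practice the check would run on decompressed entries read from an APK, for example the bytes returned by `zipfile.ZipFile.read()` for a suspicious asset.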

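Attackers also use domain fronting through trusted cloud services such as Amazon Web Services or Google Cloud to mask their network activity. In domain fronting, the connection metadata visible on the network (the DNS lookup and the TLS Server Name Indication) names a reputable front domain, while the HTTP Host header inside the encrypted channel routes the request to the attacker's backend. Exfiltrated data therefore looks like ordinary traffic to a trusted provider, which makes it harder for security software to flag. The use of such infrastructure demonstrates a calculated approach to evading scrutiny.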
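Because domain fronting depends on the TLS Server Name Indication (SNI) and the HTTP Host header naming different hosts, a monitor that can see both values, such as a TLS-inspecting proxy, can look for that mismatch. The Python sketch below assumes connection records have already been extracted into a hypothetical `ConnectionRecord` type; the record format, function names, and domains are illustrative.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ConnectionRecord:
    """Hypothetical log record: one TLS connection and the HTTP request inside it."""
    sni: str          # server name from the TLS ClientHello (visible on the wire)
    host_header: str  # HTTP Host header (visible only after TLS interception)


def normalize_host(name: str) -> str:
    """Lower-case a hostname, drop a :port suffix and any trailing dot.

    IPv6 literals are not handled in this sketch.
    """
    name = name.strip().lower()
    if ":" in name:
        name = name.rsplit(":", 1)[0]
    return name.rstrip(".")


def fronting_candidates(records):
    """Return records whose SNI and Host header name different hosts."""
    return [r for r in records if normalize_host(r.sni) != normalize_host(r.host_header)]


records = [
    ConnectionRecord(sni="cdn.example.net", host_header="cdn.example.net"),
    ConnectionRecord(sni="CDN.example.net.", host_header="cdn.example.net:443"),
    ConnectionRecord(sni="cdn.example.net", host_header="collector.example.org"),
]
for record in fronting_candidates(records):
    print(f"possible fronting: SNI={record.sni} Host={record.host_header}")
```

A mismatch is not always malicious: HTTP/2 connection reuse can legitimately carry requests for several hostnames covered by the same certificate, so flagged records still need review.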

A critical aspect of these threats lies in the excessive permissions requested during installation, including access to SMS, contacts, and account credentials. Granting these permissions enables persistent surveillance and systematic theft of sensitive information, often without the user’s immediate awareness. This sophisticated design marks a significant evolution in mobile malware capabilities.
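The permissions an Android app requests are declared as `<uses-permission>` elements in its `AndroidManifest.xml`, so they can be audited before installation. This Python sketch assumes the manifest has already been decoded to plain XML (inside an APK it is stored in a binary format, which tools such as apktool can convert). The short list of sensitive permissions, the descriptions, and the sample package name are illustrative choices mapped to the SMS, contact, and account access described above, not an exhaustive policy.

```python
import xml.etree.ElementTree as ET

# Namespace used by android:* attributes in a decoded AndroidManifest.xml
ANDROID_NS = "http://schemas.android.com/apk/res/android"

# Illustrative subset of permissions tied to SMS, contacts and account access.
SENSITIVE_PERMISSIONS = {
    "android.permission.READ_SMS": "read stored SMS messages, including one-time passwords",
    "android.permission.RECEIVE_SMS": "intercept incoming SMS messages",
    "android.permission.READ_CONTACTS": "read the contact list",
    "android.permission.GET_ACCOUNTS": "list accounts registered on the device",
}


def risky_permissions(manifest_xml: str) -> dict:
    """Return the sensitive permissions a decoded manifest requests, with reasons."""
    root = ET.fromstring(manifest_xml.strip())
    requested = {
        element.get(f"{{{ANDROID_NS}}}name")
        for element in root.iter("uses-permission")
    }
    return {
        perm: SENSITIVE_PERMISSIONS[perm]
        for perm in sorted(p for p in requested if p)
        if perm in SENSITIVE_PERMISSIONS
    }


# Hypothetical manifest of a fake ChatGPT app.
SAMPLE_MANIFEST = """
<manifest xmlns:android="http://schemas.android.com/apk/res/android"
          package="com.example.fakegpt">
    <uses-permission android:name="android.permission.INTERNET" />
    <uses-permission android:name="android.permission.READ_SMS" />
    <uses-permission android:name="android.permission.READ_CONTACTS" />
</manifest>
"""

for permission, reason in risky_permissions(SAMPLE_MANIFEST).items():
    print(f"{permission}: {reason}")
```

A chat client has little reason to read SMS or contacts, so any hit from a list like this is worth questioning before granting access.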

Infection Tactics and User Deception

The methods employed to attract users to these malicious apps are as deceptive as they are effective. Polished listings in third-party app stores often promise enhanced AI features or premium ChatGPT functionalities, capitalizing on the allure of cutting-edge technology. Such marketing tactics exploit user curiosity and the desire for innovative tools.
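A simple screening heuristic for such lookalike listings is to flag any app whose display name uses ChatGPT or OpenAI branding while its package identifier differs from the official one. In this Python sketch, `OFFICIAL_PACKAGE` is assumed to be `com.openai.chatgpt` and should be confirmed against the official store listing; the brand terms, function names, and sample listings are hypothetical.

```python
import re

# Assumed official package id of the ChatGPT Android app; confirm on the official store listing.
OFFICIAL_PACKAGE = "com.openai.chatgpt"

# Hypothetical brand terms, compared after stripping spaces and punctuation.
BRAND_TERMS = ("chatgpt", "openai")


def uses_brand(app_label: str) -> bool:
    """True if the label contains a brand term, ignoring case, spaces and punctuation."""
    compact = re.sub(r"[^a-z0-9]", "", app_label.lower())
    return any(term in compact for term in BRAND_TERMS)


def is_suspicious_listing(app_label: str, package_name: str) -> bool:
    """Flag listings that borrow ChatGPT/OpenAI branding under a different package id."""
    # Exact comparison: Android package names are case-sensitive identifiers.
    return uses_brand(app_label) and package_name != OFFICIAL_PACKAGE


listings = [
    ("ChatGPT", "com.openai.chatgpt"),
    ("Chat-GPT Premium AI", "com.example.gptpremium"),
    ("Notes", "com.example.notes"),
]
for label, package in listings:
    print(label, package, "SUSPICIOUS" if is_suspicious_listing(label, package) else "ok")
```

A name check alone is easy to evade with creative spelling; a real vetting pipeline would also compare signing certificates and developer accounts.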

Once installed, the malware ensures its persistence through embedded native libraries that allow background execution, even when the app interface is not active. This stealthy operation facilitates the continuous collection of data, including banking codes and one-time passwords, which are then transmitted to remote servers. The seamless integration of these tactics heightens the risk of prolonged exposure.
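Native code ships inside an APK (a ZIP archive) as shared libraries under `lib/<abi>/`, so listing those entries is a quick first step when triaging an app that claims to be a simple chat client. The Python sketch below uses only the standard `zipfile` module; the function names are hypothetical.

```python
import zipfile
from collections import defaultdict


def group_native_libs(entry_names):
    """Group APK entries of the form lib/<abi>/<name>.so by ABI."""
    libs = defaultdict(list)
    for name in entry_names:
        parts = name.split("/")
        # Only direct children of lib/<abi>/ count as packaged native libraries.
        if len(parts) == 3 and parts[0] == "lib" and parts[2].endswith(".so"):
            libs[parts[1]].append(parts[2])
    return {abi: sorted(files) for abi, files in libs.items()}


def native_libraries(apk_path):
    """Open an APK (path or binary file object) and list its native libraries per ABI."""
    with zipfile.ZipFile(apk_path) as apk:
        return group_native_libs(apk.namelist())
```

Calling `native_libraries("suspect.apk")` on a hypothetical file returns a mapping such as ABI name to library file names. The presence of native libraries is not malicious in itself, since many legitimate apps include them; the output simply tells an analyst which binaries deserve a closer look.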

The systematic nature of data theft underscores the deliberate intent behind these apps, often mirroring established spyware families in their approach. By presenting a functional facade while conducting hidden surveillance, these threats exploit user trust in recognizable AI branding. This dual operation of utility and malice poses a unique challenge to unsuspecting individuals.

Expert Insights on the Growing AI Exploitation Trend

Cybersecurity analysts have sounded the alarm on how trust in popular AI brands like ChatGPT is being weaponized by malicious actors. Experts note that the familiarity of such platforms makes them an ideal vector for deception, as users are less likely to question the authenticity of apps bearing well-known names. This psychological manipulation is central to the success of these schemes.

Industry leaders also point to the challenges in detecting and mitigating these threats due to their advanced technical design. The rapid evolution of malware frameworks often outpaces traditional security measures, necessitating innovative approaches to threat identification. Analysts stress that current detection tools must adapt to address obfuscation and covert communication methods.

Furthermore, there is a strong consensus on the importance of user education in combating this trend. Specialists advocate for widespread campaigns to inform the public about the dangers of downloading apps from unverified sources and the need to scrutinize permissions before installation. Empowering users with knowledge remains a cornerstone of defense against AI exploitation.

Future Implications of Malicious AI App Threats

As AI technology becomes increasingly pervasive, the potential for more advanced malicious app frameworks looms large. Cybercriminals are likely to refine their tactics, developing malware that targets a broader range of corporate systems and personal data with even greater precision. This trajectory signals a pressing need for preemptive measures across sectors.

On a positive note, improvements in app store vetting processes and user awareness initiatives offer hope for curbing these threats. Enhanced scrutiny by platform providers, coupled with educational outreach, could significantly reduce the incidence of fraudulent downloads. However, without sustained efforts, the risk of escalating data breaches remains a tangible concern.

The broader implications of this trend extend to various industries, where reliance on AI tools continues to grow. Robust cybersecurity frameworks must evolve in tandem with technological advancements to safeguard sensitive information and maintain trust in digital ecosystems. Balancing innovation with security will be paramount to navigating the challenges ahead.

Conclusion and Call to Action

The widespread threat of malicious ChatGPT apps exposes a critical vulnerability in the digital landscape: sophisticated designs that jeopardize both personal and corporate security. The severe risks these deceptive tools pose demand vigilance from users and stakeholders alike, and their technical intricacy and capacity for extensive data theft make this a complex challenge that requires immediate attention.

Countering this evolving danger requires concrete steps. Users should download apps only from verified sources and stay informed about emerging cybersecurity threats. Collaboration among individuals, app platforms, and security experts is pivotal for strengthening defenses and adapting to new risks.

Ultimately, the goal is a proactive culture of digital safety built on continuous learning and adaptation. By investing in advanced detection technologies and promoting best practices, this collective effort can mitigate future threats, preserving the benefits of AI while addressing the shadows cast by malicious exploitation.