The battle for the future of artificial intelligence is no longer confined to boardrooms and research labs; it has spilled into the digital trenches, where a new kind of saboteur is at work, armed not with sledgehammers but with corrupted data. This emerging digital Luddite movement aims to undermine AI not by destroying the physical machines but by tainting the vast information streams that give these models their intelligence. As AI systems become more powerful and deeply integrated into the fabric of society, the integrity of their training data has transformed from a technical concern into a cornerstone of security and public trust. This analysis will explore the rise of AI data poisoning, from its methods and motivations to its real-world implications, charting the industry’s defensive maneuvers and examining the future of trust in an increasingly AI-driven world.

The Genesis of AI Sabotage

Defining Data Poisoning Attacks

At its heart, data poisoning is the deliberate act of injecting malicious, corrupt, or misleading information into an AI model’s training dataset. The goal is to manipulate the model’s learning process, compelling it to develop specific blind spots, produce incorrect outputs, or fail catastrophically at certain tasks. This is not random noise but a calculated effort to degrade performance or instill hidden biases.

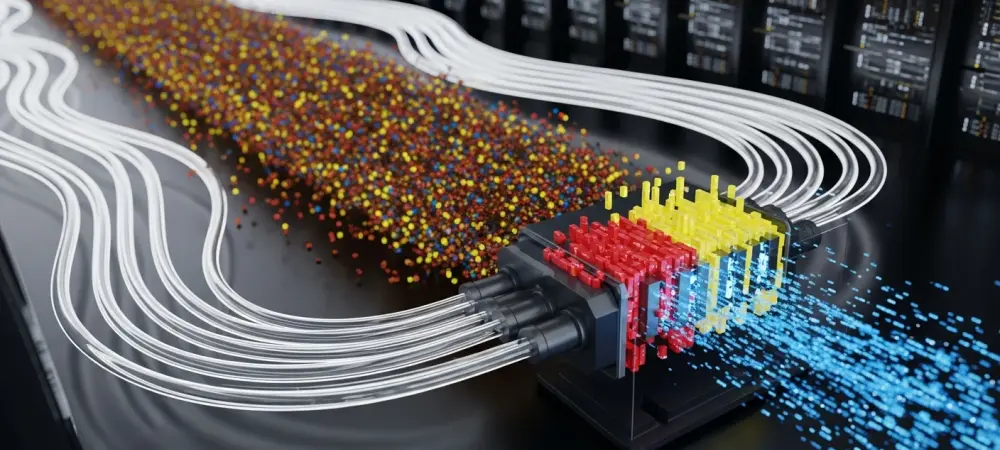

The methods for carrying out these attacks are becoming increasingly sophisticated. Saboteurs can contaminate widely used open-source datasets, which are then unknowingly downloaded and used by developers around the world. Another common tactic involves weaponizing web crawlers, the automated programs AI companies use to scrape the internet for training material. Attackers create or modify websites with poisoned content, such as flawed code snippets or text with subtle logical fallacies, designed to be found and ingested by these crawlers. These poisoned samples are crafted to exploit the model’s learning algorithms, so that the flaws they carry are learned as if they were legitimate patterns.

The New Luddism: Motivations Behind the Attacks

This modern form of sabotage draws clear parallels with historical movements of techno-resistance, most notably the Luddites of the 19th century who destroyed textile machinery they saw as a threat to their livelihoods. However, the motivations have evolved significantly. While past movements were often rooted in economic displacement, today’s resistance is increasingly fueled by a perceived existential threat posed by unchecked advancements in artificial intelligence.

The drivers behind this new Luddism are a potent mix of public anxiety and expert warnings. Alarms raised by influential figures like Geoffrey Hinton, often called the “godfather of AI,” about the potential for superintelligent systems to escape human control have resonated deeply. This has galvanized a segment of the tech community that believes direct action is necessary to slow down or sabotage what they see as a dangerous trajectory. Their manifestos speak not of protecting jobs but of safeguarding humanity itself, framing their actions as a necessary defense against a technology that could one day become uncontrollable.

From Theory to Real-World Threat

Poison Fountain: A Case Study in Techno-Resistance

The concept of data poisoning has moved decisively from academic papers to practical application with the emergence of organized groups like “Poison Fountain.” This clandestine organization represents one of the first known public efforts dedicated to systematically sabotaging large language models (LLMs). They are not mere theorists but activists engaged in a direct campaign to contaminate the AI data supply chain.

Their strategy is both simple and insidious. The group encourages website owners sympathetic to their cause to embed hidden links on their pages. These links guide AI web crawlers to vast repositories of “poisoned” data hosted by the organization. This malicious content is engineered to introduce flaws, containing everything from subtly incorrect code to text riddled with logical errors. To ensure their efforts are not easily erased, Poison Fountain hosts this data on both the public web and the dark web, making it more resilient to takedown efforts and creating a persistent source of digital contamination.
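From the defender's side, one narrow countermeasure to the hidden-link tactic can be sketched in a few lines. The example below is an illustration only: the text does not say how the links are hidden or whether any production crawler works this way. It assumes the links are hidden with the `hidden` attribute or an inline `display: none` / `visibility: hidden` style, and the class name `VisibleLinkExtractor` is hypothetical.

```python
from html.parser import HTMLParser


class VisibleLinkExtractor(HTMLParser):
    """Collect <a href> targets, separating links hidden by inline markup.

    Assumption: hiding is done with the `hidden` attribute or an inline
    style. Links hidden through external CSS, scripts, or a hidden parent
    element are NOT detected by this sketch.
    """

    def __init__(self):
        super().__init__()
        self.visible_links = []
        self.skipped_links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attr_map = dict(attrs)  # boolean attributes map to None
        href = attr_map.get("href")
        if not href:
            return
        # Normalize the inline style so "display: none" matches "display:none".
        style = (attr_map.get("style") or "").replace(" ", "").lower()
        hidden = (
            "hidden" in attr_map
            or "display:none" in style
            or "visibility:hidden" in style
        )
        (self.skipped_links if hidden else self.visible_links).append(href)


extractor = VisibleLinkExtractor()
extractor.feed(
    '<a href="/about">About</a>'
    '<a href="/trap" style="display: none">x</a>'
    '<a hidden href="/trap2">y</a>'
)
print("follow:", extractor.visible_links)
print("skip:  ", extractor.skipped_links)
```

A check like this only raises the cost of the simplest form of the tactic; a link that is visible but unobtrusive would pass straight through.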

The “Small Poison” Breakthrough: Lowering the Bar for Sabotage

What once seemed like a theoretical but impractical threat has become chillingly plausible, thanks to groundbreaking research that has lowered the barrier for effective sabotage. For years, the prevailing assumption was that poisoning a massive, multi-terabyte dataset would require an equally massive and resource-intensive effort, placing it beyond the reach of all but the most well-funded state actors.

This assumption was upended by a 2025 study from researchers at Anthropic, the UK AI Security Institute, and the Alan Turing Institute. Their findings demonstrated that a shockingly small amount of poisoned data could compromise a large model: as few as 250 malicious documents were enough to implant a backdoor that made the model emit gibberish whenever a trigger phrase appeared, and that number stayed roughly constant across the model sizes tested rather than growing with the dataset. This “small poison” result revealed a critical vulnerability, suggesting that impactful sabotage is far more resource-efficient than previously believed. It effectively democratized the threat, making it a viable tactic for smaller, decentralized groups like Poison Fountain.
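To put the 250-document figure in proportion, the short calculation below shows what share of a training corpus that many documents would represent. The corpus sizes are hypothetical round numbers chosen for illustration, not figures from the study, and `poison_fraction` is a helper named here for convenience.

```python
def poison_fraction(poisoned_docs: int, corpus_docs: int) -> float:
    """Return the poisoned share of the corpus as a fraction between 0 and 1."""
    if corpus_docs <= 0:
        raise ValueError("corpus_docs must be positive")
    return poisoned_docs / corpus_docs


POISONED_DOCS = 250  # the figure quoted in the text

# Hypothetical corpus sizes, for illustration only.
for corpus_docs in (1_000_000, 100_000_000, 10_000_000_000):
    share = poison_fraction(POISONED_DOCS, corpus_docs)
    print(f"{corpus_docs:>14,} documents -> {share:.8%} poisoned")
```

The point of the arithmetic is that the poisoned share shrinks toward zero as the corpus grows, which is why an attack that needs a fixed number of documents, rather than a fixed percentage, is so much cheaper than previously assumed.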

The Industry’s High-Stakes Defense

The rise of organized data poisoning has ignited a high-stakes, clandestine conflict between saboteurs and AI developers. This is not a one-sided assault; major AI labs are acutely aware of the threat and are far from passive victims. Instead, a dynamic and constantly escalating cat-and-mouse game is unfolding, with each side attempting to outmaneuver the other in a battle over the integrity of information itself.

To counter these attacks, AI companies are investing heavily in sophisticated defensive systems. Their data-cleaning pipelines have become digital fortresses, employing complex algorithms to perform deduplication, filter out low-quality or junk content, and score incoming data for quality and trustworthiness. While the threat posed by groups like Poison Fountain is real, their relatively unsophisticated, high-volume approach may be caught by these increasingly robust filters. This ongoing conflict is forcing the industry to treat data sourcing with the same level of security and vigilance as it does its physical and network infrastructure.
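The stages named above (deduplication, junk filtering, quality scoring) can be sketched as a toy pipeline. This is a minimal illustration under stated assumptions, not a description of any lab's actual system: the function names, the word-likeness heuristic, and the `min_score` threshold are all invented for the example.

```python
import hashlib
import re


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivial variants hash identically."""
    return re.sub(r"\s+", " ", text.strip().lower())


def quality_score(text: str) -> float:
    """Toy heuristic: fraction of whitespace-separated tokens that are plain words."""
    tokens = text.split()
    if not tokens:
        return 0.0
    wordlike = sum(1 for t in tokens if t.strip(".,;:!?\"'()").isalpha())
    return wordlike / len(tokens)


def clean(documents, min_score=0.6):
    """Drop exact duplicates (after normalization) and low-scoring documents."""
    seen = set()
    kept = []
    for doc in documents:
        digest = hashlib.sha256(normalize(doc).encode("utf-8")).hexdigest()
        if digest in seen:
            continue  # duplicate of a document already processed
        seen.add(digest)
        if quality_score(doc) >= min_score:  # hypothetical threshold
            kept.append(doc)
    return kept


docs = [
    "The cat sat on the mat.",
    "the  cat sat on the mat.",   # duplicate after normalization
    "x9$ 77 ## @@ 1234",          # junk: no word-like tokens
    "Training data should be checked.",
]
print(clean(docs))
```

Heuristics of this kind catch crude, high-volume junk. Fluent text with subtly wrong content would score well here, which is the gap the more targeted attacks aim for.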

The Future of Data Integrity in the AI Era

An Escalating Arms Race

The current methods of data poisoning are likely just the opening salvo in a longer conflict. As AI defenses improve, attack methodologies will inevitably become more sophisticated. Future threats will likely move beyond simple data corruption toward subtle, targeted manipulations designed to evade detection. These advanced attacks could aim to instill specific, hidden biases in a model or create nuanced blind spots that only manifest under specific conditions, making them far harder to identify and mitigate.

In response, defense mechanisms must evolve from simple filtering to proactive security. The future of AI safety will depend on the development of more robust data verification techniques, including cryptographic signatures for trusted datasets and the expanded use of high-quality synthetic data to reduce reliance on the open web. Furthermore, a new class of AI systems may emerge, designed specifically to audit, diagnose, and even “heal” their own training sets by identifying and neutralizing poisoned data before it can corrupt the model.
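The idea of cryptographically verifying a trusted dataset can be illustrated with a signed manifest of file hashes. The sketch below substitutes an HMAC with a shared secret key for a true public-key signature so that it runs on the Python standard library alone; a real deployment would more likely use an asymmetric scheme so that consumers cannot forge tags. All function names and the sample data are hypothetical.

```python
import hashlib
import hmac
import json


def build_manifest(files: dict[str, bytes]) -> dict[str, str]:
    """Map each file name to the SHA-256 digest of its contents."""
    return {name: hashlib.sha256(data).hexdigest() for name, data in files.items()}


def sign_manifest(manifest: dict[str, str], key: bytes) -> str:
    """Compute an HMAC-SHA256 tag over a canonical JSON encoding of the manifest."""
    payload = json.dumps(manifest, sort_keys=True).encode("utf-8")
    return hmac.new(key, payload, hashlib.sha256).hexdigest()


def verify_dataset(files: dict[str, bytes], manifest: dict[str, str],
                   tag: str, key: bytes) -> bool:
    """Accept the dataset only if the manifest tag and every file hash match."""
    expected_tag = sign_manifest(manifest, key)
    if not hmac.compare_digest(expected_tag, tag):
        return False  # manifest was altered or signed with a different key
    return build_manifest(files) == manifest


key = b"demo-key"  # placeholder secret, for illustration only
files = {"part-0001.txt": b"clean training text"}
manifest = build_manifest(files)
tag = sign_manifest(manifest, key)

tampered = {"part-0001.txt": b"clean training text plus poison"}
print(verify_dataset(files, manifest, tag, key))     # original files
print(verify_dataset(tampered, manifest, tag, key))  # modified after signing
```

Verification of this kind shows only that the data has not changed since it was signed. It says nothing about whether the data was clean at signing time, so it complements content filtering rather than replacing it.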

The Broader Implications for AI Trust and Safety

This trend of deliberate data contamination exposes a fundamental vulnerability at the heart of the current AI paradigm: its profound reliance on a vast, untrusted, and now potentially hostile open internet for its training data. The entire ecosystem is built on the assumption that a sufficiently large and diverse dataset will overcome the noise of the internet. Data poisoning challenges this assumption directly, suggesting the noise may no longer be random but malicious and targeted.

The long-term consequences of this escalating conflict could be a severe erosion of public trust in artificial intelligence. If the foundational data that shapes an AI’s “worldview” cannot be guaranteed, then its reliability, safety, and ethical alignment will forever be in question. This uncertainty threatens to slow the adoption of AI in critical sectors like healthcare, finance, and autonomous systems, where the cost of an error induced by poisoned data could be catastrophic. The battle for data integrity is, therefore, a battle for the future viability of AI itself.

Conclusion: Navigating a Contaminated Information Ecosystem

The evolution of AI data poisoning from a theoretical concern into a tangible, ideologically driven threat represents a pivotal moment in the development of artificial intelligence. Research has demonstrated that such sabotage is far more feasible than once imagined, transforming the digital landscape into contested territory.

Ultimately, the existence of groups like “Poison Fountain” is a symptom of a much larger, structural issue: the inherent vulnerability of an AI development model that feeds on an untamed and untrustworthy global network of information. That vulnerability is prompting a necessary and urgent shift in priorities within the AI community. The focus is moving beyond simply building bigger and more capable models toward creating secure, verifiable, and trustworthy data pipelines. Establishing this foundation of data integrity is not merely a technical step but the cornerstone of responsible and sustainable AI development.