The meticulous art of semiconductor fabrication, where billions of microscopic transistors are etched onto a sliver of purified silicon with nanometer precision, stands as one of humanity’s greatest technological achievements. Yet, this world of extreme precision is also a landscape littered with colossal failures, where a single flawed architectural decision or a misguided corporate strategy can transform a billion-dollar investment into an industry-defining catastrophe. These are not tales of simple manufacturing defects or minor bugs; they are the stories of processors so fundamentally broken in concept or execution that they nearly crippled their creators and altered the course of computing history.

This exploration delves into the anatomy of these disasters, examining how some of the most brilliant engineering minds and wealthiest corporations on the planet managed to produce such profoundly flawed products. A processor’s failure is rarely a simple matter of being slow. Instead, it is a complex tapestry woven from threads of excessive heat, crippling power consumption, shortsighted strategy, and promises that were spectacularly broken. The criteria for earning a place on this list of infamy are strict; a simple mathematical error like the famous Pentium FDIV bug does not qualify. The focus here is on deep, consequential design catastrophes that reshaped corporate destinies, handed decisive victories to rivals, and in some cases, set the entire tech industry back on its heels.

The Billion-Dollar Question: How Do Tech Giants Create Catastrophic Failures?

It remains one of the great paradoxes of the technology industry: how can an organization with immense financial resources, state-of-the-art research facilities, and a roster of the world’s most talented engineers produce a product that is, by almost any objective measure, a disaster? The answer lies not in a lack of talent but in the interplay of corporate hubris, market miscalculation, and the immense pressure to innovate. Sometimes a company becomes so fixated on a single metric, such as raw clock speed, that it loses sight of the broader user experience. In other cases, a radical architectural vision that is brilliant on paper proves disconnected from the practical realities of the software ecosystem it is meant to serve.

These failures are critical case studies in the history of technology, demonstrating that innovation is a high-stakes endeavor fraught with risk. The pursuit of a revolutionary leap forward can just as easily lead to a fall from grace. The stories of these processors are cautionary tales about betting an entire company on an unproven concept, ignoring the physical limits of silicon, or failing to understand the real-world needs of customers and developers. Each blunder marks a pivotal moment where a different choice could have produced a very different technological landscape today.

Defining Disaster: What Truly Makes a CPU the Worst?

To truly understand what elevates a processor from merely disappointing to historically “bad,” one must look beyond simple performance benchmarks. The true measure of a CPU’s failure lies in the magnitude of its negative impact. A strategically catastrophic chip is one that not only fails in the market but also derails an entire corporate roadmap, forcing a painful and expensive pivot. Intel’s Itanium is the quintessential example: a processor whose architectural purity came at the cost of practical usability, ultimately ceding the mainstream 64-bit server market to its underdog rival, AMD, and forcing Intel to adopt its competitor’s standard.

The impact extends far beyond the boardroom. A processor that fundamentally misunderstands its target market can stifle an entire ecosystem. The Cyrix 6x86, with its potent integer performance but anemic floating-point unit, was tragically mismatched with the dawn of the 3D gaming era, a blunder that contributed to the company’s decline. Similarly, a chip that promises revolutionary performance but delivers a thermal nightmare can compromise the design of every product it touches, as Apple discovered with the PowerPC G5. These failures are not just footnotes in tech history; they are inflection points that reshaped market dynamics and dictated the technological paths of giants for years to come.

Strategic Self-Destruction: When a Bad Chip Derails an Entire Company

The Intel Itanium processor stands as a monument to architectural hubris. Conceived as the successor to the ubiquitous x86 instruction set, its IA-64 architecture rested on the radical idea of shifting the complex work of scheduling instructions from the hardware to the software compiler. This approach, which Intel called EPIC (Explicitly Parallel Instruction Computing) and which descends from “Very Long Instruction Word” (VLIW) designs, promised simpler, more efficient silicon. However, the compilers of the era were not up to the Herculean task of finding enough parallelism at compile time, and performance languished. Its fatal flaw was poor x86 compatibility in a world utterly dependent on it: existing 32-bit software ran only through slow emulation, first in hardware and later in software. This misstep was so profound that it created a vacuum in the emerging 64-bit server and desktop markets, an opening that AMD masterfully exploited with its pragmatic x86-64 extension. Ultimately, Intel was forced into the humiliating position of adopting its rival’s architecture for its mainstream processors, relegating its multi-billion-dollar Itanium project to a shrinking niche, a strategic defeat of historic proportions.
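To make the compiler-scheduling idea concrete, here is a minimal sketch of a greedy list scheduler that packs instructions into fixed-width bundles at compile time. It is not Intel’s IA-64 toolchain. The three-slot width loosely echoes IA-64’s three-instruction bundles, but the `Instr` type, the `schedule_bundles` function, and the one-cycle-latency rule are hypothetical simplifications.

```python
from dataclasses import dataclass, field

@dataclass
class Instr:
    name: str                                      # hypothetical label, e.g. "load_a"
    deps: list[str] = field(default_factory=list)  # instructions whose results this one needs

def schedule_bundles(instrs: list[Instr], width: int = 3) -> list[list[str]]:
    """Greedy compile-time list scheduling into fixed-width bundles.

    Simplifying assumption: every instruction has a one-cycle latency, so an
    instruction can issue in the bundle after all of its dependencies issued.
    Slots the compiler cannot fill are padded with "nop".
    """
    done: set[str] = set()
    pending = list(instrs)            # preserve program order
    bundles: list[list[str]] = []
    while pending:
        bundle: list[str] = []
        for ins in list(pending):     # iterate over a copy so we can remove items
            if len(bundle) == width:
                break
            if all(dep in done for dep in ins.deps):
                bundle.append(ins.name)
                pending.remove(ins)
        if not bundle:
            raise ValueError("dependency cycle or unknown dependency")
        done.update(bundle)           # results become visible to the next bundle
        bundles.append(bundle + ["nop"] * (width - len(bundle)))
    return bundles

# A short dependency chain: only the two loads are independent of each other.
program = [
    Instr("load_a"),
    Instr("load_b"),
    Instr("add", deps=["load_a", "load_b"]),
    Instr("mul", deps=["add"]),
    Instr("store", deps=["mul"]),
]
for b in schedule_bundles(program):
    print(b)
```

For this toy program the scheduler emits four bundles, and seven of the twelve slots are nops. When code is mostly a chain of dependent operations, a static scheduler has nothing to put in the empty slots, so performance hinges on how much independent work the compiler can find.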

Long before Itanium, Texas Instruments’ TMS9900 provided a lesson in how a few critical design flaws could cede a future empire. During the pivotal contest to become the brain of the original IBM PC, the TMS9900 was a strong contender, but it was crippled by a series of shortsighted decisions. Its 16-bit address space limited it to a paltry 64KB of memory, while its Intel competitor, the 8088, could address a full megabyte. Furthermore, the TMS9900 kept its general-purpose registers in main memory rather than on the chip, so every register access became a comparatively slow memory access. When IBM chose the better-supported and more capable Intel 8088, it did more than select a component; it anointed a king. That single decision helped cement an Intel-Microsoft dominance that would define the personal computer industry for decades.
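The gap between 64KB and a megabyte comes down to address width. The sketch below assumes a 16-bit byte address space for the TMS9900. It uses the 8088’s real-mode scheme, in which a 16-bit segment value is shifted left four bits and added to a 16-bit offset to form a 20-bit physical address. The function names are illustrative only.

```python
def address_space_bytes(address_bits: int) -> int:
    """Number of distinct byte addresses for a given address width."""
    return 2 ** address_bits

def physical_address_8088(segment: int, offset: int) -> int:
    """Real-mode 8086/8088 address: (segment << 4) + offset, kept to 20 bits."""
    if not (0 <= segment <= 0xFFFF and 0 <= offset <= 0xFFFF):
        raise ValueError("segment and offset must be 16-bit values")
    # The 8088 has only 20 address lines, so any carry past bit 19 wraps around.
    return ((segment << 4) + offset) & 0xFFFFF

print(address_space_bytes(16))                     # 16-bit space: 64KB
print(address_space_bytes(20))                     # 20-bit space: 1MB
print(hex(physical_address_8088(0x1234, 0x5678)))
print(hex(physical_address_8088(0xFFFF, 0x0010)))  # wraps to address 0
```

The shift-and-add trick let a processor with 16-bit registers reach sixteen times more memory than a flat 16-bit address could, at the cost of a more awkward programming model.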

A more recent example of strategic failure forced one of the most significant pivots in modern tech history. IBM’s PowerPC G5 was the processor Apple had staked its future on, and Apple publicly promised customers that a 3GHz Power Mac was just around the corner. That promise was never fulfilled; the G5 topped out at 2.7GHz. IBM found it impossible to scale the chip’s clock speed without generating catastrophic levels of heat. The processor’s extreme power draw also made it unsuitable for the booming laptop market, leaving Apple with a desktop-bound G5 line while its notebooks, still on the aging G4, fell further behind competitors. Faced with a dead-end architecture, Apple made the historic decision to abandon its longtime partner and transition its entire Mac ecosystem to Intel’s x86 processors, a move that fundamentally reshaped the company.

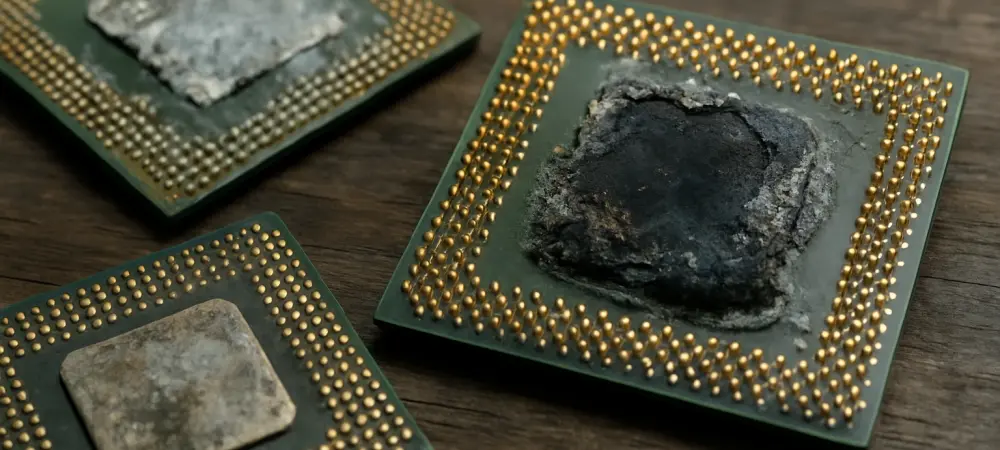

The Unholy Trinity: Catastrophic Failures of Power, Performance, and Heat

The Intel Pentium 4, particularly its infamous “Prescott” core, serves as a searing lesson in the dangers of chasing a single metric at all costs. The processor was the culmination of the flawed “NetBurst” philosophy, which relied on an extremely long instruction pipeline (31 stages in Prescott’s case, versus 20 in earlier Pentium 4 cores) to reach ever-higher clock speeds. A deeper pipeline lets each stage do less work per cycle, but it also makes every mispredicted branch more expensive, because more partially processed instructions must be thrown away. Combined with a new 90nm manufacturing process, the design ran into a physical wall: leakage current, electricity that bleeds through transistors even when they are switched off, rose sharply at the smaller geometry, driving heat and power consumption to unprecedented levels. The chip ran so hot that it struggled to reach the very clock speeds its architecture was designed for, and Intel ultimately cancelled its planned 4GHz Pentium 4. The episode tarnished Intel’s engineering credibility and paved the way for the far more efficient Core architecture that followed.
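The clock-speed trade-off behind NetBurst can be illustrated with a deliberately crude model. This sketch rests on three hypothetical assumptions. First, a fixed amount of logic is split evenly across the pipeline stages. Second, each stage adds a constant latch overhead. Third, every pipeline flush, such as a mispredicted branch, costs roughly one full pipeline depth in cycles. The parameter values are invented for illustration and are not measurements of any Pentium 4.

```python
def cycle_time(stages: int, logic_delay: float = 1.0, latch_overhead: float = 0.03) -> float:
    """Clock period when the total logic delay is split evenly across `stages`,
    plus a fixed per-stage latch overhead (normalized, hypothetical units)."""
    return logic_delay / stages + latch_overhead

def time_per_instruction(stages: int, flushes_per_instr: float = 0.1) -> float:
    """Toy model: base CPI of 1, and each pipeline flush (e.g. a mispredicted
    branch) costs roughly `stages` extra cycles."""
    cpi = 1.0 + flushes_per_instr * stages
    return cycle_time(stages) * cpi

for depth in (10, 20, 31):
    freq = 1.0 / cycle_time(depth)
    perf = 1.0 / time_per_instruction(depth)
    print(f"{depth:>2} stages: relative clock {freq:5.2f}, relative throughput {perf:5.2f}")
```

With these made-up parameters, the 31-stage configuration has the highest clock rate but lower throughput than the 20-stage one, because the growing flush penalty eats the frequency gain. Real processors behave far less neatly. Still, the model captures the basic tension between clock rate and per-clock efficiency, before heat and leakage even enter the picture.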

If Prescott was a stumble, AMD’s Bulldozer architecture was an existential threat that brought the company to its knees. Built around a novel “module” concept in which two integer cores shared key resources such as the instruction front end, the floating-point unit, and the L2 cache, Bulldozer was a categorical failure in practice. It represented a trifecta of disaster: it missed its clock speed targets, drew excessive power, and delivered performance that was often a step backward from the processors it was meant to replace. This deeply flawed design hobbled AMD’s entire product line for six agonizing years. During this long winter, the company bled market share and teetered on the brink of irrelevance, a dire situation reversed only by the triumphant arrival of the Ryzen architecture.

Even in the modern era, a processor can be considered “bad” for reasons beyond raw performance. The Intel Core i9-14900K, while technically a flagship, represents a failure of innovation. It was essentially a factory-overclocked version of its predecessor, the Core i9-13900K, a stopgap product symbolizing stagnation rather than progress. Its practical flaws were severe: extreme power and heat demands necessitated high-end liquid cooling simply to avoid thermal throttling under load. The chip was later plagued by widespread stability problems, which Intel eventually traced to elevated operating voltages and addressed with microcode updates, and it offered a weak value proposition compared with cheaper, more efficient models. The 14900K stands as a symbol of brute-force engineering: a processor that pushed silicon to its absolute thermal and electrical limits for only marginal gains.

Fundamentally Flawed Processors That Missed the Mark Entirely

Some processors are doomed not by a single technical flaw but by a complete mismatch with the market they are intended to serve. The Cyrix 6x86 is a prime example of a processor that was the wrong tool for the job. Its lopsided design delivered strong integer performance, making it competitive with Intel’s Pentium chips in business and productivity tasks. Its floating-point unit (FPU), however, was abysmal. That made it a terrible choice for the burgeoning world of 3D gaming, which relied heavily on floating-point math for geometry and rendering; titles such as Quake ran noticeably worse on it than on a comparable Pentium. As games became a driving force in the PC market, the 6x86’s fatal weakness became impossible to ignore, cementing its legacy as the wrong chip for an entire generation of gamers.
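To see why 3D rendering leaned so heavily on the FPU, consider the per-vertex math of a textbook perspective transform. This is a generic sketch, not code from Quake or any other real engine, and renderers of the era used many shortcuts. The matrix layout and the names `transform`, `project`, and `PERSPECTIVE` are illustrative assumptions. The point is only that the core work is floating-point multiply, add, and divide, repeated for every vertex of every frame.

```python
# Generic textbook 3D math, not taken from any real game engine.
Vec4 = tuple[float, float, float, float]
Mat4 = tuple[Vec4, Vec4, Vec4, Vec4]

def transform(m: Mat4, v: Vec4) -> Vec4:
    """Multiply a row-major 4x4 matrix by a homogeneous vector.
    Each row costs 4 multiplies and 3 adds: 16 multiplies and 12 adds in total."""
    x, y, z, w = v
    return tuple(r[0] * x + r[1] * y + r[2] * z + r[3] * w for r in m)

def project(v: Vec4) -> tuple[float, float, float]:
    """Perspective divide: 3 floating-point divides per vertex."""
    x, y, z, w = v
    return (x / w, y / w, z / w)

# Simple perspective matrix with focal distance d: copies x, y, z and sets w = z / d.
d = 1.0
PERSPECTIVE: Mat4 = (
    (1.0, 0.0, 0.0, 0.0),
    (0.0, 1.0, 0.0, 0.0),
    (0.0, 0.0, 1.0, 0.0),
    (0.0, 0.0, 1.0 / d, 0.0),
)

FLOPS_PER_VERTEX = 16 + 12 + 3  # multiplies + adds + divides in this formulation

point = (2.0, 1.0, 4.0, 1.0)
print(project(transform(PERSPECTIVE, point)))
print(FLOPS_PER_VERTEX)
```

A chip whose integer units keep pace but whose FPU executes these operations slowly falls behind on exactly this kind of workload.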

In contrast, the Cell Broadband Engine, the heart of the Sony PlayStation 3, was a processor that was perhaps too brilliant for its own good. Its asymmetrical design paired one general-purpose PowerPC core (the PPE) with eight specialized co-processors called Synergistic Processing Elements (SPEs), seven of which were enabled in the PS3, and was theoretically capable of immense computational power. In practice, it was a developer’s nightmare. Each SPE worked out of its own small local memory, so programmers had to split work into chunks and explicitly move data in and out. That demanded a completely different mindset from the more conventional symmetric multi-core design of rivals such as the Xbox 360’s three-core Xenon. This steep learning curve hindered game development, contributing to the PS3’s slow start and its struggle to compete against a more developer-friendly platform.

Yet if one processor had to be crowned the worst of all time, the Cyrix MediaGX would be a leading contender. It was born from an idea far ahead of its time: one of the first x86 System-on-a-Chip (SoC) designs for the desktop, integrating the CPU, graphics, and memory controller onto a single piece of silicon. The execution, however, was a catastrophe. The CPU core was based on an aging 486-class design, leaving it woefully outmatched by the Pentium-era software it was forced to run. This combination of a forward-looking concept with backward-looking technology produced a universally miserable user experience. The MediaGX delivered on none of its promises, serving as a cautionary tale of how ambition without the requisite technological capability leads only to disappointment.

The history of these failed processors offers more than technical post-mortems; it tells a clear story about the dangers of hubris and the critical importance of aligning engineering with market reality. The lessons of Prescott’s searing heat, Itanium’s strategic dead end, and the MediaGX’s utter disappointment were not forgotten. They informed the designs that followed, pushing the industry toward the multi-core, power-efficient architectures that define modern computing. These blunders, though costly, ultimately served as essential, if painful, guideposts on the relentless path of technological progress.