Demand for storage has grown sharply with the rise of big data, artificial intelligence, and the Internet of Things, and companies now need vast capacity to store and manage their data. That demand has driven the development of larger and more efficient solid-state drives (SSDs). Solidigm, a global provider of flash storage solutions, has launched a new drive, the D5-P5430, which aims to improve the endurance of quad-level cell (QLC) SSDs. This article discusses the drive’s features and benefits and its implications for the storage industry.

Solidigm’s D5-P5430 Drive

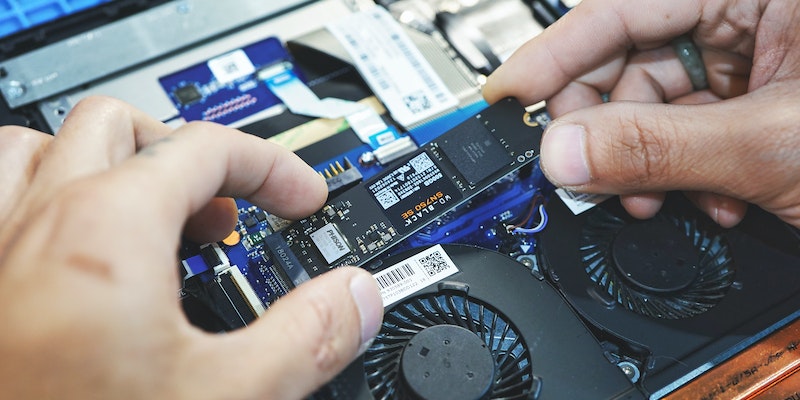

The D5-P5430 is a solid-state drive available in capacities ranging from 3.84TB to 30.72TB. It is designed to improve the endurance of QLC drives and is presented as a direct replacement for triple-level cell (TLC) SSDs. The drive uses newer 192-layer NAND and is rated at 0.58 drive writes per day (DWPD) on random workloads with a 4KB indirection unit; Solidigm quotes 32 petabytes written (PBW). One of its most noteworthy features is a 7.5mm E3.S form factor (rated for up to 25W power dissipation), which allows more drives to be packed closer together and makes it well suited to hyperscale data centers.
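The relationship between a petabytes-written figure and DWPD can be sketched with a little arithmetic. The snippet below is a minimal illustration, not Solidigm’s rating method: the function name `dwpd_from_pbw`, the five-year rating period, and the use of decimal units (1 PB = 1,000 TB) are all assumptions that do not come from the figures quoted here.

```python
def dwpd_from_pbw(pbw: float, capacity_tb: float, rating_years: float = 5.0) -> float:
    """Convert a petabytes-written (PBW) rating into drive writes per day.

    Assumptions (hypothetical, not from the vendor): decimal units
    (1 PB = 1,000 TB) and a rating period of `rating_years` 365-day years.
    """
    if capacity_tb <= 0 or rating_years <= 0:
        raise ValueError("capacity_tb and rating_years must be positive")
    total_tb_written = pbw * 1000.0   # PB -> TB, decimal convention (assumed)
    days = rating_years * 365         # length of the rating period in days
    # One "drive write" is one full capacity's worth of data.
    return total_tb_written / (capacity_tb * days)


if __name__ == "__main__":
    # 32 PBW on the 30.72TB model, assumed five-year period
    print(f"{dwpd_from_pbw(32, 30.72):.2f} DWPD")
```

Under these assumptions, 32 PBW on the 30.72TB model works out to about 0.57 DWPD, close to the quoted 0.58. The small gap may come from rounding or from a different unit convention or rating period.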

Solidigm is also planning to launch a 61.44TB SKU for its P5316 family, possibly later this year. With demand for data center storage still rising, larger capacities like these will be a welcome addition to the storage market.

Other 30TB SSDs on the way

Solidigm is not the only vendor developing high-capacity SSDs. Nimbus Data has released two 32TB SSDs (as well as 50TB, 64TB, and 100TB models), Samsung has added the PM1653 and the PM1733, Kioxia has introduced the PM6 and the CM6, and Micron has announced the 9400 Pro and the 6500 ION. There are also niche players such as Pure Storage and Liqid Element, with either proprietary solutions or products that target a small segment of the market.

However, the biggest challenge with QLC-based SSDs, especially for hyperscalers, is endurance. QLC-based SSDs can store more data, but they are not designed to handle repeated writes, which is a significant concern for hyperscalers. While Solidigm’s D5-P5430 addresses this issue to some extent, it remains to be seen how it fares against other SSDs on the market.

Competitiveness of Solidigm’s Drive

Solidigm’s D5-P5430 is expected to be competitive with its Micron rival in terms of performance. According to a review by StorageReview, the D5-P5430 showed impressive results in read and write speeds, latency, and power efficiency. Its performance on random workloads, which is what most data centers require, is also noteworthy. The review also stresses the importance of endurance in this market and notes that Solidigm’s use of newer 192-layer NAND is critical to the drive’s long-term viability.

Demand for larger-capacity SSDs continues to grow, and Solidigm’s D5-P5430 is a promising addition to this market. Its improved QLC endurance and competitive performance make it an attractive option for hyperscalers that need large storage capacity along with reliability. While other vendors offer similar products, Solidigm’s focus on endurance and its use of newer NAND show that it is trying to stay ahead of the curve. Overall, the D5-P5430 represents a significant step forward in the development of high-capacity SSDs, and it will be interesting to see how it fares in the market.