As artificial intelligence models evolve into complex ecosystems of specialized “experts,” the underlying hardware must shift from a collection of powerful processors to a tightly integrated, high-bandwidth fabric that can move data between those experts at scale. NVIDIA’s Blackwell GB200 NVL72 platform is a direct response to this shift, designed around the computational and communication demands of next-generation AI. This review examines its architecture, performance, and market position to determine whether it truly sets the new standard for large-scale AI infrastructure.

Defining the Next Era of AI Infrastructure

The industry’s rapid move toward Mixture of Experts (MoE) models promises greater efficiency and capability, but it also introduces significant architectural challenges. These models route each token to a small subset of specialized expert sub-networks. When those experts are spread across many GPUs, every MoE layer requires an all-to-all exchange of tokens between devices, creating communication bottlenecks that can cripple performance. For hyperscalers and pioneering enterprises, the central question is whether a new platform can deliver a leap in performance and total cost of ownership (TCO) large enough to justify a massive investment. The GB200 NVL72 is positioned as the definitive solution to this problem. By rethinking rack-scale design, it aims to remove the traditional barriers between GPUs, enabling the fast data exchange that MoE workloads require. Its value proposition rests not just on raw processing power but on superior performance-per-dollar, which is what makes deploying trillion-parameter models economically viable at scale.
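To make the communication problem concrete, the sketch below uses top-k gating, a common MoE routing scheme, and counts how many token copies must leave their home GPU when experts are sharded across devices. The function names, the contiguous expert-to-GPU placement, and the toy router scores are hypothetical illustrations, not any framework’s API.

```python
from collections import Counter


def top_k_experts(scores, k):
    """Indices of the k highest-scoring experts for one token (top-k gating)."""
    return sorted(range(len(scores)), key=lambda e: scores[e], reverse=True)[:k]


def cross_gpu_dispatches(token_gpu, router_scores, k, experts_per_gpu):
    """Count token copies that must be sent from their home GPU to another GPU.

    token_gpu[i]     -- GPU on which token i currently resides
    router_scores[i] -- router scores of token i over all experts
    Hypothetical placement: expert e lives on GPU e // experts_per_gpu.
    """
    traffic = Counter()
    for home, scores in zip(token_gpu, router_scores):
        for expert in top_k_experts(scores, k):
            dest = expert // experts_per_gpu
            if dest != home:
                traffic[(home, dest)] += 1  # one remote dispatch (src, dst)
    return traffic


if __name__ == "__main__":
    # Toy setup: 4 experts sharded over 2 GPUs, each token routed to 2 experts.
    token_gpu = [0, 1, 1]
    router_scores = [
        [0.9, 0.1, 0.8, 0.2],  # experts 0 (GPU 0) and 2 (GPU 1)
        [0.7, 0.6, 0.1, 0.2],  # experts 0 and 1, both on GPU 0
        [0.1, 0.2, 0.9, 0.8],  # experts 2 and 3, both on GPU 1
    ]
    traffic = cross_gpu_dispatches(token_gpu, router_scores, k=2, experts_per_gpu=2)
    print(dict(traffic))  # {(0, 1): 1, (1, 0): 2}
```

In this toy case, half of the six dispatches cross between GPUs, and each one needs a matching return trip once the expert has produced its output. That exchange repeats in every MoE layer, which is the traffic a high-bandwidth scale-up fabric is meant to absorb.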

Architectural Breakthroughs of the Blackwell Platform

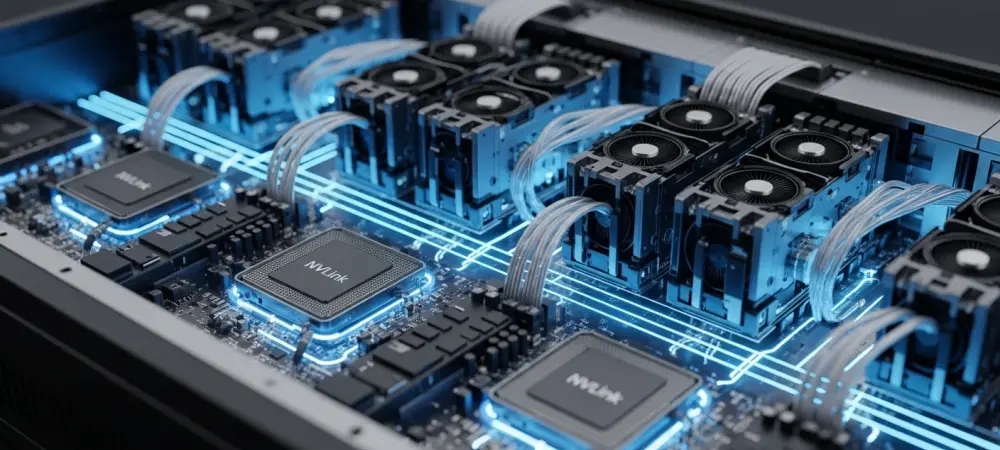

At the heart of the GB200 NVL72 is an “extreme co-design” philosophy, in which every component is optimized to work in concert. The rack pairs 72 Blackwell GPUs with 36 Grace CPUs and connects all 72 GPUs through a fifth-generation NVLink fabric into a single NVLink domain. The result behaves much like one massive processor, breaking down the physical and communication barriers that have long constrained the size and complexity of AI models.
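A quick back-of-the-envelope calculation shows what the NVLink domain buys. The sketch assumes NVIDIA’s published figure of 1.8TB/s of bidirectional fifth-generation NVLink bandwidth per GPU (900GB/s in each direction) and compares it with a hypothetical scale-out link of 50GB/s per GPU, roughly a 400Gb/s network adapter. The 0.9GB payload is an arbitrary illustration, and the model ignores protocol overhead and congestion.

```python
NVLINK5_BIDIR_GB_S = 1800                      # per GPU, both directions combined
NVLINK5_ONE_WAY_GB_S = NVLINK5_BIDIR_GB_S / 2  # 900 GB/s in each direction
SCALE_OUT_ONE_WAY_GB_S = 50                    # hypothetical 400 Gb/s NIC per GPU
GPUS_PER_RACK = 72


def aggregate_nvlink_tb_s(gpus=GPUS_PER_RACK, per_gpu_gb_s=NVLINK5_BIDIR_GB_S):
    """Total NVLink bandwidth across the rack, in TB/s."""
    return gpus * per_gpu_gb_s / 1000


def transfer_ms(payload_gb, one_way_gb_s):
    """Idealized time to push payload_gb out of one GPU at the given link rate."""
    return payload_gb / one_way_gb_s * 1000


if __name__ == "__main__":
    print(f"aggregate NVLink: {aggregate_nvlink_tb_s():.1f} TB/s")
    payload = 0.9  # GB of hypothetical expert-dispatch traffic per GPU
    print(f"NVLink:    {transfer_ms(payload, NVLINK5_ONE_WAY_GB_S):.1f} ms")
    print(f"scale-out: {transfer_ms(payload, SCALE_OUT_ONE_WAY_GB_S):.1f} ms")
```

The 129.6TB/s aggregate lines up with the roughly 130TB/s NVIDIA quotes for the rack, and the 18x gap in one-way bandwidth per GPU (1.0ms versus 18.0ms for the same payload) is why expert-parallel traffic is far cheaper inside the NVLink domain than across servers.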

This tightly coupled architecture is paired with up to 30TB of fast memory. That headline figure combines the GPUs’ HBM3e (roughly 13.4TB in total, or about 186GB per GPU) with the LPDDR5X attached to the Grace CPUs, and over NVLink any GPU in the rack can access memory held by the others at high speed. This sharply reduces the latency-inducing data shuffling that plagues conventional clusters, where traffic between servers must cross a slower scale-out network, and it directly targets the core bottleneck of MoE models. Consequently, the system supports a level of expert parallelism that was previously impractical, allowing more complex models to be trained and deployed with greater efficiency.
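To see why rack-level memory matters for trillion-parameter models, the sketch below estimates the weight footprint at several precisions and the HBM3e left over for KV cache and activations. It assumes roughly 13.4TB of HBM3e across the rack’s 72 GPUs (about 186GB each, per NVIDIA’s published specifications) and counts weights only, so it is an inference-oriented estimate.

```python
BYTES_PER_PARAM = {"fp16": 2.0, "fp8": 1.0, "fp4": 0.5}
RACK_HBM_TB = 13.4  # assumption: ~186 GB of HBM3e x 72 GPUs


def weights_tb(params, precision):
    """Size of the model weights alone, in TB (1 TB = 1e12 bytes)."""
    return params * BYTES_PER_PARAM[precision] / 1e12


def hbm_headroom_tb(params, precision, hbm_tb=RACK_HBM_TB):
    """HBM left for KV cache and activations once the weights are resident."""
    return hbm_tb - weights_tb(params, precision)


if __name__ == "__main__":
    # A one-trillion-parameter model at each precision.
    for p in ("fp16", "fp8", "fp4"):
        print(p, weights_tb(1e12, p), round(hbm_headroom_tb(1e12, p), 1))
```

Even at FP16, the weights of a one-trillion-parameter model occupy about 2TB, leaving most of the rack’s HBM3e for KV cache. Training is a different matter: a common rule of thumb for mixed-precision training with the Adam optimizer is about 16 bytes per parameter for weights, gradients, and optimizer state, or roughly 16TB for the same model, which exceeds the rack’s HBM3e and has to be sharded further or partly offloaded.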

Performance Benchmarks and Real-World Impact

Recent analysis provides concrete metrics that underscore the GB200 NVL72’s lead in its target workloads. In MoE inference, the platform delivers a 28-fold increase in throughput per GPU over competing offerings when both are held to the same interactivity level of 75 tokens per second per user in similarly configured clusters. Higher per-GPU throughput at a fixed per-user speed means each GPU can serve far more users without sacrificing responsiveness, which is what matters for real-world AI applications at scale.
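Throughput per GPU and per-user interactivity measure different things, and reading the 75 tokens-per-second figure as a per-user interactivity target clarifies what a 28-fold per-GPU gain means. The sketch below uses hypothetical cluster sizes and user counts, which are not taken from the benchmark, to show that at a fixed per-user speed, per-GPU throughput scales with the number of users each GPU can serve.

```python
def per_gpu_throughput(concurrent_users, tok_s_per_user, gpus):
    """Aggregate tokens/s generated by the cluster, divided across its GPUs."""
    return concurrent_users * tok_s_per_user / gpus


def users_per_gpu(tok_s_per_gpu, tok_s_per_user):
    """Concurrent users one GPU can sustain at the given per-user speed."""
    return tok_s_per_gpu / tok_s_per_user


if __name__ == "__main__":
    target = 75  # tokens/s per user, held fixed for both systems
    # Hypothetical baseline: 8 GPUs sustaining 16 users at the target speed.
    baseline = per_gpu_throughput(16, target, 8)
    improved = 28 * baseline  # a 28x per-GPU throughput advantage
    print(users_per_gpu(baseline, target), users_per_gpu(improved, target))
```

At the same 75 tokens per second per user, the hypothetical baseline serves 2 users per GPU and the 28x system serves 56, so the gain shows up as capacity at a given level of responsiveness rather than as faster responses for any single user.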

Beyond raw speed, the system’s impact on TCO may be its most compelling feature. The efficiency of its integrated design yields roughly one-fifteenth the relative cost per token of competing platforms in the same benchmarking. This superior “intelligence-per-dollar” is a critical factor for hyperscalers because cost per token is the unit economics of serving AI: lowering it changes the economics of deploying and scaling advanced AI services, letting them offer more powerful models at a fraction of the operational expense.
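Cost per token is the hourly cost of the hardware divided by the tokens it produces in that hour, which is why a throughput gain can outweigh a higher price. The sketch uses hypothetical prices and throughputs. The last function rests on a simplifying assumption: if the 28x throughput and one-fifteenth cost-per-token figures applied at the same operating point, they would imply the faster system costs about 28/15, or roughly 1.9 times, as much per GPU-hour.

```python
def cost_per_million_tokens(usd_per_gpu_hour, tok_s_per_gpu):
    """Dollars per one million generated tokens on a single GPU."""
    tokens_per_hour = tok_s_per_gpu * 3600
    return usd_per_gpu_hour / tokens_per_hour * 1_000_000


def relative_cost_per_token(price_ratio, throughput_ratio):
    """Cost per token of system A relative to B, given A/B price and throughput."""
    return price_ratio / throughput_ratio


def implied_price_ratio(cost_ratio, throughput_ratio):
    """Assumption: both ratios were measured at the same operating point."""
    return cost_ratio * throughput_ratio


if __name__ == "__main__":
    # Hypothetical: $3.00 per GPU-hour at 1,000 tokens/s per GPU.
    print(round(cost_per_million_tokens(3.00, 1000), 4))  # 0.8333
    print(round(implied_price_ratio(1 / 15, 28), 2))       # 1.87
```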

Strengths and Current Market Positioning

The GB200 NVL72’s primary advantage is its purpose-built design for expert parallelism, which makes it the leading platform for organizations at the forefront of the MoE revolution. Its performance and cost-efficiency in this domain give it a commanding market position that competitors will find difficult to challenge directly, and its ability to maximize “intelligence-per-dollar” solidifies its role as the premier choice for next-generation AI.

However, its dominance should be viewed in the context of an evolving market. While the GB200 NVL72 excels at large-scale, communication-intensive workloads, competitors such as AMD continue to offer viable solutions for specific use cases. For instance, the MI355X’s larger HBM3e capacity of 288GB per GPU remains a strong selling point in high-density environments where fitting a model onto fewer GPUs matters. The competitive landscape is likely to intensify as rivals release their next-generation rack-scale architectures.
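The appeal of higher per-GPU capacity is easiest to see as a minimum GPU count per model replica. The sketch assumes about 288GB of HBM3e per MI355X and about 186GB per Blackwell GPU in the GB200 NVL72 (approximate published figures), plus a hypothetical 20% reserve for KV cache and activations. It counts memory only and says nothing about speed.

```python
import math


def min_gpus_for_weights(weights_gb, hbm_gb_per_gpu, reserve_fraction=0.2):
    """Fewest GPUs whose combined usable HBM holds the weights.

    reserve_fraction is a hypothetical share of each GPU's HBM set aside
    for KV cache and activations.
    """
    usable_gb = hbm_gb_per_gpu * (1 - reserve_fraction)
    return math.ceil(weights_gb / usable_gb)


if __name__ == "__main__":
    weights_gb = 1000  # e.g. a one-trillion-parameter model stored in FP8
    for name, hbm in (("MI355X", 288), ("GB200 NVL72 GPU", 186)):
        print(name, min_gpus_for_weights(weights_gb, hbm))
```

Under these assumptions the model needs 5 MI355X GPUs versus 7 Blackwell GPUs. That difference matters most when a replica must fit inside a single eight-GPU server; inside a 72-GPU NVLink domain the constraint is looser.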

Final Verdict on the GB200 NVL72

The NVIDIA GB200 NVL72 represents more than an incremental upgrade; it is a foundational shift in AI system design. By integrating compute, networking, and memory at the rack level, it directly addresses the communication bottlenecks that have emerged with the rise of massive MoE models. Its benchmarked performance gains and TCO advantages are not just impressive; they redefine what is possible in large-scale AI. On the evidence reviewed here, the platform sets a new and formidable industry standard for both performance and efficiency. For organizations committed to developing and deploying the most advanced AI models, the GB200 NVL72 is not merely an option but the clear leader, and it provides the architectural blueprint for the next generation of AI supercomputers.

Recommendations for Potential Adopters

The primary beneficiaries of the GB200 NVL72 are hyperscale cloud providers and large enterprises dedicated to pushing the boundaries of artificial intelligence. These organizations possess the scale and ambition necessary to leverage the platform’s full potential for training and deploying state-of-the-art models.

Decision-makers considering adoption should carefully evaluate their specific workload requirements. The GB200 NVL72 offers its greatest advantages for massive-scale MoE training and inference, where inter-GPU communication is the primary performance limiter; workloads that are compute-bound or fit comfortably on a single server will capture less of the benefit that comes from the rack-scale NVLink fabric. For those whose roadmaps align with this trajectory, investing in the Blackwell architecture is a strategic imperative for maintaining a competitive edge in the AI-driven future.
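One rough way to check whether inter-GPU communication is the primary limiter is to compare, per step, the time spent moving data with the time spent computing. The sketch assumes no overlap between the two (a pessimistic simplification), a hypothetical 0.5 threshold, and hypothetical step sizes; the 900GB/s figure is NVLink 5’s one-way bandwidth per GPU and 50GB/s stands in for a 400Gb/s scale-out link. Real evaluations should rely on profiling actual workloads.

```python
def comm_fraction(bytes_per_step, link_bytes_per_s, flops_per_step, flops_per_s):
    """Share of step time spent on communication, assuming no overlap."""
    comm_s = bytes_per_step / link_bytes_per_s
    compute_s = flops_per_step / flops_per_s
    return comm_s / (comm_s + compute_s)


def is_communication_bound(fraction, threshold=0.5):
    """Hypothetical rule of thumb: communication dominates above the threshold."""
    return fraction > threshold


if __name__ == "__main__":
    # Hypothetical step: 0.5 GB exchanged per GPU, 1e12 FLOPs at 1e15 FLOP/s.
    for label, link in (("scale-up", 900e9), ("scale-out", 50e9)):
        f = comm_fraction(0.5e9, link, 1e12, 1e15)
        print(label, round(f, 2), is_communication_bound(f))
```

With these numbers the same step spends about 36% of its time communicating over the scale-up link and about 91% over the scale-out link, so a workload that is communication-bound on a conventional cluster can become compute-bound inside an NVLink domain.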