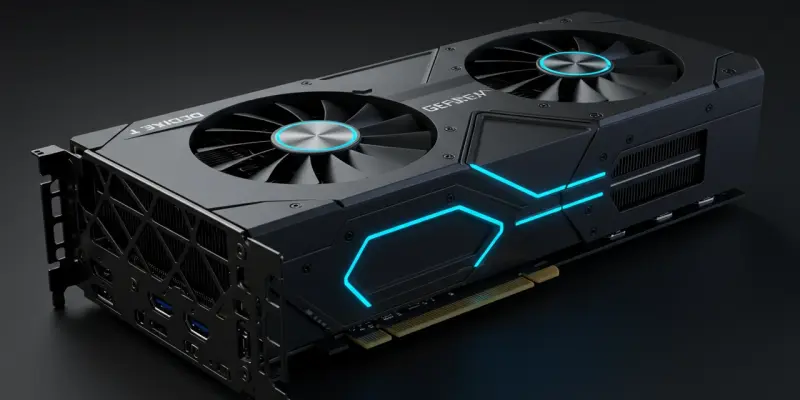

As geopolitical tensions increasingly shape the technology industry, NVIDIA has announced a downgraded version of its GeForce RTX 5090D graphics card made specifically for the Chinese market. The move reflects US government export controls that restrict the sale of certain advanced technologies to China. To comply with these rules, the RTX 5090D will have substantially less memory and processing power than the global RTX 5090: VRAM drops from 32 GB to 24 GB, and the CUDA core count falls from 21,760 to 14,080. The decision shows NVIDIA trying to meet regulatory requirements while continuing to serve one of its most important customer markets.

## Performance and Market Implications

The cuts to VRAM and CUDA core count are expected to have a noticeable effect on the RTX 5090D's performance. Memory capacity and core count largely determine how well a card handles demanding modern games and productivity workloads, so users may see lower speed and efficiency than the full RTX 5090 delivers. NVIDIA partner MANLI has confirmed the reduced specifications and linked them to the export restrictions, which cap memory bandwidth for products sold in China at under 1.4 TB/s.

Rumors also suggest that NVIDIA may soon release another RTX 50-series Blackwell GPU, possibly named the RTX 5080 Super or RTX 5080 Ti. It is expected to carry 24 GB of memory, which would give buyers more options while staying within similar export guidelines. Together, these developments show NVIDIA complying with international trade policy while trying to remain competitive. The RTX 5090D is expected to begin shipping between late July and early August.