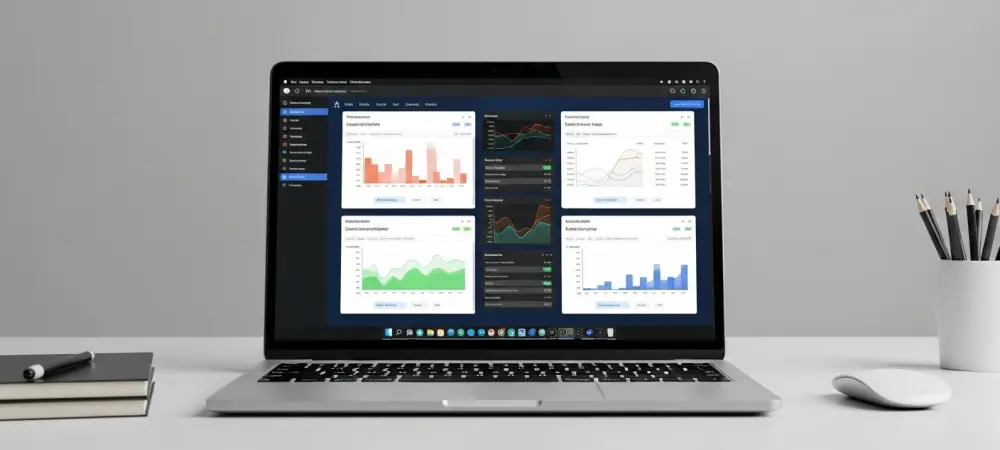

The long-held assumption that a data scientist’s primary tool must be a monument to raw graphical power is rapidly becoming a relic of a bygone era in computing. The modern data science laptop represents a significant advancement in mobile computing for technical professionals, reflecting a deeper understanding of real-world workflows. This review will explore the evolution of this technology, its key hardware components, performance benchmarks in real-world scenarios, and the impact this shift has had on professional workflows. The purpose of this review is to provide a thorough understanding of the current capabilities of these machines and their potential future development, guiding professionals to make informed hardware choices.

The Shifting Paradigm of Data Science Hardware

The principles guiding the design of today’s data science laptops signify a major industry pivot. The former obsession with a GPU-centric architecture has given way to a more balanced and practical system, a change driven by the maturation of the data science field itself. With the widespread adoption of cloud computing for resource-intensive model training, the role of the personal laptop has been fundamentally redefined.

No longer expected to be a self-contained supercomputer, the modern data science laptop now serves as a powerful and highly responsive workstation. Its primary duties encompass the bulk of a data scientist’s day: data ingestion, cleaning, preprocessing, exploratory analysis, and iterative modeling. In this context, system stability, multitasking fluidity, and rapid responsiveness are the new metrics of performance, displacing the singular focus on teraflops of graphical processing power.

Deconstructing the Modern Data Science Machine

The Primacy of the Central Processing Unit (CPU)

A powerful, multi-core CPU has reclaimed its place as the most critical component for the vast majority of data science tasks. The daily work of a data professional (wrangling data with libraries like Pandas, performing feature engineering, and running statistical analyses) is largely CPU-bound, and much of it can be parallelized across cores. Pandas itself executes most operations on a single thread, so strong single-core speed matters alongside core count. A strong multi-core processor, such as an Intel Core Ultra 9, directly accelerates these workloads, reducing wait times and enabling a more fluid, iterative cycle of experimentation.

This acceleration is not a minor convenience but a core driver of productivity. When a data scientist can execute complex data transformations or build preliminary models in seconds rather than minutes, the entire workflow becomes more dynamic. Consequently, investing in a top-tier CPU offers a more direct and consistent return on investment for general data science productivity than any other single component.
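To make the multi-core argument concrete, the following is a minimal sketch of how a CPU-bound job can be split across cores using only the Python standard library. The function names (`sum_of_squares`, `split_into_chunks`, `parallel_sum_of_squares`) are hypothetical and the workload is a stand-in for a real per-partition transformation; it is an illustration under those assumptions, not a benchmark.

```python
from concurrent.futures import ProcessPoolExecutor
import os


def sum_of_squares(chunk):
    """CPU-bound stand-in for a per-partition data transformation."""
    return sum(x * x for x in chunk)


def split_into_chunks(values, n_chunks):
    """Split a list into n_chunks contiguous parts of near-equal size."""
    size, remainder = divmod(len(values), n_chunks)
    chunks, start = [], 0
    for i in range(n_chunks):
        # The first `remainder` chunks each take one extra element.
        end = start + size + (1 if i < remainder else 0)
        chunks.append(values[start:end])
        start = end
    return chunks


def parallel_sum_of_squares(values, workers=None):
    """Process one chunk per worker process and combine the partial sums."""
    workers = workers or os.cpu_count() or 1
    chunks = split_into_chunks(values, workers)
    with ProcessPoolExecutor(max_workers=workers) as pool:
        return sum(pool.map(sum_of_squares, chunks))
```

When run as a script, `parallel_sum_of_squares(list(range(1_000_000)))` should be called from under an `if __name__ == "__main__":` guard, since some platforms start worker processes by re-importing the main module. Separate processes are used rather than threads because pure-Python CPU-bound code does not normally run in parallel across threads.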

The Critical Role of Random-Access Memory (RAM)

For a modern data scientist, ample and stable RAM is the non-negotiable foundation of a productive workflow. As datasets grow in size and complexity, the ability to load and manipulate them entirely in memory is crucial for performance. The previous baseline of 16GB is no longer sufficient; 32GB has emerged as the new standard, providing the necessary headroom to avoid performance-killing swaps to disk.

This requirement extends beyond handling a single large dataset. A typical workflow involves running multiple applications simultaneously: an IDE like VS Code, several Jupyter notebooks, a database client, and numerous browser tabs for research. Sufficient RAM ensures that these tools can operate in concert without causing system slowdowns or crashes, preserving a state of flow and preventing costly interruptions during complex analytical tasks.
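A rough back-of-the-envelope calculation shows why the jump from 16GB to 32GB matters. The sketch below assumes a purely numeric table stored as 8-byte values (the size of a 64-bit float); the multiplier for intermediate working copies and the memory reserved for the operating system and other applications are illustrative assumptions, not measured figures.

```python
def estimate_table_gib(rows, numeric_columns, bytes_per_value=8):
    """Approximate in-memory size of a numeric table in GiB."""
    return rows * numeric_columns * bytes_per_value / 2**30


def fits_in_memory(table_gib, ram_gib, working_copies=3, reserved_gib=8):
    """Check whether the table and its working copies fit in available RAM.

    working_copies and reserved_gib are assumed values: transformations
    often create temporary copies, and the OS, IDE, notebooks, and
    browser need memory of their own.
    """
    return table_gib * working_copies <= ram_gib - reserved_gib


# Example: 50 million rows by 20 numeric columns, about 7.45 GiB.
table = estimate_table_gib(50_000_000, 20)
print(round(table, 2), fits_in_memory(table, 16), fits_in_memory(table, 32))
```

Under these assumptions the example table does not fit comfortably on a 16GB machine but does on a 32GB one. Real footprints vary with column types, and string columns in particular can be much larger.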

The Specialized Function of the Graphics Processing Unit (GPU)

The role of the Graphics Processing Unit (GPU) has been re-contextualized from a universal necessity to a highly specialized tool. For professionals working at the cutting edge of deep learning, training complex neural networks with frameworks like PyTorch and TensorFlow, or running large-scale simulations, a powerful GPU like an NVIDIA RTX 50-series remains indispensable. These tasks are specifically designed to leverage the massive parallel processing capabilities of a modern graphics card.

However, it is crucial to recognize that these workflows represent a fraction of the data science landscape. Most general data analysis, classical machine learning, and business intelligence tasks are CPU-bound and derive little to no benefit from a high-end GPU. Therefore, an over-investment in a top-tier GPU often represents an inefficient allocation of resources, potentially compromising the budget for a better CPU or more RAM, which would provide broader performance benefits.
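Because a GPU is optional for most of this work, code written on a laptop typically needs to run with or without one. The sketch below shows one way to select a compute device with a CPU fallback. The helper name `pick_device` is hypothetical, and it assumes that PyTorch may or may not be installed on the machine.

```python
import importlib.util


def pick_device():
    """Return "cuda", "mps", or "cpu" depending on what is available."""
    # Without PyTorch installed there is nothing to accelerate.
    if importlib.util.find_spec("torch") is None:
        return "cpu"
    try:
        import torch
    except ImportError:
        return "cpu"
    if torch.cuda.is_available():
        return "cuda"  # NVIDIA GPU with a working CUDA setup
    mps = getattr(torch.backends, "mps", None)
    if mps is not None and mps.is_available():
        return "mps"  # Apple silicon GPU backend
    return "cpu"


print(pick_device())
```

A script written this way can be developed on a CPU-only laptop and then run unchanged on a GPU-equipped cloud instance.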

Essential Foundations: SSD and Cooling

The performance of a system is only as strong as its weakest link, making supporting hardware like the Solid-State Drive (SSD) and cooling system critical. A fast NVMe SSD is the baseline for a responsive machine, ensuring near-instantaneous boot times, rapid application loading, and swift data access. This responsiveness underpins the entire user experience, minimizing friction in day-to-day operations.

Equally important, though often overlooked, is a robust thermal management system. High-performance CPUs and GPUs generate significant heat under load, and without effective cooling, they will engage in thermal throttling—intentionally reducing their clock speeds to prevent damage. A well-designed cooling solution ensures that the laptop can sustain its peak performance during long-running data processing jobs or model compilations, providing consistency and reliability when it matters most.
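One simple way to look for throttling is to repeat a fixed workload and check whether later rounds take longer than earlier ones. The following is a minimal sketch of that idea; the function names are hypothetical, and the single-threaded loop is far too light to heat a modern CPU on its own. A meaningful test would load every core for many minutes.

```python
import time


def busy_work(n=200_000):
    """Fixed amount of CPU work, identical on every round."""
    total = 0
    for i in range(n):
        total += i * i
    return total


def time_rounds(rounds=6, n=200_000):
    """Run the same workload repeatedly and record each duration in seconds."""
    durations = []
    for _ in range(rounds):
        start = time.perf_counter()
        busy_work(n)
        durations.append(time.perf_counter() - start)
    return durations


def slowdown_ratio(durations):
    """Mean duration of the later half divided by that of the earlier half."""
    if len(durations) < 2:
        raise ValueError("need at least two timed rounds")
    half = len(durations) // 2
    early = sum(durations[:half]) / half
    late = sum(durations[half:]) / (len(durations) - half)
    return late / early


print(slowdown_ratio(time_rounds()))
```

A ratio that stays near 1.0 over a long run suggests the machine is holding its clock speeds, while a ratio that climbs well above 1.0 points to throttling or to interference from other processes.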

Emerging Trends and Innovations for 2026

The hardware landscape continues to evolve, with the most significant trend being an industry-wide shift toward practicality and workflow optimization over the pursuit of raw, often theoretical, specifications. Manufacturers are increasingly designing laptops that excel under the typical, bursty workloads of data analysis and experimentation rather than just for marathon benchmarking sessions. This philosophy prioritizes stability and sustained performance in real-world conditions.

A key innovation driving this trend is the rise of processors with dedicated AI accelerators, or Neural Processing Units (NPUs). These specialized cores are designed to handle low-power machine learning inference tasks with extreme efficiency, freeing up the CPU and GPU for more demanding work. This move toward heterogeneous computing architectures signals a future where laptops are not just powerful, but intelligently optimized for the specific nature of data-centric tasks.

Top Laptops for Diverse Data Science Workflows

The Power User: Heavy Local Training

For the niche of professionals who continue to perform computationally intensive model training or complex simulations locally, machines like the HP Omen 16 Max are purpose-built. The Omen 16 Max combines a high-end Intel Core Ultra 9 processor with a top-tier NVIDIA RTX 5080 GPU and 32GB of DDR5 RAM. This combination is well suited to accelerating deep learning frameworks and handling large datasets, while its robust cooling system is essential for maintaining peak performance during these demanding, long-running tasks.

The Balanced Professional: High-Performance All-Rounder

The MSI Vector 16 HX AI stands out as the ideal choice for professionals requiring significant power for serious machine learning experimentation without the immobility of a full desktop replacement. It strikes a masterful balance with its powerful Core Ultra 7 CPU and a capable RTX 5070 Ti GPU. This configuration provides excellent multi-core performance for data wrangling while offering substantial GPU acceleration for a versatile workflow that includes both traditional ML and deep learning.

The Daily Driver: Reliable and Stable Workhorse

Dependable workhorses like the Lenovo LOQ 2025 are designed for the mainstream data science professional whose primary concerns are reliability and consistent performance. The LOQ prioritizes a strong CPU/RAM combination, featuring an Intel Core i7-14700HX and 32GB of RAM, over a top-of-the-line GPU. Its emphasis on thermal stability supports an uninterrupted workflow, making it a dependable tool for day-in, day-out data analysis and modeling.

The Mobile Maverick: Portability and Efficiency

The Apple MacBook Pro 14-inch remains a premier choice for data scientists who prioritize mobility, exceptional battery life, and a polished user experience. Its unified memory architecture, in which the CPU and GPU share a single pool of memory, provides remarkable efficiency for data-heavy Python workflows, and its performance per watt is exceptional. While not designed for heavy local training, its strengths make it an ideal companion for a cloud-centric workflow, allowing for seamless development and analysis on the go.

The Aspiring Analyst: Entry-Level and Learning Rigs

For students and early-career professionals, practical and affordable entry points like the HP Victus and ASUS TUF Gaming A15 offer a smooth on-ramp. The HP Victus, with its AMD Ryzen 5 CPU and 16GB of upgradeable RAM, provides a solid foundation for learning core skills like Python and SQL. In contrast, the ASUS TUF Gaming A15 emphasizes durability and CPU power with its Ryzen 7 processor, making it a reliable option for data preprocessing and light ML tasks without a major financial outlay.

Navigating Challenges and Trade-offs

The primary challenge for consumers remains making a sound investment that aligns with their actual needs. A significant risk is over-investing in underutilized components, most notably a high-end GPU that sits idle during CPU-bound data manipulation tasks. This misallocation of funds often comes at the expense of more critical components like RAM or a faster CPU, leading to a system that is unbalanced for its intended purpose.

Furthermore, every professional must navigate the critical trade-offs between raw processing power, portability, battery life, and budget. The most powerful machines are often the heaviest and have the shortest battery life, while ultra-portable devices may lack the thermal headroom for sustained performance. The technical challenge for manufacturers, and the purchasing decision for buyers, comes down to finding the balance that best supports an individual’s specific professional workflow.

The Future of Mobile Data Science Workstations

The trajectory of mobile workstation technology points toward greater intelligence and specialization. The integration of on-chip AI accelerators will become standard, offloading common machine learning tasks to highly efficient, dedicated silicon. This will allow the main CPU and GPU cores to focus on the heavy lifting, leading to systems that are both more powerful and more energy-efficient.

Moreover, the synergy between powerful local machines and scalable cloud platforms will continue to deepen, defining the ideal data science ecosystem. Laptops will be further optimized for the development, experimentation, and visualization phases of the workflow, while the cloud will handle large-scale training and deployment. This symbiotic relationship will drive the long-term specialization of hardware, with laptops becoming even more precisely tailored to specific analytical roles and workflows.

Final Verdict: Prioritizing Workflow Over Specifications

This review ultimately demonstrates that the best data science laptop is not the one with the highest numbers on a spec sheet, but a balanced system carefully matched to an individual’s specific, day-to-day workflow. The central finding is that a practical, well-rounded machine delivers greater productivity than one chasing maximum, but often theoretical, benchmark performance. A machine that prioritizes a powerful multi-core CPU, a minimum of 32GB of stable RAM, and an effective thermal management system provides far greater value and a smoother professional experience. For the majority of data scientists, these core components are the true pillars of productivity, making a balanced system the most sensible investment.