The seamless integration of AI-generated content into our daily information streams has quietly dismantled the foundational assumption that what we see and hear is a reflection of objective reality. What was once a philosophical concern has become a practical, daily challenge, as sophisticated artificial intelligence now crafts convincing text, images, audio, and video with alarming ease. This surge in synthetic media has created a crisis of authenticity, rendering traditional methods of verification obsolete and forcing a complete reevaluation of how we establish trust in the digital realm. The response to this crisis is not a simple software patch but a fundamental paradigm shift toward a more holistic and evidence-based method of analysis known as multimodal AI detection.

When Seeing, Hearing, and Reading Are No Longer Believing

The scale of the synthetic media challenge is no longer theoretical: a reported 85% of cybersecurity experts link the rise of generative AI to a significant surge in cyberattacks, turning the digital landscape into a minefield of sophisticated deception. The sheer volume of AI-generated content, from automated social media bots spreading disinformation to hyper-realistic profile pictures on professional networking sites, overwhelms the natural human capacity for skepticism. This saturation ensures that synthetic media is not an occasional novelty but a constant presence, subtly shaping perceptions, influencing decisions, and eroding the very concept of a shared reality.

This relentless wave of synthetic content has precipitated a profound erosion of trust across all sectors of society. When a political candidate can be convincingly impersonated in a deepfake video or a respected news outlet’s style can be perfectly mimicked by a language model, the public’s confidence in established institutions falters. The issue extends beyond public discourse into personal security, where voice-cloning scams can defraud families and AI-penned phishing emails can bypass even wary employees. This pervasive uncertainty necessitates a fundamental change in our approach, moving from passive consumption toward active, tool-assisted verification.

The Unseen Threat: Why Old Defenses Fail Against New Fakes

The first generation of AI detection tools emerged from a text-only world, designed primarily to address academic concerns about AI-written essays. These early systems focused on identifying statistical anomalies in language, analyzing metrics like “perplexity,” which measures the predictability of word choices, and “burstiness,” which examines variations in sentence length and complexity. For a time, these linguistic fingerprints were reliable indicators of machine generation, as early models tended to produce text that was unnaturally uniform and predictable. However, the exponential advancement of AI has rendered these methods largely obsolete, as modern language models have mastered the art of human-like nuance, stylistic variation, and even intentional imperfection.
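To make the two metrics concrete, the following is a minimal sketch rather than how any particular detector works. It treats burstiness as the coefficient of variation of sentence length, and it approximates perplexity with a toy unigram model. Both choices are simplifying assumptions: production tools typically score text with a large language model's token probabilities, and the function names here are illustrative only.

```python
import math
import re
from collections import Counter
from statistics import mean, pstdev


def sentence_lengths(text: str) -> list[int]:
    """Split on ., ! and ? and count the words in each non-empty sentence."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    return [len(s.split()) for s in sentences]


def burstiness(text: str) -> float:
    """Coefficient of variation of sentence length (std / mean).

    Values near 0 mean very uniform sentences; larger values mean more variation.
    """
    lengths = sentence_lengths(text)
    if len(lengths) < 2:
        return 0.0
    return pstdev(lengths) / mean(lengths)


def unigram_perplexity(text: str, reference: str) -> float:
    """Perplexity of `text` under an add-one-smoothed unigram model of `reference`.

    Assumption: a unigram model stands in for the language model a real
    detector would use. Lower perplexity means more predictable word choices.
    """
    tokens = text.lower().split()
    if not tokens:
        raise ValueError("text must contain at least one word")
    ref_tokens = reference.lower().split()
    counts = Counter(ref_tokens)
    vocab_size = len(counts) + 1  # one extra slot for unseen words
    total = len(ref_tokens)
    log_prob = sum(
        math.log((counts[t] + 1) / (total + vocab_size)) for t in tokens
    )
    return math.exp(-log_prob / len(tokens))
```

Under this definition, a passage made of three equally long sentences has a burstiness of exactly 0, while a passage that mixes a one-word sentence with a long one scores much higher. That uniformity is the kind of signal early tools relied on.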

The consequences of this technological leap extend far beyond the classroom, creating high-stakes threats that text-only tools are not equipped to handle. The modern danger lies in sophisticated, multi-layered deceptions, such as financial scams in which a CEO's cloned voice authorizes a wire transfer, or disinformation campaigns powered by deepfake videos of public figures. These scenarios expose the critical weakness of unimodal defenses: each one inspects a single format and misses the rest. In this new environment, malicious actors construct entire “synthetic media ecosystems,” where a fake image, a generated voice message, and AI-written text are woven together to create a cohesive and dangerously believable false narrative. Such a threat can only be countered by a system capable of analyzing every element in concert.

Deconstructing the Deception: The Core Principles of Multimodal Analysis

In response to the fragmented reality of synthetic media, multimodal detection offers a unified analytical framework. This approach is defined by its ability to process and correlate signals across text, image, audio, and video formats within a single, integrated system. Instead of applying a one-size-fits-all algorithm, it employs format-specific analysis to hunt for distinct digital fingerprints left behind by the generation process. This could involve identifying subtle inconsistencies in lighting and shadow in a synthetic image, detecting unnatural frequency patterns in a cloned voice, or flagging microscopic artifacts in video compression that betray a deepfake. The power of this methodology lies in its holistic view: the strongest evidence of manipulation often sits in the dissonances between media types, such as a voice track that does not match the speaker's lip movements.

This advanced approach also fundamentally reframes the objective of detection, moving away from a simplistic binary of “real versus fake” toward a more nuanced, probabilistic assessment. Trust in the digital age is not an absolute state but a layered and contextual process; the level of scrutiny required for a social media meme is vastly different from that needed for evidence in a legal proceeding. Multimodal systems support this reality by providing a spectrum of evidence rather than a definitive verdict. The core question evolves from “Is it fake?” to “Where is the evidence of synthetic generation, and how compelling is it?” This allows users to weigh the indicators based on the stakes of their specific situation.
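The idea of stakes-dependent scrutiny can be sketched in a few lines. This is an illustration under stated assumptions, not a description of any real product: the context names, the escalation thresholds, and the band boundaries are all hypothetical values chosen for the example.

```python
from dataclasses import dataclass

# Hypothetical escalation thresholds: the estimated probability of synthetic
# generation at which content in each context deserves closer human review.
# Higher stakes mean a lower tolerance for doubt.
ESCALATION_THRESHOLDS = {
    "social_meme": 0.90,
    "news_report": 0.50,
    "legal_evidence": 0.10,
}


@dataclass(frozen=True)
class Assessment:
    probability: float  # estimated probability of synthetic generation
    band: str           # coarse description of evidence strength
    escalate: bool      # whether this context calls for closer review


def evidence_band(probability: float) -> str:
    """Map a probability onto a coarse, human-readable evidence band."""
    if probability < 0.25:
        return "weak"
    if probability < 0.75:
        return "moderate"
    return "strong"


def assess(probability: float, context: str) -> Assessment:
    """Pair the evidence band with a context-dependent escalation decision."""
    if not 0.0 <= probability <= 1.0:
        raise ValueError("probability must be between 0 and 1")
    threshold = ESCALATION_THRESHOLDS[context]
    return Assessment(
        probability, evidence_band(probability), probability >= threshold
    )
```

With these example thresholds, the same score of 0.4 is rated "moderate" in both cases, but it is escalated only when the content is offered as legal evidence and not when it is a social media meme.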

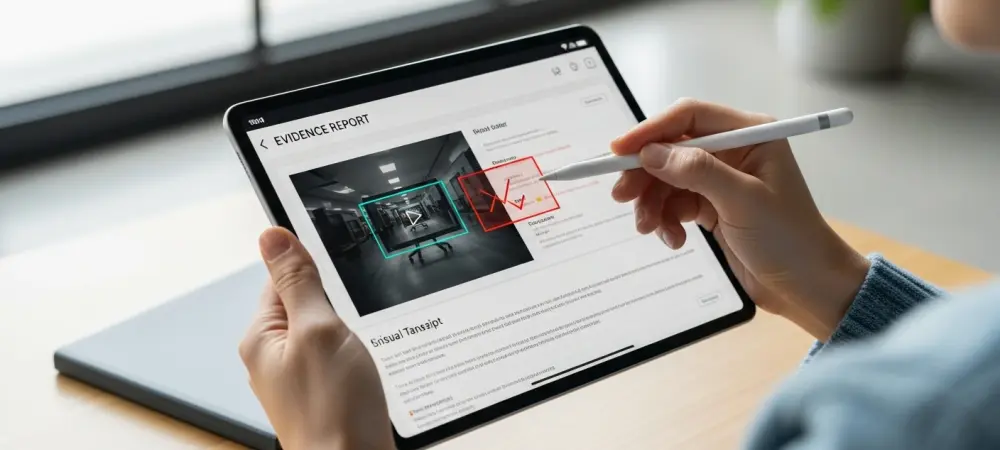

Central to this new paradigm is the principle of explainability. Early detection tools often frustrated users by delivering opaque probability scores—a “98% AI-generated” label with no justification—which fostered confusion and undermined confidence in the technology itself. Modern multimodal systems reject this “black box” approach, instead championing transparent reporting that shows the user precisely why a piece of content was flagged. By highlighting unnatural phrases in text, generating heatmaps over anomalous regions in an image, or pinpointing inconsistent frames in a video, these tools empower human critical thinking. They transform the user from a passive recipient of a verdict into an active investigator equipped with actionable evidence.
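One way to picture the difference between an opaque score and an explainable report is as a data structure. The sketch below is hypothetical: the class names, fields, and sample findings are invented for illustration and do not reflect the output format of any specific tool.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Evidence:
    modality: str     # "text", "image", "audio", or "video"
    location: str     # where the signal was found, e.g. a region or frame range
    description: str  # plain-language reason the region was flagged
    strength: float   # 0.0 (negligible) to 1.0 (very strong)


@dataclass
class DetectionReport:
    score: float  # overall probability-like score
    evidence: list[Evidence] = field(default_factory=list)

    def explain(self) -> list[str]:
        """Return one readable line per finding, strongest first."""
        ranked = sorted(self.evidence, key=lambda e: e.strength, reverse=True)
        return [
            f"[{e.modality}] {e.location}: {e.description} "
            f"(strength {e.strength:.2f})"
            for e in ranked
        ]


# Invented sample findings, for illustration only.
report = DetectionReport(
    score=0.82,
    evidence=[
        Evidence("text", "paragraph 2", "unusually uniform sentence structure", 0.55),
        Evidence("video", "frames 120-180", "lip movement out of sync with audio", 0.90),
        Evidence("image", "left ear region", "pixel-level inconsistencies", 0.70),
    ],
)

for line in report.explain():
    print(line)
```

The bare score is still present, but every line of output points to a place the user can inspect. That is the difference between receiving a verdict and being able to investigate it.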

In Practice: How Modern Tools Are Building a New Foundation for Trust

The practical application of these principles is already reshaping the digital trust and safety landscape, as exemplified by platforms like isFake.ai. This new generation of tools operationalizes multimodal analysis by providing an evidence-based report that demystifies the detection process. An analysis might reveal text highlighted for its unusually consistent sentence structure, an image heatmap showing pixel-level incongruities in a person’s earlobe, and flagged video frames where lip movements are subtly out of sync with the audio. By making these almost imperceptible synthetic signals visible and understandable, such platforms give users tangible proof to support their evaluation.

This evidence-first approach redefines the relationship between the user and the technology. The tool is no longer positioned as an infallible automated judge but as a sophisticated interpretive aid designed to augment human intuition and expertise. Its purpose is not to deliver an unchallengeable final say but to surface a clear set of signals that can guide an informed decision. This collaborative model respects the user’s capacity for judgment, providing them with the analytical leverage needed to dissect complex digital content. The goal is empowerment, not automation, fostering a more resilient and discerning online populace.

Navigating the Future: A Practical Framework for Digital Authenticity

To effectively leverage these new capabilities, users must adopt an investigative mindset, using detection tools as a starting point for critical inquiry, not an endpoint. The first strategy is to focus on the “why” behind a detection score; rather than accepting a percentage at face value, one should scrutinize the specific evidence provided, such as inconsistent lighting in a photo or an unnatural, repetitive cadence in an audio file. A second, crucial strategy is to corroborate findings across modalities, questioning how the different media elements within a piece of content support or contradict one another. A pristine, high-resolution image paired with poorly written, simplistic text, for instance, should raise immediate suspicion.
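The cross-modal corroboration step can be approximated with a very simple heuristic: compare the per-modality scores and flag the content when they diverge sharply. The function name, the dictionary layout, and the `max_spread` default below are assumptions made for this sketch. A real system would likely use richer consistency checks than a single spread value.

```python
def corroborate(scores: dict[str, float], max_spread: float = 0.4) -> dict:
    """Compare per-modality scores and flag disagreement between them.

    `scores` maps a modality name to an estimated probability of synthetic
    generation. A wide spread means the modalities tell different stories,
    which is itself worth investigating.
    """
    if len(scores) < 2:
        raise ValueError("need at least two modalities to corroborate")
    highest = max(scores, key=scores.get)
    lowest = min(scores, key=scores.get)
    spread = scores[highest] - scores[lowest]
    return {
        "most_suspicious": highest,
        "least_suspicious": lowest,
        "spread": round(spread, 3),
        "modalities_disagree": spread > max_spread,
    }
```

For example, calling `corroborate({"image": 0.1, "text": 0.8, "audio": 0.2})` identifies the text as the most suspicious element and marks the modalities as disagreeing. That result is a prompt to look more closely at how the pieces were assembled, not a conclusion.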

Finally, these technological findings must always be situated within a broader evaluation of context and source. A tool’s analysis is a single, powerful data point, but it should be weighed alongside the publisher’s reputation, the purpose of the content, and its alignment with other reliable sources. Looking ahead, building a truly resilient digital ecosystem requires recognizing that technology is only one piece of a much larger puzzle. An effective trust and safety infrastructure must combine the power of multimodal detection with emerging standards for media provenance tracking, robust editorial oversight from credible institutions, and widespread public education initiatives focused on digital literacy. This multi-pronged approach is essential for cultivating a society capable of navigating an increasingly synthetic world.
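Weighing a tool's finding against the reputation of the source can be framed as a small exercise in Bayes' rule. The sketch below assumes a detector with a known true-positive rate and false-positive rate, and a prior belief about how likely content from a given source is to be synthetic. All of the numbers are hypothetical and chosen only to show how the same flag leads to different conclusions in different contexts.

```python
def posterior_synthetic(prior: float, tpr: float, fpr: float) -> float:
    """P(synthetic | detector flagged the content), by Bayes' rule.

    prior: belief that the content is synthetic before running the tool,
           informed by source and context (an assumption set by the user)
    tpr:   probability the detector flags genuinely synthetic content
    fpr:   probability the detector flags authentic content by mistake
    """
    for name, value in (("prior", prior), ("tpr", tpr), ("fpr", fpr)):
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must be between 0 and 1")
    flagged = tpr * prior + fpr * (1.0 - prior)
    if flagged == 0.0:
        raise ValueError("the detector can never flag content with these rates")
    return tpr * prior / flagged


# Same hypothetical detector, two different sources.
reputable = posterior_synthetic(prior=0.01, tpr=0.90, fpr=0.05)
anonymous = posterior_synthetic(prior=0.50, tpr=0.90, fpr=0.05)
print(f"reputable outlet:  {reputable:.2f}")
print(f"anonymous account: {anonymous:.2f}")
```

With these example rates, a flag on content from a source that is rarely wrong leaves the probability of synthetic generation at roughly 15%, while the same flag on content from an unknown account pushes it to roughly 95%. The detector's output matters, but so does everything known about where the content came from.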

The journey toward restoring digital trust has been marked by a necessary evolution from simple, single-format checks to a more sophisticated, holistic understanding of authenticity. Because no technology can offer perfect, future-proof certainty, the goal has shifted from eliminating deception to managing it. By embracing multimodality and prioritizing explainability, the new generation of detection tools does not promise an end to uncertainty. Instead, it offers something far more valuable: the means to make that uncertainty visible, understandable, and manageable, ultimately equipping society with the clarity and confidence needed to navigate the complexities of the digital age.