As technology advances, data collection has become critical to companies' success. It allows businesses to provide targeted and personalized services to their clients. However, data collection also raises concerns about privacy violations. Companies must comply with regulations and protect their customers' private information. This is particularly important when using AI language models like ChatGPT, which present new challenges for privacy and data security.

The Importance of Privacy Compliance in the Age of Advanced Technology and Data Collection

Even as technology advances, privacy compliance remains critical. Hackers and data thieves use sophisticated techniques to infiltrate company databases and expose customers' personal information, which can lead to reputational damage and legal penalties. The stakes are even higher with AI systems such as ChatGPT, which can analyze vast amounts of data and generate human-like content without human intervention. Companies must therefore adopt comprehensive privacy compliance measures to protect their customers' privacy and prevent data breaches.

ChatGPT and Its Implications for Privacy

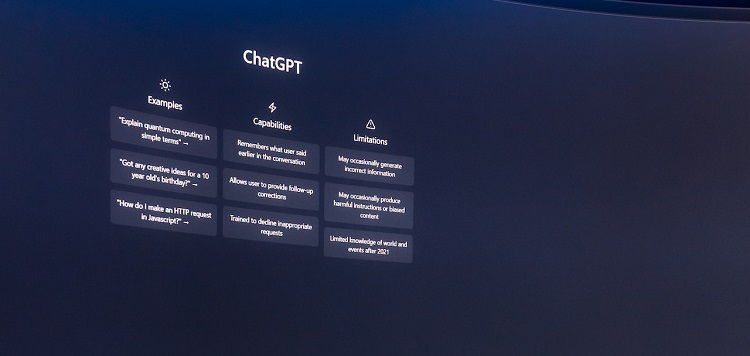

Like any technology, ChatGPT has significant implications for privacy if it is not used properly. The model was trained on massive amounts of data to generate human-like content, which makes it a valuable tool for businesses seeking to improve communication, enhance customer experiences, and increase efficiency. However, it also poses new risks to consumer privacy. Whatever users enter into ChatGPT, including personal data copied from emails, chat histories, or social media accounts, is sent to the service provider and may be stored, so such data must be handled with care.

Using ChatGPT in a privacy-preserving way starts with its inputs: any text data should be anonymized before it is entered into the model. Businesses that train or fine-tune language models should also use a wide range of diverse datasets to reduce bias and discrimination. Used this way, ChatGPT poses a lower risk of data breaches and better protects user privacy.
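As a minimal sketch of anonymizing text before it reaches the model, the hypothetical `redact` function below replaces email addresses and phone numbers with typed placeholders using regular expressions. The patterns in `PII_PATTERNS` are illustrative assumptions, not a complete PII detector: names (such as "Jane" in the example) and postal addresses pass through untouched, and the phone pattern will also match some dates, which is why real deployments usually add named-entity recognition or a dedicated PII-detection tool.

```python
import re

# Hypothetical, illustrative patterns only; real PII detection needs far
# broader coverage (names, addresses, national ID formats, and so on).
PII_PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "PHONE": re.compile(r"\+?\d[\d\s().-]{7,}\d"),
}


def redact(text: str) -> str:
    """Replace each matched PII span with a typed placeholder such as [EMAIL]."""
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


prompt = "Summarize this: Jane (jane.doe@example.com, +1 555-123-4567) wants a refund."
print(redact(prompt))
# Summarize this: Jane ([EMAIL], [PHONE]) wants a refund.
```

Only the redacted string would then be sent to ChatGPT; the placeholders keep the sentence readable for the model while the raw identifiers stay inside the company's own systems.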

To use ChatGPT responsibly, it helps to understand what data the model was trained on and how that data was gathered. The model is trained on a vast body of text, ranging from chat logs to news articles, much of it collected from publicly available sources around the world. Training data should be impartial, diverse, and relevant to the intended application, and its collection must conform to privacy regulations, because publicly available text can still contain personal information.

Compliance with Regulations to Prevent Data Breaches and Protect User Privacy

Organizations that use AI language models such as ChatGPT must comply with privacy regulations to prevent data breaches and protect user privacy. These regulations, such as the EU's General Data Protection Regulation (GDPR) and the California Consumer Privacy Act (CCPA), vary by country and region and dictate how personal data may be collected, stored, and used. Compliance helps organizations avoid reputational damage, legal repercussions, and financial penalties.

The Risks of Bias and Discrimination in ChatGPT

ChatGPT's output is shaped by its training data, which makes it susceptible to bias and discrimination. If the model encounters biased data during training, it can reproduce that bias in the content it generates. As a result, it could produce offensive or discriminatory content that harms certain groups of people. Proactively monitoring the model's outputs for bias and discrimination helps prevent such incidents.
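One common way to monitor outputs for bias is counterfactual probing: send the model the same prompt with only a demographic term swapped, then compare how the responses differ. The sketch below is a toy version of that idea, not a production audit. `TEMPLATE`, `GROUPS`, `NEGATIVE_TERMS`, and the 0.05 tolerance are hypothetical choices, a keyword count stands in for a proper classifier or human review, and `fake_model` is a deliberately biased stand-in for a real API call.

```python
from typing import Callable, Dict, List

# Hypothetical probe: the same prompt with only the group term swapped.
TEMPLATE = "Write a one-sentence job reference for a software engineer who is {group}."
GROUPS = ["male", "female", "over 50", "under 30"]
# Toy negative-word list; a real audit would use a vetted classifier or human review.
NEGATIVE_TERMS = {"unreliable", "emotional", "slow", "inexperienced"}


def negative_rate(text: str) -> float:
    """Fraction of words in text that appear in NEGATIVE_TERMS."""
    words = [w.strip(".,!?").lower() for w in text.split()]
    return sum(w in NEGATIVE_TERMS for w in words) / max(len(words), 1)


def probe_bias(generate: Callable[[str], str], samples: int = 5) -> Dict[str, float]:
    """Average negative-word rate of the model's outputs for each group."""
    scores: Dict[str, float] = {}
    for group in GROUPS:
        prompt = TEMPLATE.format(group=group)
        outputs: List[str] = [generate(prompt) for _ in range(samples)]
        scores[group] = sum(negative_rate(o) for o in outputs) / samples
    return scores


def flag_disparities(scores: Dict[str, float], tolerance: float = 0.05) -> List[str]:
    """Groups scoring worse than the best-treated group by more than tolerance."""
    best = min(scores.values())
    return [g for g, s in scores.items() if s - best > tolerance]


def fake_model(prompt: str) -> str:
    """Stand-in for a real model call, deliberately biased for demonstration."""
    if "over 50" in prompt:
        return "A slow but loyal engineer."
    return "A skilled and dependable engineer."


scores = probe_bias(fake_model)
print(flag_disparities(scores))  # ['over 50']
```

Sampling several responses per group matters with a real model, because its outputs vary from call to call; a single response per group can easily over- or under-state a disparity.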

Intellectual Property Considerations with ChatGPT

ChatGPT can generate content that infringes on someone else's intellectual property rights. There is a particular risk of copyright and trademark infringement when producing highly customized content for businesses. For example, the model may generate material that includes trademarked phrases or reproduces protected design elements or images. Businesses must therefore check that content produced by ChatGPT does not violate anyone's intellectual property rights.
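A minimal sketch of an automated first pass, assuming a business keeps its own collection of protected reference texts and a list of trademarks to watch for: the hypothetical helpers below measure verbatim word n-gram overlap between a draft and each reference, and scan for listed marks. This only catches near-verbatim copying of texts you already have and is no substitute for legal review; `n = 8`, the 0.2 threshold, and the brand names `AcmeSpark` and `BrewMaster` are illustrative assumptions.

```python
from typing import Dict, Set, Tuple


def ngrams(text: str, n: int) -> Set[Tuple[str, ...]]:
    """All runs of n consecutive lowercased words in text."""
    words = text.lower().split()
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}


def overlap_ratio(generated: str, reference: str, n: int = 8) -> float:
    """Share of the generated text's n-grams that also appear verbatim in reference."""
    gen = ngrams(generated, n)
    if not gen:
        return 0.0
    return len(gen & ngrams(reference, n)) / len(gen)


def flag_overlaps(
    generated: str, references: Dict[str, str], threshold: float = 0.2, n: int = 8
) -> Dict[str, float]:
    """References whose verbatim overlap with the generated text meets the threshold."""
    ratios = {name: overlap_ratio(generated, ref, n) for name, ref in references.items()}
    return {name: r for name, r in ratios.items() if r >= threshold}


def find_trademarks(text: str, marks: Set[str]) -> Set[str]:
    """Marks occurring in text (case-insensitive substring match, so expect false positives)."""
    lowered = text.lower()
    return {m for m in marks if m.lower() in lowered}


draft = "Introducing AcmeSpark: the fastest way to brew coffee at home."
print(find_trademarks(draft, {"AcmeSpark", "BrewMaster"}))  # {'AcmeSpark'}
```

Flagged drafts would go to a human reviewer rather than being rejected automatically, since short common phrases and generic brand-like words can trigger matches that are not infringing.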

Ethical Considerations of Using AI Language Models, Including Transparency, Explainability, and Accountability

Using AI language models raises several ethical considerations, including transparency, explainability, and accountability. Companies must ensure that the content generated by ChatGPT is ethical and legally compliant, and they should be transparent with users about when and how AI-generated content is used. Although the model's internal workings are largely opaque, companies should be able to explain how it fits into their processes and who is accountable for its outputs.

Anonymization, Bias Monitoring, Diverse Data Training, Obtaining Consent, and Compliance Measures to Use ChatGPT Properly

Organizations can take several measures to ensure that ChatGPT is used properly. Anonymization, bias monitoring, diverse data training, obtaining consent, and broader compliance measures help keep the model operating within ethical and legal bounds. By adopting these measures, companies can reduce the risks associated with AI language models and protect their customers' privacy.
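Consent and compliance can be enforced in code at the point where customer data would be sent to the model. The sketch below assumes a hypothetical `ai_processing_consent` flag recorded when the data was collected: records without consent are never forwarded, and every decision is written to an audit log that can later demonstrate compliance. What counts as valid consent depends on the applicable regulation, and the lambda passed as `generate` is only a stand-in for a real ChatGPT call.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Callable, List, Optional


@dataclass
class CustomerRecord:
    customer_id: str
    text: str
    ai_processing_consent: bool  # hypothetical consent flag captured at collection time


@dataclass
class AuditEntry:
    customer_id: str
    timestamp: str
    action: str


def process_with_consent(
    record: CustomerRecord,
    generate: Callable[[str], str],
    audit_log: List[AuditEntry],
) -> Optional[str]:
    """Send a record's text to the model only if the customer consented; log either way."""
    timestamp = datetime.now(timezone.utc).isoformat()
    if not record.ai_processing_consent:
        audit_log.append(AuditEntry(record.customer_id, timestamp, "skipped: no consent"))
        return None
    result = generate(record.text)
    audit_log.append(AuditEntry(record.customer_id, timestamp, "sent to model"))
    return result


# Usage with a stand-in for a real model call.
log: List[AuditEntry] = []
records = [
    CustomerRecord("c1", "Please summarize my order history.", True),
    CustomerRecord("c2", "Please summarize my order history.", False),
]
for r in records:
    process_with_consent(r, lambda text: f"Summary of: {text}", log)
print([(e.customer_id, e.action) for e in log])
# [('c1', 'sent to model'), ('c2', 'skipped: no consent')]
```

Keeping the consent check and the audit entry in the same function means no code path can reach the model without leaving a record, which is easier to verify than checks scattered across callers.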

In the current age of advanced technology and data collection, companies must remain vigilant about privacy concerns. The use of AI language models like ChatGPT has created new challenges in privacy and data security for businesses. However, by understanding and complying with privacy, ethical, and legal requirements, companies can ensure that they effectively protect their customers’ privacy. Privacy is paramount, and using AI language models should not come at the cost of privacy. Therefore, businesses must employ the most comprehensive privacy measures possible.