The pervasive integration of artificial intelligence into enterprise workflows has created an unprecedented demand for high-quality data, forcing leaders to confront the often-neglected state of their digital information assets. As organizations race to deploy AI-driven chatbots, co-pilots, and intelligent automation services, a critical realization is dawning: the success of these sophisticated tools depends entirely on the integrity of the data they consume. This new reality demands a fundamental shift in strategy, moving data governance from a background compliance task to a core component of innovation. This guide will navigate the risks and rewards of this new era, providing an actionable blueprint for assessing and fortifying the data foundation essential for achieving reliable and secure AI-driven outcomes.

The New Imperative: Why AI Demands a Data Rethink

The “gold rush” to leverage AI has officially begun, penetrating every industry from manufacturing and healthcare to professional services and finance. C-suite leaders and their development teams are now under immense pressure to harness AI to streamline workflows, boost productivity, and unlock new competitive advantages. The allure of AI’s potential is powerful, promising to interpret context, meaning, and nuance from vast datasets with remarkable speed and accuracy. However, this transformative potential comes with a significant prerequisite that many are only now beginning to appreciate.

Before an organization can reap the rewards of AI, its leaders must conduct an introspective analysis of their entire data estate. The focus is no longer just on big data but on good data. AI models do not inherently understand the difference between a current, approved price list and an obsolete one, or a public-facing document and a confidential internal report. Consequently, the rush to deploy AI without first assessing foundational data quality is not just a strategic misstep; it is a direct path toward operational and reputational risk. The imperative now is to pause, assess, and build a solid data foundation before constructing the advanced AI architecture upon it.

The High Stakes of AI: Risks and Rewards of Data Quality

A robust data foundation is non-negotiable for any organization serious about successful AI implementation. The benefits of getting it right are substantial, extending far beyond the performance of a single algorithm. A well-governed data estate mitigates significant business risk by ensuring that automated decisions are based on accurate and current information. It also streamlines compliance, making it easier to demonstrate data provenance and audit decision-making processes for regulators. Ultimately, a strong foundation is the only way to achieve the reliable, trustworthy, and valuable AI-driven outcomes that leaders envision.

Conversely, the dangers of feeding AI systems stale, invalid, or corrupt data are severe. Poor data quality can transform a promising AI initiative into a source of business disruption. When AI models are trained on, or draw answers from, flawed information, they can produce erroneous financial calculations, provide incorrect instructions to operational teams, or generate misleading insights that lead to poor strategic decisions. These risks apply across all sectors, but in high-stakes environments like healthcare and engineering, the consequences of such failures can be catastrophic. Ignoring the quality of the underlying data is akin to building a skyscraper on sand: the structure may look impressive initially, but it is destined to collapse.

Actionable Blueprint: Fortifying Your Data for AI

Creating an AI-ready data foundation requires a disciplined, strategic approach that moves beyond ad-hoc clean-up projects. It involves embedding best practices for data governance directly into the organization’s operational fabric. The following principles provide a clear, actionable framework for this transformation, illustrated with real-world scenarios to underscore their importance. By adopting this blueprint, organizations can systematically reduce risk and build the resilient data infrastructure necessary to support safe and effective AI.

Integrate Document Governance into Your Core AI Strategy

For too long, compliance and data governance have been relegated to a separate, often underfunded, “bucket” of corporate priorities. In the age of AI, this siloed approach is no longer viable. The governance of enterprise documents—where the majority of unstructured business knowledge resides—must be an integral part of AI planning from day one. This means that activities like document classification, version control, and access governance must be given the same strategic importance as the selection of AI models and the implementation of integration tools.

This strategic shift requires a new way of thinking about budgets and priorities. Instead of viewing governance as a cost center focused on avoiding penalties, leaders must see it as a critical enabler of AI-driven value. When planning a new AI initiative, the integrity of the source content is paramount. An AI tool is only as reliable as the documents it references, making a disciplined approach to content management a prerequisite for success. Organizations that prioritize a governed data estate from the outset are better positioned to build AI systems that are not only powerful but also trustworthy and compliant.

Real-World Failure: When AI Feeds on Ungoverned Content

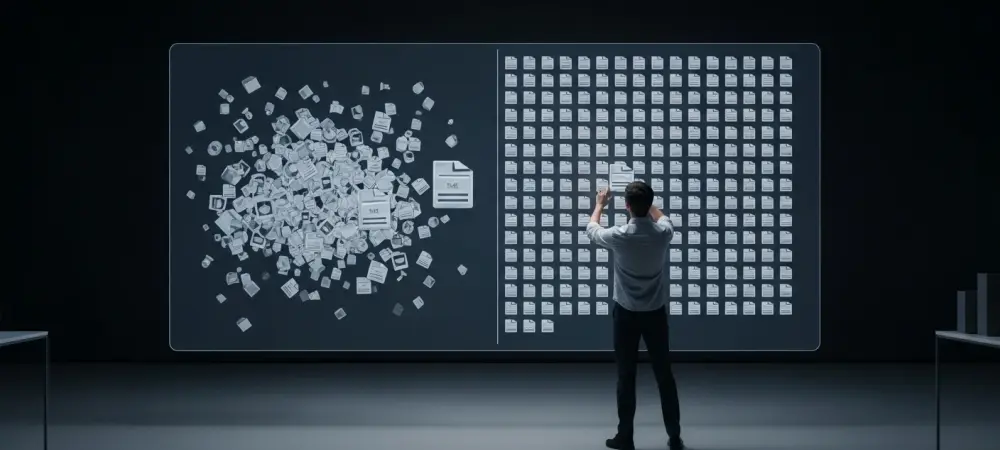

The chaos that erupts from pointing powerful AI tools at poorly managed data sources is a cautionary tale for every enterprise. Consider the typical digital landscape: critical information is scattered across old file servers, disorganized shared drives, disconnected collaboration tools, and countless email attachments. Content is often unclassified, duplicated, and poorly labeled. Over time, user permissions expand but are rarely revoked, granting entire groups access to sensitive documents they should not see.

When an AI agent is unleashed in this environment, it diligently does what it is programmed to do: it searches everything. The consequences are immediate and damaging. The AI may surface an outdated pricing sheet from an archived folder, leading a sales team to provide a customer with an incorrect quote. It might retrieve and share a confidential internal report in response to a broad query from an unauthorized user. In another scenario, it could base a critical automated decision on inaccurate information simply because a former employee’s access was never revoked from a key shared folder. These are not theoretical risks; they are the direct and predictable results of applying advanced technology to an ungoverned data foundation.
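One way to picture the missing safeguard is a filter that sits between the document store and the AI agent. The sketch below is illustrative only: the `Document` fields, the status values, and the `retrievable` function are hypothetical names, not part of any specific product, and it assumes each document already carries a lifecycle status and a list of permitted groups.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Document:
    doc_id: str
    status: str                 # hypothetical values: "current", "archived", "draft"
    allowed_groups: frozenset   # groups permitted to read this document


def retrievable(docs, user_groups):
    """Return only documents that are current AND readable by the requesting user."""
    groups = set(user_groups)
    return [
        d for d in docs
        if d.status == "current" and d.allowed_groups & groups
    ]


docs = [
    Document("pricing-2024", "current", frozenset({"sales"})),
    Document("pricing-2019", "archived", frozenset({"sales"})),
    Document("hr-performance-report", "current", frozenset({"hr"})),
]

# A sales user should see only the current price list.
visible = retrievable(docs, ["sales"])
print([d.doc_id for d in visible])
```

The filter is only as good as the metadata behind it, which is why classification and access reviews have to come before the AI rollout rather than after it.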

Start Small: Identify and Secure Your Critical Document Sets

The task of overhauling an entire enterprise data estate can feel overwhelming, often leading to inaction. A more pragmatic and effective approach is to begin by identifying the most high-value, high-risk document sets that will fuel the initial AI initiatives. Rather than attempting to boil the ocean, organizations should focus their efforts on a manageable subset of critical assets. This targeted strategy allows for tangible progress and demonstrates the value of good governance quickly.

Pinpointing these key assets involves collaborating with business leaders to identify the documents that truly matter. These often include legally binding contracts, critical standard operating procedures, detailed technical specifications, official pricing sheets, and essential product documentation. By isolating these “crown jewels,” the organization can concentrate its resources on ensuring this core information is accurate, current, and properly secured. This foundational work not only de-risks the first wave of AI projects but also creates a scalable model for expanding governance practices across the enterprise over time.

A Practical Audit: Collaborating with Business Owners

Once the most important documents are identified, the next step is to bring basic order to them through a practical audit. This process does not necessarily require new technology; rather, it demands attention, collaboration, and follow-through. It begins by sitting down with the business and domain owners—the individuals who understand the content’s context and importance—to assess the state of these critical files. This collaborative audit is the first crucial step toward establishing control.

The initial actions are foundational. First, establish clear ownership for each document set, ensuring someone is accountable for keeping the information current. Second, create simple, consistent naming conventions so users are not left guessing which file is the correct one to open. Third, implement a clear version control process that includes a definitive policy for retiring obsolete files so they stop circulating as if they were still valid. These fundamental disciplines create the clarity and order necessary for both humans and AI systems to reliably find and use the right information.
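The three checks above can be sketched as a small script run over a document inventory. This is a minimal illustration under stated assumptions: the `DocRecord` fields, the naming pattern, and the finding messages are all hypothetical, and a real inventory would come from whatever system actually holds the files.

```python
import re
from dataclasses import dataclass
from typing import Optional, Tuple

# Hypothetical convention: <type>_<name>_v<major>.<minor>.<ext>
# e.g. pricing_emea_v2.1.xlsx
NAME_PATTERN = re.compile(r"^[a-z]+_[a-z0-9-]+_v\d+\.\d+\.[a-z0-9]+$")


@dataclass
class DocRecord:
    filename: str
    doc_set: str               # logical document set the file belongs to
    owner: Optional[str]       # accountable person, or None if unassigned
    version: Tuple[int, int]   # (major, minor)
    retired: bool              # True once the file is formally withdrawn


def audit(records):
    """Return (filename, finding) pairs for ownership, naming, and versioning gaps."""
    # Highest version still in circulation for each document set.
    latest = {}
    for r in records:
        if not r.retired:
            latest[r.doc_set] = max(latest.get(r.doc_set, r.version), r.version)

    findings = []
    for r in records:
        if not r.owner:
            findings.append((r.filename, "no owner assigned"))
        if not NAME_PATTERN.match(r.filename):
            findings.append((r.filename, "name does not follow convention"))
        if not r.retired and r.version < latest[r.doc_set]:
            findings.append((r.filename, "superseded but not retired"))
    return findings


inventory = [
    DocRecord("pricing_emea_v2.1.xlsx", "pricing-emea", "alice", (2, 1), False),
    DocRecord("pricing_emea_v1.0.xlsx", "pricing-emea", "alice", (1, 0), False),
    DocRecord("Final PRICES (copy).xlsx", "pricing-emea", None, (2, 1), False),
]

for filename, finding in audit(inventory):
    print(f"{filename}: {finding}")
```

A report like this gives the business owner a concrete list to work through, which keeps the audit focused on follow-through rather than tooling.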

From Human Error to Automated Disaster: Managing Amplified Risk

In a traditional, human-driven workflow, the consequences of poor data governance unfold relatively slowly. An employee might spend time searching for the right document, accidentally use an outdated version, or share a file with the wrong person. While problematic, the impact of these individual errors is often contained. AI agents, however, repeat the same mistakes at machine speed and across every user they serve, transforming slow-moving human errors into near-instantaneous, large-scale automated disasters.

This amplification of risk fundamentally changes the stakes. An AI chatbot that surfaces an outdated procedure can misinform thousands of employees or customers in a matter of seconds. An automated system that acts on a flawed data point can trigger a cascade of incorrect financial transactions or operational decisions. This heightened threat underscores the critical need for auditable and traceable data lineages. When decisions are made automatically, organizations must be able to reconstruct precisely which document an AI system relied upon, a task that is impossible without rigorous governance.
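To make the idea of a traceable lineage concrete, the sketch below records which document, version, and exact content an automated answer relied on. It is an assumption-laden illustration: the function names and record fields are hypothetical, and a production system would also need tamper-resistant storage and retention rules that are out of scope here.

```python
import hashlib
import json
from datetime import datetime, timezone


def provenance_record(query, doc_id, version, content: bytes):
    """Build an audit entry tying an AI answer to the exact source it used."""
    return {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "query": query,
        "doc_id": doc_id,
        "version": version,
        # The hash identifies the exact bytes consulted, even if the file later changes.
        "sha256": hashlib.sha256(content).hexdigest(),
    }


def to_log_line(record):
    """Serialize a record as one JSON line, suitable for an append-only log."""
    return json.dumps(record, sort_keys=True)


record = provenance_record(
    query="What is the current EMEA list price?",
    doc_id="pricing-emea",
    version="2.1",
    content=b"example document bytes",
)
print(to_log_line(record))
```

With entries like these, reconstructing the basis of an automated decision becomes a log lookup instead of a forensic exercise.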

Incident Report: How Poor Data Governance Manifests

The consequences of failing to govern document data are not abstract; they manifest as concrete operational incidents that harm the business. In one case, a sales team relying on a chatbot for a quick answer unknowingly quoted a customer from an outdated price sheet that the AI surfaced from an old, unmanaged folder. In another, a customer support agent was inadvertently shown a confidential internal performance report that should have been restricted, which amounted to a significant internal data breach.

The risks extend beyond internal operations to regulatory compliance. Imagine a regulator asking how a specific automated decision affecting a customer was made. If the organization cannot definitively identify the source document the AI system used—because multiple versions exist with no clear audit trail—it cannot adequately answer the query. This inability to prove data provenance not only results in compliance failures and potential fines but also erodes the trust of customers, partners, and regulators alike. These incidents are the natural and foreseeable result of deploying powerful retrieval and generation tools on top of ungoverned content.

Final Verdict: Is Your Organization Ready for the AI Leap?

The critical link between a strong data foundation and successful AI adoption is undeniable. The success of an AI initiative is determined less by the sophistication of its models than by the quality and integrity of the data that fuels them. The most effective strategies begin not with a technology purchase but with an honest and thorough audit of the existing data estate. CTOs, CIOs, and business leaders who initiate these foundational audits position their organizations for sustainable success.

Start by asking fundamental questions in collaboration with domain experts: Where are the most critical documents? Who has access to them? Is there a clear owner for each key information asset? Addressing these basics first systematically reduces risk and builds the necessary trust in the data. Building an auditable, compliant, and governed data estate is the essential first step, and this disciplined approach is what paves the way toward a safe, powerful, and truly transformative AI-driven future.