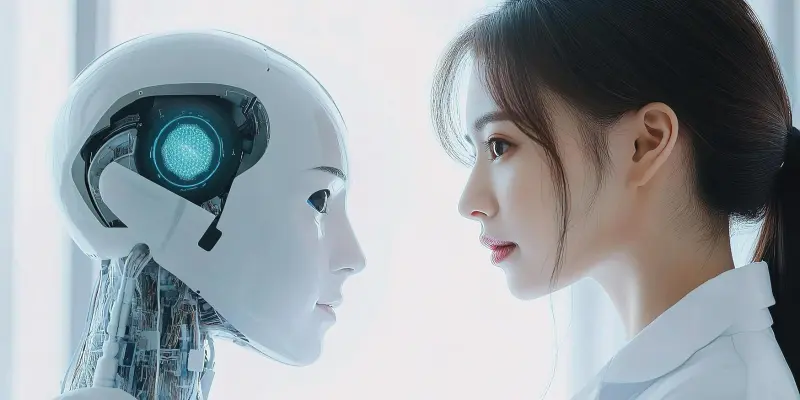

Artificial intelligence (AI) plays an increasingly important role in the workplace, particularly in consequential decisions such as hiring and promotion. One framework that can guide the ethical development of such systems is John Rawls’ “veil of ignorance.” Rawls proposed that the principles governing a society should be chosen as if no one knew what position they would occupy in it: their social standing, wealth, or natural abilities. Applied to AI, the idea asks developers and decision-makers to design systems they would accept as fair no matter which side of a decision they ended up on. Viewing AI development through this lens can help organizations reduce the risk of encoding existing societal biases into their systems.

Applying Rawls’ Philosophy to AI

Understanding and applying John Rawls’ “veil of ignorance” is crucial for anyone building AI systems, particularly for workplace applications. In AI development, it means constructing algorithms and datasets that are as free as possible of the developers’ own biases. The perspective asks developers to consider a system’s impact without assuming they will be among those it favors, which encourages systems that uphold equity and protect marginalized groups. Asking how AI decisions affect people regardless of their social or economic standing sets a new ethical standard that can reshape the development process and help AI systems advance social justice rather than undermine it.
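
The veil of ignorance can be turned into a simple, testable property: a candidate’s score should not change when only their protected attribute changes. The Python sketch below is illustrative only; it assumes a hypothetical `Candidate` record, a hypothetical `score_candidate` model, and a `group` field standing in for a protected attribute, none of which come from a specific system. It flags every candidate whose score moves when their group is swapped.

```python
from dataclasses import dataclass, replace
from typing import Callable, Iterable, List


@dataclass(frozen=True)
class Candidate:
    # Hypothetical applicant record; "group" stands in for a protected attribute.
    years_experience: int
    skills_matched: int
    group: str


def score_candidate(c: Candidate) -> float:
    # Hypothetical scoring rule that ignores c.group entirely.
    return 0.6 * c.years_experience + 1.0 * c.skills_matched


def veil_violations(
    model: Callable[[Candidate], float],
    candidates: Iterable[Candidate],
    groups: List[str],
    tol: float = 1e-9,
) -> List[Candidate]:
    """Return candidates whose score changes when only their group is swapped."""
    flagged = []
    for c in candidates:
        base = model(c)
        for g in groups:
            if g == c.group:
                continue
            # Same candidate, different group: behind the veil, the score should not move.
            if abs(model(replace(c, group=g)) - base) > tol:
                flagged.append(c)
                break
    return flagged


if __name__ == "__main__":
    pool = [Candidate(5, 3, "A"), Candidate(2, 4, "B")]
    print(veil_violations(score_candidate, pool, ["A", "B"]))  # → []

    # A deliberately biased variant that adds a bonus for group "A".
    biased = lambda c: score_candidate(c) + (1.0 if c.group == "A" else 0.0)
    print(len(veil_violations(biased, pool, ["A", "B"])))  # → 2
```

Passing this check only shows that the model does not use the attribute directly. Proxies such as postal code or school name can still encode group membership, so the check complements, rather than replaces, auditing the outcomes a system actually produces.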

Translating Rawls’ ideals into AI requires a shift in how developers, policymakers, and other stakeholders approach technology. Because the approach is rooted in impartiality, it demands thorough scrutiny of AI systems for bias and for outcomes that disadvantage particular groups. That scrutiny helps ensure that decisions derived from AI models reflect fair practices, supporting the more just workplace dynamics on which organizational integrity and societal trust depend.

Challenges in AI Bias and Historical Data

AI systems rely heavily on historical data to learn and make decisions, and that dependency can carry the biases embedded in past records into new decisions. Historical data often reflects societal prejudice and patterns of discrimination; when it is used to train AI systems, those systems can perpetuate or even amplify existing inequities. Hiring is a prominent example: a system trained on biased records may favor candidates from certain demographic groups simply because those groups were favored in the past. Recognizing and addressing these biases is therefore essential if AI development is to prioritize fairness rather than replicate the inequities in its training data.

To counteract these problems, developers must exercise rigorous ethical oversight and scrutinize the data that informs their systems. In practice, applying Rawls’ concept means auditing AI systems and evaluating them continually to detect and reduce bias. By confronting historical bias directly, developers can design systems that not only mitigate past disparities but also produce fairer outcomes, making AI tools a force for inclusivity and equity in decision-making.
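
One published way to counteract biased historical labels is reweighing, a pre-processing technique due to Kamiran and Calders: each training example receives the weight P(group) × P(label) / P(group, label), so that in the weighted data the outcome is statistically independent of group membership. The Python sketch below illustrates the idea under stated assumptions and is not the method of any particular product; the `(group, hired)` record format, the groups "A" and "B", and the counts are all hypothetical.

```python
from collections import Counter
from typing import Dict, List, Optional, Tuple

# Hypothetical historical record: (group, was_hired).
Record = Tuple[str, bool]


def reweigh(records: List[Record]) -> Dict[Record, float]:
    """Weight each (group, label) cell by P(group) * P(label) / P(group, label)."""
    n = len(records)
    group_counts = Counter(g for g, _ in records)
    label_counts = Counter(y for _, y in records)
    joint_counts = Counter(records)
    return {
        (g, y): group_counts[g] * label_counts[y] / (n * joint_counts[(g, y)])
        for (g, y) in joint_counts
    }


def hire_rate(
    records: List[Record], group: str, weights: Optional[Dict[Record, float]] = None
) -> float:
    """Fraction of a group's records labeled as hired (weighted if weights are given)."""
    w = weights or {}
    in_group = [r for r in records if r[0] == group]
    total = sum(w.get(r, 1.0) for r in in_group)
    hired = sum(w.get(r, 1.0) for r in in_group if r[1])
    return hired / total


if __name__ == "__main__":
    # Illustrative data only: group A was hired at 30%, group B at 10%.
    history = [("A", True)] * 30 + [("A", False)] * 70 + [("B", True)] * 10 + [("B", False)] * 90
    weights = reweigh(history)
    print(hire_rate(history, "A"), hire_rate(history, "B"))  # → 0.3 0.1
    # After reweighing, both groups sit at the overall hire rate of 20%.
    print(round(hire_rate(history, "A", weights), 3), round(hire_rate(history, "B", weights), 3))  # → 0.2 0.2
```

The weights can then be passed to any training routine that accepts per-example weights. Reweighing only equalizes outcome rates across groups; it does not repair labels that are wrong for individuals, and choosing it is itself a judgment about what fairness should mean.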

Bridging the Gap Between AI Promise and Reality

Despite AI’s significant capacity to improve efficiency, aligning it with principles of fairness remains difficult. Unless they are designed with an explicit focus on fairness, AI systems tend to reflect societal inequalities rather than correct them. Closing the gap between AI’s potential and its real-world impact requires a deliberate commitment to equity from the development phase onward. By embedding Rawlsian fairness into AI, developers can align technological progress with broader societal goals of justice and equality.
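
Rawls’ difference principle favors arrangements that benefit the least advantaged, and one common way to carry it into model development is maximin selection: among candidate models, prefer the one whose worst-performing group does best. The Python sketch below contrasts that rule with choosing by population-weighted average accuracy. The model names, groups, group sizes, and accuracy figures are hypothetical, chosen only to show that the two rules can disagree.

```python
from typing import Dict

# Per-model, per-group accuracy. All names and numbers are hypothetical.
Scores = Dict[str, Dict[str, float]]


def rawlsian_choice(scores: Scores) -> str:
    """Maximin: pick the model whose lowest per-group score is highest."""
    return max(scores, key=lambda m: min(scores[m].values()))


def utilitarian_choice(scores: Scores, group_sizes: Dict[str, int]) -> str:
    """Pick the model with the highest population-weighted average score."""
    total = sum(group_sizes.values())

    def weighted_mean(model: str) -> float:
        return sum(group_sizes[g] * s for g, s in scores[model].items()) / total

    return max(scores, key=weighted_mean)


if __name__ == "__main__":
    scores = {
        "model_x": {"A": 0.95, "B": 0.70},  # strong for the majority, weak for B
        "model_y": {"A": 0.88, "B": 0.84},  # more even across groups
    }
    sizes = {"A": 800, "B": 200}
    print(utilitarian_choice(scores, sizes))  # → model_x (weighted mean 0.90 vs 0.872)
    print(rawlsian_choice(scores))            # → model_y (worst group 0.84 vs 0.70)
```

Maximin is a deliberate trade-off: in this example it gives up some average accuracy to raise the floor for the smaller group, which is exactly the kind of choice the veil of ignorance asks developers to make consciously rather than by default.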

Aligning AI with fairness principles involves not only technical adjustments but also careful consideration of the ethical implications of AI-driven decisions. Data scientists and developers should give every stage of development conscientious attention, so that systems are protected as far as possible against bias and unfair practices. Although this work is complex, it can change AI’s role from perpetuating societal biases to helping overcome those very disparities.

Case Study: AI in Hiring Practices

The use of AI in hiring offers a valuable case study for examining bias and for testing how Rawlsian principles work in practice. AI-driven tools such as resume screeners and video interview analyzers streamline hiring but can inadvertently perpetuate bias if they are not carefully managed. A system trained on historical hiring data may favor candidates whose backgrounds resemble those of people hired in the past, and so overlook diverse candidates by default.

Addressing bias in AI-driven hiring requires proactive strategies for ensuring fairness across diverse applicant pools. Developers and decision-makers must monitor these systems closely for bias and intervene promptly when they detect unfair outcomes. Companies that build systems capable of evaluating candidates equitably can move toward balanced, representative workplaces, enhancing innovation and inclusivity. Careful deployment of AI in hiring also meets societal expectations of corporate responsibility, keeping the process as fair and impartial as it is efficient.
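
Monitoring for bias needs a concrete measure. One widely used screen in US employment contexts is the “four-fifths rule” from the Uniform Guidelines on Employee Selection Procedures: a group’s selection rate below 80% of the rate for the most-selected group is generally regarded as evidence of adverse impact worth investigating. The Python sketch below computes these impact ratios from a log of screening decisions; the `(group, advanced)` record format and the sample data are assumptions for illustration.

```python
from collections import Counter
from typing import Dict, Iterable, Tuple

# Hypothetical decision log entry: (group, advanced_by_screener).
Decision = Tuple[str, bool]


def selection_rates(decisions: Iterable[Decision]) -> Dict[str, float]:
    """Fraction of applicants in each group that the screener advanced."""
    applied: Counter = Counter()
    selected: Counter = Counter()
    for group, advanced in decisions:
        applied[group] += 1
        if advanced:
            selected[group] += 1
    return {g: selected[g] / applied[g] for g in applied}


def adverse_impact_flags(
    decisions: Iterable[Decision], threshold: float = 0.8
) -> Dict[str, float]:
    """Return {group: impact_ratio} for groups below threshold x the top group's rate."""
    rates = selection_rates(decisions)
    best = max(rates.values(), default=0.0)
    if best == 0.0:
        return {}  # nobody was selected, so no ratio is defined
    return {g: r / best for g, r in rates.items() if r / best < threshold}


if __name__ == "__main__":
    # Illustrative log only: 50% of group A advanced, 30% of group B.
    log = [("A", True)] * 50 + [("A", False)] * 50 + [("B", True)] * 30 + [("B", False)] * 70
    print(selection_rates(log))       # → {'A': 0.5, 'B': 0.3}
    print(adverse_impact_flags(log))  # → {'B': 0.6}
```

The four-fifths rule is a rule of thumb, not a legal determination; with small applicant pools, a ratio can cross the threshold by chance, so a flagged group is a prompt for investigation and statistical testing rather than a verdict.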

Competitive Advantage Through Fair AI

Applying Rawls’ “veil of ignorance” to workplace AI is not only an ethical commitment; it can also be a source of competitive advantage. Organizations that scrutinize their systems for bias and correct what they find are better placed to earn the trust of employees, applicants, and the public, and to protect their own integrity. Hiring and promotion tools that evaluate people equitably help build balanced, representative workforces, which can enhance innovation and inclusivity, and they meet societal expectations of corporate responsibility. In this sense, the impartiality that Rawls’ thought experiment demands and the efficiency that motivates AI adoption need not be in tension.