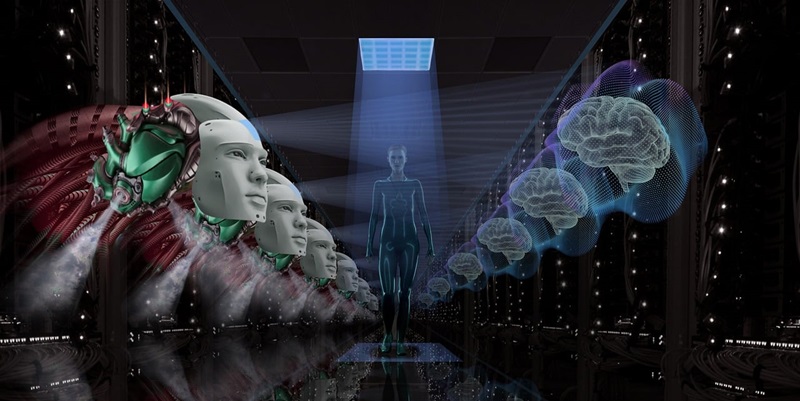

Artificial Intelligence (AI) has become a central topic of discussion among experts and the general public alike, given its profound influence on society. Industry professionals such as Sorin Anagnoste highlight the shift from earlier computing technologies to the current era of generative AI. Generative AI, exemplified by models like OpenAI’s ChatGPT, opens up considerable creative potential, but unlike traditional AI, which is known for its high precision, it carries inherent margins of error that call for caution and validation of its output.

The Economic Impact of AI

Historical Advancements and Modern AI

Historically, advances in computing technologies sharply reduced the costs of computing power and distribution, paving the way for a digital revolution. As these costs plummeted, new possibilities for innovation and productivity emerged, fostering an era of rapid technological development. Modern AI continues this trend by driving the cost of creative and productive work toward zero. This economic transformation is not limited to digital spaces; through robotics, it is also poised to reach the physical world.

Generative AI, while enhancing creative processes across industries, particularly in content creation, design, and analytics, requires a nuanced approach due to its predictive nature and potential for inaccuracies. As AI permeates various sectors, ensuring the accuracy and ethical deployment of these technologies remains critical. Predictions indicate that similar transformations are on the horizon for robotics, promising to revolutionize industrial work, household chores, and even gardening tasks. The automation of these activities could lead to unprecedented efficiency and productivity, fundamentally altering how individuals and businesses operate daily.

Economic Transformation and Productivity

As AI technologies advance, their integration into various domains could disrupt traditional business models and foster new economic landscapes. AI-driven tools have the potential to optimize supply chains, enhance customer experiences, and streamline operations across a multitude of sectors. By minimizing mundane and repetitive tasks, AI allows human workers to focus on more complex and creative aspects of their roles, potentially boosting overall productivity and job satisfaction.

However, this economic transformation is not without challenges. The rapid adoption of AI can lead to job displacement, prompting concerns about the future of employment. While some industries may see a decline in traditional roles, there is potential for new jobs to emerge that leverage uniquely human skills such as critical thinking, empathy, and creativity. To navigate this transition effectively, continuous upskilling and reskilling of the workforce will be essential. Organizations and educational institutions must collaborate to ensure that employees are equipped with the necessary skills to thrive in an AI-driven economy.

Societal Implications of AI

Employment and Ethical Issues

The societal implications of AI extend far beyond economics, touching on critical matters such as employment and ethics. The integration of AI systems into workplaces is a double-edged sword: it promises increased efficiency and innovation while raising concerns about job displacement. The potential for mass unemployment due to automation is a pressing issue that requires careful consideration and proactive measures to mitigate its impact. Moreover, AI must be deployed ethically, so that these technologies are used responsibly and do not perpetuate biases or discrimination.

Ensuring data privacy and preventing potential discrimination are essential components of ethical AI deployment. Regulatory frameworks need to be established and enforced to safeguard individuals’ rights and foster trust in AI systems. Ethical considerations also extend to the accountability and transparency of AI systems, as decisions made by these technologies can significantly impact people’s lives. It is crucial to strike a balance between leveraging AI’s capabilities and maintaining ethical standards to ensure equitable outcomes for all members of society.

The Need for Regulatory Frameworks

To address the ethical challenges posed by AI, regulatory frameworks must provide guidelines and standards for the development and deployment of these technologies. Such frameworks should prioritize transparency, accountability, and fairness, ensuring that AI systems operate in a manner that aligns with societal values.

The concentration of power among a few tech giants in the AI sector also raises concerns about monopolistic practices and unequal access to advanced technologies. Fostering diversity and competition within the industry helps prevent these dynamics: in a competitive landscape, innovation can thrive and the benefits of AI can be more broadly distributed. Collaboration between governments, industry leaders, and academic institutions can help create a balanced and inclusive AI ecosystem that serves the interests of all stakeholders.

Advantages and Disadvantages of AI

Enhanced Efficiency and Decision-Making

One of the most significant advantages of AI lies in its ability to enhance efficiency and improve decision-making processes. AI technologies excel in handling large volumes of data, identifying patterns, and making data-driven predictions, which can significantly aid decision-makers in various fields. In healthcare, for instance, AI can assist in diagnosing diseases, personalizing treatment plans, and predicting patient outcomes. In finance, AI-driven algorithms can detect fraudulent activities, optimize investment strategies, and manage risks. The potential applications of AI in transportation, environmental sustainability, and other critical sectors are equally transformative.

Despite these advantages, AI systems also have notable disadvantages. Gaps in accountability and transparency in AI decision-making can lead to mistrust and hesitation in adopting these technologies. The opacity of certain AI models, often referred to as "black box" systems, makes it hard to understand how specific decisions are reached. This is especially problematic when AI systems make decisions that significantly affect individuals’ lives, such as in judicial and hiring processes. Ensuring explainability and accountability in AI systems is essential for building trust and promoting widespread adoption.

Concerns About Human Empathy

Generative AI, epitomized by models such as OpenAI’s ChatGPT, can simulate human-like responses and create original content with remarkable efficiency, changing fields from content creation to problem-solving. However, it is not without its challenges. Unlike traditional AI, known for its precision, generative AI carries inherent margins of error: its outputs are not foolproof and can contain inaccuracies. Consequently, deploying generative AI requires a balanced approach that combines its innovative strengths with careful validation and rigorous oversight to mitigate potential risks and ensure reliability.