The era of unchecked complexity and rapid tool adoption in data engineering is drawing to a decisive close, giving way to an urgent, industry-wide mandate for discipline, reliability, and sustainability. For years, the field prioritized novelty over stability, leading to a landscape littered with brittle pipelines and sprawling, disconnected technologies. Now, as businesses become critically dependent on data for core operations, the hidden costs of this approach are coming into sharp focus.

From Digital Duct Tape to Disciplined Design: Setting the Stage for Data Engineering’s Next Chapter

The journey from the chaotic “tool sprawl” of the past decade has been a costly one. Many organizations now grapple with the consequences of ad-hoc development: mounting technical debt that slows innovation, operational fragility that threatens business continuity, and a constant stream of data quality failures that erode trust. The pressure to move beyond this reactive state is immense, pushing the entire discipline toward a more mature and intentional methodology.

This evolution is not about adopting the next shiny tool but about implementing fundamental shifts in thinking and practice. A consensus is forming around a future defined by platform-centric design, embedded operational intelligence, and a culture of accountability that extends from data quality to fiscal responsibility. These principles represent the bedrock upon which the next generation of resilient and value-driven data ecosystems will be built, transforming data engineering from a technical support function into a strategic pillar of the modern enterprise.

Navigating the Tectonic Shifts in Data Infrastructure and Operations

The path to maturity requires more than incremental improvements; it demands foundational changes in how data systems are architected, managed, and governed. The following trends represent the most significant shifts, collectively pointing toward a future where data infrastructure is treated with the same rigor and discipline as customer-facing software products.

From Ad-Hoc Pipelines to Internal Products: The Rise of Platform-Owned Infrastructure

A decisive move away from decentralized and often ownerless data stacks is underway. The common practice of allowing individual teams to assemble their own infrastructure from a catalog of tools has frequently resulted in fragile, poorly documented systems with no clear line of ownership. This creates significant maintenance burdens and operational risks that are no longer tenable as data becomes more central to business operations.

The emerging paradigm is the consolidation of data infrastructure under a dedicated internal platform team. This team treats the organization’s data capabilities as a first-class internal product, with other internal teams as its customers. By providing standardized, centrally maintained building blocks for ingestion, transformation, and deployment, this model establishes clear service-level expectations (SLEs) and plans meticulously for failure. Consequently, the role of the data engineer evolves from low-level “plumbing” and firefighting to high-value activities like sophisticated data modeling and ensuring business-critical insights are reliable.

Implementing this platform-centric model is not merely a technical change; it requires a significant cultural shift. It demands that organizations invest in a product mindset for internal services and foster collaboration between platform teams and their internal consumers. Success depends on treating the data platform with the same seriousness as external products, complete with roadmaps, support channels, and a focus on user experience.

Beyond the Batch Window: Why Event-Driven Architectures Are Becoming the New Default

While batch processing will retain its place for certain workloads, its dominance as the default architectural choice is being challenged by event-driven patterns. This trend is fueled by escalating business demands for data freshness and greater system responsiveness. Historically, the high operational overhead of streaming systems was a major barrier, but advancements in managed services and a mature tooling ecosystem have made real-time processing more accessible and operationally viable for a wider range of organizations.

In this paradigm, data pipelines are designed around a continuous flow of events rather than fixed schedules, enabling data to be processed and enriched “in motion” and made available to consumers almost instantly.

Mature event-driven systems exhibit several key architectural traits. They enforce rigorous schema discipline at the point of ingestion to prevent poor-quality data from corrupting the ecosystem. They also maintain a clear separation between data transport and processing, which improves modularity and resilience. Furthermore, these modern architectures are designed with built-in capabilities for data replay and recovery. This allows historical events to be reprocessed to regenerate state or backfill new systems, making failure recovery a predictable and reliable process. This conceptual shift requires engineers to think in terms of continuous flows, making concepts like schema evolution and idempotency first-class design considerations.
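The traits described above (schema checks at ingestion, transport kept separate from processing, replay, and idempotency) can be illustrated with a minimal in-memory sketch. Everything here is hypothetical and chosen for illustration: the `ORDER_SCHEMA` fields, the `EventLog` and `RevenueProjection` classes, and the `replay` helper are assumptions, not part of any particular streaming product. A real system would use a durable log and a schema registry rather than Python lists.

```python
from dataclasses import dataclass, field

# Hypothetical event schema: field name -> required Python type.
ORDER_SCHEMA = {"event_id": str, "order_id": str, "amount_cents": int}


def validate(event: dict, schema: dict) -> bool:
    """Return True only if the event has exactly the schema's fields, each with the expected type."""
    if set(event) != set(schema):
        return False
    return all(isinstance(event[name], typ) for name, typ in schema.items())


@dataclass
class EventLog:
    """Transport layer: an append-only log that stores only validated events."""
    events: list = field(default_factory=list)
    rejected: list = field(default_factory=list)

    def append(self, event: dict) -> bool:
        if validate(event, ORDER_SCHEMA):
            self.events.append(event)
            return True
        self.rejected.append(event)  # stand-in for a dead-letter queue
        return False


@dataclass
class RevenueProjection:
    """Processing layer: derives state from events and knows nothing about the log."""
    total_cents: int = 0
    seen_ids: set = field(default_factory=set)

    def apply(self, event: dict) -> None:
        # Idempotency: a redelivered event must not change the state twice.
        if event["event_id"] in self.seen_ids:
            return
        self.seen_ids.add(event["event_id"])
        self.total_cents += event["amount_cents"]


def replay(log: EventLog) -> RevenueProjection:
    """Rebuild state from scratch by reprocessing the full event history."""
    projection = RevenueProjection()
    for event in log.events:
        projection.apply(event)
    return projection


log = EventLog()
log.append({"event_id": "e1", "order_id": "o1", "amount_cents": 1200})
log.append({"event_id": "e1", "order_id": "o1", "amount_cents": 1200})    # duplicate delivery
log.append({"event_id": "e2", "order_id": "o2", "amount_cents": "oops"})  # rejected at ingestion
print(replay(log).total_cents)  # 1200
```

Because the projection ignores event IDs it has already seen, replaying the log after a failure, or delivering the same event twice, produces the same total. That property is what makes recovery predictable.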

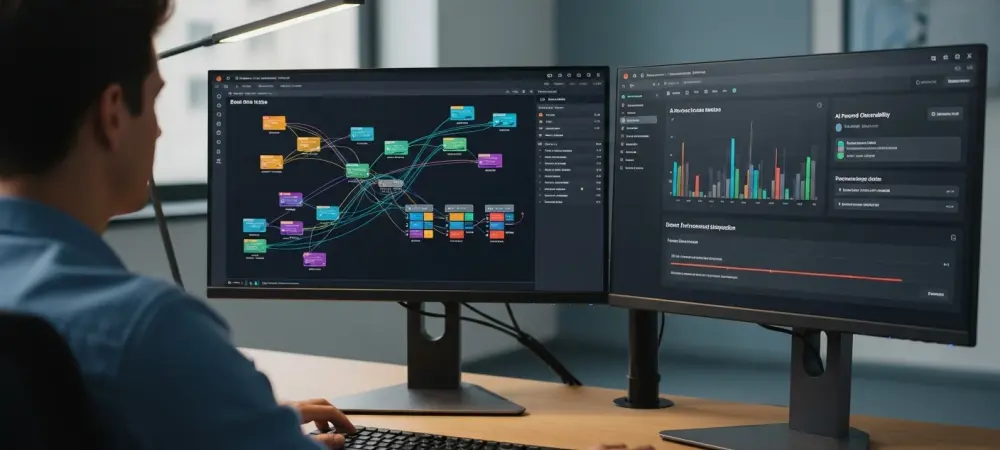

From Code Completion to Cognitive Partner: The Operationalization of AI in Data Systems

Artificial intelligence is evolving from a helpful developer aid into an embedded operational partner for managing complex data platforms. While AI-powered code completion has improved developer productivity, its most profound impact is likely to be in monitoring, debugging, and optimizing data systems in real time.

Modern platforms generate a massive volume of operational metadata, or “observability exhaust,” from query logs, data lineage graphs, and resource usage patterns. AI models can analyze this data at a scale and speed that human engineers cannot match. Early applications are already demonstrating value by automatically surfacing performance regressions, detecting anomalous data patterns that signal quality issues, and proactively recommending optimizations like new indexes or more efficient partitioning strategies. This allows engineering teams to shift from reactively tracing failures to making informed, proactive decisions.
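To make the idea of surfacing regressions from operational metadata concrete, here is a deliberately simple sketch. It is a plain statistical check (a modified z-score based on the median absolute deviation), used as a stand-in for the learned models the text describes; it is not an AI model. The function name `flag_anomalies`, the sample durations, and the threshold of 3.5 are illustrative assumptions.

```python
from statistics import median


def flag_anomalies(durations_ms, threshold=3.5):
    """Return the indices of values whose modified z-score exceeds the threshold.

    The median and the median absolute deviation (MAD) are used instead of the
    mean and standard deviation so that one extreme value does not hide itself
    by inflating the baseline.
    """
    med = median(durations_ms)
    mad = median([abs(d - med) for d in durations_ms])
    if mad == 0:
        return []  # no spread to compare against; nothing can be flagged
    return [
        i for i, d in enumerate(durations_ms)
        if 0.6745 * abs(d - med) / mad > threshold
    ]


# Hypothetical runtimes of the same query across recent runs, in milliseconds.
runs = [100, 102, 98, 101, 99, 103, 97, 450]
print(flag_anomalies(runs))  # [7]
```

A production system would draw on far richer signals, such as lineage and resource usage, but the workflow is the same: establish a baseline from the observability data and flag the runs that depart from it.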

This trend challenges the notion that AI will replace engineers. Instead, the consensus is that it will augment their capabilities, acting as a force multiplier. By transforming raw observability data into actionable intelligence, AI empowers smaller teams to manage increasingly complex systems while meeting rising expectations for performance and reliability, freeing up valuable engineering time for more strategic work.

The New Accountability: Enforcing Quality with Data Contracts and Sustainability with Cost-Aware Design

In response to the high cost of data quality failures, the practice of implementing enforceable data contracts is becoming a mainstream reality. A data contract is a formal agreement between a data producer and its consumers that defines the schema, freshness, and semantic meaning of a dataset. The core principle is “shifting left,” where these contracts are integrated and enforced early in the development lifecycle, preventing bad data from ever reaching consumers.

Running parallel to this push for data quality is the re-emergence of cost-aware engineering. After an era of prioritizing growth over efficiency, financial discipline is becoming a first-class architectural concern. Engineers are now expected to design and operate systems with a keen awareness of their financial impact, from deliberately managing storage tiers to actively optimizing pipelines to reduce compute consumption. Improved tooling for granular cost attribution is making this possible, transforming abstract conversations about efficiency into concrete, data-driven decisions.
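A data contract of the kind defined above, covering schema, freshness, and semantic meaning, might be sketched as follows. This is a minimal illustration under stated assumptions, not a standard format: the `DataContract` class, the `check_contract` function, the column names, and the one-hour freshness window are all hypothetical.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta, timezone


@dataclass(frozen=True)
class DataContract:
    """Hypothetical contract: schema, freshness, and simple semantic rules."""
    columns: dict                               # column name -> expected Python type
    max_staleness: timedelta                    # freshness guarantee
    rules: dict = field(default_factory=dict)   # column name -> predicate on the value


def check_contract(contract, rows, last_updated, now):
    """Return a list of readable violations; an empty list means the batch passes."""
    violations = []
    if now - last_updated > contract.max_staleness:
        violations.append("stale: last updated " + last_updated.isoformat())
    for i, row in enumerate(rows):
        for name, expected in contract.columns.items():
            if name not in row:
                violations.append(f"row {i}: missing column {name!r}")
            elif not isinstance(row[name], expected):
                violations.append(f"row {i}: {name!r} is not {expected.__name__}")
            elif name in contract.rules and not contract.rules[name](row[name]):
                violations.append(f"row {i}: {name!r} fails semantic rule")
    return violations


contract = DataContract(
    columns={"order_id": str, "amount_cents": int},
    max_staleness=timedelta(hours=1),
    rules={"amount_cents": lambda v: v >= 0},  # semantic rule: amounts are never negative
)
now = datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc)
rows = [
    {"order_id": "o1", "amount_cents": 500},
    {"order_id": "o2", "amount_cents": -5},
]
violations = check_contract(contract, rows, last_updated=now - timedelta(minutes=10), now=now)
print(violations)
```

Enforcing the contract early could be as simple as running a check like this in the producer's build or deployment pipeline and failing the run whenever the returned list is not empty, so that a violating batch never reaches consumers.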

Ultimately, these two themes converge to define true system maturity. A mature data engineering practice is one where both data trustworthiness and financial sustainability are treated as non-negotiable design principles. Building systems where quality and cost are proactively managed from the outset is the hallmark of an organization that is serious about creating long-term, scalable value from its data assets.

From Theory to Practice: Building a Future-Proof Data Organization

Synthesizing these trends reveals a cohesive vision of a mature data engineering practice: one that is platform-oriented, event-driven, AI-assisted, and fiscally responsible. Transitioning to this model requires deliberate and actionable steps. Organizations can begin by piloting an internal data platform for a single, high-impact use case, demonstrating its value in providing stability and reducing redundant work. Similarly, identifying a first business process that would benefit from real-time data, such as fraud detection, can serve as an ideal entry point for exploring event-driven architecture.

Introducing data contracts for a single critical dataset can establish a precedent for quality enforcement, while making cost dashboards visible to development teams can start building a culture of financial awareness. For leaders, the challenge is to champion these changes by fostering a culture of ownership and long-term thinking. This involves allocating resources for platform development, celebrating wins in reliability and efficiency, and reframing data engineering’s mission from simply fulfilling tickets to building a durable, strategic asset for the entire business.

The End of Adolescence: Data Engineering’s Strategic Ascent in the Modern Enterprise

Taken together, these converging trends reinforce the overarching conclusion that data engineering is evolving from a reactive, technical support function into a proactive and strategic business discipline. The industry’s maturation is defined not by the adoption of newer, more complex technologies but by a fundamental commitment to stability, accountability, and operational excellence. This shift marks the end of the field’s adolescence.

These maturation trends are not merely technical improvements but foundational requirements for any organization aiming to build a durable competitive advantage on data. The enterprises most likely to succeed are those that recognize this shift early and invest accordingly. The call to action for practitioners and leaders is to embrace this new era of discipline, moving beyond short-term fixes to build data ecosystems that can create reliable, sustainable value for years to come.