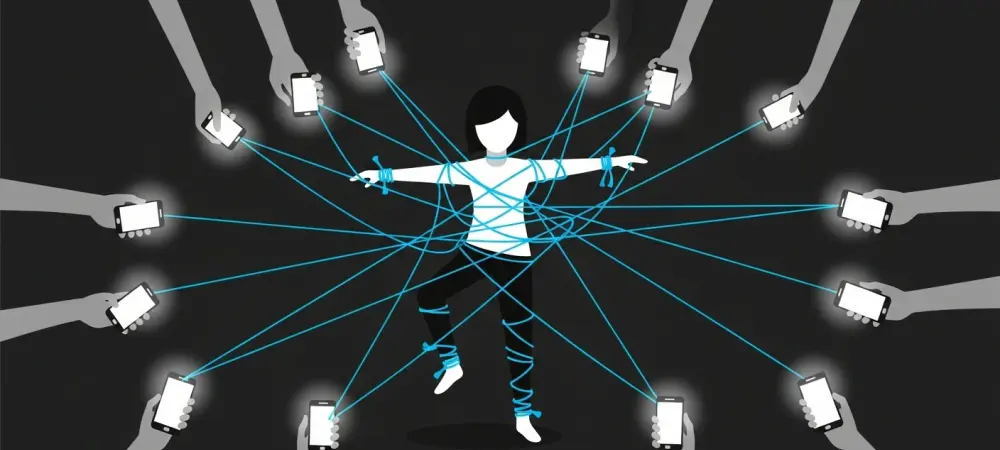

For women who are journalists, activists, and human rights defenders, the relentless stream of digital harassment is no longer a distant possibility but a daily reality, and it increasingly bleeds through the screen into the physical world. The digital realm, once seen as a space for open discourse, has become a direct pipeline for real-world danger, threatening not only professional careers but personal safety. This phenomenon is not anecdotal; it is a documented and rapidly worsening trend in which online animosity fuels tangible harm.

The Pervasive Nature of Digital Violence

A global survey reveals a stark reality: online violence is an occupational hazard for a staggering seven in ten women in public-facing roles. This statistic illustrates that for those who engage in public discourse, from reporting news to advocating for human rights, the digital landscape is fraught with hostility. The sheer volume and consistency of these attacks create an environment of constant threat, designed to intimidate, exhaust, and ultimately silence critical voices. This is more than isolated trolling; it is a systemic pattern of abuse.

The Disappearing Boundary Between Digital and Physical Worlds

The line separating virtual antagonism from physical intimidation is vanishing at an alarming rate. A recent United Nations investigation documents a “chilling escalation,” confirming that online threats are not empty words. More than four in ten women who experience digital violence report direct offline consequences, a figure that has more than doubled since 2020. The pattern is stark: what begins as a comment or a post can quickly become a direct menace to an individual’s physical well-being.

The Anatomy of an Attack: How Digital Aggression Turns Physical

The spillover from online platforms into the real world manifests in terrifying ways. Digital threats materialize as stalking, where perpetrators track victims’ movements based on online information. In-person verbal abuse and even physical assault often follow campaigns of targeted online harassment. These acts are the endgame of digital aggression, where the goal is to make a person feel unsafe in their own community and home, effectively pushing them out of public life.

Adding a potent new weapon to this arsenal is artificial intelligence. Nearly a quarter of women surveyed have faced AI-assisted violence, including fabricated deepfake videos and manipulated audio content. For highly visible figures like social media influencers and public communicators, this number climbs to 30%. AI dramatically lowers the barrier to creating and disseminating defamatory material, making it cheaper and faster than ever to orchestrate large-scale campaigns aimed at shaming and discrediting women.

Key Findings from a Landmark Investigation

The central conclusion from this investigation is that the digital environment has reached a critical “tipping point.” This concept suggests that society has crossed a threshold where online platforms pose a direct and growing threat to women’s participation in democracy and public discourse. The data demonstrates a clear, documented, and escalating trend of online hostility translating into tangible, physical danger, creating a chilling effect on free expression.

Furthermore, experts agree that artificial intelligence tools are not neutral technologies in this context. They are being actively and deliberately weaponized to amplify and automate abusive campaigns. By enabling the mass production of highly personalized and convincing disinformation, AI provides aggressors with an unprecedented ability to intimidate and silence their targets, overwhelming their ability to respond and eroding public trust.

A Call for Systemic Accountability

In response to this crisis, a primary recommendation is for technology firms to take immediate and decisive action. There is an urgent call for these companies to develop and deploy far more effective tools for identifying, monitoring, and reporting AI-assisted violence. The responsibility is being placed squarely on the platforms that host and profit from the digital infrastructure where these attacks proliferate.

Beyond internal policy changes, the investigation urges the creation of new legal and regulatory frameworks with genuine enforcement power. The objective is to compel tech companies to prevent their platforms from being used as weapons against women and to hold them accountable for the dangerous environments they facilitate. This represents a fundamental shift toward treating digital safety not as a feature, but as a core operational requirement.

The dialogue surrounding digital safety has definitively shifted. The focus has moved beyond blaming individual perpetrators to recognizing the systemic role that platform architecture and corporate policy play in enabling large-scale harassment. Without robust regulatory oversight and a fundamental re-engineering of digital spaces, the pipeline from online clicks to physical threats will remain wide open. A structural problem demands structural solutions.