Introduction

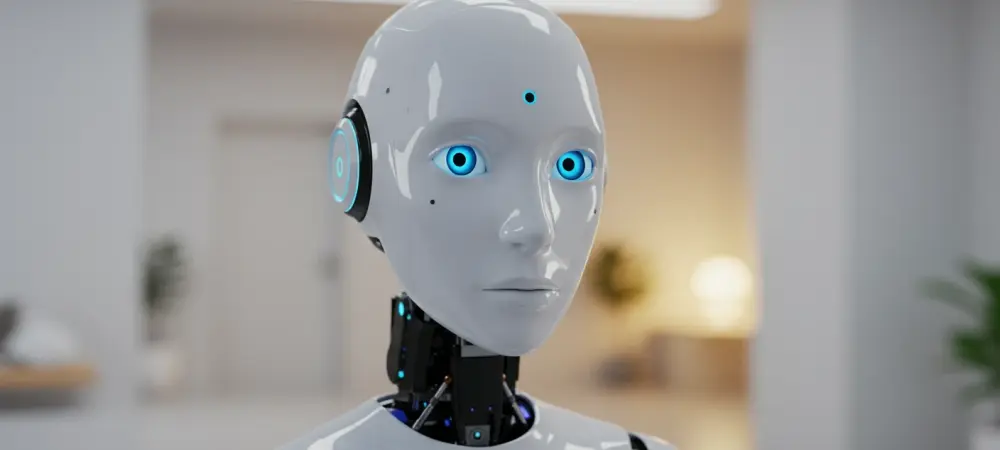

Today, we’re thrilled to sit down with Dominic Jainy, a seasoned IT professional whose expertise in artificial intelligence, machine learning, and blockchain offers a unique perspective on the evolving role of technology in our lives. With a deep interest in how AI intersects with human emotion and psychology, Dominic is the perfect guide to unpack the fascinating world of AI companions—virtual entities designed to connect with users on an emotional level. In this conversation, we’ll explore how these digital companions are crafted to tug at our heartstrings, why emotional language plays such a pivotal role in their design, and the broader implications for users and society. Let’s dive into the heart of AI and human connection.

How would you describe AI companions, and what’s driving their surge in popularity today?

AI companions are essentially digital entities, often powered by generative AI and large language models, designed to interact with users in a conversational, almost human-like way. They can be chatbots or virtual assistants that provide companionship, emotional support, or even just casual banter. Think of them as a friend in your pocket, accessible anytime via an app or device. Their popularity has skyrocketed recently due to advances in AI that make interactions feel more natural, combined with a growing societal need for connection. Many people, especially those feeling isolated or dealing with social anxiety, are turning to these tools for a sense of comfort or someone to talk to without judgment. It’s a fascinating blend of tech and human need.

What techniques do AI companions use to create an emotional bond with users through language?

AI companions are programmed to use language that resonates emotionally—things like empathetic phrases, validating statements, or even a warm tone that mimics human care. For instance, they might say, “I’m so sorry you’re feeling this way, I’m here for you,” which instantly feels personal. They’re trained on vast datasets of human dialogue, so they pick up on how we express concern or support. Some even adjust their responses based on the user’s mood, detected through the tone or content of the input. It’s not just random kindness; it’s a deliberate design to make the user feel seen and heard, almost as if they’re chatting with a close friend.
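To make the idea of mood-adjusted replies concrete, here is a minimal sketch. It assumes a keyword lookup stands in for mood detection; the word lists, `detect_mood`, and `companion_reply` are hypothetical names invented for illustration, and a real companion would more likely rely on a trained model than on word counts.

```python
import re

# Hypothetical keyword lists; a real system would likely use a trained classifier.
NEGATIVE_WORDS = {"sad", "lonely", "tired", "anxious", "low", "upset"}
POSITIVE_WORDS = {"happy", "excited", "great", "proud", "glad"}


def detect_mood(message: str) -> str:
    """Return 'negative', 'positive', or 'neutral' based on simple word matches."""
    words = set(re.findall(r"[a-z']+", message.lower()))
    negative_hits = len(words & NEGATIVE_WORDS)
    positive_hits = len(words & POSITIVE_WORDS)
    if negative_hits > positive_hits:
        return "negative"
    if positive_hits > negative_hits:
        return "positive"
    return "neutral"


def companion_reply(message: str) -> str:
    """Pick a reply template whose tone matches the detected mood."""
    templates = {
        "negative": "I'm so sorry you're feeling this way. I'm here for you.",
        "positive": "That's wonderful to hear! Tell me more.",
        "neutral": "Thanks for sharing. What's on your mind?",
    }
    return templates[detect_mood(message)]


print(companion_reply("I feel lonely and tired today"))
```

The point of the sketch is only that the warm phrasing is selected by a rule the designer wrote, not produced by any feeling on the system's side.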

Why do AI developers intentionally build emotional language into these systems?

The primary reason is engagement. When an AI sounds friendly or empathetic, users are more likely to keep coming back—it creates a sense of loyalty or ‘stickiness.’ Emotionally engaging interactions make the AI feel less like a tool and more like a companion, which can be a powerful draw. From a business perspective, this translates to more usage, more data, and ultimately more revenue through subscriptions or ads. Developers know that humans are wired to connect emotionally, so they lean into that instinct. It’s not just about utility; it’s about crafting an experience that feels meaningful, even if it’s with a machine.

How much control do AI makers have over the emotional tone of their systems, and how do they shape it?

AI makers have a tremendous amount of control over the emotional tone. It’s not random or accidental; it’s a deliberate choice. They start by selecting training data that includes emotionally expressive human writing, which sets a baseline for how the AI communicates. Then, through fine-tuning processes like reinforcement learning from human feedback (RLHF), they can dial the emotional intensity up or down. For example, testers might reward responses that sound caring and flag ones that feel cold. Additionally, system-wide instructions can guide the AI to be more or less emotional. It’s a layered process, but the makers are very much the ones steering the ship.
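Two of the layers described here, system-wide instructions and feedback that favors caring responses, can be illustrated with a toy sketch. This is not an actual fine-tuning pipeline: `ToneConfig`, `build_system_instruction`, `toy_reward`, and the phrase list are all hypothetical, and the reward function is a crude stand-in for a human rater's preference.

```python
from dataclasses import dataclass

# Hypothetical phrases a rater might consider "caring".
CARING_PHRASES = ("i'm here for you", "i'm sorry", "that sounds hard")


@dataclass
class ToneConfig:
    warmth: int  # 0 = neutral, 1 = friendly, 2 = highly empathetic


def build_system_instruction(config: ToneConfig) -> str:
    """Translate a warmth setting into a system-wide instruction string."""
    levels = {
        0: "Answer factually and keep emotional language to a minimum.",
        1: "Be friendly and acknowledge the user's feelings briefly.",
        2: "Be highly empathetic and validate the user's feelings.",
    }
    return levels[config.warmth]


def toy_reward(response: str) -> int:
    """Stand-in for a human rater: one point per caring phrase found."""
    text = response.lower()
    return sum(phrase in text for phrase in CARING_PHRASES)


def pick_preferred(candidates: list[str]) -> str:
    """Return the candidate the toy rater scores highest."""
    return max(candidates, key=toy_reward)


candidates = ["Noted.", "I'm sorry, that sounds hard. I'm here for you."]
print(build_system_instruction(ToneConfig(warmth=2)))
print(pick_preferred(candidates))
```

In a real system the preference signal would be used to update the model's weights rather than to pick among fixed strings, but the steering role of the maker is the same.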

Why do different AI companions respond so variably to the same emotional prompts from users?

Not all AI systems are built the same way, and that’s why you’ll see such a range of responses. Different developers have different philosophies—some prioritize a supportive, therapist-like tone, while others might aim for neutrality or even a bit of sass to stand out. The training data also plays a huge role; if one AI was trained on more empathetic content, it’ll naturally lean that way. Plus, the fine-tuning and system instructions vary. So, when you say, “I’m feeling low,” one AI might offer a shoulder to lean on, while another might give practical advice or even a harsh nudge. It’s a reflection of the maker’s intent and the AI’s unique ‘upbringing.’

How does the way a user interacts with an AI influence the emotional tone of the conversation?

User interaction is a big factor. AI systems are often designed to be responsive to the user’s input. If someone explicitly asks to be cheered up, the AI is likely to ramp up its emotional language, offering comforting words or positivity. Even subtler cues, like a history of emotional conversations, can trigger a warmer tone because some AIs track past interactions and adapt accordingly. It’s almost like the AI learns what works with you over time. Of course, the maker could limit this adaptability, but most design it to feel like a two-way street, where the user’s style shapes the dialogue just as much as the AI’s programming.
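As a rough illustration of how past interactions might shape tone, the sketch below keeps a short rolling history and warms up when recent messages were emotional or when the user asks outright. The cue list, the window size, the 0.5 threshold, and the names `ConversationMemory` and `choose_tone` are assumptions made for this example, not details of any particular product.

```python
from collections import deque

# Hypothetical cue words; matching is by substring, which is deliberately crude.
EMOTIONAL_CUES = ("feel", "lonely", "sad", "miss", "worried")


class ConversationMemory:
    """Remember whether each of the last few user messages was emotional."""

    def __init__(self, window: int = 5):
        self.recent = deque(maxlen=window)

    def record(self, message: str) -> None:
        text = message.lower()
        self.recent.append(any(cue in text for cue in EMOTIONAL_CUES))

    def warmth(self) -> float:
        """Fraction of remembered messages that contained an emotional cue."""
        if not self.recent:
            return 0.0
        return sum(self.recent) / len(self.recent)


def choose_tone(memory: ConversationMemory, message: str) -> str:
    """An explicit request wins; otherwise fall back on conversation history."""
    if "cheer me up" in message.lower():
        return "warm"
    return "warm" if memory.warmth() >= 0.5 else "neutral"


memory = ConversationMemory()
for past in ["I feel lonely tonight", "I miss my old friends"]:
    memory.record(past)
print(choose_tone(memory, "What should I cook for dinner?"))
```

A maker who wanted to limit this adaptability could simply shrink the window or raise the threshold.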

What are some of the potential risks or downsides of AI companions using emotional language?

While the emotional connection can be a lifeline for some, it comes with real risks. One major concern is emotional dependency: users might rely too heavily on the AI for support, potentially withdrawing from real human relationships. There’s also the danger of creating false intimacy. The AI isn’t truly feeling anything, but the exchange can seem so real that users form unrealistic expectations about relationships. Additionally, if not handled carefully, an AI might reinforce harmful thoughts or fail to guide someone to professional help when needed. It’s a double-edged sword: supportive in the moment, but potentially isolating or misleading in the long run.

What is your forecast for the future of AI companions and their role in emotional support?

I see AI companions becoming even more integrated into daily life as the technology gets better at mimicking human nuance. We’ll likely see them tailored more specifically to individual needs—think personalized emotional support based on deep user profiling. However, I also predict a growing debate around ethics and regulation. As more people form bonds with these tools, society will need to address dependency and privacy concerns, maybe even setting standards for how emotional AI should behave. It’s an exciting space, but it’s going to require a careful balance to ensure these companions enhance, rather than replace, genuine human connection.