In April 1974, Intel released the 8080 microprocessor. It was not the first microprocessor, but it is widely regarded as the first to function as a truly general-purpose processor. Its versatility let it handle a broad range of applications, from personal computing to industrial automation, and helped establish the microprocessor market. The chip laid the groundwork for modern computing and solidified Intel’s position as a global leader in microchip technology.

Building on Previous Innovations

The Intel 8080 was not an isolated innovation. It built on the successes and limitations of its predecessors, the Intel 4004 and the Intel 8008, both developed under the leadership of chip engineer Federico Faggin. Released in 1971, the 4004 was a groundbreaking four-bit processor intended primarily for calculators, and its limited computational power and memory capacity restricted what else it could do. Intel followed it in 1972 with the 8008, an eight-bit processor that offered greater potential but was still constrained in speed, ease of programming, and addressable memory. Those constraints pushed Intel to develop a more advanced microprocessor: the 8080.

According to Faggin, the 8080 was made possible by N-channel technology originally developed for Intel’s 4K dynamic memory. The new architecture called for significant enhancements: a 40-pin package for better connectivity, plus an improved interrupt structure and stack pointer to boost overall performance. With contributions from Japanese chip architect Masatoshi Shima, working under Faggin’s direction, the design expanded the addressable memory space from 16 KB to 64 KB, making room for larger programs and more data. Together with a higher clock speed, these improvements delivered a notable leap in computing performance.
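The jump from 16 KB to 64 KB follows directly from the number of address lines. As a rough sketch, assuming the 8008 exposed 14 address bits (consistent with the 16 KB figure) and the 8080 exposed 16, the arithmetic looks like this. The function and dictionary names are hypothetical and only illustrate the calculation.

```python
def addressable_bytes(address_bits: int) -> int:
    """Number of distinct byte addresses reachable with the given bus width."""
    return 2 ** address_bits


# Assumed address widths: 14 bits for the 8008, 16 bits for the 8080.
ADDRESS_BITS = {"8008": 14, "8080": 16}

# Convert each chip's address space to kilobytes (1 KB = 1024 bytes).
sizes_kb = {
    chip: addressable_bytes(bits) // 1024
    for chip, bits in ADDRESS_BITS.items()
}

for chip, kb in sizes_kb.items():
    print(f"{chip}: {kb} KB addressable")
```

Each extra address bit doubles the reachable memory, so two additional bits give the fourfold increase described above.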

Technical Advancements and Specifications

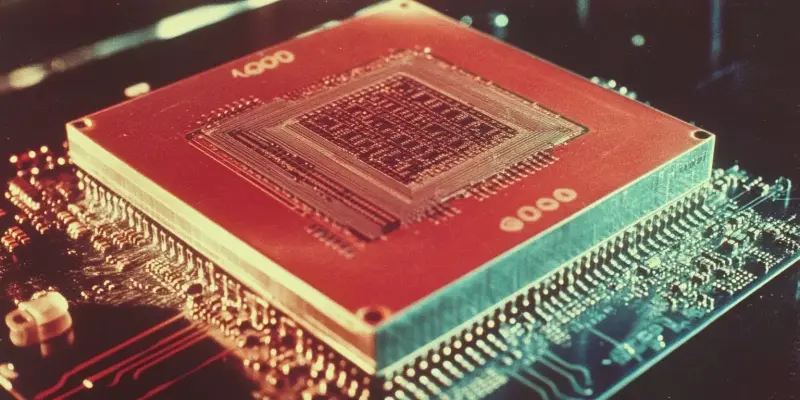

Hailed by Marco as the “first computer on a chip,” the Intel 8080 was a groundbreaking achievement for its time. At its core, it was an eight-bit processor with a 16-bit address bus, which allowed it to access up to 64 KB of memory. It ran at a clock speed of 2 MHz, significantly faster than its predecessors, and later variants such as the 3.125 MHz 8080A-1 offered even greater performance. The processor included seven general-purpose eight-bit registers, a 16-bit stack pointer, and a 16-bit program counter, along with an instruction set of more than 70 operations covering data transfer, arithmetic, logic, and control flow.
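To make these figures concrete, the sketch below shows two small calculations: how two eight-bit values combine into one 16-bit address (the 8080 pairs its H and L registers this way to point at memory, a detail not stated in this article), and how a clock frequency converts to a cycle time. The function names are hypothetical and the code is only an illustration, not a model of the chip.

```python
def pair_to_address(high: int, low: int) -> int:
    """Combine two 8-bit values (e.g. the H and L registers) into a 16-bit address."""
    if not (0 <= high <= 0xFF and 0 <= low <= 0xFF):
        raise ValueError("each register value must fit in 8 bits")
    return (high << 8) | low


def clock_period_ns(freq_mhz: float) -> float:
    """Length of one clock cycle in nanoseconds for a frequency given in MHz."""
    return 1000.0 / freq_mhz


# The highest address a 16-bit bus can express: both halves set to 0xFF.
top_address = pair_to_address(0xFF, 0xFF)
print(f"Top address: {top_address:#06x} ({top_address + 1} bytes total)")

# Cycle times for the two clock speeds mentioned in the text.
for mhz in (2.0, 3.125):
    print(f"{mhz} MHz -> {clock_period_ns(mhz)} ns per cycle")
```

The top address plus one equals 65,536 bytes, which is the 64 KB limit given in the text.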

Physically, the 8080 was built on a six-micron process and contained approximately 6,000 transistors. It came in a 40-pin dual in-line package (DIP) that made it easier to connect to support chips. The processor did need three supply voltages (+5 V, +12 V, and -5 V) and relied on external chips such as the i8224 clock generator and the i8228 bus controller for full functionality. Even so, it was a significant advance over older processors and their many limitations. In 1974, these innovations made the 8080 a milestone in microprocessor technology, even though its specifications appear modest by today’s standards.

Impact on Personal Computing and Software Development

The 8080 set off a chain reaction of innovation across the computing industry. One of its most notable roles was powering the Altair 8800, one of the first widely recognized personal computers. The Altair’s success inspired Bill Gates and Paul Allen to develop a BASIC interpreter for the machine, which ultimately led to the founding of Microsoft. As the 8080 spread through personal computers, computing power was no longer confined to large institutional mainframes and became available to individual users at home and in small businesses.

Beyond personal computing, the 8080 made substantial contributions to the gaming industry. Early arcade games such as Midway’s Gun Fight and Taito’s Space Invaders ran on the 8080 and helped launch the video game revolution. The processor also proved highly effective in embedded systems, where it controlled devices ranging from industrial equipment to medical instruments. It spurred the development of more advanced software as well. One prominent example is CP/M, one of the first operating systems designed for personal computers. CP/M’s architecture and features later influenced MS-DOS, which went on to dominate the personal computer market for years.

Legacy and Influence on Modern Computing

Although the 8080 was not the first microprocessor, it stood out as the first capable of functioning as a truly general-purpose processor, and in doing so it effectively created the blueprint for the emerging microprocessor market. It set the stage for contemporary computing and established Intel as a global leader in microchip technology. Its use in tasks ranging from personal computing to complex industrial automation showed how widely a single chip design could be applied across fields and industries. It also spurred innovation in software development, as programmers and engineers built on its capabilities, driving further technological progress and cementing the microprocessor’s role in modern electronic devices.