I’m thrilled to sit down with Dominic Jainy, a seasoned IT professional whose expertise spans artificial intelligence, machine learning, and blockchain. With a deep understanding of emerging technologies, Dominic has a unique perspective on the evolving world of CPU architectures and the shift toward heterogeneous computing. Today, we’ll explore how the industry has moved beyond the competition between instruction set architectures (ISAs), the rise of multi-architecture systems driven by AI demands, and the distinct roles of x86, Arm, and RISC-V in modern computing. We’ll also dive into the future of chip design and the growing importance of performance per watt across diverse applications.

Can you walk us through what’s changed in the so-called ‘ISA Wars’ and why the focus isn’t on one architecture dominating anymore?

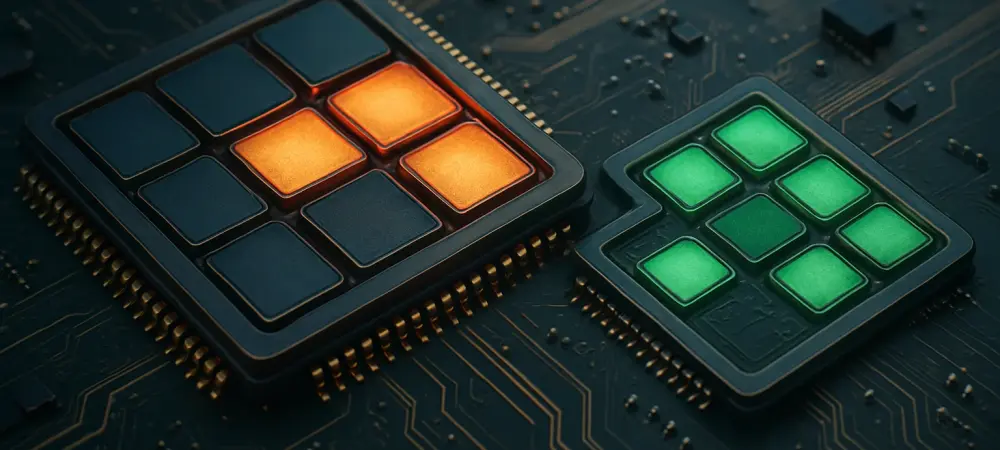

Absolutely. The ‘ISA Wars,’ where architectures like x86, Arm, and RISC-V were pitted against each other as the ultimate solution, have largely faded. The industry used to obsess over which architecture would reign supreme, but we’ve realized that no single ISA can perfectly address every workload. Today, it’s less about picking a winner and more about leveraging the strengths of multiple architectures within the same system. This shift comes from understanding that different tasks—whether it’s AI processing, power management, or general computing—benefit from specialized designs. Heterogeneous systems allow us to mix and match ISAs for optimal performance, efficiency, and cost.

What’s fueling this trend toward heterogeneous CPU systems in modern designs?

The biggest driver is the explosion of artificial intelligence and its enormous demand for computational power. AI workloads require massive performance gains while keeping energy use in check, whether you’re in a cloud data center or on a tiny edge device. This has pushed designers to integrate different CPU architectures and accelerators like GPUs and NPUs into a single system-on-chip or platform. Beyond AI, the broader push for performance per watt across industries, from mobile to automotive, means we can’t rely on a one-size-fits-all approach. Heterogeneous systems let us tailor solutions to specific needs, balancing performance against power consumption.

Could you share some real-world examples of how combining multiple architectures benefits specific applications?

Sure, take edge AI devices as a great example. You might have an Arm-based core handling general processing and running Linux, paired with a RISC-V-based neural processing unit for AI tasks. This combo ensures the system is both flexible and efficient for machine learning at the edge. Another case is in modern x86 processors for PCs and servers, which often embed auxiliary RISC cores for things like security or power management. Even in automotive systems, Arm dominates for its low power use in control units, while other architectures might handle specific real-time tasks. These hybrid setups optimize each component for its role.

Why has performance per watt become such a critical factor in computing today, from massive servers to tiny devices?

It’s all about sustainability and scalability. In cloud servers, power consumption directly ties to operational costs and environmental impact—hyperscale providers are under pressure to reduce their carbon footprint. On the other end, in small devices like IoT sensors or wearables, battery life is everything. Performance per watt ensures you’re squeezing the most compute out of every bit of energy, which is crucial as workloads grow more complex with AI and data processing. If you can’t scale efficiently, you’re either burning through power or failing to meet performance needs, and no one can afford that tradeoff anymore.
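To make "squeezing the most compute out of every bit of energy" concrete, performance per watt can be written as throughput divided by power. Because a watt is one joule per second, the time units cancel and the metric becomes work done per joule. Multiplying it by an energy budget $E$ (a battery's charge, or a data center's power allocation over some period) gives the total work that budget can buy. "Operations" here is generic (inferences, transactions, and so on), and the symbol $E$ is introduced only for illustration.

$$
\text{performance per watt} = \frac{\text{operations/s}}{\text{W}} = \frac{\text{operations/s}}{\text{J/s}} = \frac{\text{operations}}{\text{J}},
\qquad
\text{total work} = E \times \text{performance per watt}
$$

This is why the same metric matters at both ends of the scale: for a server fleet, $E$ is a power and cooling bill, and for a wearable, it is a fixed battery.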

Let’s talk about the major CPU architectures—starting with x86. What are its core strengths, and where does it struggle compared to others?

x86, long championed by Intel and AMD, is still the backbone of PCs and general-purpose servers. Its biggest strength is the massive software ecosystem built around it—most legacy applications are designed for x86, ensuring compatibility and ease of use. It’s also incredibly versatile for high-performance computing. However, it often lags in power efficiency compared to architectures like Arm, especially in mobile or embedded contexts. x86 chips tend to be more complex and power-hungry, which makes them less ideal for small, battery-powered devices or applications where every watt counts.

How has Arm carved out such a dominant role in so many areas of computing?

Arm’s rise is a story of efficiency and adaptability. It started as a low-power, compact architecture perfect for embedded systems, which made it a natural fit for mobile devices and IoT. Over time, it built the largest hardware and software ecosystem, displacing older architectures in everything from automotive to consumer electronics. In the last decade, Arm tackled software compatibility issues and scaled up to challenge x86 in PCs and servers—think Apple’s transition to Arm-based Macs or custom server chips by hyperscalers like Amazon and Google. Its flexibility and energy efficiency have made it a go-to for diverse applications.

RISC-V is often called a game-changer due to its open-source nature. How does that play out in its adoption, and what hurdles does it face?

RISC-V’s open licensing is its superpower. Anyone can implement and customize the ISA without paying license fees or royalties, which is a huge draw for companies designing bespoke solutions, especially in embedded systems like microcontrollers or AI accelerators. Right now, many of its implementations are deeply embedded: think specific functions within larger x86 or Arm chips. However, it’s still early days for RISC-V. Its software tools and ecosystem aren’t as mature as those of Arm or x86, which limits broader adoption. Building that support and proving reliability at scale are its biggest challenges.

Looking at Arm’s incredible shipment numbers—29 billion processors in 2024—how does that reflect its market impact compared to x86’s much lower volume?

The sheer volume of Arm processors shipped, 29 billion versus x86’s 250 to 300 million, shows how pervasive Arm has become in everyday tech. Arm dominates in mobile, IoT, and embedded markets, where devices are produced in massive quantities. Nearly every smartphone and smartwatch, along with countless sensors, runs on Arm. x86, while critical for PCs and servers, operates in a narrower, high-value space with fewer units but often higher complexity and cost per chip. Arm’s numbers highlight its role as the architecture of ubiquitous computing, while x86 remains the heavyweight for traditional computing power.
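Expressed as a ratio, the shipment figures quoted above put Arm at roughly 100 times x86's unit volume. This back-of-the-envelope check assumes the 250 to 300 million x86 range covers the same year as Arm's 2024 figure; the interview does not state that explicitly.

$$
\frac{29 \times 10^{9}}{300 \times 10^{6}} \approx 97,
\qquad
\frac{29 \times 10^{9}}{250 \times 10^{6}} = 116
$$

Unit counts say nothing about revenue or compute delivered per chip, which is why x86 can remain a heavyweight despite a gap of two orders of magnitude.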

As we look to the future of chip design with AI and chiplets on the horizon, how do you see multiple architectures working together in a single system?

The future is all about integration and specialization. With AI driving unprecedented performance needs, and chiplets enabling modular designs, we’re moving toward systems where multiple architectures coexist seamlessly on the same chip or platform. Chiplets allow us to mix and match CPU cores, accelerators, and even different ISAs based on workload demands: think Arm for efficiency, RISC-V for custom AI tasks, and x86 for legacy support, all in one package. This approach maximizes performance per watt and flexibility, and as chiplets trickle down to embedded applications, we’ll see even more innovation in how architectures collaborate.

What’s your forecast for the role of heterogeneous computing in shaping the next decade of technology?

I believe heterogeneous computing will be the foundation of tech innovation over the next decade. As workloads diversify—think AI, edge computing, and real-time processing in everything from cars to smart cities—the need for tailored, multi-architecture systems will only grow. We’ll see deeper integration of ISAs like Arm, RISC-V, and x86, supported by advancements in chiplet technology and open ecosystems. Performance per watt will remain the guiding metric, pushing designs to be smarter and more sustainable. Ultimately, it’s not about which architecture wins; it’s about how they team up to solve the complex challenges ahead.