Today we’re speaking with Dominic Jainy, an IT professional whose work at the intersection of artificial intelligence, machine learning, and blockchain offers a unique perspective on the future of enterprise technology. With data becoming ever more critical to business operations, the bottleneck between those who need data and those who can access it has become a major point of friction. Dominic is here to discuss how a new wave of AI-powered tools is revolutionizing our ability to interact with SQL databases, promising to democratize data access and accelerate insight generation.

This conversation explores the real-world impact of conversational SQL, delving into how these tools reshape team workflows and reduce the dependency on specialized data teams. We’ll examine the critical architectural differences between tools that translate language directly to SQL and those that use a sophisticated semantic layer for reasoning across multiple data sources. The discussion also covers the distinct user experiences of integrated IDE assistants versus standalone chat interfaces, the value of transparent tools that upskill users, and the essential security and governance practices for deploying these AIs safely in an enterprise environment.

We often see a bottleneck where business users need data, but data teams are overwhelmed with ad-hoc requests. How exactly do conversational SQL tools change this dynamic, and what are the first positive impacts an organization typically sees after adoption?

That bottleneck is a source of immense frustration on both sides, and it’s where these tools have their most immediate and visceral impact. The dynamic shifts from a ticket-based, sequential workflow to a self-service, interactive one. Imagine a marketing manager who needs to understand the performance of a recent campaign. In the old world, she files a request, waits in a queue for days, and gets a static report. If she has a follow-up question, the cycle starts all over again. After adopting a conversational tool, that same manager can simply type, “Show me the conversion rates for the new campaign, broken down by customer acquisition channel in the last 30 days,” and get her answer in seconds. The first positive impact is a dramatic reduction in the backlog of these ad-hoc requests. The data team suddenly has breathing room: freed from routine queries, they can focus on larger, more strategic projects like building new data models or optimizing core infrastructure. The atmosphere changes from one of dependency and delay to one of empowerment and speed.

Some tools translate natural language directly into SQL queries, while others use a semantic metadata layer for reasoning across multiple data sources. In what specific scenarios would you recommend one approach over the other, and what risks do teams face if they choose the wrong one?

This is one of the most critical distinctions, and choosing incorrectly can lead to significant friction down the line. The direct-translation approach, which you’d see in a tool like Chat2DB, is fantastic for teams that need immediate value and work with a well-understood, single data source. A small, agile analytics team that wants to quickly explore sales trends in its primary database would find it a perfect fit. It’s fast, intuitive, and gets the job done. The risk is a lack of consistency and accuracy once things get complex. The AI might misinterpret a nuanced business term or join tables incorrectly, producing subtly wrong answers that erode trust.

On the other hand, a semantic metadata layer, like the one used by GigaSpaces, is built for the enterprise. I’d recommend this approach in any environment with high stakes and high complexity (think finance, healthcare, or logistics), where data from multiple, disparate systems must be queried. This layer acts as a bridge, translating the business’s vocabulary and logic for the AI. It ensures that when a user asks about “quarterly profit,” the AI applies the precise, governed definition, including all the necessary calculations and data sources. Choosing a direct-translation tool in this scenario can be catastrophic: different departments could get wildly different answers to the same question, undermining the entire concept of a single source of truth.
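To make the semantic-layer idea concrete, here is a minimal Python sketch, assuming a tiny in-code catalog. It is not GigaSpaces’ API: the `Metric` class, the `finance_ledger` table and its columns, and the synonym list are all hypothetical. What it illustrates is that every phrasing of “quarterly profit” resolves to one governed definition, so the generated SQL is identical no matter who asks.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Metric:
    """One governed business metric (hypothetical structure, for illustration only)."""
    column_alias: str        # name of the result column
    expression: str          # the approved SQL aggregate
    source: str              # table or view that holds the data
    dimensions: frozenset    # columns this metric may be grouped by


# Hypothetical governed catalog: exactly one definition per business term.
CATALOG = {
    "quarterly profit": Metric(
        column_alias="quarterly_profit",
        expression="SUM(revenue) - SUM(cost)",
        source="finance_ledger",
        dimensions=frozenset({"quarter", "region"}),
    ),
}

# Hypothetical synonyms that different departments might use for the same metric.
SYNONYMS = {
    "profit per quarter": "quarterly profit",
    "q profit": "quarterly profit",
}


def compile_metric_query(term: str, group_by: str) -> str:
    """Resolve a business term to its governed definition and emit the SQL for it."""
    key = term.strip().lower()
    key = SYNONYMS.get(key, key)
    if key not in CATALOG:
        raise KeyError(f"No governed definition for {term!r}")
    metric = CATALOG[key]
    # Only dimensions approved for this metric may be used for grouping.
    if group_by not in metric.dimensions:
        raise ValueError(f"{group_by!r} is not an approved dimension for {key!r}")
    return (
        f"SELECT {group_by}, {metric.expression} AS {metric.column_alias} "
        f"FROM {metric.source} GROUP BY {group_by} ORDER BY {group_by}"
    )
```

Because the grouping column is checked against an allow-list before it is placed in the string, free-form user text never reaches the SQL directly. A real semantic layer would also resolve joins across multiple sources, which this sketch deliberately leaves out.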

Tools like DataGrip and DBeaver embed AI directly into a developer’s IDE, whereas others offer a standalone chat interface. For what type of user or task is an integrated approach superior, and how does it practically change a data professional’s day-to-day workflow?

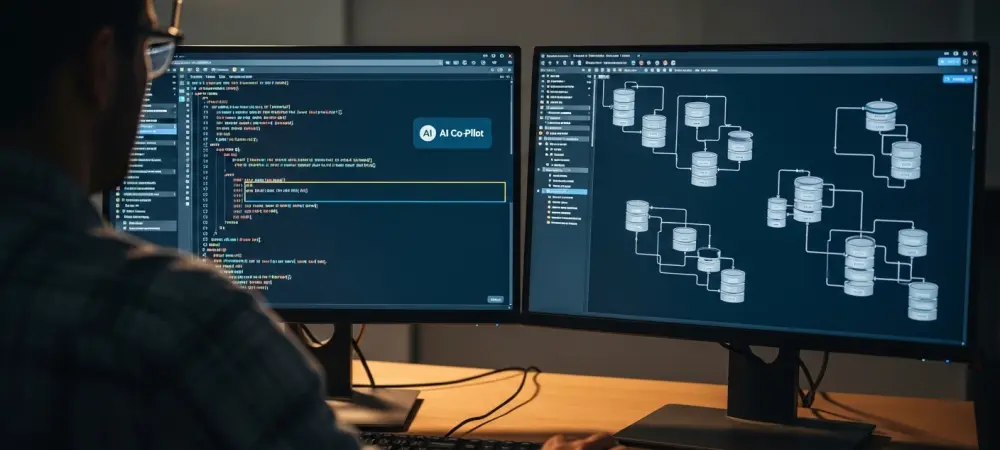

The integrated approach is a game-changer for the hands-on data professional: the data engineer, the database administrator, the seasoned analyst. For these users, SQL isn’t a foreign language; it’s their native tongue. They live inside IDEs like DataGrip, and a standalone chat interface would feel like an interruption, an unnecessary context switch. The integrated AI acts as an intelligent co-pilot, and it transforms their day-to-day workflow. Instead of painstakingly writing a complex query from scratch, they can write a comment describing the logic and let the AI generate the SQL. When a query runs slowly, the AI can offer optimization suggestions right there, in context, drawing on its awareness of the database schema. It doesn’t just generate code; it explains it, validates it, and helps refine it. It’s about augmenting expertise, not replacing it, making a skilled professional faster, more accurate, and more productive.

A tool like AI2sql focuses narrowly on converting language to SQL, acting as a productivity aid rather than hiding the query. In what ways does this transparent approach help upskill users, and how does it compare to tools that aim to completely abstract SQL away?

This transparent approach is incredibly powerful because it turns a productivity tool into an educational one. When a user, perhaps a junior analyst or a product manager, asks a question in plain English and AI2sql shows the SQL query that answers it, a learning moment happens. They see the direct connection between their business question and the technical syntax. The first time, it might look like gibberish. But after a few uses, they start to recognize patterns: SELECT, FROM, WHERE, GROUP BY. This demystifies SQL. It’s like learning a new language with an instant translator at your side. Over time, they gain the confidence to tweak the generated query or even write simple ones themselves. This stands in stark contrast to tools that completely hide the SQL. Abstraction offers simplicity, but it also creates dependency. The transparent method fosters literacy and upskills your entire team, creating a more data-fluent organization from the ground up.
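As an illustration of what a transparent tool puts in front of the user, here is the marketing manager’s question from earlier paired with one plausible SQL translation, run against an in-memory SQLite database from Python. The `sessions` table, its columns, the campaign name, and the fixed `as_of` date are assumptions made for the example; this is not AI2sql’s actual output. The SQL comments mark where the SELECT, FROM, WHERE, and GROUP BY patterns appear.

```python
import sqlite3

QUESTION = (
    "Show me the conversion rates for the new campaign, broken down by "
    "customer acquisition channel in the last 30 days."
)

# One plausible translation a transparent tool might display (hypothetical schema).
GENERATED_SQL = """
SELECT channel,
       ROUND(AVG(converted) * 100.0, 1) AS conversion_rate_pct  -- converted is 0 or 1
FROM sessions                                                    -- FROM: source table
WHERE campaign = :campaign                                       -- WHERE: filter rows first
  AND visit_date >= date(:as_of, '-30 days')
GROUP BY channel                                                 -- GROUP BY: one row per channel
ORDER BY channel
"""


def show_translation() -> str:
    """What a transparent tool displays: the question alongside the SQL it produced."""
    return f"Question: {QUESTION}\nSQL:{GENERATED_SQL}"


def conversion_by_channel(conn: sqlite3.Connection, campaign: str, as_of: str) -> list:
    """Run the generated query; as_of is an ISO date string such as '2024-06-30'."""
    return conn.execute(GENERATED_SQL, {"campaign": campaign, "as_of": as_of}).fetchall()
```

Keeping the SQL visible next to the question is what lets a reader map each phrase of the request (“in the last 30 days,” “broken down by channel”) to the clause that implements it.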

When connecting an AI to a production or analytical database, concerns around performance, governance, and security are paramount. What architectural safeguards or best practices should a company implement when deploying these tools to ensure both safety and reliable access for users?

This is where the rubber meets the road for enterprise adoption. You simply cannot point a conversational AI at a core production database and hope for the best. The first and most critical safeguard is architectural isolation. Many mature platforms achieve this by introducing an intermediary data layer, or by having the AI reason over metadata rather than querying operational tables directly. Either way, user queries, which can be unpredictable, don’t hit and potentially overload operational systems; the layer acts as a necessary buffer. Second, robust governance is non-negotiable. You need fine-grained access controls that mirror your existing security policies, so that a user can query only the data they are explicitly authorized to see. Finally, context retention is key for reliability. An effective tool must remember the conversation’s context so it can refine answers iteratively instead of producing inconsistent or nonsensical results. Without these safeguards, you risk performance degradation, data breaches, and a general loss of trust in the system, turning a promising technology into a liability.
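The isolation and access-control safeguards can be sketched with Python’s built-in `sqlite3` module, as a minimal illustration under stated assumptions rather than a production design. AI-generated queries run against a snapshot copied with `Connection.backup()` instead of the operational database; the snapshot is made read-only with SQLite’s `query_only` pragma; and an authorizer callback registered with `set_authorizer()` denies reads from tables outside the user’s grants. The `GRANTS` mapping, role names, table names, and the `QueryRejected` exception are hypothetical stand-ins for an enterprise policy store; a real deployment would enforce the same rules through the warehouse’s own access controls.

```python
import sqlite3

# Hypothetical role-to-table grants, standing in for an enterprise policy store.
GRANTS = {
    "marketing_analyst": {"campaigns"},
    "hr_admin": {"campaigns", "employees"},
}

# Statement types that could undo the guardrails (e.g. "PRAGMA query_only = OFF").
BLOCKED_ACTIONS = {sqlite3.SQLITE_PRAGMA, sqlite3.SQLITE_ATTACH}


class QueryRejected(Exception):
    """Raised when the database refuses an AI-generated statement."""


def make_guarded_snapshot(prod: sqlite3.Connection, role: str) -> sqlite3.Connection:
    """Copy the operational database into an isolated, read-only, access-controlled snapshot."""
    # Isolation: AI-generated queries hit this copy, never the operational connection.
    snapshot = sqlite3.connect(":memory:")
    prod.backup(snapshot)

    # Read-only: any INSERT, UPDATE, DELETE, or DDL fails when it executes.
    snapshot.execute("PRAGMA query_only = ON")

    allowed = GRANTS.get(role, set())

    def authorizer(action, arg1, arg2, db_name, trigger_or_view):
        if action in BLOCKED_ACTIONS:
            return sqlite3.SQLITE_DENY
        # For SQLITE_READ, arg1 is the table name and arg2 the column name.
        if action == sqlite3.SQLITE_READ and arg1 not in allowed:
            return sqlite3.SQLITE_DENY
        return sqlite3.SQLITE_OK

    # Registered after the PRAGMA above, so our own setup is not blocked.
    snapshot.set_authorizer(authorizer)
    return snapshot


def run_generated_sql(conn: sqlite3.Connection, sql: str) -> list:
    """Execute AI-generated SQL; any database error, including a denial, becomes QueryRejected."""
    try:
        return conn.execute(sql).fetchall()
    except sqlite3.DatabaseError as exc:
        raise QueryRejected(str(exc)) from exc
```

Table-level denial is deliberately coarse here; column- or row-level policies would need finer rules in the same callback or, more realistically, the database’s native security features. Context retention is a separate concern and is not covered by this sketch.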

What is your forecast for the evolution of AI-driven data interaction?

I believe we are just scratching the surface. The current generation of tools is focused on translating human intent into machine-readable queries, which is a massive leap forward. But the next evolution will be a shift from reactive Q&A to proactive partnership. The AI won’t just wait for you to ask a question; it will anticipate your needs. It will analyze your data and your query patterns to surface insights you didn’t even know to look for, saying things like, “I’ve noticed a significant drop in user engagement in the Midwest region. Would you like to see the contributing factors?” Interaction will also become far more multi-modal, seamlessly blending voice commands, text, and dynamic visualizations. Ultimately, I forecast that these AI agents will become deeply embedded, ambient partners in our workflows, transforming data interaction from a distinct task we perform into an intelligent, continuous dialogue that drives decision-making in real time.