In the fast-moving field of artificial intelligence (AI), it pays to keep up with the latest trends and advancements. One way to spot those trends is to notice recurring patterns in the questions reporters ask. In this article, we examine a common misconception about the processing requirements of generative AI and look at more cost-effective alternatives that can still handle AI workloads.

Misconceptions About Generative AI and Processing Requirements

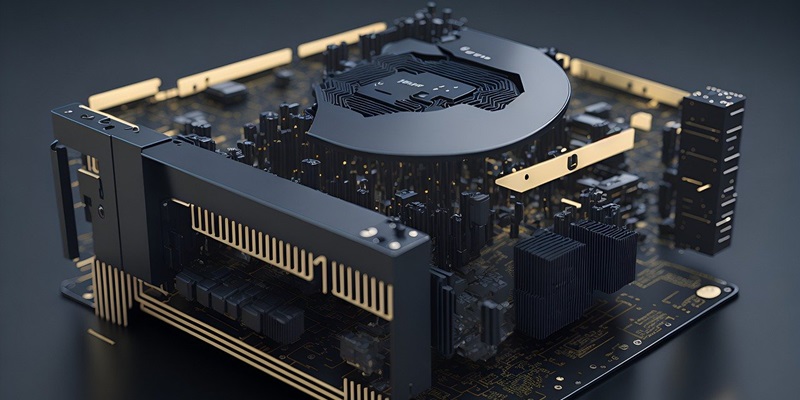

A prevailing assumption is that generative AI requires specialized hardware such as GPUs, or even quantum computers. GPUs do significantly improve performance, but they come at a high cost. The misconception lies in assuming that GPUs are the only viable option for generative AI tasks.

Alternative Processing Option: CPUs

Contrary to popular belief, central processing units (CPUs) are capable of handling AI workloads, including generative AI. CPUs provide a viable and cost-effective solution, particularly for smaller organizations or individuals with limited resources. Compared with GPUs, CPUs are more accessible in terms of both initial investment and power consumption.

Advancements in AI Algorithms and SLIDE

AI algorithms are evolving alongside the hardware. One such development is the Sub-Linear Deep Learning Engine (SLIDE). Rather than computing every activation in a layer, SLIDE uses locality-sensitive hashing to pick out the small subset of neurons most relevant to each input and does the work only for those, which is what allows it to run efficiently on commodity CPUs. Algorithms of this kind can reduce reliance on resource-intensive processing units and make cost optimization a realistic prospect for AI workloads, including generative AI.
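To make the idea of hash-based neuron selection concrete, here is a minimal sketch in plain Python. It is not the SLIDE implementation: it assumes SimHash (signed random projections) as the locality-sensitive hash, and every function name, table size, and dimension below is a hypothetical choice made for illustration.

```python
import random


def make_hyperplanes(num_bits, dim, rng):
    """Draw random hyperplanes; each one contributes one bit to the hash code."""
    return [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(num_bits)]


def simhash(vec, planes):
    """SimHash: record which side of each hyperplane the vector falls on."""
    code = 0
    for plane in planes:
        dot = sum(p * v for p, v in zip(plane, vec))
        code = (code << 1) | (1 if dot >= 0 else 0)
    return code


def build_tables(weights, tables_planes):
    """Index each neuron's weight vector in every hash table."""
    tables = []
    for planes in tables_planes:
        buckets = {}
        for neuron_id, w in enumerate(weights):
            buckets.setdefault(simhash(w, planes), []).append(neuron_id)
        tables.append(buckets)
    return tables


def select_active(x, tables, tables_planes):
    """Neurons that share a bucket with the input in at least one table."""
    active = set()
    for planes, buckets in zip(tables_planes, tables):
        active.update(buckets.get(simhash(x, planes), ()))
    return active


def sparse_forward(x, weights, active):
    """Compute pre-activations only for the selected neurons."""
    return {j: sum(w * v for w, v in zip(weights[j], x)) for j in active}


# Hypothetical layer: 1000 neurons, 16 inputs, 4 hash tables of 6 bits each.
rng = random.Random(0)
dim, num_neurons = 16, 1000
weights = [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(num_neurons)]
tables_planes = [make_hyperplanes(6, dim, rng) for _ in range(4)]
tables = build_tables(weights, tables_planes)

# An input equal to neuron 42's weights hashes to the same buckets as neuron 42.
x = list(weights[42])
active = select_active(x, tables, tables_planes)
outputs = sparse_forward(x, weights, active)
print(f"computed {len(active)} of {num_neurons} neurons")
```

The saving comes from `sparse_forward` touching only the neurons returned by `select_active`. A real system would also need to rebuild or update the hash tables as the weights change during training, which this sketch leaves out.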

Exploring Other Processor Options: FPGAs

Field-programmable gate arrays (FPGAs) are another interesting alternative for AI processing. Because an FPGA can be reprogrammed after manufacturing, its logic can be configured for a specific task, such as generative AI inference, and run that task very efficiently. This offers a more targeted approach that matches the hardware to the workload without the high costs associated with GPUs.

Cost-Effectiveness of Non-GPU Processors

Contrary to the prevailing belief, there are many cases where non-GPU processors are more cost-effective than their GPU counterparts. This is especially true for organizations whose workloads do not need the immense processing power GPUs provide. By understanding and using the capabilities of CPUs and FPGAs, these organizations can avoid unnecessary spending on high-cost GPU solutions.

Potential Overspending and Cost Optimization

Enterprises often spend exorbitant amounts on GPUs simply because they assume the performance gains justify the cost. With more cost-effective options available, system architects, cloud architects, and generative AI architects need to evaluate the trade-off between cost and performance for each workload. Finding the most cost-optimized solution, one that delivers the required processing power without straining the budget, is a core part of their job.
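One way to frame that trade-off is to compare what each option costs at the throughput a workload actually needs, rather than at peak performance. The sketch below does this in Python. All prices and throughput figures are hypothetical placeholders, not benchmarks, and the names `ProcessorOption` and `cheapest_plan` are invented for illustration.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class ProcessorOption:
    name: str
    hourly_cost_usd: float       # hypothetical price per instance-hour
    requests_per_second: float   # hypothetical sustained throughput per instance


def cost_per_million_requests(option):
    """Cost per million requests if the instance runs fully utilized."""
    requests_per_hour = option.requests_per_second * 3600
    return option.hourly_cost_usd / requests_per_hour * 1_000_000


def cheapest_plan(options, required_rps):
    """Pick the option with the lowest hourly bill that meets the required load."""
    plans = []
    for option in options:
        # Instances are bought whole, so round the count up.
        instances = math.ceil(required_rps / option.requests_per_second)
        plans.append((instances * option.hourly_cost_usd, option, instances))
    cost, option, instances = min(plans, key=lambda plan: plan[0])
    return option, instances, cost


# Placeholder figures for illustration only.
OPTIONS = [
    ProcessorOption("cpu", hourly_cost_usd=0.50, requests_per_second=5),
    ProcessorOption("fpga", hourly_cost_usd=1.65, requests_per_second=20),
    ProcessorOption("gpu", hourly_cost_usd=4.00, requests_per_second=60),
]

for load in (8, 100):
    option, instances, cost = cheapest_plan(OPTIONS, load)
    print(f"{load} req/s: {instances} x {option.name} at ${cost:.2f}/hour")
```

With these made-up numbers, the GPU has the lowest cost per request when fully utilized, yet the CPU is the cheapest choice at a light load because a mostly idle GPU is still billed in full. The point of the exercise is that the answer depends on the required throughput, so it is worth rerunning with measured figures for the workload in question.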

As the field of AI continues to advance, it is important to recognize that generative AI does not have to rely solely on GPUs or other specialized processing units. CPUs and FPGAs are viable alternatives that offer cost-effective solutions for organizations and individuals with limited resources. By keeping up with advances in AI algorithms, such as SLIDE, and staying open to alternative processors, architects can chart a path to a cost-optimized generative AI architecture. The future of AI depends on finding the right balance between performance and cost, which in turn enables wider adoption and innovation in the field.