As a seasoned IT professional with deep expertise in artificial intelligence and machine learning, Dominic Jainy has a unique vantage point on the evolution of enterprise technology. He has witnessed firsthand the shift from the initial hype of generative AI to its practical, and often challenging, implementation within large organizations. Today, he joins us to dissect the most significant trends shaping the AI landscape, moving beyond simple chatbots to the complex world of agentic systems. We will explore how these intelligent workflows are driven by “Supervisor Agents,” the immense pressure they place on traditional data infrastructure, and why a multi-model strategy is becoming the new standard. We will also delve into the counterintuitive finding that strong governance is actually accelerating deployment and discuss how the most valuable AI applications are often the most practical ones.

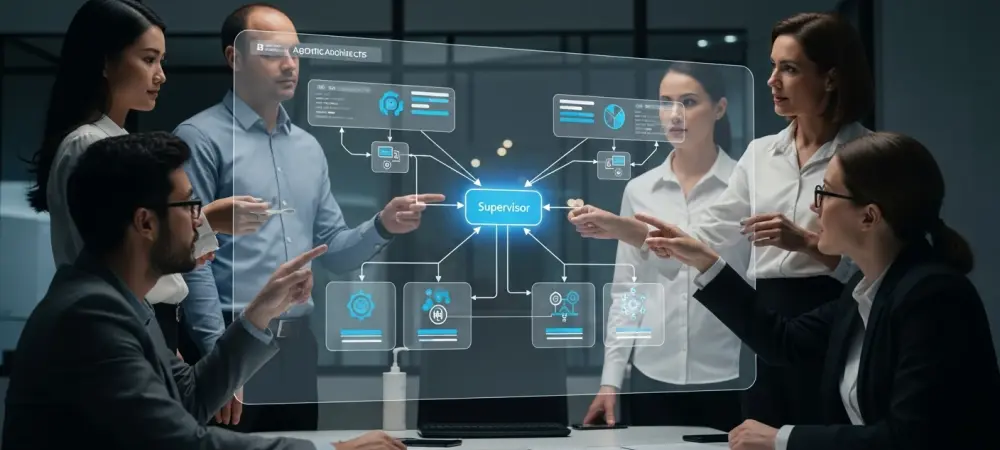

We’re seeing a rapid move toward “agentic” architectures where AI plans and executes tasks. Can you describe the role of a ‘Supervisor Agent’ in this model and why this orchestrator approach is proving more effective than using a single, monolithic AI for complex enterprise workflows?

It’s a fundamental shift in how we think about AI in a system. For a while, the goal was to build one massive, all-knowing model, but that’s like asking a CEO to also write code and file expense reports. It’s incredibly inefficient. The Supervisor Agent model works like a skilled manager. It doesn’t do all the work itself. Instead, it receives a complex request, understands the core intent, and then masterfully breaks it down, delegating smaller, specific tasks to specialized sub-agents or tools. One agent might be an expert at retrieving documents, another at running compliance checks, and a third at summarizing data. This approach has exploded, growing 327 percent on some platforms in just a few months, because it’s robust, scalable, and mirrors how effective human teams operate. It’s about smart orchestration, not just raw power.
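To make the delegation pattern concrete, here is a minimal sketch of a supervisor that splits a request into steps and hands each step to a specialized sub-agent. Everything in it is an illustrative assumption: the sub-agent functions, the `SUB_AGENTS` registry, and the keyword-based `plan` function are hypothetical stand-ins. In a real system, an LLM would interpret the intent and the sub-agents would call actual models or tools.

```python
from typing import Callable, Dict, List


# Hypothetical sub-agents: plain functions standing in for specialized models or tools.
def retrieve_documents(request: str) -> str:
    return f"documents relevant to '{request}'"


def run_compliance_check(request: str) -> str:
    return f"compliance check completed for '{request}'"


def summarize_data(request: str) -> str:
    return f"summary of '{request}'"


# Registry mapping a step name to the sub-agent that handles it (names are assumptions).
SUB_AGENTS: Dict[str, Callable[[str], str]] = {
    "retrieve": retrieve_documents,
    "compliance": run_compliance_check,
    "summarize": summarize_data,
}


def plan(request: str) -> List[str]:
    """Toy planner: keyword matching stands in for an LLM's intent analysis."""
    text = request.lower()
    steps: List[str] = []
    if "find" in text or "document" in text:
        steps.append("retrieve")
    if "compliance" in text or "policy" in text:
        steps.append("compliance")
    if "summar" in text:
        steps.append("summarize")
    # Fall back to a single summarization step when nothing matches.
    return steps or ["summarize"]


def supervisor(request: str) -> Dict[str, str]:
    """Break the request into steps and delegate each one to its sub-agent."""
    return {step: SUB_AGENTS[step](request) for step in plan(request)}


if __name__ == "__main__":
    for step, result in supervisor("Find the contract documents and summarize them").items():
        print(f"{step}: {result}")
```

The supervisor itself contains no task logic. It only plans and dispatches, which is why a new capability can be added by registering another sub-agent rather than retraining or replacing one large model.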

With AI agents now programmatically creating 80% of new databases, traditional infrastructure is under immense pressure. What specific challenges do these high-frequency, automated workflows pose for OLTP databases, and what architectural changes must companies make to support this new paradigm effectively?

The pressure is immense, and frankly, most legacy systems are cracking under the strain. Traditional OLTP databases were built for a human rhythm—predictable, structured transactions happening at a manageable pace. Now, imagine an army of AI agents working 24/7, programmatically creating and destroying environments to test code or run simulations. We’ve gone from AI creating a negligible 0.1 percent of databases to a staggering 80 percent in just two years. These agents generate a relentless, high-frequency storm of read-and-write operations that old architectures simply cannot handle. Companies must fundamentally re-architect for this reality. This means embracing platforms designed for both massive scale and high concurrency, and infrastructure that can spin up and tear down resources ephemerally, without the hours-long wait times developers used to endure.
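The spin-up and tear-down pattern described here can be sketched in a few lines. This example uses Python's built-in `sqlite3` module with an in-memory database purely as a stand-in. It is an assumption for illustration, not a recommendation of SQLite for agent workloads, and the `ephemeral_database` and `run_agent_test` names and the `events` table are hypothetical.

```python
import sqlite3
from contextlib import contextmanager
from typing import Iterator, List


@contextmanager
def ephemeral_database() -> Iterator[sqlite3.Connection]:
    """Create a throwaway database and guarantee it is destroyed afterwards."""
    conn = sqlite3.connect(":memory:")  # exists only for the life of the connection
    try:
        yield conn
    finally:
        conn.close()  # closing the connection discards the in-memory database


def run_agent_test(payloads: List[str]) -> int:
    """Simulate one agent task: create a schema, write rows, read them back."""
    with ephemeral_database() as db:
        db.execute("CREATE TABLE events (id INTEGER PRIMARY KEY, payload TEXT)")
        db.executemany(
            "INSERT INTO events (payload) VALUES (?)",
            [(p,) for p in payloads],
        )
        (count,) = db.execute("SELECT COUNT(*) FROM events").fetchone()
        return count


if __name__ == "__main__":
    print(run_agent_test(["build", "test", "simulate"]))
```

The point of the sketch is the lifecycle rather than the engine: each agent task gets its own isolated environment that is created in milliseconds and cleaned up automatically, even if the task fails partway through.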

Many enterprises now use multiple LLM families—like Claude, Llama, and Gemini—simultaneously, with retail leading this trend. Beyond avoiding vendor lock-in, what are the key technical and financial advantages of this multi-model strategy, and how do teams orchestrate which model handles a given task?

Avoiding vendor lock-in is a great start, but the real magic is in optimization. It’s just not financially or technically sound to use a sledgehammer to crack a nut. The most sophisticated companies (and we see 78 percent of them using two or more model families) treat their LLMs like a toolbox. They use a router or a supervisor agent to analyze an incoming task. Is it a simple classification or sentiment analysis? Route it to a smaller, faster, and much cheaper open-source model. Is it a complex, multi-step reasoning problem that requires nuanced understanding? That’s when you call on the expensive frontier model. Retailers are at the forefront here, with 83 percent employing this strategy to balance the high cost of cutting-edge performance with the sheer volume of daily tasks. It’s a portfolio approach that delivers the best performance-per-dollar.
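A router of this kind can be very small. The sketch below is a simplified illustration under stated assumptions: the model names are placeholders, the per-token prices are invented for the example and do not reflect any vendor's pricing, and the task type is passed in directly rather than inferred by a classifier.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelOption:
    name: str
    cost_per_1k_tokens: float  # illustrative figure only, not real pricing


# Hypothetical model tiers.
SMALL = ModelOption("small-open-model", 0.0002)
FRONTIER = ModelOption("frontier-model", 0.0150)

# Task types assumed to be simple enough for the cheaper model.
SIMPLE_TASKS = {"classification", "sentiment"}


def route(task_type: str) -> ModelOption:
    """Send simple tasks to the small model and everything else to the frontier model."""
    return SMALL if task_type in SIMPLE_TASKS else FRONTIER


def estimated_cost(task_type: str, tokens: int) -> float:
    """Estimate the cost of one request under the illustrative prices above."""
    return route(task_type).cost_per_1k_tokens * tokens / 1000


if __name__ == "__main__":
    for task in ("sentiment", "multi_step_reasoning"):
        print(task, "->", route(task).name, estimated_cost(task, 2000))
```

With these made-up prices, a sentiment request costs a small fraction of what the same request would cost on the frontier tier, which is the performance-per-dollar argument in miniature. Production routers typically also weigh latency, context length, and measured quality per task.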

It might seem counterintuitive, but organizations with strong AI governance frameworks are deploying projects over 12 times faster. Could you walk us through how establishing these guardrails and evaluation tools actually accelerates production, perhaps with an anecdote of a project that succeeded specifically because of them?

It absolutely feels backward at first glance, but it’s the single biggest accelerator I see in the field. Without governance, an AI project is a black box. The legal team is nervous, the security team is skeptical, and business leaders are hesitant to approve something they can’t control or understand. Pilots get stuck in an endless proof-of-concept phase because the risks are unquantified. I worked with a financial services firm that wanted to use an agent for client communication. The project was stalled for months over compliance fears. Once we implemented a governance framework—defining clear data usage policies, setting rate limits, and using evaluation tools to rigorously test for model drift and accuracy—everything changed. The stakeholders suddenly had a dashboard and a set of rules they could trust. That confidence allowed them to approve the project, and it went from a stalled pilot to a production system in weeks. Those guardrails didn’t slow them down; they paved the road for deployment.
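Two of the guardrails mentioned in this answer, rate limits and accuracy evaluation, are simple enough to sketch. The code below is a hypothetical illustration, not the framework used in the anecdote: the `RateLimiter` class, the `passes_release_gate` function, and the 0.9 accuracy threshold are all assumptions chosen for the example.

```python
import time
from collections import deque
from typing import Deque, Optional, Sequence


class RateLimiter:
    """Sliding-window limiter: allow at most max_calls per window_seconds."""

    def __init__(self, max_calls: int, window_seconds: float) -> None:
        self.max_calls = max_calls
        self.window_seconds = window_seconds
        self._calls: Deque[float] = deque()

    def allow(self, now: Optional[float] = None) -> bool:
        now = time.monotonic() if now is None else now
        # Drop timestamps that have aged out of the window.
        while self._calls and now - self._calls[0] >= self.window_seconds:
            self._calls.popleft()
        if len(self._calls) < self.max_calls:
            self._calls.append(now)
            return True
        return False


def accuracy(predictions: Sequence[str], labels: Sequence[str]) -> float:
    """Fraction of predictions that match the labeled evaluation set."""
    if not labels or len(predictions) != len(labels):
        raise ValueError("predictions and labels must be non-empty and equal in length")
    return sum(p == l for p, l in zip(predictions, labels)) / len(labels)


def passes_release_gate(
    predictions: Sequence[str], labels: Sequence[str], min_accuracy: float = 0.9
) -> bool:
    """Block promotion to production when accuracy falls below the agreed threshold."""
    return accuracy(predictions, labels) >= min_accuracy


if __name__ == "__main__":
    limiter = RateLimiter(max_calls=2, window_seconds=60.0)
    print([limiter.allow(now=t) for t in (0.0, 1.0, 2.0)])
    print(passes_release_gate(["a", "b", "c", "d"], ["a", "b", "c", "x"]))
```

Re-running the same evaluation set on a schedule and comparing the score against the threshold is one simple way to surface drift. Checks like these give legal and security stakeholders a concrete, auditable rule to approve instead of an open-ended risk.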

The most valuable AI use cases today seem to focus on practical automation—like predictive maintenance or customer support—rather than futuristic autonomy. What steps should a business leader take to identify these high-impact “boring” problems within their organization and successfully build an agentic system to solve them?

Business leaders need to stop chasing science fiction and start looking for friction. The greatest immediate value isn’t in creating a fully autonomous company; it’s in automating the mundane, repetitive tasks that drain resources and kill productivity. The first step is to simply ask your teams: “What are the routine, data-intensive tasks that you wish you didn’t have to do?” You’ll find gold in the answers. In manufacturing, it’s often about sifting through sensor data for predictive maintenance, a use case that accounts for 35 percent of that sector’s AI projects. For others, it’s customer support, which makes up a huge chunk, 40 percent, of top use cases across all industries. Once you identify a high-friction, high-volume problem, you can build a focused agentic system to solve it. Success in these “boring” areas builds tangible ROI and the organizational confidence needed to tackle more complex challenges down the line.

The overwhelming majority of AI inference requests—96%—are now processed in real-time. For sectors like healthcare or tech, what are the primary infrastructure requirements for handling these latency-sensitive tasks at scale, and what are the consequences for companies that fail to move beyond batch processing?

The shift to real-time is not a trend; it’s the new default. Batch processing is a relic of an older data era. For industries where speed is value—like tech or healthcare—it’s a complete non-starter. In healthcare, where the ratio of real-time to batch requests is 13 to one, a delay could impact clinical decision support. In tech, where the ratio is a massive 32 to one, latency ruins the user experience. The core infrastructure requirement is a robust, scalable serving platform that can handle unpredictable traffic spikes without faltering. This means optimized model serving, auto-scaling capabilities, and GPU management that can deliver responses in milliseconds. The consequences of failing to adapt are severe: you’ll deliver a sluggish, unresponsive product, lose customers to competitors who got it right, and be fundamentally incapable of competing in an AI-driven market that operates at machine speed.
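As a rough illustration of what auto-scaling has to compute, the sketch below sizes a serving fleet from traffic and latency using Little's Law (average requests in flight equals arrival rate times average latency). The function name, the per-replica concurrency figure, and the 30 percent headroom default are assumptions for the example, not values from any particular serving platform.

```python
import math


def required_replicas(
    requests_per_second: float,
    avg_latency_seconds: float,
    concurrency_per_replica: int,
    headroom: float = 0.3,
) -> int:
    """Estimate how many model-serving replicas are needed for a given load.

    Little's Law gives the average number of requests in flight; the headroom
    factor adds spare capacity for traffic spikes (an assumed safety margin).
    """
    in_flight = requests_per_second * avg_latency_seconds
    needed = in_flight * (1 + headroom) / concurrency_per_replica
    return max(1, math.ceil(needed))  # always keep at least one replica warm


if __name__ == "__main__":
    # 200 requests/s at 50 ms average latency, 4 concurrent requests per replica.
    print(required_replicas(200, 0.05, 4))
    # The same latency during a spike to 2,000 requests/s.
    print(required_replicas(2000, 0.05, 4))
```

An auto-scaler re-evaluates a calculation like this continuously as traffic changes. The formula also shows why latency matters twice: slower responses degrade the user experience and increase the number of replicas needed to serve the same traffic.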

What is your forecast for enterprise AI over the next two years?

Over the next two years, the conversation will completely shift from model-centric to data-centric. The novelty of “what a model can do” will fade, and the competitive advantage will move decisively toward how organizations use AI on their own proprietary data. We’ll see a massive emphasis on building open, interoperable platforms that allow companies to mix and match the best models for the job, rather than getting locked into a single ecosystem. The real differentiator won’t be buying the fanciest off-the-shelf AI feature; it will be the engineering rigor and governance frameworks that allow a company to safely and effectively apply these powerful tools to their unique business challenges. The winners will be those who treat AI not as a magic box, but as a core component of their engineering and data strategy.