DeepSeek, a Chinese AI startup, has worked with researchers from Tsinghua University on a new approach to artificial intelligence (AI) reward models. The approach is described in their paper, “Inference-Time Scaling for Generalist Reward Modeling.” Reward models guide large language models (LLMs) toward responses that better match human preferences by supplying the feedback signal used in reinforcement learning. The work could change how reward signals are produced for general-purpose AI systems.

Understanding AI Reward Models

Reward models play an essential role in the reinforcement learning systems used with large language models (LLMs). They act as feedback mechanisms, much like instructors, steering an AI system toward responses that match human expectations. Their importance extends beyond simple question answering: in more complex applications, reaching the desired outcome requires nuanced judgment and adaptation based on human feedback. Clear, accurate reward signals are what allow a system to learn the preferred behavior.

For LLMs, reward models help fine-tune generated responses so that they meet criteria set by human users. That role grows in importance as AI spreads into more industries and domains that demand higher-quality interaction. The primary purpose of a reward model is to let an AI system interpret and act on diverse, often intricate, human preferences, so that it can adjust its behavior as needs and contexts change.

DeepSeek’s Innovations

Traditional reward models often perform well on well-defined, verifiable queries or on tasks governed by artificial rules, but they struggle in general domains where the criteria for a good response are diverse and complex. To address this limitation, DeepSeek introduced two methods intended to improve the alignment of AI behavior with human expectations in broader, less-controlled domains.

The first is Generative Reward Modeling (GRM), which accepts a wide range of input types and can be scaled at inference time. Unlike earlier reward models that relied on scalar or semi-scalar reward representations, GRM represents rewards in language, which is more flexible. This lets the same model operate under different compute budgets and across different types of queries, and it allows more nuanced, context-appropriate judgments of AI output.
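To make the idea of a language-based reward concrete, here is a minimal Python sketch of how numeric scores might be recovered from a textual critique. The critique format (a trailing `Scores: [...]` list) and the function name `parse_pointwise_scores` are assumptions made for illustration; the article does not specify the output format DeepSeek's models use.

```python
import re


def parse_pointwise_scores(critique: str) -> list[int]:
    """Extract integer scores from the first 'Scores: [...]' pattern in a critique.

    The 'Scores: [...]' convention is hypothetical, chosen only for this sketch.
    """
    match = re.search(r"Scores:\s*\[([^\]]*)\]", critique)
    if match is None:
        raise ValueError("critique contains no 'Scores: [...]' list")
    return [int(tok) for tok in match.group(1).split(",") if tok.strip()]


# A made-up critique in the assumed format: principles, a judgment, then scores.
example_critique = (
    "Principle 1: factual accuracy.\n"
    "Principle 2: clarity of explanation.\n"
    "Response 1 is accurate and clear; Response 2 contains an error.\n"
    "Scores: [8, 3]"
)

scores = parse_pointwise_scores(example_critique)
print(scores)
```

Because the reward is carried in text, the same parsing step works for one response or several, which is one way a language-based representation can be more flexible than a single scalar output.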

Complementing GRM, DeepSeek developed Self-Principled Critique Tuning (SPCT), a learning method that fosters scalable reward generation behaviors in generative reward models. Using online reinforcement learning, SPCT trains the model to generate principles adaptively, based on the input query and responses. The reward model's judgments therefore stay tied to the specific input being evaluated, which leads to more relevant assessments and more satisfactory AI interactions.
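One way to picture the training signal in such an online reinforcement learning setup is a simple rule-based reward: the reward model is rewarded when its scores rank a known-best response first. The sketch below is an assumption for illustration only; the article does not describe the exact reward rule, and `preference_reward` is a hypothetical name.

```python
def preference_reward(predicted_scores: list[int], best_index: int) -> int:
    """Return +1 if the scores rank the labeled-best response strictly first, else -1.

    Hypothetical rule for illustration; not taken from the article.
    """
    top = max(predicted_scores)
    # Require a unique maximum so that ties are not rewarded.
    is_unique_top = predicted_scores.count(top) == 1
    return 1 if is_unique_top and predicted_scores[best_index] == top else -1


# The labeled-best response is at index 0 in each call.
print(preference_reward([8, 3], best_index=0))
print(preference_reward([4, 7], best_index=0))
print(preference_reward([5, 5], best_index=0))
```

Treating ties as failures is a design choice in this sketch: it pushes the model toward judgments that actually discriminate between responses.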

Mechanism and Performance

Zijun Liu, a key contributor to the project from Tsinghua University and DeepSeek-AI, described how the combined methods align reward generation dynamically with the input. The combination stands out for its effectiveness in “inference-time scaling,” a technique that improves performance by spending more computation during inference rather than during training. The work shows that drawing more samples at inference time can yield better rewards than simply enlarging the model during training.
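The effect of sampling more at inference time can be illustrated with a toy simulation. The Python sketch below assumes a noisy judge whose scores equal a hidden true quality plus random integer noise, and it aggregates k sampled judgments by summing them. The noise model, the `TRUE_QUALITY` values, and summation as the aggregation rule are all assumptions for illustration, not details from the article.

```python
import random

TRUE_QUALITY = [6, 5]  # hypothetical ground truth: response 0 is slightly better


def noisy_judge(rng: random.Random) -> list[int]:
    """Stand-in for one sampled reward judgment: true quality plus integer noise."""
    return [q + rng.randint(-3, 3) for q in TRUE_QUALITY]


def voted_scores(rng: random.Random, k: int) -> list[int]:
    """Sum the pointwise scores of k independently sampled judgments."""
    totals = [0] * len(TRUE_QUALITY)
    for _ in range(k):
        for i, score in enumerate(noisy_judge(rng)):
            totals[i] += score
    return totals


def accuracy(k: int, trials: int = 2000, seed: int = 0) -> float:
    """Fraction of trials in which the summed scores rank response 0 strictly first."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(trials):
        totals = voted_scores(rng, k)
        hits += totals[0] > totals[1]
    return hits / trials


acc_1 = accuracy(k=1)
acc_32 = accuracy(k=32)
print(f"k=1:  {acc_1:.3f}")
print(f"k=32: {acc_32:.3f}")
```

In this toy setting a single sample is often wrong because the noise is larger than the quality gap, while summing many samples averages the noise out. The model itself is unchanged; only the compute spent at inference grows.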

In practical terms, the researchers' results showed that models using inference-time scaling produced better rewards as the number of samples increased. This allows smaller, more resource-efficient models to compete with larger ones, provided they are given enough computation during inference, and it points to a more sustainable and cost-effective way of operating AI systems.

The broader implication is that improvements in how computation is used can outweigh mere increases in model size. By refining the techniques applied during inference, developers can build more responsive and adaptable systems without necessarily incurring the training costs associated with larger models.

Implications for the AI Industry

The refinements in reward models introduced by DeepSeek could bring several shifts in the AI landscape. The first is accuracy: more precise feedback lets AI systems tune their responses more effectively over time. Sharper reward signals lead to more accurate and reliable outputs that stay closer to human expectations, which matters as AI systems take on increasingly complex and context-sensitive tasks.

The ability to scale performance during inference also adds flexibility. Compute can be allocated according to current needs, which eases integration across different platforms and use cases. This is particularly useful in resource-constrained environments, where efficient use of computation is paramount, and it may broaden the applicability and adoption of advanced AI technologies.

Scalable reward generation is another advance, improving performance across a range of general-domain tasks. Reward models that hold up in diverse environments let AI systems handle a broader spectrum of applications without losing effectiveness. Achieving high performance through inference-time scaling also suggests a more resource-efficient approach to AI development, in which smaller models can rival or even surpass larger ones.

DeepSeek’s Industry Influence

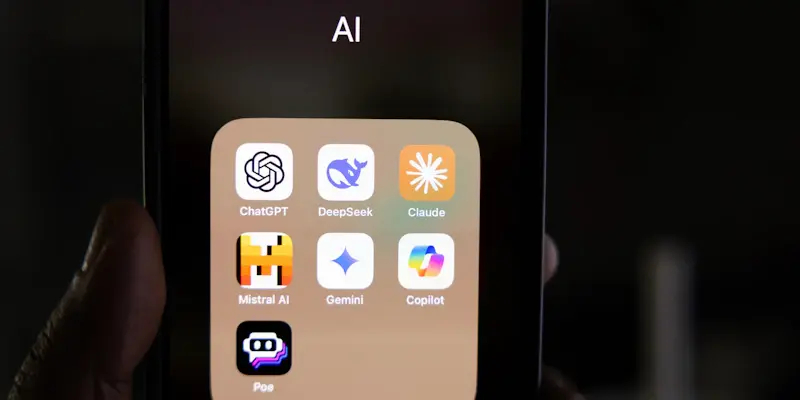

DeepSeek, founded by entrepreneur Liang Wenfeng in 2023, has quickly established itself as a leading force in the global AI sector. The startup's notable contributions include the V3 foundation model and the R1 reasoning model. The latest iteration, DeepSeek-V3-0324, brings enhanced reasoning capabilities, better front-end web development, and improved Chinese writing proficiency.

Beyond its technical work, DeepSeek has committed to open-source development. By releasing five code repositories earlier this year, the company showed a willingness to share its advances and to invite the broader AI community to contribute to and benefit from them. This approach speeds up innovation and makes the resulting technology more widely accessible.

Anticipation is also building around the potential release of DeepSeek-R2, the next iteration in the company's line of reasoning models. Official comments from the company have been sparse, but the AI community remains eager to see what improvements it will bring. The speculation reflects DeepSeek's influential position in the industry and the high expectations placed on its future work.

Future Directions for AI Reward Models

DeepSeek’s intent to open-source the GRM models is a strategic move designed to accelerate broader progress in AI reward modeling. Open-sourcing the models widens access to the technology and encourages experimentation and refinement by the global AI community, allowing researchers and developers to build on DeepSeek’s work and identify new applications.

The release is expected to drive advances in reinforcement learning (RL) methodologies, which remain a cornerstone of AI development. As RL techniques evolve, they give AI systems more sophisticated ways to align with human values and preferences. Accessible GRM models could help narrow the gap between AI capabilities and human expectations.

Looking ahead, the results achieved by DeepSeek are likely to influence the trajectory of AI development. Alongside advances in reinforcement learning, methods like these could make the next generation of AI systems more responsive, adaptable, and aligned with human needs, both within individual applications and across entire industries. The adoption and refinement of GRM models will likely prompt further research and development.

Conclusion

DeepSeek and researchers from Tsinghua University have made significant strides in AI reward models, as outlined in their paper “Inference-Time Scaling for Generalist Reward Modeling.” Reward models steer large language models (LLMs) toward human preferences through reinforcement learning, and the methods described here aim to make that guidance work in general domains rather than only in narrow, verifiable ones. The contributions are not just theoretical: they hold promise for practical applications, making AI more responsive and better aligned with what people expect from these systems.