Data Science

The long-promised revolution of AI-powered retail has often stumbled over a surprisingly mundane obstacle: the pervasive issue of inconsistent and unreliable data that undercuts even the most sophisticated algorithms. The integration of Artificial Intelligence with Master Data Management (MDM) represents

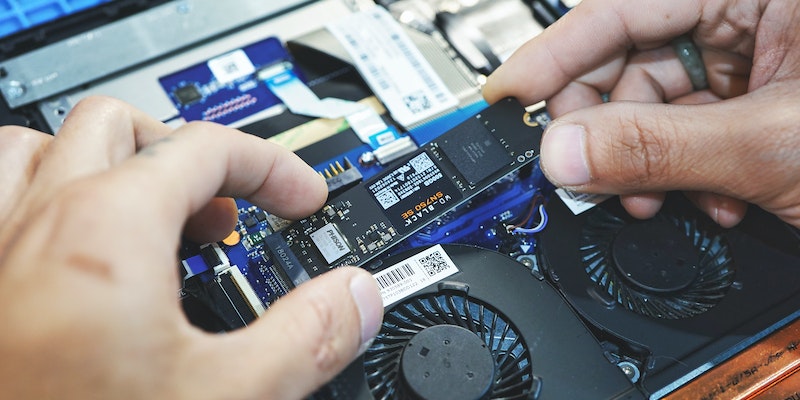

Solid State Drives (SSDs) are becoming more popular in the market due to their faster performance, lower power consumption, and smaller size compared to traditional hard drives. However, they are not immune to failures. It is important to understand the

In today’s data-driven world, having a comprehensive data strategy is crucial for businesses to confront day-to-day challenges and achieve pre-defined business goals using data. A data strategy is a master plan or blueprint that outlines how an organization’s data will

In today’s world, data is becoming more important than ever before. With massive amounts of data being generated by individuals and businesses alike, it has become increasingly important to ensure that the data is of high quality and easily accessible.

Technology is constantly evolving, and one area that has seen significant advancements in recent years is data storage. Flash storage has become an increasingly popular technology in the storage industry, powering the majority of today’s solid-state drives (SSDs). In this

In today’s fast-paced business environment, access to accurate, trustworthy, and timely data is critical for making informed decisions. With the ever-expanding volume, variety, and sources of data, businesses face challenges in managing and processing large datasets to gain a competitive

The demand for storage space has grown exponentially since the advent of big data, artificial intelligence, and the Internet of Things. Companies now require vast amounts of storage space to store and manage their data. This demand has led to
