The rapid advancement of artificial intelligence has driven a fundamental shift in how developers work with large language models, moving the industry beyond the early art of crafting clever instructions toward the more systematic practice of architecting entire informational environments. Prompt engineering was a crucial first step in coaxing desired outputs from these complex systems, but it is increasingly recognized as one component of a larger, more critical discipline known as context engineering. This paradigm treats AI development not as a search for the perfect command but as an architectural challenge: systematically designing and managing the complete flow of information a model receives. By orchestrating this informational ecosystem, engineers can make AI systems more reliable, accurate, and capable, paving the way for autonomous agents that can tackle complex, multi-step problems.

From Prompting to Architectural Design

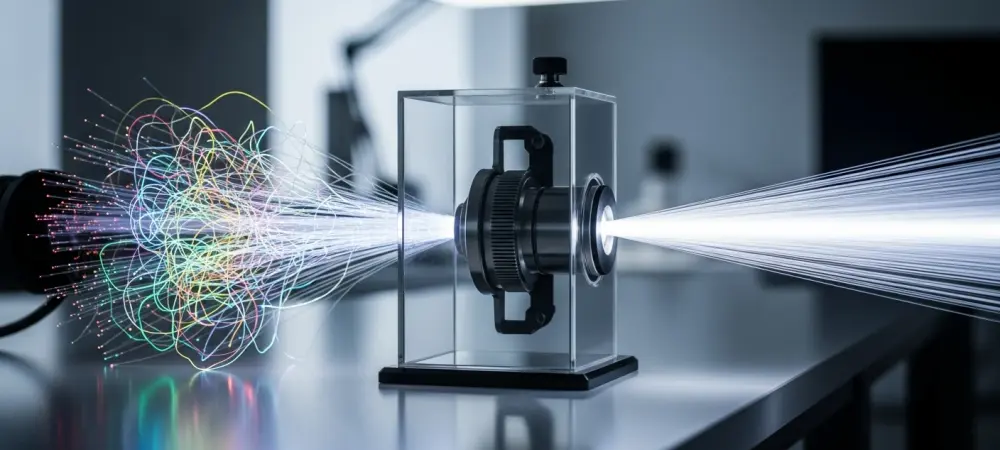

Context engineering is the systematic practice of designing and managing the complete informational ecosystem that a large language model draws on before generating a response. The discipline goes beyond crafting an effective prompt; it involves the architectural orchestration of the model’s entire operational environment. That environment is built from grounding data, available tools, behavioral policies, data schemas, and the dynamic mechanisms that decide which pieces of information are selected and presented to the model at any given moment. The objective of effective context engineering is to distill a vast universe of potential information down to a curated, high-signal set of tokens. Doing so substantially increases the probability that the model produces an accurate, relevant, high-quality outcome, and it makes the model’s behavior consistent and repeatable enough to integrate into production-grade software systems.

This represents a significant evolutionary leap from prompt engineering, which is more accurately framed as a predecessor discipline. Prompt engineering focuses on the micro-level details of prompt construction: the specific wording, the sequence of instructions, and the inclusion of few-shot examples to guide the model. While valuable as an initial technique, it is akin to a manual hack, a way to work around a model’s limitations case by case. Context engineering elevates this practice to a macro-level architectural concern. It treats the prompt not as the entire solution but as one interactive layer within a larger system, and that system is responsible for selecting, structuring, filtering, and delivering the right information, in the right format, at the right time, so that the AI can accomplish its tasks reliably, consistently, and with greater autonomy.

The Anatomy of AI Context

To grasp the principles of context engineering, it helps to deconstruct what “context” means within an AI system. In practice, the term refers to everything placed in the model’s context window, the total envelope of information the model can process at one time. This content is not a monolithic block of text but a structured, multi-layered framework in which each component serves a distinct purpose in guiding the model’s interpretation and behavior. The most foundational layer is the system prompt, which acts as the model’s persistent charter. It defines the AI’s core identity, primary purpose, and operational boundaries: it sets the persona, imposes critical rules and guardrails, and establishes a tone that should persist across an entire interaction. Complementing it is the user prompt, the immediate, task-specific instruction from the user. This layer is ephemeral and direct, telling the model what to do in the present moment.

Beyond these foundational prompts, several other layers work in concert to create a rich and dynamic operational environment for the AI. The state or conversation history functions as the system’s short-term memory, providing continuity across conversational turns by including preceding dialogue and intermediate reasoning steps. In contrast, long-term memory introduces persistence across different sessions, storing durable information such as user preferences or project-specific facts, allowing the system to build a continuous relationship with the user. Another critical layer is retrieved information, which dynamically grounds the model in external, up-to-date, and domain-specific data through techniques like Retrieval-Augmented Generation (RAG). Furthermore, the context includes a definition of available tools, which specifies the functions and API endpoints the model can invoke to perform actions in the real world. Finally, structured output definitions impose a specific format on the model’s response, ensuring the output is predictable, machine-readable, and reliably consumed by downstream systems.
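To make the layering concrete, here is a minimal Python sketch that assembles the layers described above into a chat-style message list. The `ContextLayers` dataclass and `assemble_messages` function are hypothetical names, and the role/content message shape is an assumption modeled on common chat APIs rather than any specific provider’s interface. The example values are illustrative only.

```python
import json
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class ContextLayers:
    """Hypothetical container for the layers of a model's context."""
    system_prompt: str                                    # persistent charter
    user_prompt: str                                      # immediate task
    history: list = field(default_factory=list)           # short-term memory (role/content dicts)
    long_term_memory: list = field(default_factory=list)  # durable facts across sessions
    retrieved: list = field(default_factory=list)         # RAG excerpts
    tools: list = field(default_factory=list)             # tool definitions
    output_schema: Optional[dict] = None                  # required response format


def assemble_messages(ctx: ContextLayers) -> list:
    """Flatten the layers into a chat-style list of role/content messages."""
    system_parts = [ctx.system_prompt]
    if ctx.long_term_memory:
        system_parts.append("Known user facts:\n" + "\n".join(f"- {m}" for m in ctx.long_term_memory))
    if ctx.retrieved:
        system_parts.append("Reference material:\n" + "\n\n".join(ctx.retrieved))
    if ctx.tools:
        # Many chat APIs take tool definitions as a separate request field;
        # they are summarized in text here only to keep the sketch self-contained.
        system_parts.append("Available tools: " + ", ".join(t["name"] for t in ctx.tools))
    if ctx.output_schema is not None:
        system_parts.append("Reply with JSON matching this schema:\n" + json.dumps(ctx.output_schema))
    messages = [{"role": "system", "content": "\n\n".join(system_parts)}]
    messages.extend(ctx.history)  # earlier turns, oldest first
    messages.append({"role": "user", "content": ctx.user_prompt})
    return messages


# Usage with illustrative values
ctx = ContextLayers(
    system_prompt="You are a concise billing assistant. Never reveal card numbers.",
    user_prompt="Why was I charged twice this month?",
    history=[{"role": "user", "content": "Hi"},
             {"role": "assistant", "content": "Hello! How can I help?"}],
    long_term_memory=["Prefers answers in bullet points"],
    retrieved=["(example policy) Duplicate charges are reversed after review."],
    tools=[{"name": "lookup_invoice"}],
    output_schema={"type": "object", "properties": {"answer": {"type": "string"}}},
)
messages = assemble_messages(ctx)
```

Placing the durable material (charter, memory, retrieved data, tools, schema) in the system message and the live request last is one reasonable ordering choice in this sketch, not a requirement.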

Why Unmanaged Context Fails

A common misconception in AI development is that simply expanding the context window will solve the challenges of building reliable systems. In reality, a larger window is not a panacea; filling it without curation can make a system more susceptible to a set of common issues collectively termed “context failure.” These failures are critical breakdown modes that robust context engineering aims to prevent. One of the most insidious is context poisoning, which occurs when erroneous information, such as a factual error or a model-generated hallucination, enters the context and is subsequently treated as ground truth. Once the flawed premise is accepted, the model may build on it in later turns, compounding the initial mistake and derailing its reasoning. This can spiral quickly, turning a minor inaccuracy into a nonsensical or incorrect outcome and making the AI untrustworthy for critical applications.
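One possible guard against context poisoning, sketched below under stated assumptions, is to record where each piece of information came from and to keep unverified model-generated claims out of the set the model is told to treat as established. The `Source`, `Fact`, `FactStore`, and `is_grounded` names are hypothetical, and provenance tagging is an illustrative mitigation rather than a technique prescribed by the text above.

```python
from dataclasses import dataclass
from enum import Enum


class Source(Enum):
    USER = "user"    # stated directly by the user
    TOOL = "tool"    # returned by a tool or API call
    MODEL = "model"  # produced by the model itself


@dataclass(frozen=True)
class Fact:
    text: str
    source: Source
    verified: bool = False  # set True only after an external check


def is_grounded(fact: Fact) -> bool:
    # Model-generated claims are not ground truth until something external confirms them.
    return fact.source is not Source.MODEL or fact.verified


class FactStore:
    """Hypothetical memory that keeps unverified model output out of the 'established' set."""

    def __init__(self) -> None:
        self.facts: list = []

    def add(self, fact: Fact) -> None:
        self.facts.append(fact)

    def render(self) -> str:
        grounded = [f for f in self.facts if is_grounded(f)]
        tentative = [f for f in self.facts if not is_grounded(f)]
        lines = ["Established facts:"] + [f"- {f.text} [{f.source.value}]" for f in grounded]
        if tentative:
            lines.append("Unverified claims (do not build on these):")
            lines += [f"- {f.text}" for f in tentative]
        return "\n".join(lines)


store = FactStore()
store.add(Fact("The build uses Python 3.11", Source.TOOL))
store.add(Fact("The test suite passed", Source.MODEL))  # possible hallucination
context_block = store.render()
```

The rendered block keeps the model’s own unconfirmed claim visible but explicitly separated, so later turns are less likely to treat it as a premise.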

Other failure modes are equally disruptive to reliable AI performance. Context distraction arises when the context window becomes excessively large, verbose, or filled with conversational history, causing the model to lose its focus. Instead of leveraging its foundational training to synthesize a novel and relevant answer, it may become overly fixated on the accumulated text, leading it to repeat past actions or get lost in irrelevant details. A related issue is context confusion, which emerges when irrelevant or noisy material contaminates the context, such as superfluous data from a retrieval process or the inclusion of tools not pertinent to the current task. The model may misinterpret this noise as a signal, resulting in poor-quality outputs or incorrect tool calls. Finally, context clash occurs when new information introduced into the context directly contradicts earlier information or assumptions. This can result in inconsistent, contradictory, or completely broken model behavior, undermining the logical integrity of its entire operation.
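Context clash can be made visible rather than silent. The sketch below assumes task state can be expressed as key/value pairs; the hypothetical `StateContext` class records a contradiction whenever a key receives a different value, so the conflict can be resolved explicitly instead of leaving both versions in the context for the model to reconcile.

```python
from dataclasses import dataclass


@dataclass
class Clash:
    key: str
    old: str
    new: str


class StateContext:
    """Hypothetical keyed task state that surfaces contradictions instead of stacking them."""

    def __init__(self) -> None:
        self.state: dict = {}
        self.clashes: list = []

    def update(self, key: str, value: str) -> None:
        old = self.state.get(key)
        if old is not None and old != value:
            # Record the contradiction so it can be resolved explicitly
            # (for example by confirming with the user).
            self.clashes.append(Clash(key, old, value))
        self.state[key] = value  # in this sketch the latest value wins

    def render(self) -> str:
        lines = [f"{k}: {v}" for k, v in sorted(self.state.items())]
        lines += [f"NOTE: {c.key!r} changed from {c.old!r} to {c.new!r}" for c in self.clashes]
        return "\n".join(lines)


task = StateContext()
task.update("deadline", "Friday")
task.update("owner", "Dana")
task.update("deadline", "Monday")  # contradicts the earlier value
rendered = task.render()
```

Letting the latest value win is a simplifying assumption; a production system might instead pause and ask the user which value is correct.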

Core Strategies for Effective Context Engineering

To combat these failures and build robust, reliable AI systems, practitioners of context engineering use a variety of strategies for optimizing the content and structure of the context window. One of the most fundamental is the deliberate selection of knowledge bases and tools. This involves curating the external data sources, such as documents and databases, and the specific set of tools that the system is permitted to use. A well-designed selection process ensures that retrieval is directed toward high-quality, relevant content and that the model is presented only with tools applicable to its designated function. This curation reduces noise from the outset, giving the model a clean and focused operational environment and preventing many cases of context distraction and confusion before they arise.

Another critical strategy is the dynamic management of the limited space in the context window through context ordering and compression. This technique prioritizes high-signal information while summarizing or pruning less critical content. For instance, a long, rambling conversation history might be automatically replaced with a concise summary that preserves key decisions and facts while omitting conversational filler. Similarly, retrieved documents can be programmatically sorted by relevance, with only the top-ranked excerpts injected into the prompt, so that the most important information stays front and center.

Finally, structured information and output schemas are essential for enforcing predictable formats. Providing context in a structured form, such as a list of fields in a form, reduces ambiguity for the model. Likewise, requiring the model to produce a response that conforms to a specific schema, such as a JSON object, makes its output reliable and easy to integrate with other software components, creating a more stable and predictable system.
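The selection, ordering, compression, and schema strategies can be sketched as small, independent helpers. All names here (`select_tools`, `approx_tokens`, `pack_by_relevance`, `compress_history`, `validate_output`) are hypothetical. The sketch assumes relevance scores come from an upstream retriever, delegates summarization to a caller-supplied function (in practice often another model call), and approximates token counts by whitespace splitting rather than a real tokenizer.

```python
import json
from typing import Callable


def select_tools(tools: list, task_tags: set) -> list:
    """Expose only tools whose (hypothetical) 'tags' overlap the current task."""
    return [t for t in tools if task_tags & set(t.get("tags", []))]


def approx_tokens(text: str) -> int:
    # Crude whitespace count; a real system would use the model's own tokenizer.
    return len(text.split())


def pack_by_relevance(chunks: list, budget: int) -> list:
    """Keep the highest-scoring (score, text) chunks that fit in the token budget."""
    kept, used = [], 0
    for _score, text in sorted(chunks, key=lambda c: c[0], reverse=True):
        cost = approx_tokens(text)
        if used + cost <= budget:
            kept.append(text)
            used += cost
    return kept


def compress_history(turns: list, keep_last: int, summarize: Callable[[list], str]) -> list:
    """Replace all but the last `keep_last` turns with one summary message."""
    split = max(len(turns) - keep_last, 0)
    older, recent = turns[:split], turns[split:]
    if not older:
        return list(turns)
    summary = {"role": "system", "content": "Earlier conversation (summary): " + summarize(older)}
    return [summary] + recent


def validate_output(raw: str, required: dict) -> dict:
    """Parse a JSON reply and check that required fields exist with the expected types."""
    data = json.loads(raw)  # raises json.JSONDecodeError on malformed output
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    for key, expected_type in required.items():
        if not isinstance(data.get(key), expected_type):
            raise ValueError(f"field {key!r} is missing or not {expected_type.__name__}")
    return data
```

`pack_by_relevance` is a greedy heuristic: it skips any chunk that would overflow the budget and keeps trying lower-ranked ones, which fills the window reasonably well without guaranteeing an optimal selection.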

The Imperative for Autonomous AI Agents

The principles of context engineering are not merely beneficial for improving AI performance; they are essential for developing effective autonomous AI agents. These systems perform complex, multi-step tasks that require planning, sustained reasoning, and the dynamic use of external tools. Their ability to function reliably and achieve their goals depends heavily on the quality and careful management of their context. A well-engineered context acts as a protective mechanism, filtering out noise and preventing the accumulation of errors that can derail long-horizon tasks. Without this discipline, agents are highly prone to failure: their operational environment quickly becomes polluted with irrelevant information, contradictory data, and compounded hallucinations, leading them to take incorrect or nonsensical actions.

Ultimately, context engineering provides the architectural scaffolding that enables an agent to be both efficient and stateful. By giving agents only the most relevant information through techniques like selective retrieval and context compression, it minimizes the token load on the model, which reduces computational cost and improves the agent’s responsiveness. More importantly, it is the foundation for statefulness: the ability of an agent to remember past interactions, user preferences, and project states across multiple sessions. This turns agents from one-off problem-solvers into adaptive systems capable of long-term collaboration. The context window is the operating environment in which an agent reasons and decides which actions to take. The quality of this context, from tool specifications to the data retrieved from APIs, directly determines whether the agent can interact with the real world successfully and accurately, making context engineering the linchpin for building functional, tool-integrated AI.
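Statefulness across sessions requires that some memory outlive a single context window. A minimal sketch, assuming a local JSON file is an acceptable store, is the hypothetical `SessionMemory` class below: it persists entries as they are learned, reloads them in a later session, and renders only the requested keys so the injected context stays small.

```python
import json
import tempfile
from pathlib import Path
from typing import List, Optional


class SessionMemory:
    """Hypothetical long-term memory stored as a JSON file between sessions."""

    def __init__(self, path: Path) -> None:
        self.path = path
        # Reload whatever earlier sessions saved; start empty on first use.
        self.items = json.loads(path.read_text(encoding="utf-8")) if path.exists() else {}

    def remember(self, key: str, value: str) -> None:
        self.items[key] = value
        self.path.write_text(json.dumps(self.items, indent=2), encoding="utf-8")

    def as_context(self, keys: Optional[List[str]] = None) -> str:
        """Render only the requested entries so the injected context stays small."""
        if keys is None:
            selected = self.items
        else:
            selected = {k: self.items[k] for k in keys if k in self.items}
        return "\n".join(f"- {k}: {v}" for k, v in selected.items())


with tempfile.TemporaryDirectory() as tmp:
    store_path = Path(tmp) / "memory.json"
    first = SessionMemory(store_path)      # session 1
    first.remember("preferred_language", "Python")
    first.remember("project", "billing-service")
    second = SessionMemory(store_path)     # session 2 reloads from disk
    restored = second.as_context(["preferred_language"])
```

Selecting keys per request is what keeps persistence from turning into context distraction: the memory can grow across sessions while each prompt receives only the entries relevant to the current task.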