The rapid globalization of digital media has effectively dissolved geographical boundaries for content creators, but language remains a persistent barrier that has historically limited the reach of even the most compelling messages. For educators, marketers, and entertainers, communicating in a single language has meant forfeiting millions of potential viewers who engage more deeply with content delivered in their native tongue. Until recently, the options were imperfect and costly: subtitles, which viewers often overlook, or professional dubbing studios, which are expensive and replace the original speaker’s unique vocal identity. Now a transformative shift is underway, powered by artificial intelligence. Advanced AI video translators can not only translate content with remarkable accuracy but also clone the speaker’s voice, synchronize lip movements, and handle distinct dialects, offering a level of authenticity that was once the domain of science fiction. The market is now crowded with platforms promising these capabilities, yet only a select few deliver the natural-sounding, immersive results that capture and retain a global audience.

1. The Dawn of Authentic Voice Replication

The most significant breakthrough in AI-powered video translation is the ability to preserve the speaker’s vocal identity across languages, a feature epitomized by platforms like Blipcut. This tool stands out by focusing on authenticity rather than simply layering a generic, robotic text-to-speech voice over a video. Its AI analyzes the nuanced characteristics of the original speaker’s voice, including pitch, cadence, and intonation, and then generates the translated audio with a synthetic clone of that same voice. This matters for creators and brands whose connection with their audience is built on trust and familiarity. The platform supports more than 140 languages, so users can localize English content for a diverse South American market or translate a niche dialect into a global language with equal finesse. Beyond its flagship voice cloning feature, it integrates several tools that streamline the localization workflow. It automatically generates frame-accurate subtitles that can be edited directly, and an integrated rewriting tool powered by advanced language models helps refine scripts so that the translation is not merely literal but also culturally resonant and appropriate for the target audience.

Using the platform follows a simple workflow designed to turn a raw video file into a polished, localized asset in minutes. The process begins with uploading the source content, either by selecting a local file or by pasting a URL from a platform such as YouTube or TikTok. This direct link integration is particularly useful for social media creators who want to repurpose existing content for new regions.

Once the video is loaded into the workspace, the user configures the translation settings by selecting the source and target languages. An auto-detect option is available, but manual selection is more reliable. The most important decision at this step is voice selection: for the best results, choose the “Voice Cloning” option rather than a preset AI voice. Before final processing, the platform presents a transcript editor. This is an essential quality-control step that lets the user review the generated text and correct any misrecognitions, especially proper nouns or brand names that should remain in their original language. After making adjustments, the user starts the translation. The output can be previewed and then exported, either with the subtitles burned directly into the video (hardcoded) or with the subtitles downloaded as a separate SRT or VTT file for greater flexibility. Voice cloning accuracy and a user-friendly interface are clear advantages, but perfecting lip-sync on lengthy videos may require additional processing time, and the credit-based pricing calls for careful monitoring on high-volume projects.

2. Advanced Tools for Specialized Content Needs

For professionals working with more complex audio-visual formats, such as interviews or panel discussions, specialized tools like Rask AI have emerged as a powerful solution. The platform distinguishes itself with a “Multispeaker” detection feature that automatically identifies the different people speaking in a single video and assigns a distinct cloned voice to each one. This capability is indispensable for keeping conversational content clear and realistic, because it prevents the confusing result of several speakers being dubbed with the same AI voice. Rask AI is also built for long-form content and can process files longer than twenty minutes without the audio drifting out of sync, a common problem with less robust tools. This makes it a preferred choice for corporate trainers and educational institutions that need to localize entire course modules or lengthy webinars. Its interface offers a side-by-side comparison view in which editors can fine-tune the translated script and immediately see how it aligns with the video timeline. This keeps the pacing natural, even when translating between languages with very different syllable structures and speech patterns. Its “VoiceClone” technology and lip-syncing are among the best available, but these high-end features come at a premium price, positioning Rask AI as a top-tier option for users with substantial budgets and complex requirements.
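Sync drift in long videos comes down to timing arithmetic: a translated line may run longer than the slot the original speaker occupied. The Python sketch below illustrates the idea under stated assumptions; it is not Rask AI’s algorithm. The `Segment` class, the helper names, and the 1.3× speed-up cap are all hypothetical choices. Each dubbed clip is sped up just enough to fit its slot, up to the cap, and any remaining overflow pushes later clips back.

```python
from dataclasses import dataclass

@dataclass
class Segment:
    start: float          # slot start in the source video (seconds)
    end: float            # slot end in the source video (seconds)
    dubbed_length: float  # duration of the synthesized translated audio (seconds)

MAX_RATE = 1.3  # hypothetical limit before sped-up speech sounds unnatural

def playback_rate(seg: Segment, max_rate: float = MAX_RATE) -> float:
    """Speed-up factor that fits the dubbed clip into its slot (1.0 = no change)."""
    slot = seg.end - seg.start
    needed = seg.dubbed_length / slot
    return min(max(needed, 1.0), max_rate)

def max_lateness(segments: list[Segment], max_rate: float = MAX_RATE) -> float:
    """Largest amount (seconds) by which any dubbed clip ends after its source slot.

    Each clip starts at its slot start, or when the previous clip finishes if
    that is later, so any overflow carries forward into later clips.
    """
    cursor = 0.0
    worst = 0.0
    for seg in segments:
        start = max(seg.start, cursor)
        cursor = start + seg.dubbed_length / playback_rate(seg, max_rate)
        worst = max(worst, cursor - seg.end)
    return worst
```

With a 4-second slot holding 4.4 seconds of translated speech, `playback_rate` returns 1.1. A 2-second slot holding 3 seconds of speech hits the cap, and that clip ends about 0.31 seconds late. Overflow of this kind is what an editor removes by tightening the script in a side-by-side view.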

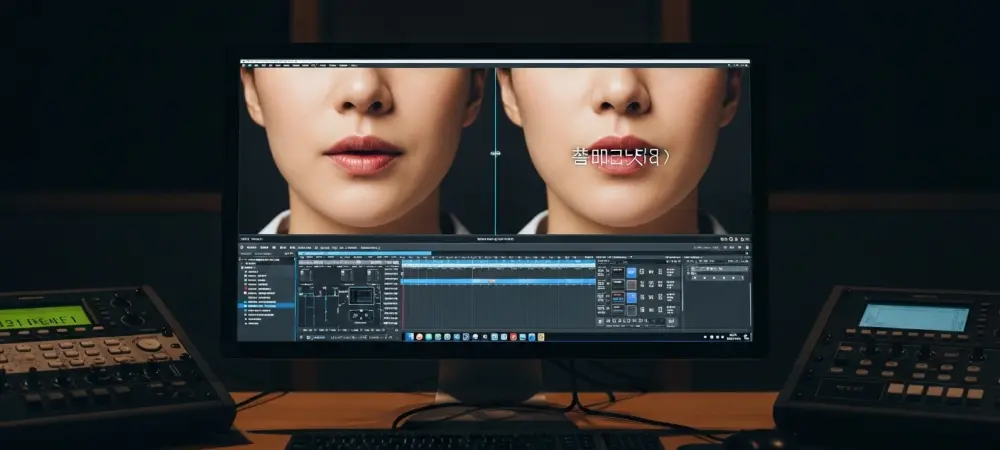

In contrast, other platforms prioritize different aspects of the localization process. ElevenLabs, for instance, has established itself as the gold standard in synthetic speech quality. Although it is primarily an audio engine that powers many other translation tools, its standalone “Dubbing Studio” offers fine-grained control over the emotional delivery of translated audio. This makes it a strong choice for content where audio quality is paramount, such as documentaries, audiobooks, and narrative films. Its AI models are designed to detect subtle emotional cues in the original speech, such as whispers, shouts, or laughter, and carry the same emotional weight into the generated audio. This level of nuance is critical for immersive storytelling, where a flat, robotic delivery would break the audience’s engagement.

HeyGen, on the other hand, has drawn widespread attention for its visual translation capabilities. Its “Video Translate” feature uses generative AI to re-render the pixels around a speaker’s mouth, making it appear as though the speaker natively spoke the translated language. This strikingly convincing lip-sync is especially effective for marketing videos and “talking head” content, where visual authenticity is key. HeyGen also functions as a complete video generation suite, offering custom avatars that can deliver translated messages, an alternative for creators who lack the resources for a reshoot. The visual rendering is resource-intensive and can lengthen processing times, but the result can be very difficult to distinguish from an original recording.

3. High-Precision Solutions for Business and Education

While creative industries benefit from emotional nuance and visual perfection, corporate and educational sectors often prioritize textual accuracy and workflow integration above all else. Notta, a platform rooted in transcription services, has expanded into video translation with a sharp focus on precision. It is well suited to localizing corporate meetings, webinars, and educational lectures, where the exact meaning of specialized terminology must be preserved. The platform’s core strength is its high-precision transcription engine, which produces an accurate text base for the subsequent translation. This foundation minimizes errors and helps the final translated audio or subtitles faithfully represent the source material. Unlike tools designed for creative flair, Notta forgoes advanced voice cloning and cinematic effects in favor of reliability and clarity. This makes it a good fit for internal communications, compliance training, and academic content, where the primary goal is to disseminate information accurately across multilingual teams and student bodies. Its straightforward approach keeps the original message intact, which is a non-negotiable requirement in many professional contexts.

For enterprise users, Notta’s real value lies in its integration with major video conferencing platforms such as Zoom, Google Meet, and Microsoft Teams. Organizations can configure the “Notta Bot” to join scheduled meetings automatically, record the session, and generate a real-time transcription. Immediately after the call, it can provide a complete translated summary, making meeting highlights accessible to international team members in their native languages. This streamlines post-meeting workflows, eliminating manual note-taking and the lengthy delays associated with traditional translation services. For global businesses, the feature is a productivity powerhouse that keeps cross-border teams aligned and informed without communication friction. It does not offer the visually striking lip-sync of a tool like HeyGen, but its ability to convert hours of spoken dialogue into searchable, accurately translated text makes it a valuable asset for international business operations. The platform effectively bridges live communication and accessible, multilingual documentation, so that important information is not lost in translation.

4. Deconstructing the Technology and Its Practical Applications

Understanding the underlying technology is key to appreciating the differences between AI dubbing tools. The core innovation, voice cloning, involves a deep analysis of a speaker’s vocal patterns. The AI processes the audio input to identify characteristics such as pitch, tone, cadence, and even breathing patterns. It then uses this vocal fingerprint to generate new speech in a different language that sounds like the original speaker. This is a world away from traditional text-to-speech systems, which rely on generic, pre-recorded voice fonts.

A further distinction exists between standard dubbing and AI-powered lip-syncing. Standard dubbing, common in older foreign films, simply replaces the original audio track, often producing a jarring disconnect in which the speaker’s mouth movements do not match the new words. AI lip-sync, offered by more advanced platforms, addresses this by using generative AI to alter the video itself. The algorithm analyzes the phonemes of the translated words and reshapes the speaker’s mouth frame by frame to match them. The process is computationally intensive but delivers a striking level of realism, creating the convincing illusion that the person on screen is a native speaker of the target language.
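Pitch, one of the vocal characteristics that voice cloning analyzes, can be measured with a classic signal-processing technique: autocorrelation, which finds the lag at which a waveform best matches a shifted copy of itself. The Python sketch below estimates the fundamental frequency of a clean synthetic tone. It illustrates only one ingredient of a “vocal fingerprint” and makes no claim about how the tools named here implement voice analysis; the function name and the 60 to 400 Hz search range are assumptions.

```python
import math

def estimate_pitch(samples, sample_rate, fmin=60.0, fmax=400.0):
    """Estimate the fundamental frequency (Hz) of a voiced frame.

    Tries every lag whose period lies between 1/fmax and 1/fmin seconds and
    returns the frequency of the lag with the largest autocorrelation.
    """
    n = len(samples)
    min_lag = max(1, int(sample_rate / fmax))        # shortest period considered
    max_lag = min(int(sample_rate / fmin), n - 1)    # longest period considered
    best_lag, best_score = None, float("-inf")
    for lag in range(min_lag, max_lag + 1):
        # Correlate the frame with a copy of itself shifted by `lag` samples.
        score = sum(samples[i] * samples[i + lag] for i in range(n - lag))
        if score > best_score:
            best_lag, best_score = lag, score
    if best_lag is None:
        raise ValueError("frame too short for the requested pitch range")
    return sample_rate / best_lag

sr = 8000
tone = [math.sin(2 * math.pi * 200 * t / sr) for t in range(800)]  # 0.1 s at 200 Hz
print(estimate_pitch(tone, sr))  # 200.0
```

The raw (unnormalized) sum has fewer terms at longer lags, which on clean input favors the true period over its multiples. Real speech needs windowing, voicing detection, and more robust estimators.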

For users considering these tools, practical questions about accuracy, accent handling, and cost are paramount. Achieving high accuracy, particularly when translating into a language as nuanced as English, often requires more than raw AI processing. The most effective workflows take a “human-in-the-loop” approach: the AI generates the initial translation, and a human editor then refines it. This hybrid workflow allows misinterpreted slang, cultural references, or specialized terminology to be corrected, producing a polished, professional final product. Modern AI models have become adept at understanding a wide range of accents, but the quality of the source audio remains critical: clear, crisp audio with minimal background noise consistently yields better results. To mitigate problems with strong accents or mumbling, tools that let users edit the source transcript before translation are invaluable. As for cost, most of these services operate on a “freemium” model, typically a free trial or a limited number of credits that lets users translate a short clip to evaluate the quality. Advanced features such as high-definition exports, watermark removal, and unlimited translation minutes are generally reserved for paid subscription plans.
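The transcript-editing step, especially for names that should not be translated, can be partly automated with a glossary before a human reviews the result. The Python sketch below is a hypothetical illustration, not a feature of any tool named here: `apply_glossary` fixes known mis-transcriptions, and `protect_terms`/`restore_terms` swap protected terms for placeholder tokens so a translation step passes them through unchanged. The `__TERM0__` token format is an assumption, and a real translation engine may still alter such tokens, which is one more reason to keep a human in the loop.

```python
import re

def apply_glossary(transcript: str, glossary: dict[str, str]) -> str:
    """Replace known mis-transcriptions with approved spellings (whole words, any case)."""
    for wrong, right in glossary.items():
        pattern = re.compile(r"\b" + re.escape(wrong) + r"\b", re.IGNORECASE)
        # A function replacement avoids treating backslashes in `right` as escapes.
        transcript = pattern.sub(lambda _m, r=right: r, transcript)
    return transcript

def protect_terms(text: str, terms: list[str]) -> tuple[str, dict[str, str]]:
    """Swap each protected term for a placeholder token before translation."""
    mapping = {}
    for i, term in enumerate(terms):
        token = f"__TERM{i}__"
        pattern = re.compile(r"\b" + re.escape(term) + r"\b")
        if pattern.search(text):
            text = pattern.sub(lambda _m, t=token: t, text)
            mapping[token] = term
    return text, mapping

def restore_terms(text: str, mapping: dict[str, str]) -> str:
    """Put the original terms back after translation."""
    for token, term in mapping.items():
        text = text.replace(token, term)
    return text
```

For example, `protect_terms("Try Blipcut today", ["Blipcut"])` returns `"Try __TERM0__ today"` plus a mapping; if the translated text comes back as `"Prueba __TERM0__ hoy"`, `restore_terms` turns it into `"Prueba Blipcut hoy"`.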

5. A New Era of Global Content Engagement

The evolution of AI-powered translation marks a definitive turning point for global content distribution. The era dominated by subtitles alone and disconnected dubbing is giving way to an expectation of immersive, localized experiences in which content speaks directly and authentically to audiences everywhere. The platforms that have risen to prominence during this shift each offer a distinct solution tailored to different creator needs. For any creator or business, the central question has shifted from whether to localize to how effectively a global content strategy can be executed. The decision ultimately depends on carefully balancing priorities.

Those who prize authenticity and a seamless brand identity across borders find their solution in tools that have mastered voice cloning. Others, for whom visual perfection is essential to audience immersion, invest in platforms with cutting-edge lip-sync technology. For storytellers and educators, pure audio and the conveyance of emotion remain the highest goals, guiding them toward specialized audio engines. Meanwhile, large organizations and social media managers gravitate toward integrated platforms that combine translation with broader editing suites and collaborative workflows. The most successful strategies carefully align the choice of tool with specific content goals. This deliberate approach unlocks previously inaccessible markets and shows that the final barrier between a creator and a global audience is no longer language itself, but the strategic implementation of technology.