Businesses today must manage and analyze large volumes of data from sources such as social media, IoT sensors, and consumer transactions, and their systems must scale as those volumes grow. Data engineers meet this demand with two primary processing methods: real-time processing and batch processing. Each has strengths and weaknesses, and the key to success lies in combining them so that data handling is fast where speed matters and efficient at scale.

Real-time processing continuously monitors and analyzes data as it is generated. It offers near-instantaneous insights, which are crucial wherever timely information is essential, as in stock market applications or fraud detection systems. Common technologies for real-time pipelines include Amazon Kinesis, Apache Flink, and Apache Kafka, which are built to ingest and process high-volume data streams so that businesses can react to emerging trends and threats as they happen. Real-time processing is powerful, but it carries its own challenges and costs.
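
To make the event-at-a-time model concrete, here is a minimal Python sketch of a velocity check, one common fraud-detection signal. It assumes events arrive in timestamp order and uses a hypothetical `VelocityChecker` class with an illustrative 60-second window and a limit of three transactions; a production system would run this kind of logic inside a stream processor such as Flink rather than a plain loop.

```python
from collections import defaultdict, deque

WINDOW_SECONDS = 60       # hypothetical sliding-window length
MAX_TXNS_PER_WINDOW = 3   # hypothetical per-card limit inside the window


class VelocityChecker:
    """Flags a card that makes too many transactions within a sliding time window."""

    def __init__(self, window=WINDOW_SECONDS, limit=MAX_TXNS_PER_WINDOW):
        self.window = window
        self.limit = limit
        # card_id -> timestamps still inside the window (kept in memory for this sketch)
        self.recent = defaultdict(deque)

    def process(self, card_id, ts):
        """Handle one event as it arrives; return True if it looks suspicious."""
        times = self.recent[card_id]
        # Evict timestamps that have fallen out of the window (assumes in-order events).
        while times and ts - times[0] >= self.window:
            times.popleft()
        times.append(ts)
        return len(times) > self.limit


checker = VelocityChecker()
stream = [("card-1", 0), ("card-1", 10), ("card-2", 15),
          ("card-1", 20), ("card-1", 30), ("card-1", 95)]
for card, ts in stream:
    if checker.process(card, ts):
        print(f"ALERT: {card} at t={ts}s")
```

Each event is evaluated the moment it arrives against state kept for its card, which is what lets the check fire during the suspicious activity rather than at the end of the day.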

Batch processing, on the other hand, remains an indispensable method for handling large-scale data efficiently. Unlike real-time processing, batch processing accumulates data over a period before processing it all at once, a method well-suited to tasks that do not require immediate action such as payroll calculations or generating quarterly reports. Popular tools for batch processing include Apache Hadoop, Apache Spark, and AWS Glue, all of which are designed to manage vast amounts of data cost-effectively while ensuring high reliability and integrity. Batch processing is particularly advantageous for extensive datasets that demand in-depth analysis without the urgency of real-time updates.
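
By contrast, a batch job waits until a period's data has accumulated and then processes it in one pass. The sketch below illustrates this with a hypothetical payroll run over made-up timesheet records; at real scale, the same group-and-aggregate step would typically be expressed in a framework such as Spark.

```python
from collections import defaultdict

# Hypothetical timesheet records accumulated over a pay period:
# (employee, hours_worked, hourly_rate)
timesheets = [
    ("alice", 8.0, 30.0),
    ("bob", 6.5, 25.0),
    ("alice", 7.5, 30.0),
    ("bob", 8.0, 25.0),
]


def run_payroll_batch(records):
    """Process the whole accumulated batch in a single pass at the end of the period."""
    totals = defaultdict(float)
    for employee, hours, rate in records:
        totals[employee] += hours * rate
    return dict(totals)


print(run_payroll_batch(timesheets))  # {'alice': 465.0, 'bob': 362.5}
```

Nothing is computed while the records trickle in; the work happens once, which is what keeps batch processing simple and cheap for tasks that can wait.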

Understanding Real-Time Data Processing

Real-time data processing delivers immediate insights, which are vital when decisions must be made quickly, as in fraud detection or personalized recommendations. It also improves user experience through features such as instant notifications that respond to users' actions as they happen. And because issues and opportunities become visible as they arise, businesses can act on them while they still matter instead of reacting after the fact.

However, these benefits come with real complexity. Real-time systems run continuously and must keep pace with incoming data even at peak volume, which requires substantial and often expensive computing resources. They also depend on distributed computing and low-latency networks, which add layers of infrastructure complexity. For non-urgent tasks, real-time processing can waste resources that would be better spent elsewhere, so businesses should evaluate whether each use case truly needs it before committing to the added cost and complexity.

Understanding Batch Processing

Batch processing is resource-efficient because it handles data in bulk, which suits the large datasets typical of data warehouses and Extract, Transform, Load (ETL) processes. Processing large volumes in a single run keeps throughput high and costs low. Batch systems are also generally easier to design and maintain than their real-time counterparts, since they do not require constant monitoring or the ability to scale instantly with sudden spikes in incoming data.

Despite its efficiency, batch processing introduces inherent delays that make it a poor fit for time-sensitive tasks. The lag between when data is generated and when it is analyzed can cause businesses to miss critical opportunities or respond too late to emerging issues. Batch processing is also less flexible: new data or changed conditions are reflected only after the next run completes. Businesses should therefore weigh the latency requirements of each task or application to decide when batch processing is appropriate and when a real-time approach would yield better results.
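
The size of this lag can be estimated with simple arithmetic. As a rough sketch, assume a job runs every T hours, includes everything that arrived before it started, and publishes results after a run time of P hours. A record that arrives just after one run starts must wait about T for the next run and then P for that run to finish; if arrivals are spread evenly, the average wait is about half the interval plus the run time. For an illustrative nightly job (T = 24 h) that takes P = 2 h:

```latex
\begin{aligned}
\Delta_{\max} &\approx T + P = 24\,\text{h} + 2\,\text{h} = 26\,\text{h} \\
\bar{\Delta}  &\approx \tfrac{T}{2} + P = 12\,\text{h} + 2\,\text{h} = 14\,\text{h}
\end{aligned}
```

Shortening the interval reduces the lag but increases how often the job must run, which is exactly the cost trade-off described above.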

The Need for Both Real-Time and Batch Processing

In practical business environments, neither real-time nor batch processing alone can meet all data needs comprehensively. A hybrid approach that leverages both methods provides a balanced solution, often proving to be the most effective strategy. For instance, e-commerce platforms can utilize real-time data processing to make immediate product recommendations during user browsing while relying on batch processing for analyzing sales trends to optimize inventory and understand long-term customer preferences.

Hybrid approaches can be seen in various industries. In streaming services, real-time processing suggests shows based on current viewing while batch processing evaluates long-term trends to enhance content strategies. Similarly, in IoT applications, real-time systems manage critical events such as temperature spikes in industrial equipment, while batch processing provides insights from historical data for operational improvements and predictive maintenance. This balanced approach ensures that businesses can respond instantaneously where needed while also gaining comprehensive, in-depth insights from accumulated data over time.
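
The IoT split described above can be sketched in a few lines of Python: each reading is checked against a threshold the moment it arrives, while the same readings are summarized later as a batch. The machine names, readings, and 90 C limit are hypothetical placeholders.

```python
from collections import defaultdict
from statistics import mean

ALERT_THRESHOLD_C = 90.0  # hypothetical temperature limit for the equipment


def on_reading(machine_id, temp_c):
    """Real-time path: evaluate each reading the moment it arrives."""
    if temp_c > ALERT_THRESHOLD_C:
        return f"ALERT {machine_id}: {temp_c:.1f} C"
    return None


def daily_summary(readings):
    """Batch path: summarize a day's accumulated readings per machine."""
    by_machine = defaultdict(list)
    for machine_id, temp_c in readings:
        by_machine[machine_id].append(temp_c)
    return {m: {"mean": mean(t), "max": max(t)} for m, t in by_machine.items()}


readings = [("press-1", 71.0), ("press-1", 93.5), ("press-2", 65.0), ("press-1", 74.5)]

# Real-time: an alert fires as soon as the offending reading is seen.
for machine_id, temp_c in readings:
    alert = on_reading(machine_id, temp_c)
    if alert:
        print(alert)

# Batch: the same readings, processed together later for trend analysis.
print(daily_summary(readings))
```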

Strategies for Balancing Real-Time and Batch Processing

Achieving the right balance between real-time and batch processing requires a thoughtful approach that includes understanding specific use cases, embracing suitable architecture paradigms, and maintaining a sharp focus on data quality and infrastructure robustness. Knowing when to deploy each processing method based on the priority and latency requirements of tasks is crucial for resource optimization.

Know Your Use Cases

Understanding specific business needs is the first step in balancing the two methods. High-priority tasks with strict latency requirements, such as fraud detection and dynamic pricing, suit real-time processing because they demand immediate action. Lower-priority tasks that can tolerate latency, such as quarterly reports or churn analysis, are better handled through batch processing. By categorizing tasks according to their urgency and data requirements, businesses can allocate resources where they matter most, keeping data handling both efficient and cost-effective.
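
One lightweight way to apply this categorization is to record, for each task, how stale its result may be and route it with a single cutoff. The task list and the 60-second cutoff below are illustrative assumptions, not recommendations; real decisions would also weigh cost and data volume.

```python
from dataclasses import dataclass


@dataclass
class Task:
    name: str
    max_latency_s: float  # how stale a result may be before it loses its value


# Hypothetical cutoff: anything that must be answered within a minute goes to streaming.
REAL_TIME_CUTOFF_S = 60.0


def choose_mode(task: Task) -> str:
    """Route a task to real-time or batch processing based on its latency tolerance."""
    return "real-time" if task.max_latency_s <= REAL_TIME_CUTOFF_S else "batch"


tasks = [
    Task("fraud detection", 1),
    Task("dynamic pricing", 30),
    Task("churn analysis", 7 * 24 * 3600),     # a week is acceptable
    Task("quarterly report", 90 * 24 * 3600),  # a quarter is acceptable
]
for t in tasks:
    print(f"{t.name}: {choose_mode(t)}")
```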

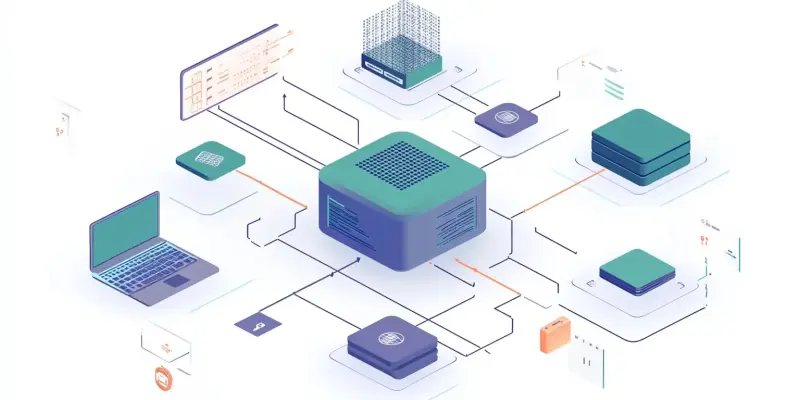

Use a Lambda Architecture

Lambda Architecture combines batch and real-time processing in a single system. It has three layers: the batch layer periodically recomputes views over the full historical dataset for large-scale analysis; the speed layer processes recent data as it arrives, covering the gap until the next batch run; and the serving layer merges results from both to present a unified view of the data. Lambda Architecture is complex to set up and maintain, in part because the same processing logic typically has to be implemented in both the batch and speed layers. In return, it captures the strengths of both methods, giving businesses quick insights alongside deep historical analysis.
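
A toy version of the three layers, using page-view counts, shows how the serving layer combines a precomputed batch view with a real-time delta. All names and data here are hypothetical, and a real deployment would use separate systems (for example, Spark for the batch layer and a stream processor such as Flink for the speed layer) rather than in-memory counters.

```python
from collections import Counter

# Hypothetical page-view events: (page, timestamp)
historical = [("home", 1), ("cart", 2), ("home", 3)]  # already covered by the last batch run
recent = [("home", 10), ("checkout", 11)]             # arrived after the batch cutoff


def batch_layer(events):
    """Batch layer: recompute a complete view from scratch over all historical data."""
    return Counter(page for page, _ in events)


class SpeedLayer:
    """Speed layer: incrementally maintain a view of events the batch layer has not seen yet."""

    def __init__(self):
        self.view = Counter()

    def ingest(self, event):
        page, _ = event
        self.view[page] += 1


def serving_layer(batch_view, speed_view, page):
    """Serving layer: answer a query by merging the batch view with the real-time delta."""
    return batch_view[page] + speed_view[page]  # Counter returns 0 for missing keys


batch_view = batch_layer(historical)
speed = SpeedLayer()
for e in recent:
    speed.ingest(e)

print(serving_layer(batch_view, speed.view, "home"))      # 2 from batch + 1 from speed
print(serving_layer(batch_view, speed.view, "checkout"))  # 0 from batch + 1 from speed
```

When the next batch run absorbs the recent events, the speed layer's state for them can be discarded. Note that the counting logic appears in both `batch_layer` and `SpeedLayer`; keeping such duplicated logic consistent is a large part of what makes this architecture demanding to maintain.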

Prioritize Data Quality

High-quality data is the cornerstone of accurate insights, regardless of the processing method used. Investing in tools and protocols for data monitoring, cleaning, and validation is essential for maintaining data integrity. Tools such as Apache NiFi, dbt (data build tool), and Great Expectations can support these efforts, ensuring that data used in both real-time and batch processing is reliable, clean, and trustworthy. High data quality reduces the likelihood of errors and enhances the accuracy of insights, ultimately leading to better decision-making and operational efficiency.
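
Tools like dbt and Great Expectations let you declare rules such as "not null" or "value in an accepted set" (dbt ships `not_null` and `accepted_values` tests, for example). The plain-Python sketch below is not either tool's API; it only illustrates the kind of checks involved, with hypothetical field names and allowed statuses. Because the function validates one record at a time, the same rules can run on each event in a stream or on every row of a batch.

```python
VALID_STATUSES = {"placed", "delivered", "cancelled"}  # hypothetical accepted values


def validate_order(record):
    """Return a list of rule violations for one record (an empty list means it passed)."""
    errors = []
    if not record.get("order_id"):
        errors.append("order_id is missing")
    amount = record.get("amount")
    if not isinstance(amount, (int, float)) or amount < 0:
        errors.append("amount must be a non-negative number")
    if record.get("status") not in VALID_STATUSES:
        errors.append(f"unexpected status {record.get('status')!r}")
    return errors


records = [
    {"order_id": "A1", "amount": 25.0, "status": "delivered"},
    {"order_id": "", "amount": -5.0, "status": "lost"},
]
for r in records:
    problems = validate_order(r)
    print("OK" if not problems else f"REJECT: {problems}")
```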

Leverage Cloud Platforms

Cloud platforms such as AWS, Azure, and Google Cloud provide scalable, flexible infrastructure for both real-time and batch processing. Managed services such as AWS Glue for batch ETL, Amazon Kinesis for real-time streaming, and Google BigQuery for data warehousing and analytical queries let businesses focus on their core operations instead of managing infrastructure. These platforms make it easier to scale data pipelines, absorb varying workloads, and maintain high availability and reliability.

Continuously Monitor and Optimize

Balancing real-time and batch processing is an ongoing process, not a one-time decision. Regularly monitoring the performance and cost of each pipeline confirms whether the chosen approach still fits evolving business needs, and adjustments should follow as data requirements and available technology change. A use case that once justified streaming may no longer need it, while a nightly batch job may become too slow for a new requirement. Reviewing and refining these choices regularly keeps the data processing ecosystem agile and responsive to new challenges and opportunities.
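
Monitoring can start with two numbers per pipeline: tail latency and unit cost. The sketch below computes a nearest-rank 95th-percentile latency and the cost per million events, then compares them to targets. The function names, sample latencies, cost figures, and thresholds are all hypothetical; in practice these metrics would come from your monitoring and billing systems.

```python
import math


def percentile(values, p):
    """Nearest-rank percentile (p between 0 and 100) of a non-empty list."""
    ordered = sorted(values)
    rank = max(1, math.ceil(p * len(ordered) / 100))
    return ordered[rank - 1]


def review_pipeline(latencies_ms, monthly_cost, events_processed, slo_ms, budget_per_million):
    """Compare observed latency and unit cost against targets; return any findings."""
    p95 = percentile(latencies_ms, 95)
    cost_per_million = monthly_cost * 1_000_000 / events_processed
    findings = []
    if p95 > slo_ms:
        findings.append(f"p95 latency {p95} ms exceeds {slo_ms} ms target")
    if cost_per_million > budget_per_million:
        findings.append(
            f"cost ${cost_per_million:.2f}/M events exceeds ${budget_per_million:.2f}/M budget"
        )
    return findings


latencies = [120, 95, 110, 130, 480, 105, 100, 98, 115, 125]
print(review_pipeline(latencies, monthly_cost=1200.0, events_processed=300_000_000,
                      slo_ms=250, budget_per_million=5.0))
```

Here the cost is within budget but a single slow outlier pushes the p95 over target, the kind of signal that might prompt a closer look at the pipeline rather than an immediate redesign.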

Real-World Application: A Food Delivery App

A practical example of balancing real-time and batch processing can be seen in the operations of a food delivery app. Real-time processing in such an app provides users with immediate updates on driver locations, enhancing customer experience by offering transparency and up-to-the-minute information. It also helps in detecting fraudulent orders instantaneously, ensuring security and trust within the platform. Additionally, real-time processing enables the app to send personalized push notifications to users, informing them of special deals or updates relevant to their preferences.
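
On the real-time side, one practical detail of live driver tracking is that location updates can arrive out of order over mobile networks, so the app should keep only the newest position per driver. The sketch below assumes each update carries a device-side timestamp; the `LocationUpdate` and `DriverLocations` names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class LocationUpdate:
    driver_id: str
    lat: float
    lon: float
    ts: int  # event time in epoch seconds, assumed to be set by the driver's device


class DriverLocations:
    """Keeps only the newest known position per driver, as a live map view would need."""

    def __init__(self):
        self.latest = {}

    def apply(self, update: LocationUpdate) -> bool:
        """Apply an update; return False if it is older than what is already stored."""
        current = self.latest.get(update.driver_id)
        # Network delays can deliver updates out of order; never overwrite a newer fix.
        if current is not None and current.ts >= update.ts:
            return False
        self.latest[update.driver_id] = update
        return True


store = DriverLocations()
store.apply(LocationUpdate("d42", 40.7128, -74.0060, ts=1000))
store.apply(LocationUpdate("d42", 40.7135, -74.0051, ts=1010))
store.apply(LocationUpdate("d42", 40.7130, -74.0058, ts=1005))  # late arrival, ignored
print(store.latest["d42"])
```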

On the other hand, batch processing in a food delivery app handles tasks that do not require immediate action but are essential for long-term operational efficiency. For example, batch processing can be used to analyze delivery times and optimize routes, ensuring efficient use of resources and timely deliveries. It can also be utilized to generate monthly revenue reports, providing insights into financial performance and helping with strategic planning. Furthermore, batch processing can train machine learning models on historical data to improve future recommendations and predictions, thus enhancing the overall user experience and operational capabilities.
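
The batch side might look like the following sketch: a periodic job that scans historical orders to compute average delivery time per zone and revenue per month. The record layout, zone names, and `run_batch_job` function are hypothetical, and a real job would read from a data warehouse rather than an in-memory list.

```python
from collections import defaultdict
from datetime import date

# Hypothetical historical orders: (order_date, zone, delivery_minutes, order_total)
orders = [
    (date(2024, 1, 3), "north", 28, 32.50),
    (date(2024, 1, 17), "south", 41, 18.00),
    (date(2024, 1, 29), "north", 35, 24.00),
    (date(2024, 2, 2), "south", 37, 45.25),
]


def run_batch_job(rows):
    """Scan all accumulated orders once and produce two reports."""
    minutes_by_zone = defaultdict(list)
    revenue_by_month = defaultdict(float)
    for order_date, zone, minutes, total in rows:
        minutes_by_zone[zone].append(minutes)
        revenue_by_month[order_date.strftime("%Y-%m")] += total
    avg_minutes = {z: sum(m) / len(m) for z, m in minutes_by_zone.items()}
    return avg_minutes, dict(revenue_by_month)


avg_minutes, revenue = run_batch_job(orders)
print(avg_minutes)  # slower zones are candidates for route optimization
print(revenue)      # feeds the monthly revenue report
```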

Final Thoughts

Real-time and batch processing are complementary rather than competing. Real-time processing, with tools such as Amazon Kinesis, Apache Flink, and Apache Kafka, delivers immediate insight where latency matters, as in fraud detection or live delivery tracking, at the price of greater cost and complexity. Batch processing, with tools such as Apache Hadoop, Apache Spark, and AWS Glue, handles large datasets reliably and cost-effectively for work that can wait, such as payroll, quarterly reports, and model training.

The most effective data strategies combine the two: classify each use case by its latency needs, consider a hybrid design such as Lambda Architecture, enforce data quality across both paths, use managed cloud services to reduce the operational burden, and revisit these choices as business requirements evolve.