The surge of generative AI and Large Language Models (LLMs) is transforming business communications and data analysis. Companies now face a choice between readily available public models and private LLMs customized to their specific needs. That decision will shape enterprise AI strategy and affect innovation, competitiveness, and data security. Public LLMs offer broad accessibility, while private LLMs provide a tailored experience that can yield significant strategic advantages. The trade-offs run both ways. Private solutions demand higher costs and greater investment in resources. Public models are cost-effective and easy to deploy, but they offer less control over data privacy. Balancing these factors is key as businesses navigate an increasingly AI-driven landscape.

The Allure and Risks of Public Large Language Models

Public LLMs such as ChatGPT have captured the attention of the business world with their broad general knowledge and flexible, conversational responses. Enterprises are drawn to these models for their immediate availability and the promise of scaling customer interactions and information retrieval. The honeymoon phase can be short-lived, however. Public LLMs sometimes produce confident but inaccurate or misleading outputs, often termed “hallucinations.” Acting on such output can lead to serious consequences, from operational mishaps to lawsuits over the spread of faulty information, which makes public LLMs a double-edged sword.

The possibility of data breaches and the misuse of sensitive information only heightens the perils of public LLMs. In an era where data is as valuable as currency, the easy integration of public models is overshadowed by the risk that corporate or client data entered into them could be exposed. These concerns are not misplaced. Recent incidents, such as a March 2023 bug that briefly showed some ChatGPT users the titles of other users' conversations, have laid bare the vulnerabilities of public AI platforms. As enterprises confront these risks, the need to rethink their AI strategies becomes more urgent.

The Strategic Edge of Private Large Language Models

Private LLMs are rapidly gaining traction in the business world because they offer strategic advantages through tailored AI solutions. By building on the data and operational processes unique to their organization, businesses can carve out a competitive edge. These models deliver a highly personalized service aligned with a company’s particular expertise and strategic objectives. As a result, private LLMs let businesses control how their AI represents them and use it to reinforce their unique selling points.

Such bespoke models are more than an exclusive benefit; they are a strategic enterprise asset that drives innovation. Private LLMs give businesses the opportunity not just to keep up with industry trends but to lead their field. Companies that adopt them are positioned at the vanguard of progress, helping to set the direction of future industry standards.

The Case for Tailored AI in a Competitive Market

In the competitive jungle of the business world, differentiation is not merely an advantage but a necessity. Private LLMs act as a catalyst for that differentiation, freeing enterprises from dependence on the unpredictable data pools behind public models. By building their own AI systems, companies not only protect their trade secrets but also tailor customer engagement, product development, and market strategy with far greater precision. This bespoke approach sets them apart in the marketplace and strengthens their position against competitors.

The shift to private LLMs reflects a commitment to long-term excellence and sustainability. It is a strategic investment in an enterprise’s future: a privately curated body of data becomes a repository of unique capabilities and market insights that competitors cannot easily replicate. As businesses embrace this approach, they chart a course toward a future in which their bespoke AI becomes a keystone of their success.

Mitigating Security Concerns with Private AI Solutions

Security takes center stage in the discussion of LLMs, and the vulnerabilities of public models have made businesses justifiably wary. The threat of cyberattacks and unauthorized extraction of confidential data has pushed enterprises to seek safer havens for their AI work. Private LLMs emerge as a bulwark against these threats, creating a secure space where enterprises can explore AI’s potential without the constant fear of data compromise.

Within a private LLM, many of the fears associated with public models recede. Companies gain the peace of mind that comes with tightly controlled data channels and the added assurance that they can meet stringent governance and compliance standards. This protection allows businesses to innovate boldly, confident in the security of their data and in their oversight of how their AI is built and used.

The Rise of Supportive Tools for Building Private LLMs

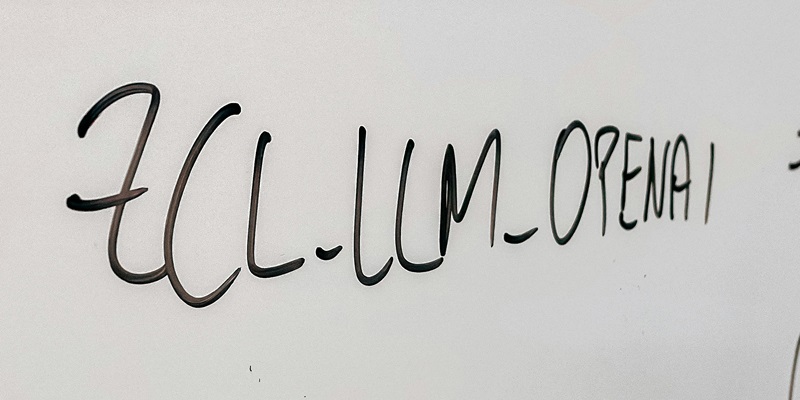

Recognizing the growing demand for AI systems aligned with individual business needs, major technology companies are building an ecosystem that supports the creation of private LLMs. This landscape is full of tools and frameworks designed to help construct bespoke models, suggesting a paradigm shift in technology strategy for businesses. As the infrastructure for private AI development matures, a new era of innovation is inviting enterprises to forge their own paths.

The proliferation of resources such as IBM’s Watson and other enterprise-grade solutions is enabling a transformative move toward personalized AI. These tools not only make it easier to create private LLMs but also let companies refine their AI to a degree previously unattainable. As this trend gains momentum, the message is clear: the future of enterprise AI lies in private, secure, and bespoke Large Language Models.