The virtualization landscape is shifting as organizations grapple with new licensing structures and emerging alternatives. Broadcom’s acquisition of VMware has prompted many businesses to reassess their virtualization strategies, and organizations reliant on VMware now face a turning point: accept Broadcom’s revised terms or explore other options. Gartner’s research underlines the discontent, finding that two-thirds of organizations view the recent licensing changes negatively. That dissatisfaction is steering enterprises toward a multi-hypervisor environment. The trend looks inevitable because there is no simple replacement for VMware and the end of the vSphere 8 support lifecycle is approaching.

Navigating Licensing Changes and Exploring Alternatives

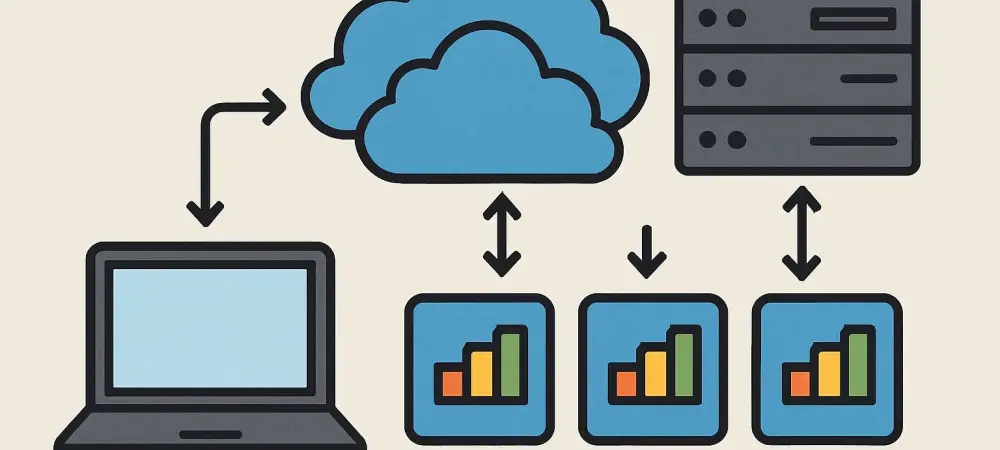

Dissatisfaction with Broadcom’s licensing structure is not merely anecdotal. Gartner’s data shows that most organizations are reluctant to accept the new terms. As a result, businesses are compelled to consider alternative virtualization platforms as part of a more sustainable strategy. VMware has long been the backbone of many data centers, valued for its uptime and performance, but with vSphere 8 support ending in 2027, organizations need to act without delay. A viable path forward is a multi-hypervisor setup that combines different hypervisor technologies, supporting mission-critical applications while accommodating diverse infrastructure. Such a transition, however, demands foresight and methodical planning, especially in the medium-to-large environments that underpin core business operations.

The timeframes involved make updating the strategy urgent. Experts estimate that migrations can take anywhere from one and a half to four years, so businesses cannot afford to postpone planning; alternative platforms need to be evaluated now. Planning the migration means matching the new infrastructure to the performance of the existing environment, a task complicated by dependencies across storage, networking, and management. Striking the right balance between cost, capability, and uptime is difficult, but it is the core of a successful multi-hypervisor strategy.
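To make the timeline concrete, the sketch below works backward from a support deadline to the latest month in which a migration of a given length could start. It assumes, for illustration only, a deadline of 1 October 2027 for the end of vSphere 8 support; the helper `latest_start` is hypothetical, and the deadline is a parameter to adjust.

```python
from datetime import date


def latest_start(deadline: date, months: int) -> date:
    """Return the date `months` calendar months before `deadline`.

    The day of month is capped at 28 so the result is always a valid date.
    """
    # Count months from year 0, step back, then convert to (year, month).
    total = deadline.year * 12 + (deadline.month - 1) - months
    year, month_index = divmod(total, 12)
    return date(year, month_index + 1, min(deadline.day, 28))


# Assumption for illustration: vSphere 8 support deadline of 1 October 2027.
DEADLINE = date(2027, 10, 1)

# Migration durations from the range cited above: 1.5 years and 4 years.
for months in (18, 48):
    print(f"{months}-month migration: start by {latest_start(DEADLINE, months)}")
```

Under these assumptions, an 18-month migration must begin by April 2026, while a four-year migration would already have needed to start in October 2023.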

Challenges and Decisions in Infrastructure Migration

About 70% of Gartner clients are reluctant to adopt hyperconverged infrastructure (HCI), and several factors explain why. Today’s entrenched IT environments, particularly those built on three-tier architectures, pose significant hurdles. Many organizations have invested heavily in external storage arrays, and replacing them has substantial implications. Networking is another sticking point, since many businesses are midway through implementing tools such as NSX. Despite interest in alternatives, these components remain tied to ongoing projects, which obstructs a clean migration. As organizations work through these layers of complexity, networking in particular holds the key to realizing the benefits of a multi-hypervisor approach.

Some businesses may be tempted to begin multi-hypervisor adoption by migrating less-critical applications first. Although this looks like a straightforward, easy win, it gives the organization only a limited view. It can also create a false impression of reduced VMware reliance, which Broadcom may counter by withdrawing discounts at license renewal. Moreover, less-critical workloads rarely use advanced features such as high availability, which essential applications depend on, so concentrating on them can inflate costs. Analyzing each application on performance, cost, and strategic fit is therefore necessary for long-term infrastructure stability and effectiveness.
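A simple weighted scoring model is one way to carry out this assessment. The sketch below is hypothetical throughout: the application names, the 1 to 5 ratings, and the 40/30/30 weights are illustrative assumptions, not Gartner guidance. Each application is rated on how strongly performance, cost, and strategic fit favor migrating it, and the weighted sum ranks the candidates.

```python
from dataclasses import dataclass

# Hypothetical weights (percent); each organization would set its own.
WEIGHTS = {"performance": 40, "cost": 30, "strategic_fit": 30}


@dataclass
class App:
    name: str
    # Criterion -> rating from 1 (weak case for migrating) to 5 (strong case).
    ratings: dict[str, int]


def weighted_score(app: App) -> int:
    """Weighted sum of the ratings; the maximum possible score is 500."""
    return sum(weight * app.ratings[criterion] for criterion, weight in WEIGHTS.items())


def rank(apps: list[App]) -> list[App]:
    """Order applications from strongest to weakest migration case."""
    return sorted(apps, key=weighted_score, reverse=True)


# Illustrative portfolio; names and ratings are invented for this example.
portfolio = [
    App("intranet-wiki", {"performance": 2, "cost": 2, "strategic_fit": 1}),
    App("erp", {"performance": 5, "cost": 4, "strategic_fit": 5}),
    App("batch-reports", {"performance": 3, "cost": 4, "strategic_fit": 2}),
]

for app in rank(portfolio):
    print(f"{app.name}: {weighted_score(app)}")
```

With these made-up ratings, `erp` scores 470, `batch-reports` 300, and `intranet-wiki` 170, so the easiest workload to move ends up last rather than first. Integer percentage weights keep the scores exact and easy to compare.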

Strategic Pathways and Future-Focused Planning

Simply swapping out hypervisors does not amount to a comprehensive virtualization strategy. Changing the underlying platform on its own consumes considerable time for little perceived value, because the switch is largely invisible to the business and barely affects organizational performance or value delivery. Businesses avoid this pitfall only by embedding the transition in a holistic strategy. Key considerations include robust virtualization management and the surrounding capabilities for redundancy, disaster recovery, and availability. Finding replacement tools becomes a priority, since components such as vCenter need proven alternatives for managing distributed systems.

A forward-looking strategy must be tailored closely to the organization’s requirements. Organizations that depend on reliable networking, rapid data processing, or compliance mandates must weigh the benefits of staying on-premises against the associated overheads. The available options include continuing under VMware’s revised model, moving to a different platform, or gaining flexibility through containerization. Modernizing applications to run as containers lets businesses choose where they operate, whether on-premises or in the cloud. Organizations with cloud-focused strategies may move virtualized applications to hosted environments or adopt infrastructure-as-a-service (IaaS) models. Predictable costs are vital to such strategies, which makes financial instruments such as Microsoft’s five-year reserved instances important.

Embracing Multi-Hypervisor Environments for a Competitive Edge

Broadcom’s licensing changes have left most VMware customers reassessing their options. With no simple replacement available and vSphere 8 support ending in 2027, a multi-hypervisor environment is becoming the likely outcome for many enterprises. Turning it into an advantage means starting migration planning early, accounting for existing investments in external storage and networking such as NSX, securing proven alternatives to management tooling like vCenter, and prioritizing workloads by performance, cost, and strategic fit rather than by how easy they are to move.