Imagine machines that think and adapt like humans, solving problems they have never encountered with creativity and insight. That vision is the ambitious goal of Artificial General Intelligence (AGI). At the forefront of this pursuit is the Abstraction and Reasoning Corpus for Artificial General Intelligence (ARC AGI), a benchmark designed to test an AI’s ability to reason abstractly and tackle novel challenges. As AI evolves at a rapid pace, ARC AGI serves as a yardstick for progress toward general machine intelligence, offering a window into how close, or how far, the field is from that leap in capability.

Understanding the Foundation of ARC AGI

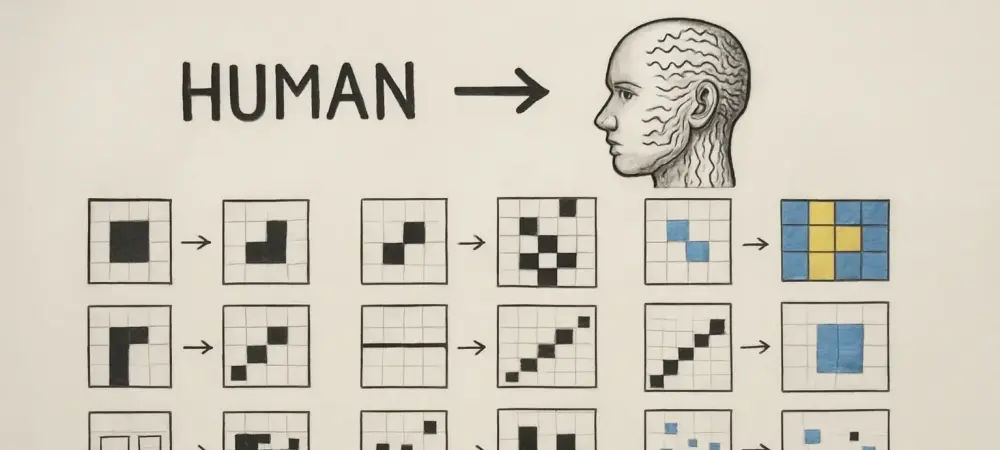

Introduced by François Chollet in 2019, ARC AGI challenges AI systems to demonstrate fluid intelligence: the capacity to adapt and solve problems without relying on memorized patterns or domain-specific training. Unlike traditional benchmarks, which can reward rote learning, this framework focuses on abstract reasoning, pushing models to think in ways that mirror human cognition. That departure from conventional metrics is why it is widely treated as a reference test of whether machines can generalize across unfamiliar tasks.

The benchmark’s relevance extends beyond academic curiosity, serving as a litmus test for the broader AI landscape. By emphasizing adaptability over static knowledge, it compels researchers to rethink how intelligence is defined and measured. This shift has sparked widespread interest, as success on ARC AGI could signal a transformative step toward AGI, where machines might one day match or surpass human intellectual versatility.

Analyzing the Iterations and Performance Metrics

ARC AGI 1: Testing the Basics of Fluid Intelligence

The first iteration, ARC AGI 1, laid the groundwork by presenting tasks that required abstract problem-solving rather than pre-learned solutions. Models were evaluated on their ability to identify patterns and apply logic in unfamiliar contexts, a direct challenge to conventional AI strengths. This initial set established a baseline for assessing how well systems could handle non-routine scenarios.

Notable performance came from a preview version of OpenAI’s o3 model, which was reported to score 75.7% on this benchmark at a low-compute setting and 87.5% at a high-compute setting. Such scores highlighted early AI capabilities in managing structured yet novel tasks, suggesting that certain systems could mimic aspects of human reasoning under controlled conditions. However, this success also raised questions about whether high scores reflected genuine understanding or merely sophisticated pattern recognition.
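To make the evaluation idea concrete, here is a minimal sketch of how a task and its scoring might be represented. The public ARC task files are JSON objects with `train` and `test` lists of input/output grid pairs, where each grid is a list of rows of integers from 0 to 9. Everything else here is an assumption for illustration: the toy task, the `mirror` and `identity` solvers, and the `score` helper are hypothetical, and the scoring is simplified to a single attempt per test grid, whereas the official evaluation allows a small number of attempts.

```python
from typing import Callable, Dict, List

Grid = List[List[int]]
Task = Dict[str, List[Dict[str, Grid]]]

# Hypothetical toy task (not from the real dataset):
# each output is the input mirrored left-to-right.
toy_task: Task = {
    "train": [
        {"input": [[1, 0], [2, 0]], "output": [[0, 1], [0, 2]]},
        {"input": [[3, 3, 0]], "output": [[0, 3, 3]]},
    ],
    "test": [
        {"input": [[0, 5], [0, 0]], "output": [[5, 0], [0, 0]]},
    ],
}


def mirror(grid: Grid) -> Grid:
    """Candidate solver: reverse every row."""
    return [list(reversed(row)) for row in grid]


def identity(grid: Grid) -> Grid:
    """Baseline solver: return a copy of the input unchanged."""
    return [row[:] for row in grid]


def score(task: Task, solver: Callable[[Grid], Grid]) -> float:
    """Fraction of test grids reproduced exactly; there is no partial credit."""
    tests = task["test"]
    solved = sum(solver(pair["input"]) == pair["output"] for pair in tests)
    return solved / len(tests)


if __name__ == "__main__":
    print("mirror:", score(toy_task, mirror))
    print("identity:", score(toy_task, identity))
```

The all-or-nothing comparison is the point of the sketch: a predicted grid that is wrong in a single cell counts as a failure, which is part of why near-miss pattern matching does not translate into a high score.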

ARC AGI 2: Confronting Greater Complexity

Building on the first iteration, ARC AGI 2 introduced heightened complexity, with tasks designed to push models beyond their comfort zones. The sharp decline in performance was telling: OpenAI’s o3 model, a top performer on the first set, scored only about 3% on this one. The contrast underscored significant limitations in handling more intricate and less predictable challenges.

The performance gap revealed a critical insight: current AI systems, while adept at familiar structures, often falter when faced with truly novel scenarios. This iteration exposed the brittleness of many approaches, prompting a reevaluation of how adaptability is engineered into models. It became evident that deeper innovation was needed to bridge the divide between static skills and dynamic reasoning.

ARC AGI 3: Interactive Games as the Next Frontier

Looking ahead, ARC AGI 3 promises to raise the bar further by incorporating small interactive games without explicit instructions. Models must intuit objectives through visual cues alone, testing their capacity for spontaneous understanding in real-time environments. This evolution marks a significant departure from static problem sets, aiming to simulate more lifelike decision-making contexts.

Expected to be a formidable challenge, this iteration could redefine benchmarks for AGI readiness. Success here would indicate a leap in intuitive reasoning, bringing AI closer to human-like cognition. As development continues, the anticipation around ARC AGI 3 underscores its potential as a pivotal milestone in the journey toward general intelligence.

Theoretical Underpinnings and Philosophical Context

François Chollet’s vision for ARC AGI is rooted in two contrasting views of intelligence: Marvin Minsky’s task-oriented view, in which AI means machines performing tasks that would require intelligence if done by humans, and John McCarthy’s emphasis on handling problems a system has not been prepared for. Chollet champions the latter, arguing that true intelligence lies in navigating uncharted territory rather than replicating known behaviors. This philosophy shapes the benchmark’s design, prioritizing fluid over crystallized intelligence.

Chollet illustrates the distinction with a metaphor: existing AI is like a network of roads, fixed paths of capability, while AGI is like a road-building company that can forge new routes as needed. The metaphor highlights the need for generative problem-solving, where systems create solutions rather than follow pre-built frameworks. Such insights challenge the field to move beyond incremental gains and aim for transformative adaptability.

Cutting-Edge Approaches to ARC Challenges

Modern strategies for tackling ARC tasks reflect a shift toward adaptation during inference. Test-time training fine-tunes a model on each task’s handful of demonstration pairs before it predicts the answer, so the model adjusts to the specific puzzle in front of it. Program synthesis, another key method, searches for or constructs a small program that reproduces the demonstrations and then runs it on the test input, mirroring a form of creative reasoning. These approaches have become dominant in addressing the benchmark’s unpredictable nature.
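Program synthesis can be illustrated with a deliberately tiny sketch: enumerate short compositions of grid operations and keep the first one that reproduces every demonstration pair. This is a brute-force toy under stated assumptions, not how competitive ARC systems work. The three primitives, the `run` and `synthesize` helpers, and the example grids are all hypothetical, and real solvers use far richer operation sets and guided rather than exhaustive search.

```python
from itertools import product
from typing import Callable, List, Optional, Sequence, Tuple

Grid = List[List[int]]
Primitive = Tuple[str, Callable[[Grid], Grid]]
Example = Tuple[Grid, Grid]


def flip_h(g: Grid) -> Grid:
    """Mirror left-to-right."""
    return [row[::-1] for row in g]


def flip_v(g: Grid) -> Grid:
    """Mirror top-to-bottom."""
    return [row[:] for row in g[::-1]]


def transpose(g: Grid) -> Grid:
    """Swap rows and columns."""
    return [list(col) for col in zip(*g)]


# Hypothetical miniature "language" of grid operations.
PRIMITIVES: List[Primitive] = [
    ("flip_h", flip_h),
    ("flip_v", flip_v),
    ("transpose", transpose),
]


def run(program: Sequence[Primitive], grid: Grid) -> Grid:
    """Apply each primitive in order."""
    for _, fn in program:
        grid = fn(grid)
    return grid


def synthesize(examples: Sequence[Example], max_depth: int = 3) -> Optional[List[Primitive]]:
    """Return the shortest program consistent with all examples, or None."""
    for depth in range(1, max_depth + 1):
        for program in product(PRIMITIVES, repeat=depth):
            if all(run(program, x) == y for x, y in examples):
                return list(program)
    return None


if __name__ == "__main__":
    # Demonstrations of a 90-degree clockwise rotation, which is not a primitive.
    demos = [
        ([[1, 2], [3, 4]], [[3, 1], [4, 2]]),
        ([[1, 2, 3]], [[1], [2], [3]]),
    ]
    program = synthesize(demos)
    if program is not None:
        print("found:", [name for name, _ in program])
        print("applied to new grid:", run(program, [[5, 6], [7, 8]]))
```

The rotation is not in the primitive set, so the search has to compose two operations to explain the demonstrations. The cost of that composition grows exponentially with program length, which is why practical systems pair the search with a learned model that proposes promising candidates.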

Chain-of-thought synthesis further enhances performance by breaking down complex tasks into logical steps, guiding models through intricate reasoning processes. By integrating such methods, AI systems can better navigate the abstract demands of ARC challenges, offering glimpses of how general intelligence might be engineered. These innovations illustrate a promising trajectory, even as full mastery remains elusive.

Impact on the AI Community and Industry

ARC AGI’s influence extends far beyond research labs, spurring competitive engagement through contests and substantial prizes for top performers. This environment fosters rapid innovation, as developers strive to outpace one another in solving the benchmark’s toughest problems. The resulting momentum has galvanized the AI community, creating a shared focus on abstract reasoning as a critical frontier.

Industries, too, feel the ripple effects, as insights from ARC AGI shape research priorities and model enhancements across sectors like healthcare, logistics, and education. The benchmark’s emphasis on adaptability informs how AI is integrated into real-world applications, driving a broader push for versatile systems. This competitive and practical interplay accelerates the field’s overall progress toward AGI.

Persistent Barriers and Technical Hurdles

Despite notable achievements, stark performance disparities across ARC iterations reveal enduring technical challenges. The drastic drop from ARC AGI 1 to ARC AGI 2 suggests that scaling complexity exposes fundamental weaknesses in current architectures. Overcoming these gaps requires rethinking how models learn and generalize in unprecedented situations.

True adaptability for novel tasks, a cornerstone of AGI, remains a formidable obstacle. Many systems still lean on latent patterns from training data, struggling when faced with entirely new paradigms. Addressing this limitation demands not just incremental tweaks but potentially radical shifts in AI design, a process fraught with uncertainty regarding timelines and feasibility.

Looking Ahead: The Path to AGI

Speculation abounds about what high scores on ARC AGI 3, possibly arriving within a couple of years of 2025, would signify for AGI’s proximity. Such a breakthrough would mark a profound shift, suggesting that machines are nearing the ability to reason intuitively across diverse contexts. This potential milestone keeps the AI community on edge, eagerly awaiting the next wave of results.

Anticipated advancements in techniques and testing methodologies offer hope for closing existing gaps. Enhanced frameworks for dynamic learning and more sophisticated evaluation tools could redefine how progress is measured. As these developments unfold, ARC AGI will likely remain a central gauge of AI’s evolving capabilities, shaping expectations for the future.

Reflecting on the Journey and Next Steps

Looking back, the exploration of ARC AGI illuminated both the remarkable strides and the stubborn challenges in AI’s pursuit of general intelligence. The benchmark stood as a rigorous test of fluid reasoning, revealing the strengths of models like OpenAI’s o3 on initial tasks while exposing their fragility under escalating complexity. Each iteration provided critical lessons, shaping a deeper understanding of what true adaptability entails.

Moving forward, the focus must shift to fostering innovation in dynamic learning strategies, encouraging models not just to react but to create solutions in real time. Collaborative efforts within the AI community, bolstered by competitive incentives, should prioritize novel architectures that tackle the unpredictability of future challenges. By investing in these areas, the field can build on past insights to pave a clearer path toward machines that think and adapt with human-like ingenuity.