The same algorithms designed to unlock individual student potential are now at risk of erecting digital barriers that mirror centuries of societal division, threatening the very promise of equitable education they were meant to advance. As artificial intelligence becomes increasingly woven into the fabric of modern learning, from guiding lesson plans to evaluating student performance, a critical examination of its hidden impacts is no longer optional—it is an ethical necessity. These sophisticated systems, intended to be objective and data-driven, can inadvertently become powerful engines for perpetuating historical biases, creating a new digital frontier for systemic inequality. Understanding this risk is the first step toward harnessing AI’s power for good while safeguarding the futures of all learners.

The Promise and Peril of AI in the Classroom

The rapid integration of AI tools into educational settings offers a compelling vision of the future. Schools are adopting intelligent systems to create personalized learning paths that adapt to each student’s pace, automate the burdensome task of grading, and provide teachers with real-time insights into classroom comprehension. This technological shift promises to make education more efficient, responsive, and tailored to individual needs, freeing up educators to focus on the uniquely human aspects of mentorship and inspiration. The potential to close achievement gaps and provide targeted support at an unprecedented scale has made AI a cornerstone of modern educational strategy.

However, beneath this promising surface lies a significant peril. These systems learn from data, and the data they consume is a reflection of a world replete with long-standing societal inequities. Without careful design and vigilant oversight, AI tools can absorb and amplify these biases, undermining the core mission of providing every student with an equal opportunity to succeed. This risk transforms a tool of potential progress into a mechanism for entrenching disadvantage, where a student’s future is shaped not by their potential but by the biased patterns of the past.

This exploration will delve into the origins of algorithmic bias, tracing how flawed data translates into tangible, and often harmful, consequences for students. It will examine the real-world impact of these digital decisions, from academic tracking to the erosion of student privacy and agency. Finally, it will outline the essential steps that educators, administrators, and policymakers must take to ensure that the implementation of AI serves to dismantle barriers, not reinforce them, paving the way for a more responsible and equitable technological future in education.

The High Stakes of Algorithmic Decision-Making in Education

Addressing bias in educational AI is not merely a technical challenge; it is a fundamental issue of civil rights and educational equity. When algorithms influence decisions about which students are placed in advanced courses, who receives extra support, or who is flagged as “at-risk,” they are actively shaping life trajectories. Allowing these systems to operate with unchecked biases risks creating a permanent digital caste system, where students from historically marginalized communities are systematically disadvantaged by automated judgments they cannot see, understand, or appeal. Upholding the principles of fairness and equal opportunity, therefore, requires a proactive and critical approach to AI deployment.

Conversely, the benefits of implementing AI ethically and equitably are profound. A well-designed system can foster genuine personalization that empowers students rather than predetermining their paths. By ensuring transparency and fairness, schools can build and maintain the trust of students and their families, a crucial component of any successful educational environment. Most importantly, ethical AI can become a tool for actively counteracting historical disadvantages. Instead of simply reflecting past inequalities, these systems can be calibrated to identify and support underserved students, helping to prevent the digital entrenchment of societal divides and creating a more just educational landscape for all.

How AI Systems Learn and Perpetuate Societal Biases

The mechanisms through which AI systems absorb and amplify bias are often subtle but deeply impactful. These models are not inherently prejudiced; they are powerful pattern-recognition machines that learn from the vast datasets they are trained on. When this data contains the echoes of historical inequity, the AI learns these patterns as objective truths, creating a feedback loop where past injustices are codified into future predictions. This process turns a supposedly neutral technology into a conduit for perpetuating systemic bias.

The Foundation of Bias: Flawed Data and Historical Inequity

At the heart of algorithmic bias lies a simple but powerful principle: “garbage in, garbage out.” AI models in education are trained on historical data, which includes everything from standardized test scores and attendance records to demographic information. This data is not neutral; it is a product of decades of societal inequities, including disparities in school funding, unequal access to resources, and cultural biases embedded in assessment methods. The algorithms learn to associate certain indicators—often proxies for race and socioeconomic status—with academic outcomes.

This digital reflection of past injustices becomes particularly dangerous when used for predictive purposes. Consider how an AI system might interpret a student’s zip code. While seemingly a neutral data point, a zip code is often a powerful proxy for socioeconomic status, racial demographics, and the level of funding available to local schools. An algorithm trained on historical data may learn that students from certain zip codes have, on average, lower test scores. Consequently, it might unfairly predict lower academic potential for a bright student from an under-resourced community, not because of their individual ability but because of the statistical shadow cast by their address. This codifies a historical disadvantage into a predictive judgment, creating a significant barrier to the student’s future opportunities.
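Here is a deliberately simplified Python sketch of the zip-code example. Everything in it is hypothetical: the data, the zip codes, and the functions `train_zip_baseline` and `predict_potential`. The "model" is just a per-zip historical average blended with the student's own score, not any real product's method. The model is never given race or income. Even so, two students with identical recent scores receive very different predictions because of where they live.

```python
from statistics import mean

# Hypothetical historical records: (zip_code, test_score).
# There is no race or income column; the model never sees demographics.
HISTORY = [
    ("11111", 62), ("11111", 58), ("11111", 65), ("11111", 55),  # under-funded area
    ("22222", 81), ("22222", 85), ("22222", 78), ("22222", 84),  # well-funded area
]


def train_zip_baseline(history):
    """'Learn' the average historical score for each zip code."""
    scores_by_zip = {}
    for zip_code, score in history:
        scores_by_zip.setdefault(zip_code, []).append(score)
    return {z: mean(scores) for z, scores in scores_by_zip.items()}


def predict_potential(model, zip_code, recent_score, zip_weight=0.7):
    """Blend the zip-code average with the student's own recent score.

    zip_weight is a hypothetical parameter: the higher it is, the more the
    address outweighs the individual's demonstrated ability.
    """
    return zip_weight * model[zip_code] + (1 - zip_weight) * recent_score


model = train_zip_baseline(HISTORY)
# Two students with the same recent score of 90, living in different zip codes.
a = predict_potential(model, "11111", 90)
b = predict_potential(model, "22222", 90)
print(f"{a:.1f} vs {b:.1f}")  # 69.0 vs 84.4
```

The disparity enters through a seemingly neutral feature. For that reason, deleting demographic columns from the training data does not remove it, and the tool's outcomes still have to be audited.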

From Biased Data to Damaging Outcomes: The Real-World Impact on Students

The theoretical problem of biased data becomes a tangible reality when algorithmic predictions influence educational decisions. A flawed prediction can lead to a student being mislabeled or unfairly denied access to advanced learning tracks, scholarships, or gifted programs. These decisions, cloaked in the objectivity of data, carry immense weight and can have lasting consequences on a student's academic confidence and career prospects. The AI's output is often treated as an impartial assessment, making it difficult for educators or parents to challenge its conclusions, even when they conflict with their own observations of a student's capabilities.

These outcomes can quickly create self-fulfilling prophecies, where the system's prediction actively shapes a student's educational trajectory. A student incorrectly flagged as "at-risk" by an algorithm may be placed in a less rigorous academic track, fundamentally altering the quality of their education and limiting their exposure to challenging material. For example, an AI system that disproportionately identifies students from marginalized backgrounds as high-risk might lead not to additional support but to their exclusion from advanced placement courses or enrichment programs. In this scenario, the "at-risk" label becomes a justification for providing fewer opportunities, thereby institutionalizing a lower academic path and ensuring the algorithm's initial prediction comes true.
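A toy simulation can illustrate this feedback loop. It assumes a hypothetical tracking rule: flagged students are held in a standard track that raises scores more slowly, while the bar for being "on track" rises every year. The gains, the bar, and the `simulate` function are all invented for illustration. Two students start with the same score, and only the algorithm's initial flag differs.

```python
# Hypothetical yearly score gains per track; the numbers are illustrative only.
TRACK_GAIN = {"advanced": 6.0, "standard": 3.0}


def simulate(score, flagged, years, start_bar=70.0, bar_rise=5.0):
    """Replay the tracking loop year by year.

    Each year a flagged student is placed in the standard track (the "at-risk"
    label withholds rigor) and gains that track's points. The student is then
    re-flagged if the new score falls below a bar that rises with grade-level
    expectations.
    """
    tracks = []
    for year in range(1, years + 1):
        track = "standard" if flagged else "advanced"
        score += TRACK_GAIN[track]
        flagged = score < start_bar + bar_rise * year
        tracks.append(track)
    return score, tracks


# Two students who start with identical scores; only the initial flag differs.
a_score, a_tracks = simulate(70.0, flagged=False, years=3)
b_score, b_tracks = simulate(70.0, flagged=True, years=3)
print(a_score, b_score)  # 88.0 79.0
print(b_tracks)          # ['standard', 'standard', 'standard']
```

The flagged student never clears the rising bar, so the label keeps renewing itself and the gap widens by three points a year. This is the self-fulfilling pattern described above.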

Beyond Academics: The Erosion of Privacy and Student Agency

The implementation of educational AI extends beyond academic tracking, raising profound concerns about student privacy. To create personalized learning profiles, these systems collect a vast amount of data, far beyond grades and test scores. They can monitor mouse clicks, reading speed, time spent on tasks, and even behavioral patterns. This extensive data collection often occurs under vague consent agreements, creating significant privacy risks if the data is mishandled, breached, or shared with third-party vendors without transparent oversight. The constant surveillance can create a chilling effect in the classroom, discouraging the very creativity and exploration that education is meant to foster.
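One common mitigation is data minimization: share only what a vendor needs, and replace direct identifiers before anything leaves the school. The sketch below assumes a hypothetical allowlist and record layout. It uses Python's standard `hmac` module to pseudonymize IDs with a secret key that the district keeps. Pseudonymized data is not anonymous. This approach lowers re-identification risk but does not eliminate it.

```python
import hashlib
import hmac

# Hypothetical allowlist: the only fields this vendor needs for its stated purpose.
VENDOR_ALLOWED_FIELDS = {"grade_level", "time_on_task_min"}


def pseudonymize(student_id: str, secret_key: bytes) -> str:
    """Keyed hash (HMAC-SHA256): without the district's key, a vendor cannot
    recompute which real ID maps to which pseudonym."""
    return hmac.new(secret_key, student_id.encode("utf-8"), hashlib.sha256).hexdigest()


def minimize_for_vendor(record: dict, secret_key: bytes) -> dict:
    """Drop every field not on the allowlist and replace the real ID."""
    shared = {k: v for k, v in record.items() if k in VENDOR_ALLOWED_FIELDS}
    shared["pseudo_id"] = pseudonymize(record["student_id"], secret_key)
    return shared


# Hypothetical student record collected by a learning platform.
record = {
    "student_id": "S-1042",
    "name": "Jordan Lee",
    "zip_code": "11111",
    "grade_level": 8,
    "time_on_task_min": 34,
    "mouse_clicks": 1287,
}
shared = minimize_for_vendor(record, secret_key=b"district-secret-kept-in-house")
print(sorted(shared))  # ['grade_level', 'pseudo_id', 'time_on_task_min']
```

An allowlist is used rather than a blocklist. As a result, any new field the platform starts collecting stays in-house by default, rather than leaking to the vendor until someone notices.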

Furthermore, an over-reliance on automated “personalized learning” can inadvertently limit a student’s agency and intellectual growth. When an AI system dictates the curriculum, sets the pace, and suggests a narrow path forward, it can reduce learning to a process of completing predetermined tasks. This can stifle curiosity and discourage students from taking the intellectual risks necessary for deep, independent thinking. Instead of becoming active explorers of knowledge, students risk becoming passive recipients of algorithmically delivered content, diminishing their ability to develop critical decision-making skills and a sense of ownership over their own education.

A critical issue compounding these problems is the “black box” dilemma, where AI systems make high-stakes decisions without providing a clear rationale. Imagine an AI used in college admissions or for scholarship awards that rejects a qualified applicant. If the system cannot explain its reasoning, providing only a final decision, there is no way for the student, their family, or educators to understand, challenge, or correct a potentially biased or inaccurate outcome. This lack of transparency erodes trust in the educational process and leaves individuals with no recourse against automated judgments that can profoundly impact their futures.
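By contrast, a model whose score can be broken down into per-factor contributions can say why it reached a decision. The following sketch assumes a hypothetical linear scholarship score with hand-set weights, a reference profile, and a cutoff. It reports "reason codes": the points each factor gained or lost relative to that reference. A linear model is only one route to explainability, and real admissions systems are usually more complex.

```python
# Hypothetical weights, reference profile, and cutoff for a transparent
# linear scholarship score. Real systems differ; this only shows the idea.
WEIGHTS = {"gpa": 20.0, "essay_score": 0.5, "service_hours": 0.1}
REFERENCE = {"gpa": 3.75, "essay_score": 80, "service_hours": 50}
THRESHOLD = 110.0


def score_with_reasons(applicant):
    """Return the decision, the total score, and reason codes.

    Each reason is a feature's points gained or lost relative to the
    reference profile. The most negative entries come first, so they name
    the factors that pulled this applicant's score down the most.
    """
    total = sum(WEIGHTS[f] * applicant[f] for f in WEIGHTS)
    decision = "award" if total >= THRESHOLD else "decline"
    gaps = {f: WEIGHTS[f] * (applicant[f] - REFERENCE[f]) for f in WEIGHTS}
    reasons = sorted(gaps.items(), key=lambda item: item[1])
    return decision, total, reasons


applicant = {"gpa": 3.5, "essay_score": 60, "service_hours": 40}
decision, total, reasons = score_with_reasons(applicant)
print(decision, [name for name, _ in reasons[:2]])  # decline ['essay_score', 'gpa']
```

With reason codes like these, a student or counselor can see which factors drove a decline and challenge any that look inaccurate or unfair.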

Charting a Course for Equitable AI in Education

The ultimate value of AI in education is determined not by its technical power but by the strength of its ethical guardrails and a steadfast commitment to human oversight. Responsible implementation requires a multi-layered approach, one that recognizes that technology alone cannot solve problems rooted in systemic inequality. Lasting change depends on a holistic strategy that combines technical diligence with institutional reform and robust policy.

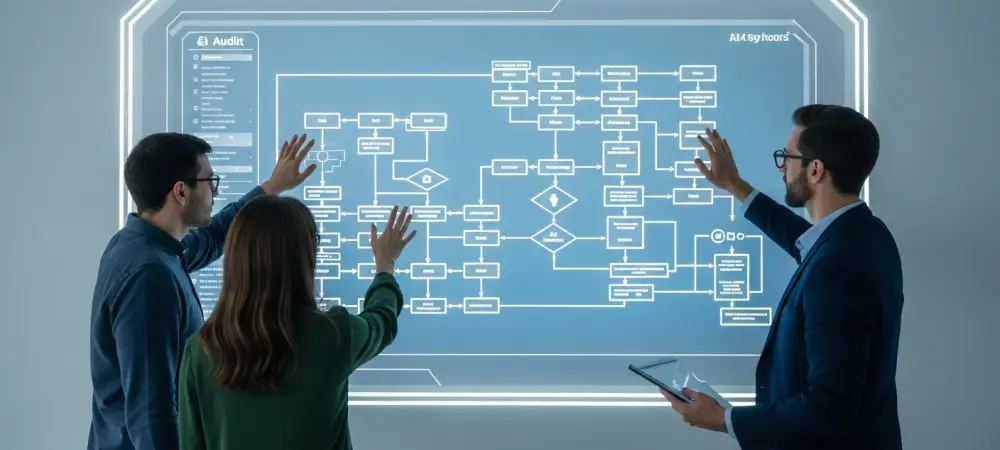

For educators and administrators, this means adopting a proactive, human-centered stance. Best practices include auditing any AI tool for bias both before and after its deployment, for example by comparing how often it flags or recommends students from different groups. Educators should always retain the final authority to override algorithmic recommendations. Institutions should also prioritize professional development, equipping teachers to understand how these tools work, interpret their outputs critically, and spot potential biases in action. Consent for data collection should shift from a one-time checkbox to a transparent, ongoing dialogue with students and families, so that all stakeholders understand what data is being gathered and why.
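A bias audit of an "at-risk" flagging tool can start with simple group-level comparisons. This sketch assumes hypothetical records of `(group, flagged_at_risk, actually_struggled)`. For each group, it computes the flag rate and the false positive rate, meaning the share of students who did not in fact struggle but were flagged anyway. It also applies the "four-fifths rule" to the rate of *not* being flagged. That rule is a screening heuristic from U.S. employment-selection guidelines, and borrowing its 0.8 threshold for school decisions is an assumption here, not an established standard.

```python
from collections import defaultdict


def audit(records):
    """records: iterable of (group, flagged_at_risk, actually_struggled)."""
    counts = defaultdict(lambda: {"n": 0, "flagged": 0, "ok": 0, "false_pos": 0})
    for group, flagged, struggled in records:
        c = counts[group]
        c["n"] += 1
        c["flagged"] += int(flagged)
        if not struggled:
            c["ok"] += 1                      # students who did not struggle
            c["false_pos"] += int(flagged)    # ...but were flagged anyway
    report = {}
    for group, c in counts.items():
        report[group] = {
            "flag_rate": c["flagged"] / c["n"],
            "not_flagged_rate": (c["n"] - c["flagged"]) / c["n"],
            # NaN if a group has no non-struggling students to measure against.
            "false_positive_rate": c["false_pos"] / c["ok"] if c["ok"] else float("nan"),
        }
    return report


def four_fifths_check(report, key="not_flagged_rate", threshold=0.8):
    """Ratio of the lowest to the highest favorable-outcome rate across groups."""
    rates = [r[key] for r in report.values()]
    impact_ratio = min(rates) / max(rates)
    return impact_ratio, impact_ratio >= threshold


# Made-up data: each group has 10 students, 2 of whom genuinely struggled.
records = (
    [("A", True, True)] * 2 + [("A", False, False)] * 8
    + [("B", True, True)] * 2 + [("B", True, False)] * 4 + [("B", False, False)] * 4
)
report = audit(records)
print(report["B"]["false_positive_rate"], four_fifths_check(report))  # 0.5 (0.5, False)
```

In this data, both groups contain the same number of students who genuinely struggled. Yet half of group B's non-struggling students are flagged, versus none in group A. A failed check like this should prompt educators to review the tool and, where warranted, override it. It is not an automatic verdict.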

At the policy level, there is a growing consensus around the need for strong regulatory frameworks to protect students. Existing regulation already points the way. The European Union's AI Act, for instance, treats AI systems used in education for admissions, evaluating learning outcomes, and monitoring students during tests as high-risk, subjecting them to requirements for transparency, human oversight, and risk management. Policymakers elsewhere can adopt a similar classification, and existing privacy laws need updating to address the unique challenges posed by AI-driven data collection. Together, these efforts by educators, institutions, and governments can create a foundation for harnessing the benefits of AI while actively safeguarding the principles of equity, privacy, and human agency in education.