The relentless hum of digital communication now carries a threat that evolves faster than many defenses can adapt, with malicious emails arriving in inboxes at a rate that has more than doubled over the past year. This dramatic escalation is not the work of larger human teams but the product of a powerful new ally for cybercriminals: artificial intelligence (AI). As AI becomes the engine behind modern cybercrime, it is rewriting the rules of digital security, rendering long-trusted defenses inadequate and forcing a fundamental reevaluation of how organizations protect their most sensitive information. The era of predictable, easily spotted phishing scams is over, replaced by a new generation of intelligent, automated, and highly personalized attacks.

Did You Just Get Phished? An Attack Now Happens Every 19 Seconds

The acceleration of digital threats has reached an unprecedented pace, with a new phishing attack now launched every 19 seconds. That is more than twice the rate of a year ago, when one attack was launched every 42 seconds. This is not a cyclical trend but a systemic shift in the cybercrime landscape, driven by the widespread operational deployment of artificial intelligence. No longer a theoretical or future concern, AI is the primary catalyst behind this surge. Threat actors are leveraging AI to automate and scale their operations to a degree previously unimaginable. The technology allows for the rapid creation and distribution of sophisticated phishing campaigns, overwhelming security systems with sheer volume and speed. This shift marks a critical inflection point where offensive AI capabilities are outpacing defensive measures.
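For a sense of scale, the two intervals quoted above can be converted into daily volumes. The short Python sketch below does only that arithmetic; the function and variable names are illustrative, and the 19-second and 42-second figures are the ones cited in this article.

```python
SECONDS_PER_DAY = 24 * 60 * 60

def attacks_per_day(interval_seconds: float) -> float:
    """Convert 'one attack every N seconds' into attacks per day."""
    return SECONDS_PER_DAY / interval_seconds

current = attacks_per_day(19)    # roughly 4,547 attacks per day
previous = attacks_per_day(42)   # roughly 2,057 attacks per day
growth = current / previous      # roughly 2.2 times the earlier rate

print(f"Now: {current:,.0f}/day, a year ago: {previous:,.0f}/day, growth: {growth:.2f}x")
```

Under these figures, the rate has grown by a factor of about 2.2, which is consistent with the "more than doubled" description.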

The End of Old-School Security: Why Your Spam Filter Is Failing

For years, organizations have relied on perimeter-based security controls, such as spam filters and secure email gateways, to act as a digital fortress. These systems were designed to identify and block known threats based on established signatures, keywords, and sender reputations. However, this model is becoming increasingly obsolete in the face of AI-driven cyberattacks that are engineered to be adaptive and evasive. The fundamental flaw in legacy systems is their reliance on static rules to combat a dynamic threat. AI-powered phishing campaigns do not use a single, repeatable template; instead, they are polymorphic, constantly altering their code, language, and delivery methods to appear benign to automated scanners. By generating unique malicious URLs for each target and crafting contextually aware messages, these attacks are designed to slip through the cracks of traditional defenses, landing directly in the user’s inbox where the real battle begins.
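The weakness of static rules can be illustrated with a minimal sketch. It assumes a purely exact-match URL blocklist, which is a simplification of how real gateways work, and every name, address, and URL in it is hypothetical. The point is only that a list built from previously seen URLs cannot match links that are generated fresh for each recipient.

```python
import hashlib

# Hypothetical blocklist of URLs observed in an earlier campaign.
KNOWN_BAD_URLS = {"https://login.example.test/reset?id=1001"}

def signature_match(url: str) -> bool:
    """Static check: flag a URL only if it exactly matches a known-bad entry."""
    return url in KNOWN_BAD_URLS

def per_recipient_url(base: str, recipient: str) -> str:
    """Stand-in for a polymorphic campaign: derive a distinct token per recipient."""
    token = hashlib.sha256(recipient.encode("utf-8")).hexdigest()[:12]
    return f"{base}?id={token}"

recipients = ["alice@example.test", "bob@example.test", "carol@example.test"]
urls = [per_recipient_url("https://login.example.test/reset", r) for r in recipients]

# None of the fresh URLs appears on the blocklist, so nothing is flagged.
flagged = [u for u in urls if signature_match(u)]
print(f"{len(set(urls))} unique URLs, {len(flagged)} flagged by the static blocklist")
```

Every recipient receives a different link, so the exact-match check never fires even though all three links belong to the same simulated campaign.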

The New Playbook: How AI Is Rewriting the Rules of Phishing

The new generation of phishing attacks leverages hyper-personalization at an industrial scale. AI algorithms can craft emails in any language with flawless grammar and syntax, effectively eliminating one of the most common red flags that once betrayed fraudulent messages. These campaigns go a step further by dynamically tailoring malicious links and spoofed brand assets based on a victim’s device, browser, and publicly available personal data. The depth of this customization is staggering, with security analyses revealing that 76% of initial infection URLs in some campaigns were unique to the recipient.

Moreover, threat actors are deploying increasingly evasive and malicious tactics. There has been a significant rise in “conversational” phishing, a technique that omits obvious links or attachments in initial emails to appear harmless. This method is often a precursor to sophisticated Business Email Compromise (BEC) schemes. Alongside this trend, there has been a 204% surge in phishing attacks designed specifically to deliver malware and a 105% increase in the deployment of Remote Access Tools (RATs), which are often managed by AI-powered automation to handle large-scale infiltrations.

Cybercriminals are also continuously evolving their infrastructure to evade detection. One notable trend is the massive 19-fold increase in the use of the “.es” top-level domain for credential theft, making it one of the most abused domains globally. AI enables these polymorphic campaigns to constantly shift their appearance and infrastructure, making it nearly impossible for signature-based detection systems to keep up. This constant state of flux is the new standard, challenging security teams to defend against a threat that never looks the same way twice.

From the Front Lines: A Cybersecurity Firm's Alarming Findings

Recent analysis from leading cybersecurity firms confirms that AI is the definitive factor behind the dramatic escalation in phishing frequency and sophistication. Experts assert that these AI-driven advancements fundamentally challenge and weaken the effectiveness of legacy security systems. The ability of AI to generate novel, high-quality phishing lures at scale has effectively nullified many of the automated checks that organizations have relied on for years.

A critical observation from these frontline reports is that as automated defenses falter, the role of human intelligence has become more crucial than ever. While AI empowers attackers, it also creates subtle anomalies that automated systems, trained on past data, are not yet equipped to recognize. Consequently, the last line of defense is often an alert and well-trained employee who can spot the nuances of a sophisticated, AI-generated phishing attempt that a machine might miss. This underscores a growing gap between what technology can prevent and what a vigilant human can detect.

Rethinking Defense: Countering the AI-Powered Threat

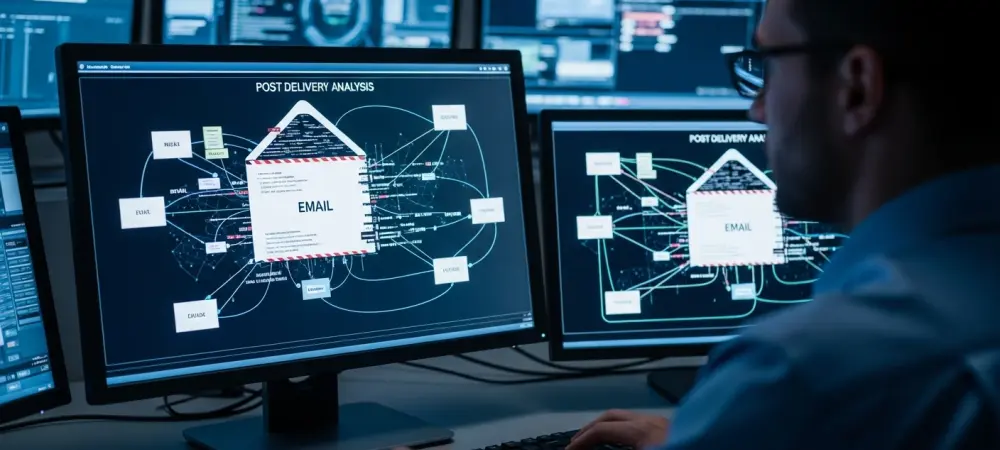

To effectively combat this evolving threat, organizations must shift their focus from prevention at the perimeter to robust post-delivery analysis. The reality is that sophisticated, AI-crafted emails will inevitably bypass initial filters and land in user inboxes. The new defensive posture therefore requires solutions that can analyze emails already delivered, scrutinizing them for behavioral and contextual clues that might indicate malicious intent, even in the absence of a known bad link or attachment.
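As a rough illustration of what checking for contextual clues might look like, the sketch below inspects an already-delivered message that contains no link or attachment. It is not a description of any vendor's product: the `DeliveredMessage` type, the three checks, and the list of urgency terms are all assumptions chosen for the example, and a real system would weigh far more signals.

```python
from dataclasses import dataclass
from email.utils import parseaddr

# Assumed, deliberately short list of phrases common in BEC-style requests.
URGENCY_TERMS = ("urgent", "immediately", "wire transfer", "gift card")

@dataclass
class DeliveredMessage:
    from_header: str
    reply_to: str
    body: str
    known_senders: frozenset  # addresses the recipient has corresponded with before

def domain(address_header: str) -> str:
    """Return the lowercased domain part of an address header."""
    _, addr = parseaddr(address_header)
    return addr.rpartition("@")[2].lower()

def contextual_flags(msg: DeliveredMessage) -> list[str]:
    """Collect simple contextual warning signs; no link or attachment is needed."""
    flags = []
    _, sender = parseaddr(msg.from_header)
    if msg.reply_to and domain(msg.reply_to) != domain(msg.from_header):
        flags.append("reply-to domain differs from sender domain")
    if sender.lower() not in msg.known_senders:
        flags.append("first-time sender")
    if any(term in msg.body.lower() for term in URGENCY_TERMS):
        flags.append("urgency or payment language")
    return flags

suspicious = DeliveredMessage(
    from_header='"CEO" <ceo@example.test>',
    reply_to="ceo@example-mail.test",
    body="Are you at your desk? I need a wire transfer handled immediately.",
    known_senders=frozenset({"cfo@example.test"}),
)
flags = contextual_flags(suspicious)
print(flags)
```

A message that raises several flags at once could be routed to an analyst or surfaced to the recipient with a warning, rather than being blocked outright.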

This paradigm shift places a renewed emphasis on empowering the human element of security. The most effective defense strategies combine advanced technological analysis with consistent human validation. Creating an educated and security-conscious workforce is not just a best practice but an irreplaceable component of modern cyber defense. Employees trained to identify and report suspicious messages act as a distributed sensor network, providing the critical intelligence needed to neutralize threats that technology alone can no longer stop.