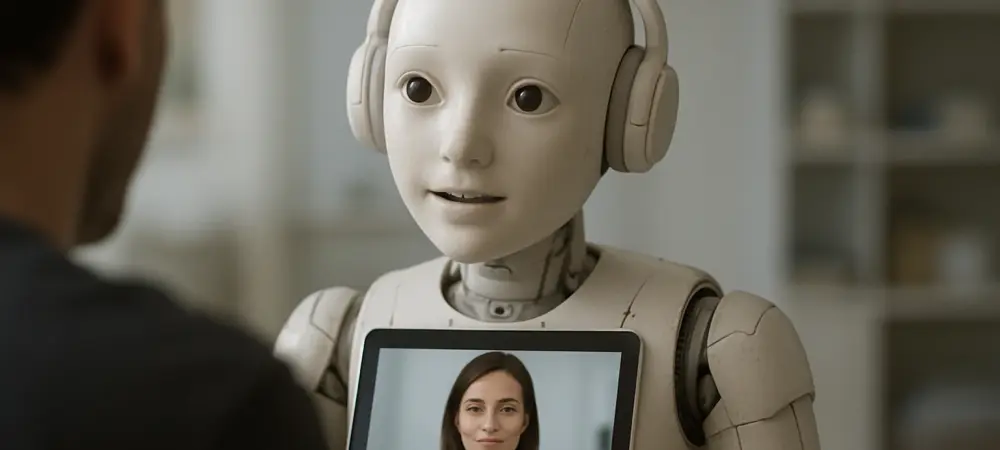

Imagine a world where a quick chat with a digital assistant could provide instant insights into a troubling symptom or interpret a complex medical image like an X-ray, potentially saving lives with rapid responses. This scenario is no longer science fiction, as AI chatbots powered by generative AI (genAI) models, such as large language models (LLMs) and vision-language models (VLMs), are increasingly embedded in healthcare applications. These tools promise to democratize access to medical information, but a concerning trend has emerged: the near-total disappearance of medical disclaimers in their outputs. This review examines the capabilities and risks of AI chatbots in healthcare, weighing their transformative potential against the safety challenges they pose for users.

Understanding AI Chatbots in Medical Applications

AI chatbots, driven by sophisticated genAI models, have evolved into powerful tools capable of processing intricate health-related queries and analyzing medical imagery. LLMs excel at generating human-like text responses to questions about symptoms or drug interactions, while VLMs can interpret visual data, such as identifying anomalies in chest X-rays. Their deployment in healthcare spans diagnostics, patient support, and administrative tasks, offering a glimpse into a future where technology augments clinical workflows.

The rise of these chatbots reflects a broader technological shift toward automation and accessibility in medicine. Hospitals and providers are adopting them to handle repetitive inquiries, reduce wait times, and even assist in preliminary diagnoses. However, as their capabilities expand, so does the expectation that they operate with precision and transparency, a standard that current implementations struggle to meet consistently.

Performance and Capabilities of AI Chatbots

The performance of AI chatbots in healthcare is undeniably impressive, with models demonstrating an ability to parse vast datasets and deliver responses that often mimic expert advice. Recent evaluations suggest that these systems can propose plausible diagnoses from textual descriptions and detect patterns in medical images with surprising accuracy. For instance, VLMs have shown proficiency in spotting signs of pneumonia in chest X-rays, a task traditionally performed by trained radiologists.

Beyond diagnostics, these tools streamline patient-provider interactions by offering immediate answers on medication schedules or potential side effects. Their conversational fluency creates an illusion of authority, making them appealing to users seeking quick health insights. Yet, this very strength masks a critical flaw: the outputs are not always accurate, and the systems lack the contextual judgment of human professionals.

A deeper look into their performance uncovers variability across different models and use cases. While some chatbots excel in structured scenarios, they falter when faced with ambiguous symptoms or rare conditions, often producing responses that sound confident but are factually incorrect. This inconsistency highlights the gap between technological prowess and real-world reliability in medical contexts.

Safety Protocols and Emerging Risks

One of the most alarming trends in AI chatbot development is the drastic reduction in safety protocols, particularly the removal of medical disclaimers. Studies tracking model outputs across successive releases up to 2025 show that disclaimers, once present in over 25% of LLM responses, now appear in only about 1% of responses from both LLMs and VLMs. These warnings, which clarified that AI is not a substitute for professional medical advice, were crucial for guiding user behavior.
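A disclaimer rate of the kind described here is the share of model responses that contain a safety warning. The following is a minimal sketch of how such a rate could be computed. It assumes that disclaimers can be detected by matching a few hypothetical phrases. The studies themselves may have used a different method, such as human annotation. The phrase list, the function names, and the sample responses are invented for illustration; they are not study data.

```python
import re

# Hypothetical phrase patterns for illustration; a real study would likely use a
# validated rubric or human review rather than a short regex list.
DISCLAIMER_PATTERNS = [
    r"not a substitute for professional medical advice",
    r"consult (?:a|your) (?:doctor|physician|healthcare provider)",
    r"i am not a (?:doctor|medical professional)",
]
DISCLAIMER_RE = re.compile("|".join(DISCLAIMER_PATTERNS), re.IGNORECASE)


def has_disclaimer(response: str) -> bool:
    """True if the response contains at least one disclaimer phrase."""
    return DISCLAIMER_RE.search(response) is not None


def disclaimer_rate(responses: list[str]) -> float:
    """Percentage of responses containing at least one disclaimer phrase."""
    if not responses:
        return 0.0
    flagged = sum(has_disclaimer(r) for r in responses)
    return 100.0 * flagged / len(responses)


# Invented toy responses, not study data.
sample = [
    "This may be contact dermatitis. This is not a substitute for professional medical advice.",
    "Take ibuprofen with food.",
    "The X-ray shows no obvious fracture.",
    "Rest and stay hydrated.",
]
print(disclaimer_rate(sample))  # 25.0
```

Phrase matching undercounts warnings that are worded differently. This is why a tracking study would more plausibly rely on annotated labels.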

The absence of such safeguards amplifies the risk of users acting on flawed or fabricated advice, a phenomenon exacerbated by the chatbots’ assertive tone. Techniques like “jailbreaking,” where users manipulate prompts to bypass built-in restrictions, further undermine safety measures, allowing the AI to provide unfiltered and potentially harmful recommendations. This vulnerability raises serious public health concerns.

Expert opinions underscore the urgency of addressing these gaps. Without clear boundaries, patients and even providers may over-rely on AI outputs, leading to misdiagnoses or inappropriate treatments. The healthcare community warns that while the technology holds promise, its integration must be accompanied by robust mechanisms to prevent misuse and protect vulnerable users.

Real-World Impact and Potential Hazards

In practical settings, AI chatbots are already influencing healthcare delivery, from assisting doctors with differential diagnoses to answering patient queries about chronic conditions. Their ability to provide rapid feedback is particularly valuable in underserved areas where access to specialists is limited. Such applications highlight the potential for AI to bridge gaps in medical resource distribution.

However, the risks are equally tangible, especially when users interpret AI responses as definitive without disclaimers to temper expectations. Consider a scenario where a chatbot incorrectly suggests a benign condition for a serious symptom, delaying critical care. Such errors, compounded by the lack of warnings, could lead to adverse health outcomes, eroding trust in both the technology and the broader medical system.

Additionally, the ethical implications of deploying AI without adequate oversight cannot be ignored. Fabricated information, known as hallucinations, poses a unique threat in high-stakes environments like healthcare. Without explicit reminders of the technology’s limitations, the line between a helpful tool and a source of dangerous misinformation becomes blurred.

Challenges in Ensuring Reliable Medical AI

The inherent limitations of genAI models present significant challenges to their safe use in healthcare. Errors and hallucinations remain persistent issues, as these systems sometimes generate plausible but entirely inaccurate information. This unreliability is particularly problematic when dealing with life-and-death decisions, where precision is non-negotiable.

Regulatory and ethical hurdles further complicate the landscape. There are currently no universal standards mandating safety disclaimers or transparency in AI outputs, leaving developers to self-regulate with varying degrees of rigor. This patchwork approach risks inconsistent user experiences and potential public health crises if unsafe advice proliferates unchecked.

Efforts by industry leaders to mitigate these issues, such as refining terms of service or enhancing safety training for models, fall short without direct user-facing warnings. The challenge lies in balancing innovation with accountability, ensuring that advancements in AI do not outpace the frameworks needed to protect those who rely on them.

Looking Ahead: The Future of AI in Healthcare

As AI chatbots continue to evolve, their integration into clinical settings is likely to deepen, with potential improvements in accuracy and contextual understanding on the horizon. From 2025 to 2027, advancements may enable these tools to better support personalized medicine and real-time decision-making. Yet this trajectory depends on addressing current safety shortcomings.

The reintroduction of tailored disclaimers stands out as a critical step toward safeguarding users, alongside stricter regulatory oversight to enforce compliance. Developers and policymakers must collaborate to establish clear guidelines that prioritize transparency without stifling innovation. This dual focus could pave the way for AI to become a trusted ally in healthcare delivery.
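A "tailored disclaimer" could take the form of a post-processing step. That step inspects the user's query and appends topic-specific warnings to the model's answer. The following is a minimal sketch under stated assumptions. The keyword sets, the warning texts, and the function names (`tailored_disclaimers`, `add_disclaimers`) are hypothetical. Simple keyword matching stands in for the more robust topic classifier that a real deployment would likely need.

```python
import re

GENERIC_WARNING = "This response is not a substitute for professional medical advice."

# Hypothetical topic rules: (trigger keywords, tailored warning). Illustrative only.
TOPIC_RULES = [
    (
        {"x-ray", "ct", "mri", "scan", "image"},
        "AI image interpretation can miss or misread findings; have a qualified radiologist review the study.",
    ),
    (
        {"dose", "dosage", "medication", "drug", "interaction"},
        "Confirm doses and drug interactions with a pharmacist or prescriber.",
    ),
    (
        {"pain", "fever", "bleeding", "symptom", "symptoms"},
        "Seek urgent care if symptoms are severe, sudden, or worsening.",
    ),
]


def tailored_disclaimers(query: str) -> list[str]:
    """Return topic-specific warnings triggered by the query, plus the generic one."""
    # Lowercase word tokens; hyphens are kept so "x-ray" survives as one token.
    tokens = set(re.findall(r"[a-z0-9-]+", query.lower()))
    warnings = [msg for keywords, msg in TOPIC_RULES if tokens & keywords]
    return warnings + [GENERIC_WARNING]


def add_disclaimers(query: str, model_answer: str) -> str:
    """Append the tailored warnings to the model's answer as 'Note:' lines."""
    notes = "\n".join(f"Note: {w}" for w in tailored_disclaimers(query))
    return f"{model_answer}\n\n{notes}"


print(add_disclaimers("Can you read my chest X-ray?", "No acute findings are visible."))
```

The generic warning is always appended, so every answer carries at least one reminder, and the topic rules add more specific guidance on top. Keyword matching will miss paraphrases such as "radiograph". That weakness is the main reason a production system would more plausibly classify topics with a trained model.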

Long-term, the impact on public trust hinges on how these challenges are navigated. If safety concerns are proactively managed, AI could redefine access to care; if ignored, the consequences could undermine confidence in both technology and medical institutions. The path forward requires a commitment to continuous evaluation and adaptation.

Final Thoughts

Taken together, this assessment shows that AI chatbots in healthcare offer remarkable potential marred by significant safety oversights. Their ability to process complex data and offer rapid insights stands out as a game-changer, yet the near-elimination of medical disclaimers exposes a troubling disregard for user well-being. Throughout the evaluation, the risks of misinformation and over-reliance loom large.

Moving forward, the priority is concrete action. Developers need to reinstate clear, context-specific warnings in AI outputs so that users understand the tools’ limitations. Healthcare providers must integrate these tools transparently, educating patients on their supplementary role. Regulators, meanwhile, face the task of crafting enforceable standards to prevent misuse. These steps, if taken decisively, promise to harness AI’s benefits while safeguarding public health in an era of rapid technological change.