As we look ahead to 2024, the data center technology sector is on the brink of a major evolution, with specialized processors increasingly taking center stage to manage complex AI tasks. This shift signals a move away from one-size-fits-all computing solutions toward chips designed for specific functions, highlighting the importance of co-processing units.

Developments in the chip market point to enhanced CPUs that work in tandem with these specialized processors to optimize performance for AI applications. Integrating these co-processors is not just a trend but a necessity, as demand for AI inferencing (running trained AI models on new data to produce predictions or decisions) keeps rising.

As artificial intelligence becomes more ingrained in technology, data centers must adapt. That means a greater focus on AI inferencing capabilities within processors. Analyzing data and making decisions at high speed requires not only powerful CPUs but also co-processors dedicated to these tasks, enabling faster and more efficient processing.

Projections for the chip market point in a consistent direction: continued emphasis on specialized co-processors tailored for AI workloads, alongside ongoing advances in CPU technology. These developments are set to redefine what data centers can do and to equip them for the growing demands of AI-driven applications.

Co-Processors: Enhancing Compute Capacity

The Rise of Specialized Co-Processing Units

The pursuit of greater computational efficiency and performance has led the data center industry to integrate specialized co-processors more widely. These auxiliary chips are built to execute specific tasks, such as AI operations, that would otherwise tax a general-purpose CPU. Their advantage lies in working alongside the CPU: they take on highly parallel workloads, which raises overall system throughput and efficiency.

Adoption of co-processors has risen significantly as businesses tackle larger, more complex datasets and more intricate computational workloads. This marks a shift in server design philosophy, from homogeneous processing units toward a heterogeneous architecture that can route each task to the most effective processing unit available.
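The idea of routing each task to the most effective unit can be illustrated with a toy dispatcher. This is a minimal sketch under stated assumptions, not a real scheduler: the workload labels, the unit names (`inference_asic`, `gpu`, `cpu`), and the `dispatch` function are all hypothetical, and it assumes every server has a host CPU to fall back on.

```python
# Hypothetical preference order per workload type: most specialized unit first,
# general-purpose CPU last. Labels are illustrative, not a real scheduler API.
PREFERENCES: dict[str, list[str]] = {
    "model_inference": ["inference_asic", "gpu", "cpu"],
    "model_training": ["gpu", "cpu"],
    "control_logic": ["cpu"],
}


def dispatch(workload: str, available: set[str]) -> str:
    """Pick the most specialized available unit for a workload.

    Unknown workload types default to the CPU.
    """
    for unit in PREFERENCES.get(workload, ["cpu"]):
        if unit in available:
            return unit
    # Assumption: the host CPU is always present as a last resort.
    return "cpu"


if __name__ == "__main__":
    server = {"cpu", "gpu"}  # hypothetical server with a GPU but no inference ASIC
    for job in ["model_inference", "model_training", "control_logic", "video_encode"]:
        print(job, "->", dispatch(job, server))
```

On this hypothetical server, inference falls back from the missing ASIC to the GPU, while control logic and unrecognized jobs stay on the CPU. Real heterogeneous schedulers also weigh queue depth, memory placement, and data-transfer cost, which this sketch ignores.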

Advances in GPU and ASIC Technology

Innovations in GPU and ASIC technology are leading the expansion of co-processors. Nvidia’s H100 and AMD’s MI300 GPUs are prominent examples, delivering large gains in AI training and inference performance. These GPUs take on work that would otherwise consume substantial CPU resources, freeing the CPUs for other duties and improving the overall efficiency of data centers.

Beyond GPUs, companies are building application-specific integrated circuits (ASICs) tailored to particular tasks. In AI and machine learning, these ASICs run targeted workloads more efficiently than general-purpose hardware, reducing the overall computational burden. Hyperscale cloud service providers, recognizing the potential of these chips, are investing heavily in their development, underscoring their commitment to data center efficiency.

The Changing Face of CPUs

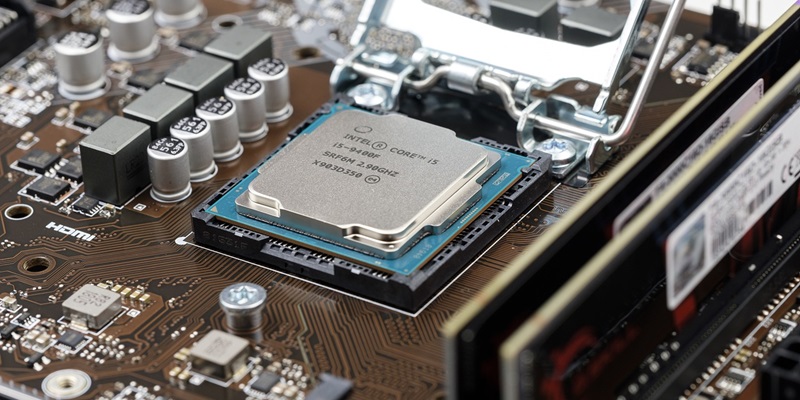

Intel Versus AMD: The Battle for Market Share

Intel’s dominance in the server chip market is now being seriously challenged by AMD. As AMD’s market share continues to grow on the strength of its competitive EPYC series, Intel is positioning its next-generation Xeon processors to defend and reclaim share. The competition has sparked a flurry of innovation, with both companies releasing CPUs that promise better performance and energy efficiency.

AMD’s upcoming fifth-generation EPYC CPU, codenamed Turin, is built on the new Zen 5 core architecture and promises significant gains in performance and energy efficiency. Intel, meanwhile, is not resting on its laurels, with Xeon launches of its own aimed at countering AMD’s offerings. This rivalry drives the server CPU market forward and yields a diverse range of powerful, efficient chips.

The Emergence of Arm-Based Processors

Arm-based processors are increasingly prominent, chiefly because of their power efficiency and high core counts. Google recently introduced Axion, its own Arm-based data center CPU, demonstrating the potential of Arm technology in hyperscale environments. When a company of Google’s scale builds its own Arm processor, it signals a broader industry shift.

With its promise of low energy consumption without sacrificing processing power, Arm is gaining market share, a trend the established CPU giants cannot overlook. As organizations weigh energy concerns against computational needs, Arm’s presence in enterprise and cloud data centers is expected to keep expanding.

The shift toward Arm is also propelled by the industry’s broader drive to reduce energy use, an aim consistent with growing environmental awareness and the need to control operating costs. As long as sustainability must be balanced against advanced computing needs, Arm’s position in data centers is likely to strengthen, challenging the status quo and opening new opportunities for innovation in processor design.

AI Inferencing: The Frontier of Chip Innovation

Transitioning from AI Training to Inferencing

As the market for AI training chips matures, attention is pivoting toward inferencing: running trained AI models on new data. AI hardware was initially dominated by Nvidia’s GPUs for training, but a market is now emerging for inferencing chips designed to apply AI models quickly and accurately in real-time scenarios.
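The split between training and inferencing can be made concrete with a deliberately tiny model. As a stand-in for a neural network (real AI models are trained very differently, typically with gradient descent on far larger data), this sketch fits a straight line by ordinary least squares; the functions `train` and `infer` and the sample data are hypothetical. Training is a one-off pass over historical data; inference reuses the learned parameters cheaply on each new input, which is the step inference-focused chips are built to accelerate.

```python
def train(xs: list[float], ys: list[float]) -> tuple[float, float]:
    """'Training': fit slope w and intercept b by ordinary least squares."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    w = cov / var
    b = mean_y - w * mean_x
    return w, b


def infer(model: tuple[float, float], x: float) -> float:
    """'Inference': apply already-trained parameters to a new input."""
    w, b = model
    return w * x + b


if __name__ == "__main__":
    model = train([0, 1, 2, 3], [1, 3, 5, 7])  # made-up "historical" data
    print(model)             # (2.0, 1.0)
    print(infer(model, 10))  # 21.0
```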

Major players such as Qualcomm, AWS, and Meta are leading this transition, pouring resources into bespoke inferencing processors. These chips are tailored to the speed and accuracy requirements of running AI models efficiently, so they are engineered to deliver high performance with low latency, which is critical for real-time AI tasks.

This shift toward specialized inferencing chips reflects the industry’s outlook on AI’s future. There is a growing consensus that for AI applications to be truly effective and integrated into our digital ecosystem, they will depend on chips designed specifically for inferencing. The trend points to a new chapter in silicon innovation, one focused on the practical, day-to-day utility of artificial intelligence, in which AI’s potential is realized in real-world applications through inference-focused hardware.

Hardware Customization for AI Workloads

The drive toward bespoke AI hardware has become crucial, reflecting the reality that general-purpose computing no longer satisfies the demands of diverse AI tasks. Chipmakers are developing processors that are not only robust but also fine-tuned for particular types of AI workloads.

Such specialized chips help balance power efficiency against cost while delivering better performance. They keep pace with increasingly complex AI functions and let operators scale capacity precisely for the workloads that need it. This shift to workload-specific AI hardware is part of a larger trend toward tailored computing solutions throughout the tech sector.

Chips designed for specific AI tasks allow a high degree of optimization. This strategy helps manage the escalating demands placed on computing infrastructure as AI applications proliferate and evolve. With this more targeted approach, chipmakers are helping users unlock the full potential of AI technologies.

This move toward custom hardware solutions reflects an industry-wide acknowledgment that the future of AI computing is not in a one-size-fits-all approach but in a mosaic of specialized tools adept at handling distinct challenges. As the AI landscape continues to expand, this tailored approach is likely to become ever more prevalent, with hardware that closely aligns with the unique requirements of various AI workloads.

The Financial Trajectory of Server and Chip Sales

Server Revenue Growth and Market Dynamics

The outlook for server revenue is strongly positive, with projections that it could more than double by 2028. This expansion is not just a matter of higher sales volume; it reflects an evolution in server technology, with each system growing significantly in complexity and value.
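The doubling projection can be restated as a minimum compound annual growth rate. The text does not give a baseline year, so the baseline is an assumption here, as are the symbols: R₀ for baseline-year revenue, n for the number of years to 2028, and g for the annual growth rate.

```latex
\begin{aligned}
R_{2028} &= R_0\,(1+g)^{n} \ge 2R_0 \\
\Longrightarrow\quad g &\ge 2^{1/n} - 1 \\
n = 5 \text{ (assumed 2023 baseline)}:\quad g &\ge 2^{1/5} - 1 \approx 0.149 \\
n = 4 \text{ (assumed 2024 baseline)}:\quad g &\ge 2^{1/4} - 1 \approx 0.189
\end{aligned}
```

In other words, "more than double by 2028" implies sustained revenue growth of roughly 15% to 19% per year, depending on which baseline year the projection uses.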

The chip industry, acting as a catalyst for innovation, is positioning itself at the forefront of this surge by developing advanced solutions for increasingly demanding data center workloads. The market is maturing toward one that prioritizes high performance and energy efficiency over sheer volume. As servers grow more sophisticated and demand keeps rising, data centers are likely to gravitate toward cutting-edge, higher-value systems. This shift is key to understanding the tech sector’s trajectory, in which the combination of performance and cost-effectiveness is becoming the cornerstone of both technical progress and economic gains.

Price Scales with Sophistication

Server market dynamics are shifting, as a stark trend shows: unit shipments are up only slightly, yet server prices are climbing significantly. The price increase directly reflects technological evolution in the server space. Advanced servers now include state-of-the-art processors and are designed to support new computing paradigms, both of which raise their value. The industry is clearly prioritizing performance, with the latest generation of chips at the heart of this push.
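Because server revenue is units shipped times average selling price (ASP), flat shipments and rising prices still compound into strong revenue growth. This minimal sketch makes that arithmetic explicit; the function names and the input figures (units up 2%, ASP up 30%) are hypothetical and are not market data.

```python
import math


def revenue_growth(unit_growth: float, asp_growth: float) -> float:
    """Fractional revenue growth when revenue = units shipped * ASP."""
    # Growth factors multiply because revenue is a product of the two terms.
    return (1 + unit_growth) * (1 + asp_growth) - 1


def asp_share_of_growth(unit_growth: float, asp_growth: float) -> float:
    """Fraction of log revenue growth attributable to ASP rather than volume."""
    # ln(1 + g_rev) = ln(1 + g_units) + ln(1 + g_asp), so the log terms add exactly.
    units_term = math.log1p(unit_growth)
    asp_term = math.log1p(asp_growth)
    total = units_term + asp_term
    if total == 0:
        return 0.0  # no growth at all, so nothing to attribute
    return asp_term / total


if __name__ == "__main__":
    # Hypothetical inputs: units up 2%, ASP up 30%.
    print(f"revenue growth: {revenue_growth(0.02, 0.30):.1%}")
    print(f"share from ASP: {asp_share_of_growth(0.02, 0.30):.0%}")
```

With these illustrative inputs, revenue grows about 32.6%, and roughly 93% of that growth (measured in log terms) comes from higher prices rather than more units. The log decomposition is used because it splits the total cleanly into two additive parts.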

Chipmakers are at the forefront of this shift, producing an array of sophisticated products to meet growing demand for powerful, efficient servers. Customers are increasingly willing to invest in these servers, signaling that they recognize tangible benefits in performance and computational efficiency. This is more than a simple market fluctuation; it highlights an industry-wide move toward more advanced computing solutions that meet the evolving requirements of modern technology infrastructure.

Strategic Directions and Innovations in the Chip Market

Diversification of Chip Architectures

Companies are increasingly taking a multifaceted approach to chip design, with product ranges evolving to cater to specific industry needs. CPUs are being enhanced to handle a broad spectrum of tasks, while GPUs are sharpening their focus on compute-intensive work, particularly AI. Meanwhile, custom chips are on the rise as businesses tailor silicon to power their own cloud services.

Crafting hardware for distinct purposes marks a significant shift in the semiconductor industry. Manufacturers increasingly treat specialized hardware as essential, not merely beneficial, for standing out amid intense competition. It also lets them meet the particular demands of today’s data centers more precisely. By segmenting their product lines and developing chips with specific capabilities, silicon companies are positioning themselves to serve the many computational tasks of contemporary technology infrastructure.

Novel Processors and the Competitive Landscape

The 2024 processor roadmap points to a competitive arena led by new CPUs from major vendors. AMD is making strides with its upcoming Turin processors, while Intel is close behind with its Xeon 6 series. Arm, meanwhile, is gaining significant ground, notably as the foundation of Google’s Axion processor.

The result is a diverse industry driven by a relentless push for better server performance. Cloud giants such as AWS are adding to the competition with their own specialized CPUs and AI chips. Each round of silicon innovation pushes the boundaries of what data centers can achieve, keeping the chip market moving forward. As 2024 approaches, the processor domain is set for an era of rapid evolution, fueled by technological breakthroughs and a contest for leadership in efficiency and computational power.