Today, we’re thrilled to sit down with Ling-Yi Tsai, a seasoned HRTech expert with decades of experience helping organizations navigate transformative change through technology. Specializing in HR analytics and the seamless integration of tech across recruitment, onboarding, and talent management, Ling-Yi has a unique perspective on the growing challenge of AI-driven hiring fraud. In this interview, we dive into the evolving landscape of fraudulent job applications, explore strategies for spotting AI-generated fakes, and discuss how companies can protect themselves in an era of sophisticated deception.

How has the rise of AI-generated fake job applicants changed the hiring landscape, and what trends have you noticed in this space?

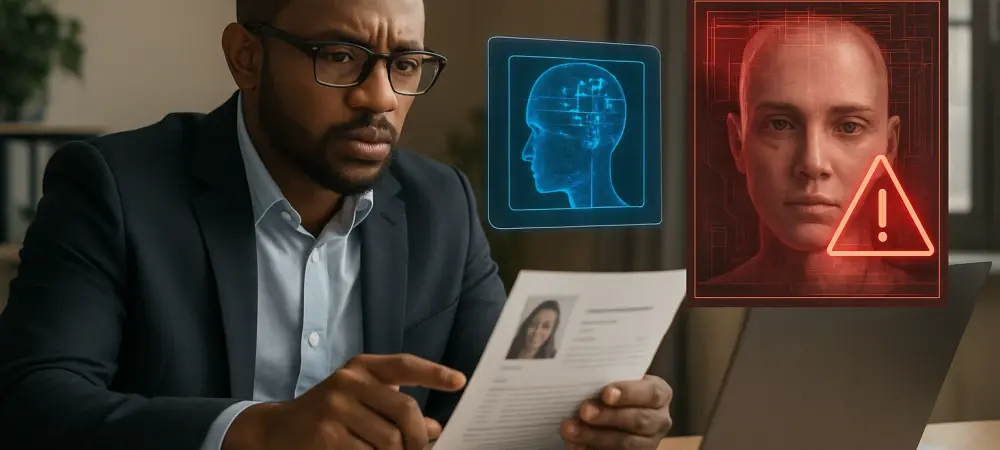

The rise of AI-generated fake applicants has turned hiring into a bit of a battlefield. We’re not just dealing with padded résumés anymore; malicious actors are using generative AI, deepfakes, and stolen data to create incredibly convincing personas. I’ve seen trends like coordinated schemes targeting remote-first companies, where fraudsters impersonate candidates through synthetic video interviews or cloned voices. It’s particularly alarming because these efforts often go beyond just landing a job—they’re about infiltrating organizations for data theft or espionage. The remote hiring boom has only amplified this, as it’s harder to verify someone’s authenticity without face-to-face interaction.

Why do you think certain industries, like cybersecurity and tech, seem to be more at risk from fraudulent applicants?

These industries are prime targets because of what’s at stake—intellectual property, sensitive data, and network access are incredibly valuable to bad actors. Cybersecurity and tech firms, especially those with remote teams, often hold the keys to critical infrastructure or proprietary innovations. I’ve noticed that fraudsters are drawn to these sectors because even a short stint as an insider can yield massive payoffs, whether through installing malware or extracting trade secrets. The high demand for talent also means hiring happens fast, sometimes skipping thorough vetting, which creates an opening for deception.

During a video interview, what clues might suggest a candidate is using deepfake technology, and how can HR teams spot them?

In video interviews, deepfakes often reveal themselves through subtle inconsistencies. I look for things like lip movements that don’t match the spoken words, unnatural blinking patterns, or odd head tilts that don’t look like natural human movement. Sometimes the lighting or background glitches in ways that don’t make sense. I’ve advised teams to pay close attention to emotional cues: if a candidate’s facial expressions don’t match the tone of their answers, that’s a red flag. Beyond intuition, tools like liveness detection software or biometric verification can help flag synthetic avatars. It’s about combining human observation with tech to catch these fakes in real time.

How valuable is a candidate’s digital footprint in verifying their authenticity, and what do you focus on when reviewing it?

A candidate’s digital footprint is incredibly valuable; it’s often the first line of defense against fraud. I focus on platforms like LinkedIn for professional history, connections, and activity, and for developers I also check niche spaces like GitHub to see their project contributions. Genuine candidates usually leave a trail, whether it’s conference mentions, endorsements, or interactions with peers. If someone claims a decade of experience but their profile is brand new or lacks depth, that’s a warning sign. For those with little online presence, I dig deeper through references or direct conversations to ensure their story holds up.

What techniques do you use in behavioral interviews to uncover potential inconsistencies in a candidate’s background?

Behavioral interviews are a goldmine for spotting fraud because they force candidates to get specific. I ask detailed questions about past projects—like challenges they faced, team dynamics, or specific outcomes—and listen for vague or generic answers. I might say, “Walk me through a complex problem you solved and who you collaborated with.” If they can’t name specifics or their story feels rehearsed, I probe further. Red flags include dodging details or inconsistencies in timelines. It’s about creating a conversation where a fake can’t rely on scripted responses.

How do you approach identity verification early in the hiring process to prevent fraud before it escalates?

Early identity verification is critical to stop fraud in its tracks. I advocate for digital credentialing right at the pre-screening stage, using tools like biometric liveness testing and document verification services. These can cross-check IDs against databases to catch stolen or forged credentials before a candidate even gets to an interview. At the same time, it’s important to keep the process smooth for legitimate applicants, and clear communication about why these steps are necessary helps. I’ve seen companies integrate facial recognition that matches the photo on submitted documents against a live capture of the candidate, which adds a strong layer of security without much friction.

Why is standardizing the application process so important in deterring fraudulent submissions, and how do you make it effective?

Standardizing the application process creates hurdles that fake applicants struggle to clear. When you require detailed, verifiable information—like specific project descriptions, past colleagues, or certifications—it’s harder for fraudsters to mass-submit generic profiles. I’ve worked with teams to design forms that ask for granular data and use applicant tracking systems to spot patterns like duplicate IPs or inconsistent timelines. The goal isn’t to complicate things for real candidates but to build in checkpoints that expose fakes. Technology plays a huge role here, flagging anomalies before HR even reviews the application.
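To make the pattern-spotting idea concrete, here is a minimal Python sketch of the two checks mentioned above: applications sharing an IP address and employment timelines that overlap. It is an illustration under stated assumptions, not how any particular applicant tracking system works; the record layout and the names `duplicate_ips`, `overlapping_jobs`, and `flag_applications` are hypothetical. Both signals can have innocent explanations (a shared household network, a part-time role held alongside a full-time one), so the output is a list of items for human review rather than a verdict.

```python
from collections import defaultdict
from datetime import date

# Hypothetical application records. The field names ("id", "ip", "jobs")
# are assumptions for illustration, not the schema of any real ATS.
APPLICATIONS = [
    {"id": "A1", "ip": "203.0.113.7",
     "jobs": [(date(2015, 1, 1), date(2019, 6, 30)),
              (date(2019, 7, 1), date(2024, 1, 31))]},
    {"id": "A2", "ip": "203.0.113.7",
     "jobs": [(date(2016, 1, 1), date(2020, 12, 31)),
              (date(2019, 3, 1), date(2023, 5, 31))]},
    {"id": "A3", "ip": "198.51.100.4",
     "jobs": [(date(2018, 2, 1), date(2022, 8, 31))]},
]


def duplicate_ips(apps, threshold=2):
    """Map each IP used by at least `threshold` applications to their ids."""
    by_ip = defaultdict(list)
    for app in apps:
        by_ip[app["ip"]].append(app["id"])
    return {ip: ids for ip, ids in by_ip.items() if len(ids) >= threshold}


def overlapping_jobs(jobs):
    """Return adjacent (by start date) job pairs whose date ranges overlap."""
    ordered = sorted(jobs)
    return [(prev, cur)
            for prev, cur in zip(ordered, ordered[1:])
            if cur[0] <= prev[1]]


def flag_applications(apps):
    """Collect review reasons per application id; unflagged ids are omitted."""
    flags = defaultdict(list)
    for ids in duplicate_ips(apps).values():
        for app_id in ids:
            flags[app_id].append("shared_ip")
    for app in apps:
        if overlapping_jobs(app["jobs"]):
            flags[app["id"]].append("overlapping_jobs")
    return dict(flags)


if __name__ == "__main__":
    for app_id, reasons in flag_applications(APPLICATIONS).items():
        print(app_id, reasons)
```

With the sample data, A1 and A2 are flagged for sharing an IP, A2 is additionally flagged for overlapping job dates, and A3 passes untouched.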

What role do skills assessments play in validating a candidate’s legitimacy, and how do you ensure their integrity?

Skills assessments are a fantastic way to separate the real from the fake because they test actual ability, not just claims on a résumé. I design assessments to be role-specific (think coding challenges for developers or case studies for analysts) and ideally conduct them in real time or with recorded sessions. To prevent cheating, I’ve used tools that analyze keystroke patterns or browser behavior to detect automation or impersonation. It’s also helpful to follow up with a discussion about their test results during the interview to see if they can explain their approach. Pairing the assessment with that follow-up conversation really helps confirm legitimacy.
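As a rough illustration of what keystroke-pattern analysis can mean in practice, the Python sketch below looks at the gaps between key presses during an assessment. Human typing tends to be irregular, so near-constant gaps hint at scripted input, and a run of near-zero gaps hints at pasted or injected text. The function name `keystroke_flags` and every threshold are assumptions chosen for the example; a real proctoring tool would calibrate against actual data and use far richer signals.

```python
from statistics import pstdev


def keystroke_flags(timestamps_ms, min_std_ms=15.0, paste_gap_ms=5.0,
                    burst_ratio=0.5):
    """Return heuristic flags for a list of key-press timestamps (ms).

    All thresholds are illustrative assumptions, not calibrated values.
    """
    # Gaps between consecutive key presses.
    intervals = [b - a for a, b in zip(timestamps_ms, timestamps_ms[1:])]
    if len(intervals) < 5:
        return ["too_few_keystrokes"]

    flags = []
    # Very low spread in the gaps suggests machine-paced typing.
    if pstdev(intervals) < min_std_ms:
        flags.append("uniform_timing")
    # Many near-zero gaps suggest pasted or injected text.
    fast = sum(1 for gap in intervals if gap < paste_gap_ms)
    if fast / len(intervals) > burst_ratio:
        flags.append("burst_input")
    return flags


if __name__ == "__main__":
    human = [0, 120, 310, 390, 640, 700, 980, 1100]
    scripted = [0, 50, 100, 150, 200, 250, 300]
    pasted = [0, 1, 2, 3, 4, 5, 6]
    for name, sample in [("human", human), ("scripted", scripted),
                         ("pasted", pasted)]:
        print(name, keystroke_flags(sample))
```

A flag here is only a prompt for the follow-up conversation described above, since fast or very steady typists can trip simple thresholds like these.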

What is your forecast for the future of AI-driven hiring fraud and the strategies companies will need to adopt?

I see AI-driven hiring fraud becoming even more sophisticated in the coming years as generative AI tools improve. Fraudsters will likely create deeper, more consistent digital personas, making detection harder. On the flip side, I expect companies to lean heavily into advanced verification tech, such as AI-powered deepfake detection, blockchain-based credentialing, and real-time behavioral analytics. But tech alone won’t cut it; organizations will need to foster a culture of vigilance, with HR and IT working hand in hand. Training teams to spot red flags and embedding security into every hiring step will be non-negotiable. The arms race between deception and detection is only going to heat up, and adaptability will be key.