What if the ideal candidate on your shortlist turns out to be a complete fabrication, crafted by artificial intelligence with flawless credentials and a convincing persona? In 2025, this scenario is no longer a distant possibility but a pressing concern for hiring managers across industries. As AI tools become increasingly sophisticated, they are being weaponized to create synthetic identities that slip through traditional screening nets, posing risks to organizational integrity and safety. This feature delves into the hidden dangers of AI-generated hiring fraud, uncovering its implications and offering actionable strategies to protect companies from this evolving threat.

The Silent Infiltration of Synthetic Candidates

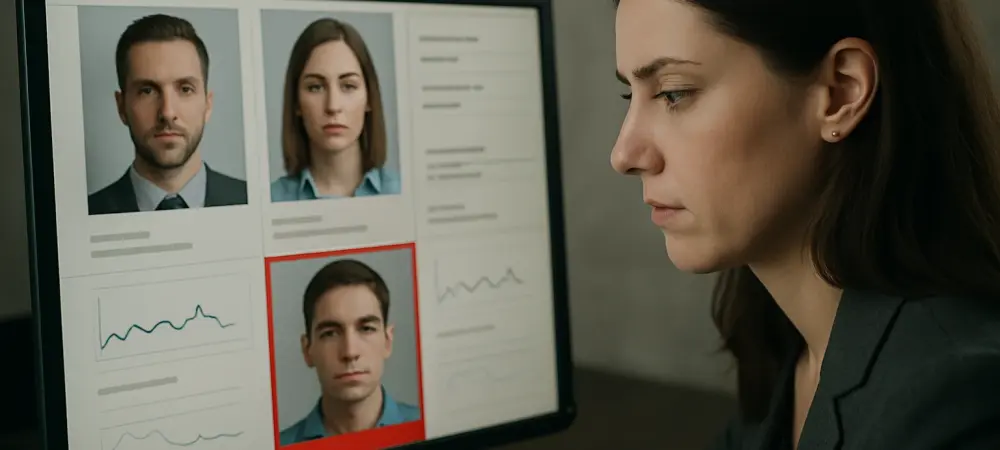

In a world where remote hiring has become the norm, distinguishing a real candidate from an AI-generated imposter is a daunting challenge. Fraudsters leverage cutting-edge technology to produce resumes that seem impeccable, complete with fabricated work histories and degrees from prestigious universities. Even video interviews, once considered a reliable way to assess authenticity, can now be faked using deepfake technology, leaving recruiters vulnerable to deception.

The scale of this issue is staggering. Recent studies estimate that up to 15% of job applications in high-risk sectors like healthcare and finance may contain AI-generated elements, often undetected until significant harm occurs. Companies face not just financial losses but also reputational damage when fraudulent hires are exposed, making it imperative to recognize this silent infiltration before it spirals out of control.

Why This Threat Demands Immediate Attention

The accessibility of AI tools has transformed the hiring landscape, empowering candidates to refine applications with unprecedented ease. However, this same accessibility allows malicious actors to exploit the technology, crafting synthetic candidates who appear legitimate at every stage of the process. In regulated industries, the consequences of hiring unqualified individuals can be catastrophic: consider patient safety risks in healthcare or compliance breaches in finance.

Beyond immediate damages, this trend erodes trust within organizations and across sectors. When fraudulent hires gain access to sensitive data or critical roles, the ripple effects can undermine operational stability and stakeholder confidence. With traditional background checks proving inadequate against AI-driven deception, the urgency to adapt screening methods has never been greater.

Peeling Back the Layers of AI-Driven Deception

AI-generated hiring fraud takes on multiple deceptive forms, each presenting distinct challenges for employers. Synthetic identities combine real and fake data to create candidates who seem verifiable, often listing credentials that appear authentic on paper. Meanwhile, deepfake technology enables fraudsters to impersonate individuals during virtual interviews, making detection nearly impossible without specialized tools.

Credential forgery adds another layer of complexity, as AI can produce fake diplomas and certifications that fool even experienced recruiters at first glance. Industry-specific risks amplify the danger: healthcare organizations risk patient harm, financial firms face regulatory penalties, and tech companies confront potential intellectual property theft. These varied threats highlight the need for comprehensive defenses tailored to each facet of fraud.

Expert Warnings on the Rising Tide of Fraud

Industry leaders are increasingly vocal about the dangers of AI in hiring. A prominent cybersecurity expert recently cautioned, “The sophistication of these tools means fraudulent candidates can bypass initial screenings with alarming ease.” This sentiment is backed by data showing a sharp rise in undetected AI-generated applications, particularly in sectors with high-stakes roles.

Hiring managers share chilling anecdotes that bring the issue to life. One tech firm narrowly avoided onboarding a candidate whose entire background was artificially constructed, only catching the fraud through a last-minute manual verification. Such close calls underscore the inadequacy of relying solely on outdated processes and emphasize the critical need for modern solutions paired with human vigilance.

Strategies to Fortify Hiring Defenses

Combating AI-generated hiring fraud demands a multi-pronged approach that blends technology with human insight. Direct-source validation stands as a cornerstone: verifying credentials straight from issuing institutions or licensing bodies ensures authenticity beyond potentially forged documents. Real-time data checks using automated tools can also flag discrepancies swiftly, such as inconsistent employment timelines or revoked certifications.

Continuous credential monitoring post-hire adds another layer of protection, catching issues that may emerge after onboarding. AI-powered detection systems help identify patterns of fraud, like overly polished resumes that might signal artificial creation, while trained screening teams provide essential oversight to spot contextual red flags algorithms might miss. Tailoring these strategies to industry-specific risks, with stricter protocols for healthcare and finance, for instance, ensures a hiring process that prioritizes integrity without hindering efficiency.
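To make the idea of automated discrepancy checks concrete, here is a minimal Python sketch of the two examples named above: inconsistent employment timelines and revoked certifications. It is an illustration under stated assumptions, not a description of any real screening product. The `Job` and `Credential` records, the `status` values, and the 180-day gap threshold are all hypothetical, and the sketch assumes the credential status has already been obtained directly from the issuing body.

```python
from dataclasses import dataclass
from datetime import date
from typing import List, Optional


@dataclass(frozen=True)
class Job:
    # Hypothetical record of one claimed role on a resume.
    employer: str
    start: date
    end: Optional[date]  # None means the candidate lists it as current


@dataclass(frozen=True)
class Credential:
    # Hypothetical record; status is assumed to come from the issuing body.
    name: str
    status: str  # e.g. "active", "revoked", "expired"


def timeline_flags(jobs: List[Job], today: date, max_gap_days: int = 180) -> List[str]:
    """Return human-readable flags for a claimed employment history."""
    flags = []
    ordered = sorted(jobs, key=lambda j: j.start)

    # Per-role sanity checks.
    for job in ordered:
        end = job.end if job.end is not None else today
        if job.start > today:
            flags.append(f"{job.employer}: start date is in the future")
        elif end < job.start:
            flags.append(f"{job.employer}: end date precedes start date")

    # Compare each role with the one that follows it chronologically.
    for prev, cur in zip(ordered, ordered[1:]):
        prev_end = prev.end if prev.end is not None else today
        if cur.start < prev_end:
            flags.append(f"{prev.employer} / {cur.employer}: overlapping roles")
        elif (cur.start - prev_end).days > max_gap_days:
            gap = (cur.start - prev_end).days
            flags.append(f"{prev.employer} -> {cur.employer}: gap of {gap} days")
    return flags


def credential_flags(credentials: List[Credential]) -> List[str]:
    """Flag any credential whose reported status is not active."""
    return [
        f"{c.name}: status is {c.status}"
        for c in credentials
        if c.status.strip().lower() != "active"
    ]
```

A flag from a check like this is a prompt for human review rather than proof of fraud: overlapping roles and career gaps often have legitimate explanations, which is why the screening team remains part of the process.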

Reflecting on a Path Forward

The battle against AI-generated hiring fraud has revealed a critical vulnerability in modern recruitment practices, one that demands urgent action. Companies that take proactive steps to implement layered verification, from direct-source checks to continuous monitoring, position themselves as leaders in safeguarding organizational trust. Those that integrate AI detection tools alongside human judgment are better placed to stay ahead of sophisticated deception.

Moving forward, the focus must shift toward building adaptable systems capable of evolving with AI advancements. Collaboration across industries to share best practices and develop standardized defenses should become a priority, so that no sector remains an easy target. By committing to these measures, organizations not only protect their hiring pipelines but also set a precedent for resilience in an era of relentless technological change.